@@ -45,7 +46,7 @@ image = pipeline(prompt).images[0]

## Textual inversion

-[Textual inversion](https://textual-inversion.github.io/) is very similar to DreamBooth and it can also personalize a diffusion model to generate certain concepts (styles, objects) from just a few images. This method works by training and finding new embeddings that represent the images you provide with a special word in the prompt. As a result, the diffusion model weights stays the same and the training process produces a relatively tiny (a few KBs) file.

+[Textual inversion](https://textual-inversion.github.io/) is very similar to DreamBooth and it can also personalize a diffusion model to generate certain concepts (styles, objects) from just a few images. This method works by training and finding new embeddings that represent the images you provide with a special word in the prompt. As a result, the diffusion model weights stay the same and the training process produces a relatively tiny (a few KBs) file.

Because textual inversion creates embeddings, it cannot be used on its own like DreamBooth and requires another model.

@@ -62,13 +63,14 @@ Now you can load the textual inversion embeddings with the [`~loaders.TextualInv

pipeline.load_textual_inversion("sd-concepts-library/gta5-artwork")

prompt = "A cute brown bear eating a slice of pizza, stunning color scheme, masterpiece, illustration, style"

image = pipeline(prompt).images[0]

+image

```

-Textual inversion can also be trained on undesirable things to create *negative embeddings* to discourage a model from generating images with those undesirable things like blurry images or extra fingers on a hand. This can be a easy way to quickly improve your prompt. You'll also load the embeddings with [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`], but this time, you'll need two more parameters:

+Textual inversion can also be trained on undesirable things to create *negative embeddings* to discourage a model from generating images with those undesirable things like blurry images or extra fingers on a hand. This can be an easy way to quickly improve your prompt. You'll also load the embeddings with [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`], but this time, you'll need two more parameters:

- `weight_name`: specifies the weight file to load if the file was saved in the 🤗 Diffusers format with a specific name or if the file is stored in the A1111 format

- `token`: specifies the special word to use in the prompt to trigger the embeddings

@@ -88,6 +90,7 @@ prompt = "A cute brown bear eating a slice of pizza, stunning color scheme, mast

negative_prompt = "EasyNegative"

image = pipeline(prompt, negative_prompt=negative_prompt, num_inference_steps=50).images[0]

+image

```

-Textual inversion can also be trained on undesirable things to create *negative embeddings* to discourage a model from generating images with those undesirable things like blurry images or extra fingers on a hand. This can be a easy way to quickly improve your prompt. You'll also load the embeddings with [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`], but this time, you'll need two more parameters:

+Textual inversion can also be trained on undesirable things to create *negative embeddings* to discourage a model from generating images with those undesirable things like blurry images or extra fingers on a hand. This can be an easy way to quickly improve your prompt. You'll also load the embeddings with [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`], but this time, you'll need two more parameters:

- `weight_name`: specifies the weight file to load if the file was saved in the 🤗 Diffusers format with a specific name or if the file is stored in the A1111 format

- `token`: specifies the special word to use in the prompt to trigger the embeddings

@@ -88,6 +90,7 @@ prompt = "A cute brown bear eating a slice of pizza, stunning color scheme, mast

negative_prompt = "EasyNegative"

image = pipeline(prompt, negative_prompt=negative_prompt, num_inference_steps=50).images[0]

+image

```

@@ -119,6 +122,7 @@ Then use the [`~loaders.LoraLoaderMixin.load_lora_weights`] method to load the [

pipeline.load_lora_weights("ostris/super-cereal-sdxl-lora", weight_name="cereal_box_sdxl_v1.safetensors")

prompt = "bears, pizza bites"

image = pipeline(prompt).images[0]

+image

```

@@ -142,6 +146,7 @@ pipeline.unet.load_attn_procs("jbilcke-hf/sdxl-cinematic-1", weight_name="pytorc

# use cnmt in the prompt to trigger the LoRA

prompt = "A cute cnmt eating a slice of pizza, stunning color scheme, masterpiece, illustration"

image = pipeline(prompt).images[0]

+image

```

@@ -184,7 +189,7 @@ pipeline = StableDiffusionXLPipeline.from_pretrained(

).to("cuda")

```

-Then load the LoRA checkpoint and fuse it with the original weights. The `lora_scale` parameter controls how much to scale the output by with the LoRA weights. It is important to make the `lora_scale` adjustments in the [`~loaders.LoraLoaderMixin.fuse_lora`] method because it won't work if you try to pass `scale` to the `cross_attention_kwargs` in the pipeline.

+Next, load the LoRA checkpoint and fuse it with the original weights. The `lora_scale` parameter controls how much to scale the output by with the LoRA weights. It is important to make the `lora_scale` adjustments in the [`~loaders.LoraLoaderMixin.fuse_lora`] method because it won't work if you try to pass `scale` to the `cross_attention_kwargs` in the pipeline.

If you need to reset the original model weights for any reason (use a different `lora_scale`), you should use the [`~loaders.LoraLoaderMixin.unfuse_lora`] method.

@@ -205,7 +210,7 @@ pipeline.fuse_lora(lora_scale=0.7)

-You can't unfuse multiple LoRA checkpoints so if you need to reset the model to its original weights, you'll need to reload it.

+You can't unfuse multiple LoRA checkpoints, so if you need to reset the model to its original weights, you'll need to reload it.

@@ -214,13 +219,14 @@ Now you can generate an image that uses the weights from both LoRAs:

```py

prompt = "A cute brown bear eating a slice of pizza, stunning color scheme, masterpiece, illustration"

image = pipeline(prompt).images[0]

+image

```

### 🤗 PEFT

-Read the [Inference with 🤗 PEFT](../tutorials/using_peft_for_inference) tutorial to learn more its integration with 🤗 Diffusers and how you can easily work with and juggle multiple adapters.

+Read the [Inference with 🤗 PEFT](../tutorials/using_peft_for_inference) tutorial to learn more about its integration with 🤗 Diffusers and how you can easily work with and juggle multiple adapters. You'll need to install 🤗 Diffusers and PEFT from source to run the example in this section.

@@ -241,11 +247,12 @@ Now use the [`~loaders.UNet2DConditionLoadersMixin.set_adapters`] to activate bo

pipeline.set_adapters(["ikea", "cereal"], adapter_weights=[0.7, 0.5])

```

-Then generate an image:

+Then, generate an image:

```py

prompt = "A cute brown bear eating a slice of pizza, stunning color scheme, masterpiece, illustration"

image = pipeline(prompt, num_inference_steps=30, cross_attention_kwargs={"scale": 1.0}).images[0]

+image

```

### Kohya and TheLastBen

@@ -254,7 +261,7 @@ Other popular LoRA trainers from the community include those by [Kohya](https://

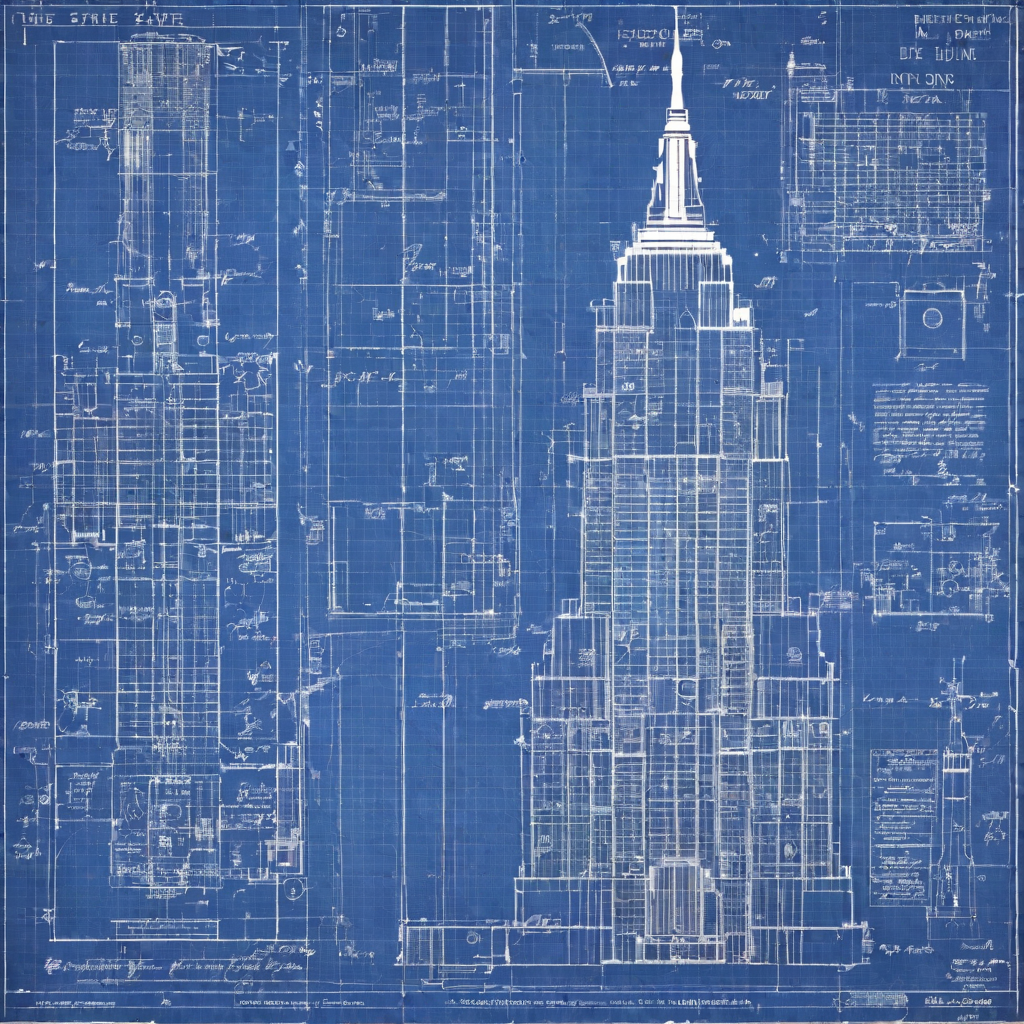

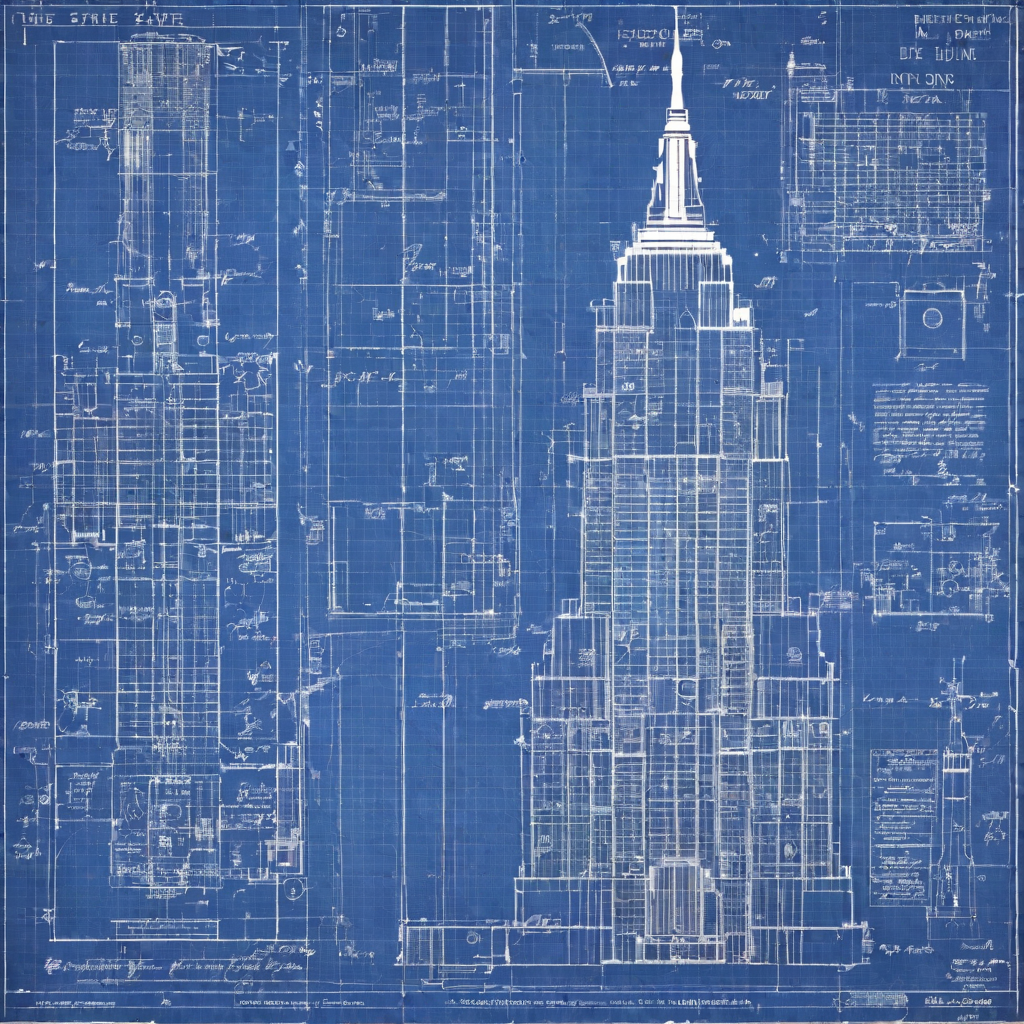

Let's download the [Blueprintify SD XL 1.0](https://civitai.com/models/150986/blueprintify-sd-xl-10) checkpoint from [Civitai](https://civitai.com/):

-```py

+```sh

!wget https://civitai.com/api/download/models/168776 -O blueprintify-sd-xl-10.safetensors

```

@@ -264,7 +271,7 @@ Load the LoRA checkpoint with the [`~loaders.LoraLoaderMixin.load_lora_weights`]

from diffusers import AutoPipelineForText2Image

import torch

-pipeline = AutoPipelineForText2Image.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0").to("cuda")

+pipeline = AutoPipelineForText2Image.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16).to("cuda")

pipeline.load_lora_weights("path/to/weights", weight_name="blueprintify-sd-xl-10.safetensors")

```

@@ -274,13 +281,14 @@ Generate an image:

# use bl3uprint in the prompt to trigger the LoRA

prompt = "bl3uprint, a highly detailed blueprint of the eiffel tower, explaining how to build all parts, many txt, blueprint grid backdrop"

image = pipeline(prompt).images[0]

+image

```

Some limitations of using Kohya LoRAs with 🤗 Diffusers include:

-- Images may not look like those generated by UIs - like ComfyUI - for multiple reasons which are explained [here](https://github.com/huggingface/diffusers/pull/4287/#issuecomment-1655110736).

+- Images may not look like those generated by UIs - like ComfyUI - for multiple reasons, which are explained [here](https://github.com/huggingface/diffusers/pull/4287/#issuecomment-1655110736).

- [LyCORIS checkpoints](https://github.com/KohakuBlueleaf/LyCORIS) aren't fully supported. The [`~loaders.LoraLoaderMixin.load_lora_weights`] method loads LyCORIS checkpoints with LoRA and LoCon modules, but Hada and LoKR are not supported.

@@ -297,4 +305,5 @@ pipeline.load_lora_weights("TheLastBen/William_Eggleston_Style_SDXL", weight_nam

# use by william eggleston in the prompt to trigger the LoRA

prompt = "a house by william eggleston, sunrays, beautiful, sunlight, sunrays, beautiful"

image = pipeline(prompt=prompt).images[0]

-```

\ No newline at end of file

+image

+```

diff --git a/docs/source/en/using-diffusers/loading_overview.md b/docs/source/en/using-diffusers/loading_overview.md

index df870505219bb..b36fdb77e6dde 100644

--- a/docs/source/en/using-diffusers/loading_overview.md

+++ b/docs/source/en/using-diffusers/loading_overview.md

@@ -14,4 +14,4 @@ specific language governing permissions and limitations under the License.

🧨 Diffusers offers many pipelines, models, and schedulers for generative tasks. To make loading these components as simple as possible, we provide a single and unified method - `from_pretrained()` - that loads any of these components from either the Hugging Face [Hub](https://huggingface.co/models?library=diffusers&sort=downloads) or your local machine. Whenever you load a pipeline or model, the latest files are automatically downloaded and cached so you can quickly reuse them next time without redownloading the files.

-This section will show you everything you need to know about loading pipelines, how to load different components in a pipeline, how to load checkpoint variants, and how to load community pipelines. You'll also learn how to load schedulers and compare the speed and quality trade-offs of using different schedulers. Finally, you'll see how to convert and load KerasCV checkpoints so you can use them in PyTorch with 🧨 Diffusers.

\ No newline at end of file

+This section will show you everything you need to know about loading pipelines, how to load different components in a pipeline, how to load checkpoint variants, and how to load community pipelines. You'll also learn how to load schedulers and compare the speed and quality trade-offs of using different schedulers. Finally, you'll see how to convert and load KerasCV checkpoints so you can use them in PyTorch with 🧨 Diffusers.

diff --git a/docs/source/en/using-diffusers/other-formats.md b/docs/source/en/using-diffusers/other-formats.md

index c2f10ff796375..84945a6da87a7 100644

--- a/docs/source/en/using-diffusers/other-formats.md

+++ b/docs/source/en/using-diffusers/other-formats.md

@@ -14,7 +14,7 @@ specific language governing permissions and limitations under the License.

[[open-in-colab]]

-Stable Diffusion models are available in different formats depending on the framework they're trained and saved with, and where you download them from. Converting these formats for use in 🤗 Diffusers allows you to use all the features supported by the library, such as [using different schedulers](schedulers) for inference, [building your custom pipeline](write_own_pipeline), and a variety of techniques and methods for [optimizing inference speed](./optimization/opt_overview).

+Stable Diffusion models are available in different formats depending on the framework they're trained and saved with, and where you download them from. Converting these formats for use in 🤗 Diffusers allows you to use all the features supported by the library, such as [using different schedulers](schedulers) for inference, [building your custom pipeline](write_own_pipeline), and a variety of techniques and methods for [optimizing inference speed](../optimization/opt_overview).

@@ -28,7 +28,7 @@ This guide will show you how to convert other Stable Diffusion formats to be com

The checkpoint - or `.ckpt` - format is commonly used to store and save models. The `.ckpt` file contains the entire model and is typically several GBs in size. While you can load and use a `.ckpt` file directly with the [`~StableDiffusionPipeline.from_single_file`] method, it is generally better to convert the `.ckpt` file to 🤗 Diffusers so both formats are available.

-There are two options for converting a `.ckpt` file; use a Space to convert the checkpoint or convert the `.ckpt` file with a script.

+There are two options for converting a `.ckpt` file: use a Space to convert the checkpoint or convert the `.ckpt` file with a script.

### Convert with a Space

@@ -116,7 +116,7 @@ pipeline = DiffusionPipeline.from_pretrained(

)

```

-Then you can generate an image like:

+Then, you can generate an image like:

```py

from diffusers import DiffusionPipeline

@@ -136,53 +136,41 @@ image = pipeline(prompt, num_inference_steps=50).images[0]

[Automatic1111](https://github.com/AUTOMATIC1111/stable-diffusion-webui) (A1111) is a popular web UI for Stable Diffusion that supports model sharing platforms like [Civitai](https://civitai.com/). Models trained with the Low-Rank Adaptation (LoRA) technique are especially popular because they're fast to train and have a much smaller file size than a fully finetuned model. 🤗 Diffusers supports loading A1111 LoRA checkpoints with [`~loaders.LoraLoaderMixin.load_lora_weights`]:

```py

-from diffusers import DiffusionPipeline, UniPCMultistepScheduler

+from diffusers import StableDiffusionXLPipeline

import torch

-pipeline = DiffusionPipeline.from_pretrained(

- "andite/anything-v4.0", torch_dtype=torch.float16, safety_checker=None

+pipeline = StableDiffusionXLPipeline.from_pretrained(

+ "Lykon/dreamshaper-xl-1-0", torch_dtype=torch.float16, variant="fp16"

).to("cuda")

-pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config)

```

-Download a LoRA checkpoint from Civitai; this example uses the [Howls Moving Castle,Interior/Scenery LoRA (Ghibli Stlye)](https://civitai.com/models/14605?modelVersionId=19998) checkpoint, but feel free to try out any LoRA checkpoint!

+Download a LoRA checkpoint from Civitai; this example uses the [Blueprintify SD XL 1.0](https://civitai.com/models/150986/blueprintify-sd-xl-10) checkpoint, but feel free to try out any LoRA checkpoint!

```py

# uncomment to download the safetensor weights

-#!wget https://civitai.com/api/download/models/19998 -O howls_moving_castle.safetensors

+#!wget https://civitai.com/api/download/models/168776 -O blueprintify.safetensors

```

Load the LoRA checkpoint into the pipeline with the [`~loaders.LoraLoaderMixin.load_lora_weights`] method:

```py

-pipeline.load_lora_weights(".", weight_name="howls_moving_castle.safetensors")

+pipeline.load_lora_weights(".", weight_name="blueprintify.safetensors")

```

Now you can use the pipeline to generate images:

```py

-prompt = "masterpiece, illustration, ultra-detailed, cityscape, san francisco, golden gate bridge, california, bay area, in the snow, beautiful detailed starry sky"

+prompt = "bl3uprint, a highly detailed blueprint of the empire state building, explaining how to build all parts, many txt, blueprint grid backdrop"

negative_prompt = "lowres, cropped, worst quality, low quality, normal quality, artifacts, signature, watermark, username, blurry, more than one bridge, bad architecture"

-images = pipeline(

+image = pipeline(

prompt=prompt,

negative_prompt=negative_prompt,

- width=512,

- height=512,

- num_inference_steps=25,

- num_images_per_prompt=4,

generator=torch.manual_seed(0),

-).images

-```

-

-Display the images:

-

-```py

-from diffusers.utils import make_image_grid

-

-make_image_grid(images, 2, 2)

+).images[0]

+image

```

-As you can see most images look very similar and are arguably of very similar quality. It often really depends on the specific use case which scheduler to choose. A good approach is always to run multiple different +As you can see, most images look very similar and are arguably of very similar quality. It often really depends on the specific use case which scheduler to choose. A good approach is always to run multiple different schedulers to compare results. ## Changing the Scheduler in Flax -If you are a JAX/Flax user, you can also change the default pipeline scheduler. This is a complete example of how to run inference using the Flax Stable Diffusion pipeline and the super-fast [DDPM-Solver++ scheduler](../api/schedulers/multistep_dpm_solver): +If you are a JAX/Flax user, you can also change the default pipeline scheduler. This is a complete example of how to run inference using the Flax Stable Diffusion pipeline and the super-fast [DPM-Solver++ scheduler](../api/schedulers/multistep_dpm_solver): ```Python import jax diff --git a/docs/source/en/using-diffusers/using_safetensors.md b/docs/source/en/using-diffusers/using_safetensors.md index 2f47eb08cb839..3e89e7eed9a01 100644 --- a/docs/source/en/using-diffusers/using_safetensors.md +++ b/docs/source/en/using-diffusers/using_safetensors.md @@ -1,3 +1,15 @@ + + # Load safetensors [[open-in-colab]] @@ -55,11 +67,11 @@ There are several reasons for using safetensors: The time it takes to load the entire pipeline: ```py - from diffusers import StableDiffusionPipeline + from diffusers import StableDiffusionPipeline - pipeline = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1", use_safetensors=True) - "Loaded in safetensors 0:00:02.033658" - "Loaded in PyTorch 0:00:02.663379" + pipeline = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1", use_safetensors=True) + "Loaded in safetensors 0:00:02.033658" + "Loaded in PyTorch 0:00:02.663379" ``` But the actual time it takes to load 500MB of the model weights is only:

-  +

+

diff --git a/docs/source/en/using-diffusers/push_to_hub.md b/docs/source/en/using-diffusers/push_to_hub.md

index 4683860317680..58598c3bc443c 100644

--- a/docs/source/en/using-diffusers/push_to_hub.md

+++ b/docs/source/en/using-diffusers/push_to_hub.md

@@ -1,3 +1,15 @@

+

+

# Push files to the Hub

[[open-in-colab]]

@@ -20,7 +32,7 @@ notebook_login()

## Models

-To push a model to the Hub, call [`~diffusers.utils.PushToHubMixin.push_to_hub`] and specfiy the repository id of the model to be stored on the Hub:

+To push a model to the Hub, call [`~diffusers.utils.PushToHubMixin.push_to_hub`] and specify the repository id of the model to be stored on the Hub:

```py

from diffusers import ControlNetModel

@@ -36,7 +48,7 @@ controlnet = ControlNetModel(

controlnet.push_to_hub("my-controlnet-model")

```

-For model's, you can also specify the [*variant*](loading#checkpoint-variants) of the weights to push to the Hub. For example, to push `fp16` weights:

+For models, you can also specify the [*variant*](loading#checkpoint-variants) of the weights to push to the Hub. For example, to push `fp16` weights:

```py

controlnet.push_to_hub("my-controlnet-model", variant="fp16")

@@ -52,7 +64,7 @@ model = ControlNetModel.from_pretrained("your-namespace/my-controlnet-model")

## Scheduler

-To push a scheduler to the Hub, call [`~diffusers.utils.PushToHubMixin.push_to_hub`] and specfiy the repository id of the scheduler to be stored on the Hub:

+To push a scheduler to the Hub, call [`~diffusers.utils.PushToHubMixin.push_to_hub`] and specify the repository id of the scheduler to be stored on the Hub:

```py

from diffusers import DDIMScheduler

@@ -159,13 +171,13 @@ pipeline = StableDiffusionPipeline.from_pretrained("your-namespace/my-pipeline")

Set `private=True` in the [`~diffusers.utils.PushToHubMixin.push_to_hub`] function to keep your model, scheduler, or pipeline files private:

```py

-controlnet.push_to_hub("my-controlnet-model", private=True)

+controlnet.push_to_hub("my-controlnet-model-private", private=True)

```

-Private repositories are only visible to you, and other users won't be able to clone the repository and your repository won't appear in search results. Even if a user has the URL to your private repository, they'll receive a `404 - Repo not found error.`

+Private repositories are only visible to you, and other users won't be able to clone the repository and your repository won't appear in search results. Even if a user has the URL to your private repository, they'll receive a `404 - Sorry, we can't find the page you are looking for.`

-To load a model, scheduler, or pipeline from a private or gated repositories, set `use_auth_token=True`:

+To load a model, scheduler, or pipeline from private or gated repositories, set `use_auth_token=True`:

```py

-model = ControlNet.from_pretrained("your-namespace/my-controlnet-model", use_auth_token=True)

-```

\ No newline at end of file

+model = ControlNetModel.from_pretrained("your-namespace/my-controlnet-model-private", use_auth_token=True)

+```

diff --git a/docs/source/en/using-diffusers/schedulers.md b/docs/source/en/using-diffusers/schedulers.md

index c791b47b78327..9a8dd29ec2ea5 100644

--- a/docs/source/en/using-diffusers/schedulers.md

+++ b/docs/source/en/using-diffusers/schedulers.md

@@ -15,13 +15,13 @@ specific language governing permissions and limitations under the License.

[[open-in-colab]]

Diffusion pipelines are inherently a collection of diffusion models and schedulers that are partly independent from each other. This means that one is able to switch out parts of the pipeline to better customize

-a pipeline to one's use case. The best example of this is the [Schedulers](../api/schedulers/overview.md).

+a pipeline to one's use case. The best example of this is the [Schedulers](../api/schedulers/overview).

Whereas diffusion models usually simply define the forward pass from noise to a less noisy sample,

schedulers define the whole denoising process, *i.e.*:

- How many denoising steps?

- Stochastic or deterministic?

-- What algorithm to use to find the denoised sample

+- What algorithm to use to find the denoised sample?

They can be quite complex and often define a trade-off between **denoising speed** and **denoising quality**.

It is extremely difficult to measure quantitatively which scheduler works best for a given diffusion pipeline, so it is often recommended to simply try out which works best.

@@ -63,7 +63,7 @@ pipeline.scheduler

```

PNDMScheduler {

"_class_name": "PNDMScheduler",

- "_diffusers_version": "0.8.0.dev0",

+ "_diffusers_version": "0.21.4",

"beta_end": 0.012,

"beta_schedule": "scaled_linear",

"beta_start": 0.00085,

@@ -72,6 +72,7 @@ PNDMScheduler {

"set_alpha_to_one": false,

"skip_prk_steps": true,

"steps_offset": 1,

+ "timestep_spacing": "leading",

"trained_betas": null

}

```

@@ -101,7 +102,7 @@ image

## Changing the scheduler

-Now we show how easy it is to change the scheduler of a pipeline. Every scheduler has a property [`SchedulerMixin.compatibles`]

+Now we show how easy it is to change the scheduler of a pipeline. Every scheduler has a property [`~SchedulerMixin.compatibles`]

which defines all compatible schedulers. You can take a look at all available, compatible schedulers for the Stable Diffusion pipeline as follows.

```python

@@ -110,27 +111,40 @@ pipeline.scheduler.compatibles

**Output**:

```

-[diffusers.schedulers.scheduling_lms_discrete.LMSDiscreteScheduler,

+[diffusers.utils.dummy_torch_and_torchsde_objects.DPMSolverSDEScheduler,

+ diffusers.schedulers.scheduling_euler_discrete.EulerDiscreteScheduler,

+ diffusers.schedulers.scheduling_lms_discrete.LMSDiscreteScheduler,

diffusers.schedulers.scheduling_ddim.DDIMScheduler,

+ diffusers.schedulers.scheduling_ddpm.DDPMScheduler,

+ diffusers.schedulers.scheduling_heun_discrete.HeunDiscreteScheduler,

diffusers.schedulers.scheduling_dpmsolver_multistep.DPMSolverMultistepScheduler,

- diffusers.schedulers.scheduling_euler_discrete.EulerDiscreteScheduler,

+ diffusers.schedulers.scheduling_deis_multistep.DEISMultistepScheduler,

diffusers.schedulers.scheduling_pndm.PNDMScheduler,

- diffusers.schedulers.scheduling_ddpm.DDPMScheduler,

- diffusers.schedulers.scheduling_euler_ancestral_discrete.EulerAncestralDiscreteScheduler]

+ diffusers.schedulers.scheduling_euler_ancestral_discrete.EulerAncestralDiscreteScheduler,

+ diffusers.schedulers.scheduling_unipc_multistep.UniPCMultistepScheduler,

+ diffusers.schedulers.scheduling_k_dpm_2_discrete.KDPM2DiscreteScheduler,

+ diffusers.schedulers.scheduling_dpmsolver_singlestep.DPMSolverSinglestepScheduler,

+ diffusers.schedulers.scheduling_k_dpm_2_ancestral_discrete.KDPM2AncestralDiscreteScheduler]

```

Cool, lots of schedulers to look at. Feel free to have a look at their respective class definitions:

-- [`LMSDiscreteScheduler`],

-- [`DDIMScheduler`],

-- [`DPMSolverMultistepScheduler`],

-- [`EulerDiscreteScheduler`],

-- [`PNDMScheduler`],

-- [`DDPMScheduler`],

-- [`EulerAncestralDiscreteScheduler`].

+- [`EulerDiscreteScheduler`],

+- [`LMSDiscreteScheduler`],

+- [`DDIMScheduler`],

+- [`DDPMScheduler`],

+- [`HeunDiscreteScheduler`],

+- [`DPMSolverMultistepScheduler`],

+- [`DEISMultistepScheduler`],

+- [`PNDMScheduler`],

+- [`EulerAncestralDiscreteScheduler`],

+- [`UniPCMultistepScheduler`],

+- [`KDPM2DiscreteScheduler`],

+- [`DPMSolverSinglestepScheduler`],

+- [`KDPM2AncestralDiscreteScheduler`].

We will now compare the input prompt with all other schedulers. To change the scheduler of the pipeline you can make use of the

-convenient [`ConfigMixin.config`] property in combination with the [`ConfigMixin.from_config`] function.

+convenient [`~ConfigMixin.config`] property in combination with the [`~ConfigMixin.from_config`] function.

```python

pipeline.scheduler.config

@@ -139,7 +153,7 @@ pipeline.scheduler.config

returns a dictionary of the configuration of the scheduler:

**Output**:

-```

+```py

FrozenDict([('num_train_timesteps', 1000),

('beta_start', 0.00085),

('beta_end', 0.012),

@@ -147,9 +161,12 @@ FrozenDict([('num_train_timesteps', 1000),

('trained_betas', None),

('skip_prk_steps', True),

('set_alpha_to_one', False),

+ ('prediction_type', 'epsilon'),

+ ('timestep_spacing', 'leading'),

('steps_offset', 1),

+ ('_use_default_values', ['timestep_spacing', 'prediction_type']),

('_class_name', 'PNDMScheduler'),

- ('_diffusers_version', '0.8.0.dev0'),

+ ('_diffusers_version', '0.21.4'),

('clip_sample', False)])

```

@@ -182,7 +199,7 @@ If you are a JAX/Flax user, please check [this section](#changing-the-scheduler-

## Compare schedulers

So far we have tried running the stable diffusion pipeline with two schedulers: [`PNDMScheduler`] and [`DDIMScheduler`].

-A number of better schedulers have been released that can be run with much fewer steps, let's compare them here:

+A number of better schedulers have been released that can be run with much fewer steps; let's compare them here:

[`LMSDiscreteScheduler`] usually leads to better results:

@@ -241,8 +258,7 @@ image

-At the time of writing this doc [`DPMSolverMultistepScheduler`] gives arguably the best speed/quality trade-off and can be run with as little

-as 20 steps.

+[`DPMSolverMultistepScheduler`] gives a reasonable speed/quality trade-off and can be run with as little as 20 steps.

```python

from diffusers import DPMSolverMultistepScheduler

@@ -260,12 +276,12 @@ image

+

+

-As you can see most images look very similar and are arguably of very similar quality. It often really depends on the specific use case which scheduler to choose. A good approach is always to run multiple different +As you can see, most images look very similar and are arguably of very similar quality. It often really depends on the specific use case which scheduler to choose. A good approach is always to run multiple different schedulers to compare results. ## Changing the Scheduler in Flax -If you are a JAX/Flax user, you can also change the default pipeline scheduler. This is a complete example of how to run inference using the Flax Stable Diffusion pipeline and the super-fast [DDPM-Solver++ scheduler](../api/schedulers/multistep_dpm_solver): +If you are a JAX/Flax user, you can also change the default pipeline scheduler. This is a complete example of how to run inference using the Flax Stable Diffusion pipeline and the super-fast [DPM-Solver++ scheduler](../api/schedulers/multistep_dpm_solver): ```Python import jax diff --git a/docs/source/en/using-diffusers/using_safetensors.md b/docs/source/en/using-diffusers/using_safetensors.md index 2f47eb08cb839..3e89e7eed9a01 100644 --- a/docs/source/en/using-diffusers/using_safetensors.md +++ b/docs/source/en/using-diffusers/using_safetensors.md @@ -1,3 +1,15 @@ + + # Load safetensors [[open-in-colab]] @@ -55,11 +67,11 @@ There are several reasons for using safetensors: The time it takes to load the entire pipeline: ```py - from diffusers import StableDiffusionPipeline + from diffusers import StableDiffusionPipeline - pipeline = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1", use_safetensors=True) - "Loaded in safetensors 0:00:02.033658" - "Loaded in PyTorch 0:00:02.663379" + pipeline = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1", use_safetensors=True) + "Loaded in safetensors 0:00:02.033658" + "Loaded in PyTorch 0:00:02.663379" ``` But the actual time it takes to load 500MB of the model weights is only: