Table of Contents

- Publication Updates

- What can I find in this repository?

- Abstract

- Usage on a GPU lab machine

- Dataset installation

- Citation

- License

- Code Authors

- Star History

- Contact

I have been working since the end of my Master's in 2020 to publish this dissertation in a journal. As of May 2023, this research is published in PLOS ONE, and can be read and cited here: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0280841. Here are the latest updates of this project:

- Completed my Master's degree and submitted my dissertation. Final results: 90%.

- Began discussions with my supervisors to consider publishing the dissertation in a renowned journal.

- Selected a target journal to publish in: PLOS ONE.

- Began transforming the project from a dissertation report to a paper (choice of language, trimming down, changing narrative, updating figures).

- After multiple iterations and feedback from both my supervisors, a first draft was created.

- Due to the time that has passed since the initial dissertation project was finished, help was enlisted by including my supervisor's PhD student. The goal was to:

- update the literature review with more recent papers,

- reproduce the results and update the code/instructions for installation and setup (code can be found in this updated repository: Adamouization/Breast-Cancer-Detection-Mammogram-Deep-Learning-Publication),

- have an extra pair of eyes to polish the whole paper and keep the flow consistent.

- The paper was submitted to PLOS ONE in July 2022.

- Reviewers got back to us in September 2022 with amendments for the paper to be considered for publication. These amendments included revamping the narrative to highlight the main contribution of the paper, adding sections that explain the processes and decision-making, and making general amendments so that the paper's flow and style remain consistent throughout.

- The amended version was sent in October.

- The paper, entitled "A divide and conquer approach to maximise deep learning mammography classification accuracies", was formally accepted for publication in January 2023.

- In May 2023, the paper was finally published in PLOS ONE. It can be read and cited here: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0280841

This repository contains the full dissertation project (code + report) for the MSc Artificial Intelligence at the University of St Andrews (2020).

The publication of this project can be found here:

- Paper published in PLOS ONE: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0280841

- Peer-reviewed code: https://github.com/Adamouization/Breast-Cancer-Detection-Mammogram-Deep-Learning-Publication

The original dissertation report can be read here: Breast Cancer Detection in Mammograms using Deep Learning Techniques, Adam Jaamour (2020)

If you have any questions, issues or messages, please either:

- Post a comment on PLOS ONE: https://journals.plos.org/plosone/article/comments?id=10.1371/journal.pone.0280841

- Open a new issue in this repository: https://github.com/Adamouization/Breast-Cancer-Detection-Mammogram-Deep-Learning/issues/new

The objective of this dissertation is to explore various deep learning techniques that can be used to implement a system which learns how to detect instances of breast cancer in mammograms. Nowadays, breast cancer claims 11,400 lives on average every year in the UK, making it one of the deadliest diseases. Mammography is the gold standard for detecting early signs of breast cancer, which can help cure the disease during its early stages. However, incorrect mammography diagnoses are common and may harm patients through unnecessary treatments and operations (or a lack of treatments). Therefore, systems that can learn to detect breast cancer on their own could help reduce the number of incorrect interpretations and missed cases.

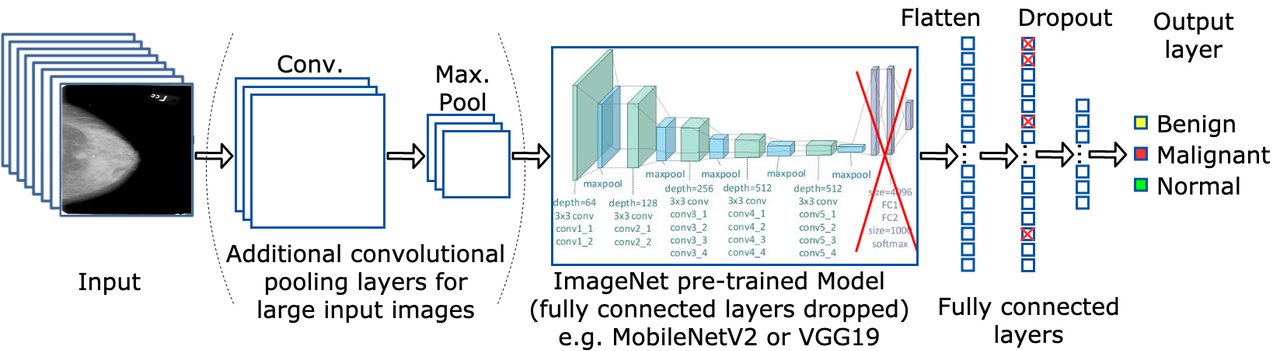

Convolutional Neural Networks (CNNs) are used as part of a deep learning pipeline initially developed in a group and further extended individually. A bag-of-tricks approach is followed to analyse the effects on performance and efficiency of diverse deep learning techniques such as different architectures (VGG19, ResNet50, InceptionV3, DenseNet121, MobileNetV2), class weights, input sizes, amounts of transfer learning, and types of mammograms.

Ultimately, 67.08% accuracy is achieved on the CBIS-DDSM dataset by transferring pre-trained ImageNet weights to a MobileNetV2 architecture and pre-trained weights from a binary version of the mini-MIAS dataset to the fully connected layers of the model. Furthermore, using class weights to counter the problem of imbalanced datasets and splitting CBIS-DDSM samples between masses and calcifications also increases the overall accuracy. Other techniques tested, such as data augmentation and larger image sizes, do not yield increased accuracies, while the mini-MIAS dataset proves to be too small for any meaningful results using deep learning techniques. These results are compared with other papers using the CBIS-DDSM and mini-MIAS datasets, and with the baseline set during the implementation of a deep learning pipeline developed as a group.
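The class-weighting idea mentioned in the abstract can be illustrated with a minimal sketch. It assumes the common "balanced" heuristic, where each weight is `n_samples / (n_classes * class_count)`; the project code may compute its weights differently, and the function name and label counts below are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return {class: weight} so that rarer classes receive larger weights.

    Assumption: uses the common "balanced" heuristic
    n_samples / (n_classes * class_count). This is not necessarily
    the formula used in this project.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Made-up label distribution, for illustration only.
labels = ["benign"] * 6 + ["malignant"] * 2
weights = balanced_class_weights(labels)
print(weights)
```

A dictionary of this shape can be passed to Keras `Model.fit` through its `class_weight` argument, provided the keys are the integer class indices rather than string labels.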

Clone the repository:

cd ~/Projects

git clone https://github.com/Adamouization/Breast-Cancer-Detection-Code

Build the virtual environment that will be used to run TensorFlow 2 with CUDA 10 for Python on the GPU:

cd libraries/tf2

tar xvzf tensorflow2-cuda-10-1-e5bd53b3b5e6.tar.gz

sh build.sh

Activate the virtual environment:

source /cs/scratch/<username>/tf2/venv/bin/activate

Create `output` and `saved_models` directories to store the results:

mkdir output

mkdir saved_models

cd into the src directory and run the code:

main.py [-h] -d DATASET [-mt MAMMOGRAMTYPE] -m MODEL [-r RUNMODE] [-lr LEARNING_RATE] [-b BATCHSIZE] [-e1 MAX_EPOCH_FROZEN] [-e2 MAX_EPOCH_UNFROZEN] [-roi] [-v] [-n NAME]

where:

- `-h` is a flag for help on how to run the code.

- `DATASET` is the dataset to use. Must be either `mini-MIAS`, `mini-MIAS-binary` or `CBIS-DDMS`. Defaults to `CBIS-DDMS`.

- `MAMMOGRAMTYPE` is the type of mammograms to use. Can be either `calc`, `mass` or `all`. Defaults to `all`.

- `MODEL` is the model to use. Must be either `VGG-common`, `VGG`, `ResNet`, `Inception`, `DenseNet`, `MobileNet` or `CNN`.

- `RUNMODE` is the mode to run in (`train` or `test`). Default value is `train`.

- `LEARNING_RATE` is the optimiser's initial learning rate when training the model during the first training phase (frozen layers). Defaults to `0.001`. Must be a positive float.

- `BATCHSIZE` is the batch size to use when training the model. Defaults to `2`. Must be a positive integer.

- `MAX_EPOCH_FROZEN` is the maximum number of epochs in the first training phase (with frozen layers). Defaults to `100`.

- `MAX_EPOCH_UNFROZEN` is the maximum number of epochs in the second training phase (with unfrozen layers). Defaults to `50`.

- `-roi` is a flag to use versions of the images cropped around the ROI. Only usable with the mini-MIAS dataset. Defaults to `False`.

- `-v` is a flag controlling verbose mode, which prints additional statements for debugging purposes.

- `NAME` is the name of the experiment being tested (used for saving plots and model weights). Defaults to an empty string.
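As a rough illustration of how the options above fit together, here is a minimal `argparse` sketch that mirrors the documented flags, defaults and constraints. It is not the project's actual `main.py`: the `dest` names and the two validator helpers are hypothetical, and `-d` and `-m` are treated as required because the usage line shows them without brackets.

```python
import argparse


def positive_int(value):
    """Hypothetical validator: accept only integers greater than zero."""
    number = int(value)
    if number <= 0:
        raise argparse.ArgumentTypeError(f"{value} is not a positive integer")
    return number


def positive_float(value):
    """Hypothetical validator: accept only floats greater than zero."""
    number = float(value)
    if number <= 0:
        raise argparse.ArgumentTypeError(f"{value} is not a positive float")
    return number


def build_parser():
    # argparse adds the -h help flag automatically.
    parser = argparse.ArgumentParser(prog="main.py")
    # Option values are spelled exactly as documented above.
    parser.add_argument("-d", dest="dataset", metavar="DATASET", required=True,
                        choices=["mini-MIAS", "mini-MIAS-binary", "CBIS-DDMS"])
    parser.add_argument("-mt", dest="mammogram_type", metavar="MAMMOGRAMTYPE",
                        choices=["calc", "mass", "all"], default="all")
    parser.add_argument("-m", dest="model", metavar="MODEL", required=True,
                        choices=["VGG-common", "VGG", "ResNet", "Inception",
                                 "DenseNet", "MobileNet", "CNN"])
    parser.add_argument("-r", dest="run_mode", metavar="RUNMODE",
                        choices=["train", "test"], default="train")
    parser.add_argument("-lr", dest="learning_rate", metavar="LEARNING_RATE",
                        type=positive_float, default=0.001)
    parser.add_argument("-b", dest="batch_size", metavar="BATCHSIZE",
                        type=positive_int, default=2)
    parser.add_argument("-e1", dest="max_epoch_frozen", metavar="MAX_EPOCH_FROZEN",
                        type=positive_int, default=100)
    parser.add_argument("-e2", dest="max_epoch_unfrozen", metavar="MAX_EPOCH_UNFROZEN",
                        type=positive_int, default=50)
    parser.add_argument("-roi", dest="roi", action="store_true")
    parser.add_argument("-v", dest="verbose", action="store_true")
    parser.add_argument("-n", dest="name", metavar="NAME", default="")
    return parser


# Example: train MobileNet on mini-MIAS with ROI crops and a batch size of 4.
args = build_parser().parse_args(["-d", "mini-MIAS", "-m", "MobileNet", "-b", "4", "-roi"])
print(args.dataset, args.model, args.batch_size, args.roi, args.run_mode)
```

The example call corresponds to running `main.py -d mini-MIAS -m MobileNet -b 4 -roi` from the `src` directory; every option that is not given keeps the default listed above.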

- This example will use the mini-MIAS dataset. After cloning the project, travel to the `data/mini-MIAS` directory (there should be 3 files in it).

- Create `images_original` and `images_processed` directories in this directory:

cd data/mini-MIAS/

mkdir images_original

mkdir images_processed

- Move to the `images_original` directory and download the raw un-processed images:

cd images_original

wget http://peipa.essex.ac.uk/pix/mias/all-mias.tar.gz

- Unzip the dataset then delete all non-image files:

tar xvzf all-mias.tar.gz

rm -rf *.txt

rm -rf README

- Move back up one level and move to the `images_processed` directory. Create 3 new directories there (`benign_cases`, `malignant_cases` and `normal_cases`):

cd ../images_processed

mkdir benign_cases

mkdir malignant_cases

mkdir normal_cases

- Now run the Python script that processes the dataset and renders it usable with TensorFlow and Keras:

python3 ../../../src/dataset_processing_scripts/mini-MIAS-initial-pre-processing.py
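The directory-creation steps above can also be scripted. The sketch below reproduces only the `mkdir` commands using `pathlib`; the function name is hypothetical, it does not download or process any images, and the demo runs inside a temporary directory rather than the real `data/mini-MIAS` folder.

```python
import tempfile
from pathlib import Path


def create_mini_mias_dirs(dataset_root):
    """Create the folders the steps above expect and return their paths."""
    root = Path(dataset_root)
    created = []
    for relative in (
        "images_original",
        "images_processed/benign_cases",
        "images_processed/malignant_cases",
        "images_processed/normal_cases",
    ):
        target = root / relative
        # parents=True also creates images_processed; exist_ok=True makes reruns safe.
        target.mkdir(parents=True, exist_ok=True)
        created.append(target)
    return created


# Demo in a throwaway directory so the real dataset folder is not touched.
with tempfile.TemporaryDirectory() as tmp:
    created = create_mini_mias_dirs(Path(tmp) / "data" / "mini-MIAS")
    layout = sorted(path.relative_to(tmp).as_posix() for path in created)
    all_exist = all(path.is_dir() for path in created)

print("\n".join(layout))
```

To use it on the real project, call `create_mini_mias_dirs("data/mini-MIAS")` from the repository root before downloading the images.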

The DDSM and CBIS-DDSM datasets are very large (exceeding 160GB) and more complex to use than the mini-MIAS dataset. They were downloaded by the University of St Andrews School of Computer Science computing officers onto BigTMP, a 15TB filesystem usually used for storing large working data sets, which is mounted on the CentOS 7 computer lab clients with NVIDIA GPUs. Therefore, the download process of these datasets will not be covered in these instructions.

The generated CSV files to use these datasets can be found in the /data/CBIS-DDSM directory, but the mammograms will have to be downloaded separately. The DDSM dataset can be downloaded here, while the CBIS-DDSM dataset can be downloaded here.

@article{10.1371/journal.pone.0280841,

doi = {10.1371/journal.pone.0280841},

author = {Jaamour, Adam AND Myles, Craig AND Patel, Ashay AND Chen, Shuen-Jen AND McMillan, Lewis AND Harris-Birtill, David},

journal = {PLOS ONE},

publisher = {Public Library of Science},

title = {A divide and conquer approach to maximise deep learning mammography classification accuracies},

year = {2023},

month = {05},

volume = {18},

url = {https://doi.org/10.1371/journal.pone.0280841},

pages = {1-24},

number = {5},

}

@software{adam_jaamour_2020_3985051,

author = {Adam Jaamour and

Ashay Patel and

Shuen-Jen Chen},

title = {{Breast Cancer Detection in Mammograms using Deep

Learning Techniques: Source Code}},

month = aug,

year = 2020,

publisher = {Zenodo},

version = {v1.0},

doi = {10.5281/zenodo.3985051},

url = {https://doi.org/10.5281/zenodo.3985051}

}

- see LICENSE file.

- Adam Jaamour

- Ashay Patel

- Shuen-Jen Chen

The common pipeline can be found at DOI 10.5281/zenodo.3975092

- Email: adam[at]jaamour[dot]com

- Website: www.adam.jaamour.com

- LinkedIn: linkedin.com/in/adamjaamour