Alejandro Saucedo | a@ethical.institute

Twitter: @AxSaucedo

[NEXT]

We're hiring: seldon.io

[NEXT]

As our data science requirements grow...

[NEXT]

- Some ♥ TensorFlow

- Some ♥ R

- Some ♥ Spark

### Some ♥ all of them

[NEXT]

- Orchestration

- Explainability

- Reproducibility

[NEXT SECTION]

[NEXT]

Services with different computational requirements

Often with complex computational graphs

We need to be able to allocate the right resources

### This is a hard problem

[NEXT]

Wrapping an income classifier Python model
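
As a rough illustration of what "wrapping" can look like, here is a minimal sketch of a model class that exposes a `predict(X, features_names)` method, which is the general shape a Python model wrapper such as Seldon's expects. The weights, feature names and logistic scoring rule are invented stand-ins (assumptions for illustration only); a real income classifier would load a trained artifact instead.

```python
import math


class IncomeClassifier:
    """Stand-in income classifier exposing a single predict method."""

    def __init__(self):
        # Hypothetical hard-coded "model"; in practice this would load a
        # trained artifact from disk rather than define weights inline.
        self.feature_order = ["age", "hours_per_week", "education_years"]
        self.weights = {"age": 0.02, "hours_per_week": 0.03, "education_years": 0.08}
        self.bias = -3.0

    def predict(self, X, features_names=None):
        """Return [P(low income), P(high income)] for each input row."""
        names = list(features_names) if features_names else self.feature_order
        predictions = []
        for row in X:
            score = self.bias + sum(
                self.weights.get(name, 0.0) * value for name, value in zip(names, row)
            )
            prob_high = 1.0 / (1.0 + math.exp(-score))
            predictions.append([1.0 - prob_high, prob_high])
        return predictions


model = IncomeClassifier()
probabilities = model.predict([[25, 20, 8], [50, 60, 18]])
```

Keeping the class free of any serving logic is the point of the design: the same object can be called from a notebook, a test, or a serving container.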

[NEXT]

PyTorch Hub Deployment: https://bit.ly/pytorchseldon

[NEXT]

Serverless machine learning on Kubernetes, based on Knative

[NEXT]

Unifying multiple external machine learning libraries on a single API
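
One way to picture a single API over several libraries is an adapter layer. The sketch below is an assumption about the general pattern, not the API of any specific tool: `PredictAdapter`, `CallableAdapter`, `adapt` and `MeanModel` are hypothetical names, and the two "models" are toy stand-ins for objects coming from different libraries.

```python
class PredictAdapter:
    """Wraps any object that exposes .predict(rows)."""

    def __init__(self, model):
        self._model = model

    def infer(self, rows):
        return list(self._model.predict(rows))


class CallableAdapter:
    """Wraps a model that is a plain function applied to one row at a time."""

    def __init__(self, fn):
        self._fn = fn

    def infer(self, rows):
        return [self._fn(row) for row in rows]


def adapt(model):
    """Pick an adapter so that every model is called through .infer(rows)."""
    if hasattr(model, "predict"):
        return PredictAdapter(model)
    if callable(model):
        return CallableAdapter(model)
    raise TypeError(f"Do not know how to adapt {type(model).__name__}")


class MeanModel:
    """Toy model with a scikit-learn-like predict method."""

    def predict(self, rows):
        return [sum(row) / len(row) for row in rows]


models = [adapt(MeanModel()), adapt(lambda row: max(row))]
outputs = [model.infer([[1.0, 2.0, 3.0]]) for model in models]
```

Callers only ever see `infer`, so swapping the underlying library does not change the calling code.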

[NEXT SECTION]

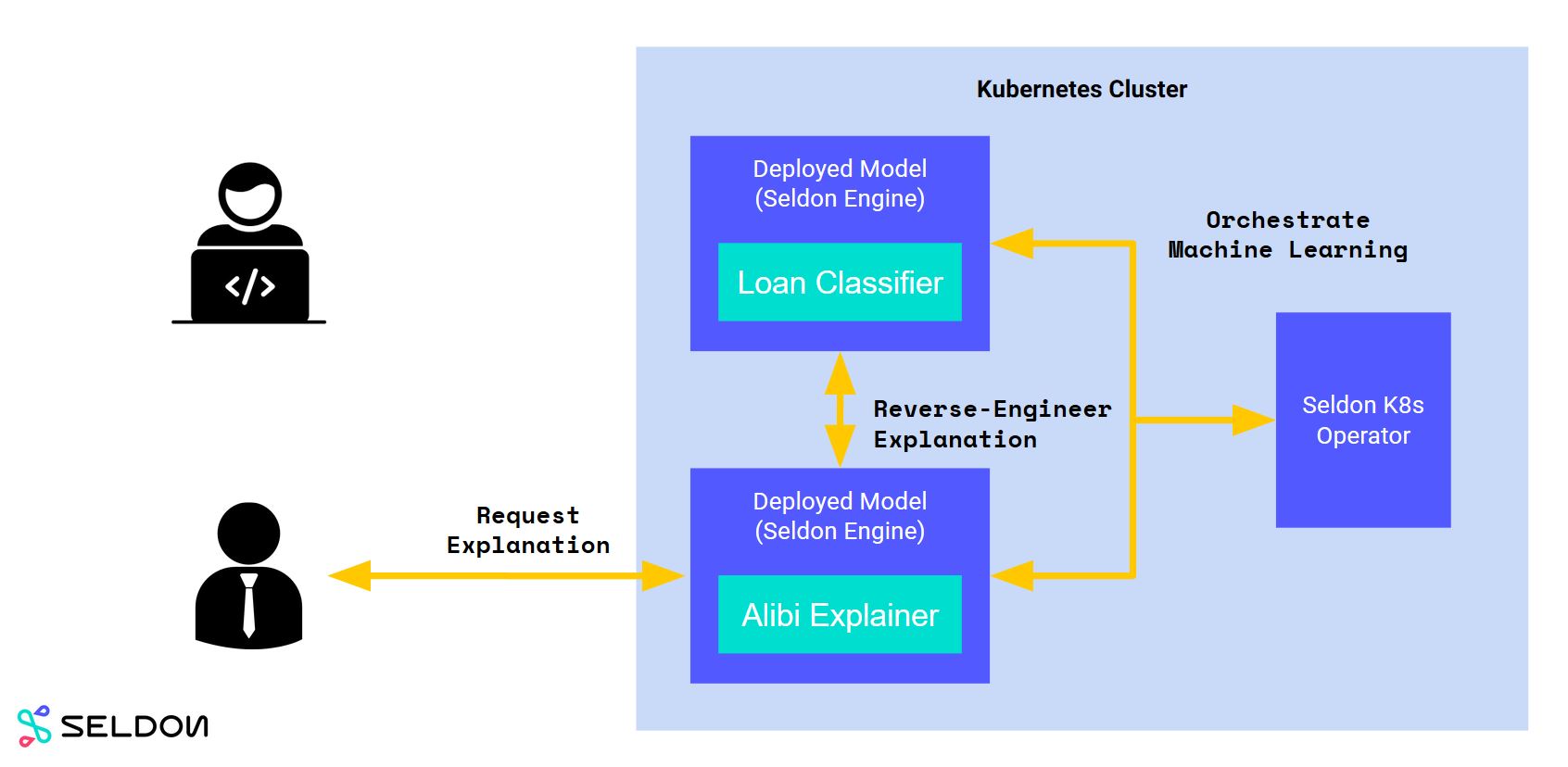

Tackling "black box model" situations

[NEXT]

Explainability through tools, process and domain expertise.

Our talk on Explainability of TensorFlow Models

[NEXT]

- Class imbalances

- Protected features

- Correlations

- Data representativeness
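
Two of the checks above can be sketched in a few lines: class imbalance as the share of each label, and the Pearson correlation between a protected feature and another feature (a high value hints that the second feature may act as a proxy). The function names and the toy data are assumptions for illustration.

```python
import math
from collections import Counter


def class_balance(labels):
    """Return the fraction of examples that belong to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


balance = class_balance(["approved", "approved", "approved", "rejected"])
proxy_strength = pearson([1, 2, 3, 4], [2, 4, 6, 8])
```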

[NEXT]

- Feature importance

- Model specific methods

- Domain knowledge abstraction

- Model metrics analysis
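
Feature importance can be estimated in a model-agnostic way by shuffling one column at a time and measuring how much accuracy drops. The sketch below is one such technique (permutation importance), written in plain Python under the assumption that the model is any callable mapping a row to a label; the toy model and data are invented.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows for which the model returns the expected label."""
    return sum(1 for row, label in zip(X, y) if model(row) == label) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            # Rebuild the dataset with only this column permuted.
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy model that only looks at the first feature.
toy_model = lambda row: int(row[0] > 0.5)
X = [[0.1, 5], [0.9, 3], [0.2, 7], [0.8, 1]]
y = [0, 1, 0, 1]
importances = permutation_importance(toy_model, X, y)
```

A feature the model never reads gets an importance of exactly zero, which makes this a useful sanity check before reaching for model-specific methods.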

[NEXT]

- Evaluation of metrics

- Manual human review

- Monitoring of anomalies

- Setting thresholds for divergence
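
Setting a divergence threshold can be sketched as comparing the distribution of live predictions against a reference distribution and raising a flag when the gap is too large. The example uses KL divergence over categorical values; the choice of metric, the smoothing constant and the threshold of 0.1 are assumptions for illustration, not recommended values.

```python
import math
from collections import Counter


def distribution(values, categories, smoothing=1e-6):
    """Smoothed relative frequency of each category in values."""
    counts = Counter(values)
    total = len(values) + smoothing * len(categories)
    return [(counts.get(c, 0) + smoothing) / total for c in categories]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same categories."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def has_diverged(reference, live, categories, threshold=0.1):
    """True when live data has drifted from the reference beyond the threshold."""
    p = distribution(live, categories)
    q = distribution(reference, categories)
    return kl_divergence(p, q) > threshold


categories = ["low", "high"]
reference = ["low"] * 50 + ["high"] * 50
stable = has_diverged(reference, ["low"] * 48 + ["high"] * 52, categories)
drifted = has_diverged(reference, ["low"] * 95 + ["high"] * 5, categories)
```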

[NEXT]

Deploying Explainer Modules: http://bit.ly/seldonexplainer

[NEXT]

Unifying multiple model explainability techniques

[NEXT]

Analyse datasets, evaluate models and monitor production

[NEXT SECTION]

[NEXT]

$ cat data-input.csv

> Date Open High Low Close Market Cap

> 1608 2013-04-28 135.30 135.98 132.10 134.21 1,500,520,000

> 1607 2013-04-29 134.44 147.49 134.00 144.54 1,491,160,000

> 1606 2013-04-30 144.00 146.93 134.05 139.00 1,597,780,000

$ cat feature-extractor.py

def open_norm_feature_extractor(df):
    feature = some_lib.get_open(df)
    return feature
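
For a self-contained variant of `open_norm_feature_extractor`, the sketch below replaces the placeholder `some_lib.get_open` call with an explicit min-max normalisation of the `Open` column. That choice of normalisation is an assumption (the slide does not say what the library does), and rows are plain dictionaries rather than a data frame so that the example runs without extra dependencies.

```python
def open_norm_feature_extractor(rows):
    """Min-max normalise the Open price of each row into the range [0, 1]."""
    opens = [float(row["Open"]) for row in rows]
    low, high = min(opens), max(opens)
    span = high - low
    if span == 0:
        # All prices identical: nothing to scale, so return zeros.
        return [0.0 for _ in opens]
    return [(value - low) / span for value in opens]


# The three rows shown in data-input.csv above.
rows = [
    {"Date": "2013-04-28", "Open": "135.30"},
    {"Date": "2013-04-29", "Open": "134.44"},
    {"Date": "2013-04-30", "Open": "144.00"},
]
features = open_norm_feature_extractor(rows)
```

Because the function depends only on its input, the same data file and the same code version always produce the same feature, which is what makes the step reproducible.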

[NEXT]

We can abstract our entire pipeline and data flows
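
A minimal sketch of that abstraction: each step is a named function, and the pipeline records every intermediate output so a run can be inspected or compared later. The `Pipeline` class and the three toy steps are hypothetical and only illustrate the idea.

```python
class Pipeline:
    """Runs named steps in order and keeps a trace of intermediate outputs."""

    def __init__(self, steps):
        self.steps = list(steps)  # list of (name, function) pairs

    def run(self, data):
        trace = []
        for name, step in self.steps:
            data = step(data)
            trace.append((name, data))
        return data, trace


def drop_missing(values):
    return [v for v in values if v is not None]


def scale(values):
    top = max(values)
    return [v / top for v in values]


def threshold(values):
    return [int(v >= 0.5) for v in values]


pipeline = Pipeline([
    ("drop_missing", drop_missing),
    ("scale", scale),
    ("threshold", threshold),
])
result, trace = pipeline.run([2.0, None, 8.0, 4.0])
```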

[NEXT]

Reusable NLP Pipelines: https://bit.ly/seldon-kf-nlp

[NEXT]

dvc add images.zip

dvc run -d images.zip -o model.p ./cnn.py

dvc remote add -d myrepo s3://mybucket

dvc push

Check it out at dvc.org

[NEXT]

[NEXT SECTION]

### Check it out & add more libraries

[NEXT]

Alejandro Saucedo | Twitter: @AxSaucedo