-

Notifications

You must be signed in to change notification settings - Fork 28

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Random Election using Stake Amount as Discrete Distribution #22

Comments

|

This is good suggestion. 👍

As above your mention, the selected validator is less than V+1. In this case, is the process of this selecting validators repeated? |

The amount of calculation depends on

Yes, it does. If we follow the case that the configuration doesn't allow a node to have multiple roles, 1) it will remove the selected validator from the candidates' list, 2) recalculate S, and 3) find the next winner, repeat these |

|

OK And how about using hash function like |

|

I think it mainly depends on its speed. And there are several considerations to using the cryptographic hash function.

|

|

My concern is that the random function can vary from platform to platform and program language and compile version. (https://en.wikipedia.org/wiki/Random_number_generation) So I suggest using the hash function. |

|

Random sampling is better than my proposal because of less calculation. I have two comment about this proposal. Duplicate election

I'll think more about the reward policy and then make a separate issue. A way to reduce computationsI think we don't need to calculate this at every round. Validators order is fixed for a while if any staking tx is not executed.

Total staking is 100000. I am a validator and someone propose a block. |

The PRNG mentioned here means an algorithm defined by LINK 2 Network as its specification (rather than languages or external libraries such as |

But, it is not disadvantageous, because the voting power is set by the staking value of user. But I'm also thinking about what it's like to give them the right to vote as many as they're elected. |

This proposal doesn't point out the incentive scheme, so it needs to do separately. We'll discuss this in #17.

That suggestion is useful. It will be able to reuse the results of the categorical distribution until the next stake transaction issued. If the stakes don't change often, we may be able to use an algorithm such as a binary tree, R-Tree or B-Tree instead of a linear search. In this case, 3.winner extraction will be O(V log N). |

|

A problem with this algorithm was raised in the last open session. Random election cannot protect for some candidate to be a validator having voting power over staking or over 1/3 of total extremely. |

|

Here a few points.

Strictly speaking, getting one node "accidentally" 1/3f+1 is a slightly different issue from getting distributed Byzantines "accidentally" 1/3f+1 of validators. However, the former has a negligible probability compared to the latter, which will occur with an apparent frequency. Therefore, I believe it is reasonable to treat these as "accidental (expected) BFT Violation Problem" in a common manner. |

|

I'm writing the results of PoC at Wikipage. However, it's not able to upload any images on Wikipage, so attach them to this ticket and use that URL. |

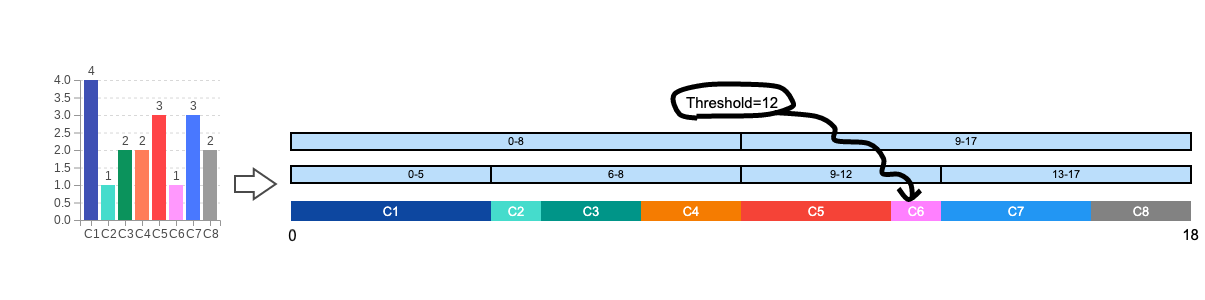

The scheme of selecting a Proposer and Validators based on PoS can be considered as random sampling from a group with a discrete probability distribution.

S: the total amount of issued stakes_i: the stake amount held by a candidatei(Σ s_i = S)Random Sampling based on Categorical Distribution

For simplicity, here is an example in which only a Proposer is selected from candidates with winning probability of

p_i = s_i / S.First, create a pseudo-random number generator using

vrf_hashas a seed, and determine the thresholdthresholdfor the Proposer. This random number algorithm should be deterministic and portable to other programming languages, but need not be cryptographic.Second, to make the result deterministic, we retrieve the candidates sorted in descending stake order.

Finally, find the candidate hit by the arrow

thresholdof Proposer.This is a common way of random sampling according to a categorical distribution by using a uniform random number. Similar to throwing an arrow on a spinning darts whose width is proportional to the probability of each item.

Selecting of a Consensus Group

By applying the above, we can select a consensus group consisting of one Proposer and

VValidators. This is equivalent to performingV+1categorical trials, which is the same as a random sampling model with a multinomial distribution. It's possible to illustrate this notion using a multinomial distribution demo I created in the past. This is equivalent to a model that selects a Proposer and Validators whenKis the number of candidates andn=V+1.As an example of intuitive code, I expand categorical sampling to multinomial.

In the above steps, a single candidate may assume multiple roles. If you want to exclude such a case, you can remove the winning candidate from the

candidates. In this case, thethresholdsmust be recalculated because the totalSof the population changes.Computational Complexity

The computational complexity is mainly affected by the number of candidates N. There is room for improvement by remembering the list of candidates that have been sorted by the stake.

The text was updated successfully, but these errors were encountered: