diff --git a/CHANGELOG.md b/CHANGELOG.md

index e4fdf73..1ff87ed 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -2,9 +2,24 @@

The following changes are present in the `main` branch of the repository and are not yet part of a release:

+ - N/A

+

+## Version 0.10.0

+

+This release implements ["Weighted Laplacian Smoothing for Surface Reconstruction of Particle-based Fluids" (Löschner, Böttcher, Jeske, Bender; 2023)](https://animation.rwth-aachen.de/publication/0583/), mesh cleanup based on ["Mesh Displacement: An Improved Contouring Method for Trivariate Data" (Moore, Warren; 1991)](https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.49.5214&rep=rep1&type=pdf) and a new, more efficient domain decomposition (see README.md for more details).

+

+ - Lib: Implement new spatial decomposition based on a regular grid of subdomains (subdomains are dense marching cubes grids)

- CLI: Make new spatial decomposition available in CLI with `--subdomain-grid=on`

- - Lib: Implement new spatial decomposition based on a regular grid of subdomains, subdomains are dense marching cubes grids

- - Lib: Support for reading and writing PLY meshes

+ - Lib: Implement weighted Laplacian smoothing to remove bumps from surfaces according to paper "Weighted Laplacian Smoothing for Surface Reconstruction of Particle-based Fluids" (Löschner, Böttcher, Jeske, Bender 2023)

+ - CLI: Add arguments to enable and control weighted Laplacian smoothing `--mesh-smoothing-iters=...`, `--mesh-smoothing-weights=on` etc.

+ - Lib: Implement `marching_cubes_cleanup` function: a marching cubes "mesh cleanup" decimation inspired by "Mesh Displacement: An Improved Contouring Method for Trivariate Data" (Moore, Warren 1991)

+ - CLI: Add argument to enable mesh cleanup: `--mesh-cleanup=on`

+ - Lib: Add functions to `TriMesh3d` to find non-manifold edges and vertices

+ - CLI: Add arguments to check if output meshes are manifold (no non-manifold edges and vertices): `--mesh-check-manifold=on`, `--mesh-check-closed=on`

+ - Lib: Support for mixed triangle and quad meshes

+ - Lib: Implement `convert_tris_to_quads` function: greedily merge triangles to quads if they fulfill certain criteria (maximum angle in quad, "squareness" of the quad, angle between triangle normals)

+ - CLI: Add arguments to enable and control triangle to quad conversion with `--generate-quads=on` etc.

+ - Lib: Support for reading and writing PLY meshes (`MixedTriQuadMesh3d`)

- CLI: Support for filtering input particles using an AABB with `--particle-aabb-min`/`--particle-aabb-max`

- CLI: Support for clamping the triangle mesh using an AABB with `--mesh-aabb-min`/`--mesh-aabb-max`

@@ -69,9 +84,9 @@ The following changes are present in the `main` branch of the repository and are

This release fixes a couple of bugs that may lead to inconsistent surface reconstructions when using domain decomposition (i.e. reconstructions with artificial bumps exactly at the subdomain boundaries, especially on flat surfaces). Currently there are no other known bugs and the domain decomposed approach appears to be really fast and robust.

-In addition the CLI now reports more detailed timing statistics for multi-threaded reconstructions.

+In addition, the CLI now reports more detailed timing statistics for multi-threaded reconstructions.

-Otherwise this release contains just some small changes to command line parameters.

+Otherwise, this release contains just some small changes to command line parameters.

- Lib: Add a `ParticleDensityComputationStrategy` enum to the `SpatialDecompositionParameters` struct. In order for domain decomposition to work consistently, the per particle densities have to be evaluated to a consistent value between domains. This is especially important for the ghost particles. Previously, this resulted inconsistent density values on boundaries if the ghost particle margin was not at least 2x the compact support radius (as this ensures that the inner ghost particles actually have the correct density). This option is now still available as the `IndependentSubdomains` strategy. The preferred way, that avoids the 2x ghost particle margin is the `SynchronizeSubdomains` where the density values of the particles in the subdomains are first collected into a global storage. This can be faster as the previous method as this avoids having to collect a double-width ghost particle layer. In addition there is the "playing it safe" option, the `Global` strategy, where the particle densities are computed in a completely global step before any domain decomposition. This approach however is *really* slow for large quantities of particles. For more information, read the documentation on the `ParticleDensityComputationStrategy` enum.

- Lib: Fix bug where the workspace storage was not cleared correctly leading to inconsistent results depending on the sequence of processed subdomains

@@ -91,7 +106,7 @@ Otherwise this release contains just some small changes to command line paramete

The biggest new feature is a domain decomposed approach for the surface reconstruction by performing a spatial decomposition of the particle set with an octree.

The resulting local patches can then be processed in parallel (leaving a single layer of boundary cells per patch untriangulated to avoid incompatible boundaries).

-Afterwards, a stitching procedure walks the octree back upwards and merges the octree leaves by averaging density values on the boundaries.

+Afterward, a stitching procedure walks the octree back upwards and merges the octree leaves by averaging density values on the boundaries.

As the library uses task based parallelism, a task for stitching can be enqueued as soon as all children of an octree node are processed.

Depending on the number of available threads and the particle data, this approach results in a speedup of 4-10x in comparison to the global parallel approach in selected benchmarks.

At the moment, this domain decomposition approach is only available when allowing to parallelize over particles using the `--mt-particles` flag.

diff --git a/CITATION.cff b/CITATION.cff

index 2cca56a..af22017 100644

--- a/CITATION.cff

+++ b/CITATION.cff

@@ -2,7 +2,7 @@

# Visit https://bit.ly/cffinit to generate yours today!

cff-version: 1.2.0

-title: splashsurf

+title: '"splashsurf" Surface Reconstruction Software'

message: >-

If you use this software in your work, please consider

citing it using these metadata.

@@ -12,10 +12,10 @@ authors:

given-names: Fabian

affiliation: RWTH Aachen University

orcid: 'https://orcid.org/0000-0001-6818-2953'

-url: 'https://www.floeschner.de/splashsurf'

+url: 'https://splashsurf.physics-simulation.org'

abstract: >-

Splashsurf is a surface reconstruction tool and framework

for reconstructing surfaces from particle data.

license: MIT

-version: 0.9.1

-date-released: '2023-04-19'

+version: 0.10.0

+date-released: '2023-09-25'

diff --git a/Cargo.lock b/Cargo.lock

index cd452f9..3183845 100644

--- a/Cargo.lock

+++ b/Cargo.lock

@@ -10,9 +10,9 @@ checksum = "f26201604c87b1e01bd3d98f8d5d9a8fcbb815e8cedb41ffccbeb4bf593a35fe"

[[package]]

name = "aho-corasick"

-version = "1.0.5"

+version = "1.1.1"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "0c378d78423fdad8089616f827526ee33c19f2fddbd5de1629152c9593ba4783"

+checksum = "ea5d730647d4fadd988536d06fecce94b7b4f2a7efdae548f1cf4b63205518ab"

dependencies = [

"memchr 2.6.3",

]

@@ -148,9 +148,9 @@ checksum = "b4682ae6287fcf752ecaabbfcc7b6f9b72aa33933dc23a554d853aea8eea8635"

[[package]]

name = "bumpalo"

-version = "3.13.0"

+version = "3.14.0"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "a3e2c3daef883ecc1b5d58c15adae93470a91d425f3532ba1695849656af3fc1"

+checksum = "7f30e7476521f6f8af1a1c4c0b8cc94f0bee37d91763d0ca2665f299b6cd8aec"

[[package]]

name = "bytecount"

@@ -172,7 +172,7 @@ checksum = "965ab7eb5f8f97d2a083c799f3a1b994fc397b2fe2da5d1da1626ce15a39f2b1"

dependencies = [

"proc-macro2",

"quote",

- "syn 2.0.32",

+ "syn 2.0.37",

]

[[package]]

@@ -235,9 +235,9 @@ checksum = "baf1de4339761588bc0619e3cbc0120ee582ebb74b53b4efbf79117bd2da40fd"

[[package]]

name = "chrono"

-version = "0.4.30"

+version = "0.4.31"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "defd4e7873dbddba6c7c91e199c7fcb946abc4a6a4ac3195400bcfb01b5de877"

+checksum = "7f2c685bad3eb3d45a01354cedb7d5faa66194d1d58ba6e267a8de788f79db38"

dependencies = [

"android-tzdata",

"iana-time-zone",

@@ -276,9 +276,9 @@ dependencies = [

[[package]]

name = "clap"

-version = "4.4.2"

+version = "4.4.4"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "6a13b88d2c62ff462f88e4a121f17a82c1af05693a2f192b5c38d14de73c19f6"

+checksum = "b1d7b8d5ec32af0fadc644bf1fd509a688c2103b185644bb1e29d164e0703136"

dependencies = [

"clap_builder",

"clap_derive",

@@ -286,9 +286,9 @@ dependencies = [

[[package]]

name = "clap_builder"

-version = "4.4.2"

+version = "4.4.4"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "2bb9faaa7c2ef94b2743a21f5a29e6f0010dff4caa69ac8e9d6cf8b6fa74da08"

+checksum = "5179bb514e4d7c2051749d8fcefa2ed6d06a9f4e6d69faf3805f5d80b8cf8d56"

dependencies = [

"anstream",

"anstyle",

@@ -305,7 +305,7 @@ dependencies = [

"heck",

"proc-macro2",

"quote",

- "syn 2.0.32",

+ "syn 2.0.37",

]

[[package]]

@@ -390,16 +390,6 @@ version = "1.1.2"

source = "registry+https://github.com/rust-lang/crates.io-index"

checksum = "7059fff8937831a9ae6f0fe4d658ffabf58f2ca96aa9dec1c889f936f705f216"

-[[package]]

-name = "crossbeam-channel"

-version = "0.5.8"

-source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "a33c2bf77f2df06183c3aa30d1e96c0695a313d4f9c453cc3762a6db39f99200"

-dependencies = [

- "cfg-if",

- "crossbeam-utils",

-]

-

[[package]]

name = "crossbeam-deque"

version = "0.8.3"

@@ -490,9 +480,9 @@ dependencies = [

[[package]]

name = "fastrand"

-version = "2.0.0"

+version = "2.0.1"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "6999dc1837253364c2ebb0704ba97994bd874e8f195d665c50b7548f6ea92764"

+checksum = "25cbce373ec4653f1a01a31e8a5e5ec0c622dc27ff9c4e6606eefef5cbbed4a5"

[[package]]

name = "fern"

@@ -581,9 +571,9 @@ checksum = "95505c38b4572b2d910cecb0281560f54b440a19336cbbcb27bf6ce6adc6f5a8"

[[package]]

name = "hermit-abi"

-version = "0.3.2"

+version = "0.3.3"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "443144c8cdadd93ebf52ddb4056d257f5b52c04d3c804e657d19eb73fc33668b"

+checksum = "d77f7ec81a6d05a3abb01ab6eb7590f6083d08449fe5a1c8b1e620283546ccb7"

[[package]]

name = "iana-time-zone"

@@ -610,9 +600,9 @@ dependencies = [

[[package]]

name = "indicatif"

-version = "0.17.6"

+version = "0.17.7"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "0b297dc40733f23a0e52728a58fa9489a5b7638a324932de16b41adc3ef80730"

+checksum = "fb28741c9db9a713d93deb3bb9515c20788cef5815265bee4980e87bde7e0f25"

dependencies = [

"console",

"instant",

@@ -691,9 +681,9 @@ dependencies = [

[[package]]

name = "libc"

-version = "0.2.147"

+version = "0.2.148"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "b4668fb0ea861c1df094127ac5f1da3409a82116a4ba74fca2e58ef927159bb3"

+checksum = "9cdc71e17332e86d2e1d38c1f99edcb6288ee11b815fb1a4b049eaa2114d369b"

[[package]]

name = "libm"

@@ -748,9 +738,9 @@ dependencies = [

[[package]]

name = "matrixmultiply"

-version = "0.3.7"

+version = "0.3.8"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "090126dc04f95dc0d1c1c91f61bdd474b3930ca064c1edc8a849da2c6cbe1e77"

+checksum = "7574c1cf36da4798ab73da5b215bbf444f50718207754cb522201d78d1cd0ff2"

dependencies = [

"autocfg",

"rawpointer",

@@ -895,16 +885,6 @@ dependencies = [

"libm",

]

-[[package]]

-name = "num_cpus"

-version = "1.16.0"

-source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "4161fcb6d602d4d2081af7c3a45852d875a03dd337a6bfdd6e06407b61342a43"

-dependencies = [

- "hermit-abi",

- "libc",

-]

-

[[package]]

name = "number_prefix"

version = "0.4.0"

@@ -1049,9 +1029,9 @@ checksum = "5b40af805b3121feab8a3c29f04d8ad262fa8e0561883e7653e024ae4479e6de"

[[package]]

name = "proc-macro2"

-version = "1.0.66"

+version = "1.0.67"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "18fb31db3f9bddb2ea821cde30a9f70117e3f119938b5ee630b7403aa6e2ead9"

+checksum = "3d433d9f1a3e8c1263d9456598b16fec66f4acc9a74dacffd35c7bb09b3a1328"

dependencies = [

"unicode-ident",

]

@@ -1134,9 +1114,9 @@ checksum = "60a357793950651c4ed0f3f52338f53b2f809f32d83a07f72909fa13e4c6c1e3"

[[package]]

name = "rayon"

-version = "1.7.0"

+version = "1.8.0"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "1d2df5196e37bcc87abebc0053e20787d73847bb33134a69841207dd0a47f03b"

+checksum = "9c27db03db7734835b3f53954b534c91069375ce6ccaa2e065441e07d9b6cdb1"

dependencies = [

"either",

"rayon-core",

@@ -1144,14 +1124,12 @@ dependencies = [

[[package]]

name = "rayon-core"

-version = "1.11.0"

+version = "1.12.0"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "4b8f95bd6966f5c87776639160a66bd8ab9895d9d4ab01ddba9fc60661aebe8d"

+checksum = "5ce3fb6ad83f861aac485e76e1985cd109d9a3713802152be56c3b1f0e0658ed"

dependencies = [

- "crossbeam-channel",

"crossbeam-deque",

"crossbeam-utils",

- "num_cpus",

]

[[package]]

@@ -1214,9 +1192,9 @@ dependencies = [

[[package]]

name = "rustix"

-version = "0.38.13"

+version = "0.38.14"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "d7db8590df6dfcd144d22afd1b83b36c21a18d7cbc1dc4bb5295a8712e9eb662"

+checksum = "747c788e9ce8e92b12cd485c49ddf90723550b654b32508f979b71a7b1ecda4f"

dependencies = [

"bitflags 2.4.0",

"errno",

@@ -1265,9 +1243,9 @@ dependencies = [

[[package]]

name = "semver"

-version = "1.0.18"

+version = "1.0.19"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "b0293b4b29daaf487284529cc2f5675b8e57c61f70167ba415a463651fd6a918"

+checksum = "ad977052201c6de01a8ef2aa3378c4bd23217a056337d1d6da40468d267a4fb0"

dependencies = [

"serde",

]

@@ -1289,14 +1267,14 @@ checksum = "4eca7ac642d82aa35b60049a6eccb4be6be75e599bd2e9adb5f875a737654af2"

dependencies = [

"proc-macro2",

"quote",

- "syn 2.0.32",

+ "syn 2.0.37",

]

[[package]]

name = "serde_json"

-version = "1.0.106"

+version = "1.0.107"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "2cc66a619ed80bf7a0f6b17dd063a84b88f6dea1813737cf469aef1d081142c2"

+checksum = "6b420ce6e3d8bd882e9b243c6eed35dbc9a6110c9769e74b584e0d68d1f20c65"

dependencies = [

"itoa",

"ryu",

@@ -1333,9 +1311,9 @@ dependencies = [

[[package]]

name = "smallvec"

-version = "1.11.0"

+version = "1.11.1"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "62bb4feee49fdd9f707ef802e22365a35de4b7b299de4763d44bfea899442ff9"

+checksum = "942b4a808e05215192e39f4ab80813e599068285906cc91aa64f923db842bd5a"

[[package]]

name = "spin"

@@ -1425,9 +1403,9 @@ dependencies = [

[[package]]

name = "syn"

-version = "2.0.32"

+version = "2.0.37"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "239814284fd6f1a4ffe4ca893952cdd93c224b6a1571c9a9eadd670295c0c9e2"

+checksum = "7303ef2c05cd654186cb250d29049a24840ca25d2747c25c0381c8d9e2f582e8"

dependencies = [

"proc-macro2",

"quote",

@@ -1464,7 +1442,7 @@ checksum = "49922ecae66cc8a249b77e68d1d0623c1b2c514f0060c27cdc68bd62a1219d35"

dependencies = [

"proc-macro2",

"quote",

- "syn 2.0.32",

+ "syn 2.0.37",

]

[[package]]

@@ -1489,9 +1467,9 @@ dependencies = [

[[package]]

name = "typenum"

-version = "1.16.0"

+version = "1.17.0"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "497961ef93d974e23eb6f433eb5fe1b7930b659f06d12dec6fc44a8f554c0bba"

+checksum = "42ff0bf0c66b8238c6f3b578df37d0b7848e55df8577b3f74f92a69acceeb825"

[[package]]

name = "ultraviolet"

@@ -1513,15 +1491,15 @@ dependencies = [

[[package]]

name = "unicode-ident"

-version = "1.0.11"

+version = "1.0.12"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "301abaae475aa91687eb82514b328ab47a211a533026cb25fc3e519b86adfc3c"

+checksum = "3354b9ac3fae1ff6755cb6db53683adb661634f67557942dea4facebec0fee4b"

[[package]]

name = "unicode-width"

-version = "0.1.10"

+version = "0.1.11"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "c0edd1e5b14653f783770bce4a4dabb4a5108a5370a5f5d8cfe8710c361f6c8b"

+checksum = "e51733f11c9c4f72aa0c160008246859e340b00807569a0da0e7a1079b27ba85"

[[package]]

name = "utf8parse"

@@ -1591,7 +1569,7 @@ dependencies = [

"once_cell",

"proc-macro2",

"quote",

- "syn 2.0.32",

+ "syn 2.0.37",

"wasm-bindgen-shared",

]

@@ -1613,7 +1591,7 @@ checksum = "54681b18a46765f095758388f2d0cf16eb8d4169b639ab575a8f5693af210c7b"

dependencies = [

"proc-macro2",

"quote",

- "syn 2.0.32",

+ "syn 2.0.37",

"wasm-bindgen-backend",

"wasm-bindgen-shared",

]

@@ -1662,9 +1640,9 @@ checksum = "ac3b87c63620426dd9b991e5ce0329eff545bccbbb34f3be09ff6fb6ab51b7b6"

[[package]]

name = "winapi-util"

-version = "0.1.5"

+version = "0.1.6"

source = "registry+https://github.com/rust-lang/crates.io-index"

-checksum = "70ec6ce85bb158151cae5e5c87f95a8e97d2c0c4b001223f33a334e3ce5de178"

+checksum = "f29e6f9198ba0d26b4c9f07dbe6f9ed633e1f3d5b8b414090084349e46a52596"

dependencies = [

"winapi",

]

diff --git a/README.md b/README.md

index 2abe987..8aea2dc 100644

--- a/README.md

+++ b/README.md

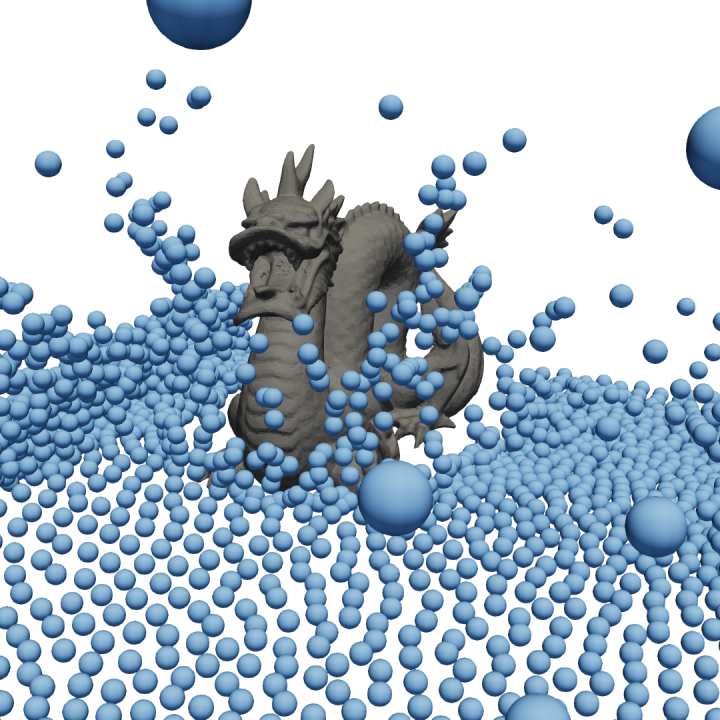

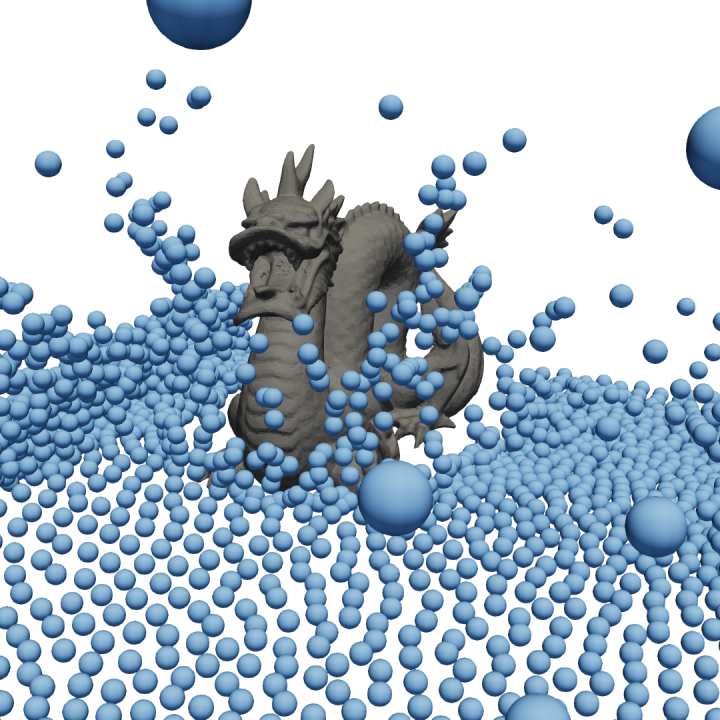

@@ -25,19 +25,27 @@ reconstructed from this particle data. The next image shows a reconstructed surf

with a "smoothing length" of `2.2` times the particles radius and a cell size of `1.1` times the particle radius. The

third image shows a finer reconstruction with a cell size of `0.45` times the particle radius. These surface meshes can

then be fed into 3D rendering software such as [Blender](https://www.blender.org/) to generate beautiful water animations.

-The result might look something like this (please excuse the lack of 3D rendering skills):

+The result might look something like this:

+Note: This animation does not show the recently added smoothing features of the tool, for more recent rendering see [this video](https://youtu.be/2bYvaUXlBQs).

+

+---

+

**Contents**

- [The `splashsurf` CLI](#the-splashsurf-cli)

- [Introduction](#introduction)

+ - [Domain decomposition](#domain-decomposition)

+ - [Octree-based decomposition](#octree-based-decomposition)

+ - [Subdomain grid-based decomposition](#subdomain-grid-based-decomposition)

- [Notes](#notes)

- [Installation](#installation)

- [Usage](#usage)

- [Recommended settings](#recommended-settings)

+ - [Weighted surface smoothing](#weighted-surface-smoothing)

- [Benchmark example](#benchmark-example)

- [Sequences of files](#sequences-of-files)

- [Input file formats](#input-file-formats)

@@ -53,34 +61,56 @@ The result might look something like this (please excuse the lack of 3D renderin

- [The `convert` subcommand](#the-convert-subcommand)

- [License](#license)

+

# The `splashsurf` CLI

The following sections mainly focus on the CLI of `splashsurf`. For more information on the library, see the [corresponding readme](https://github.com/InteractiveComputerGraphics/splashsurf/blob/main/splashsurf_lib) in the `splashsurf_lib` subfolder or the [`splashsurf_lib` crate](https://crates.io/crates/splashsurf_lib) on crates.io.

## Introduction

-This is a basic but high-performance implementation of a marching cubes based surface reconstruction for SPH fluid simulations (e.g performed with [SPlisHSPlasH](https://github.com/InteractiveComputerGraphics/SPlisHSPlasH)).

+This is CLI to run a fast marching cubes based surface reconstruction for SPH fluid simulations (e.g. performed with [SPlisHSPlasH](https://github.com/InteractiveComputerGraphics/SPlisHSPlasH)).

The output of this tool is the reconstructed triangle surface mesh of the fluid.

At the moment it supports computing normals on the surface using SPH gradients and interpolating scalar and vector particle attributes to the surface.

-No additional smoothing or decimation operations are currently implemented.

-As input, it supports reading particle positions from `.vtk`, `.bgeo`, `.ply`, `.json` and binary `.xyz` files (i.e. files containing a binary dump of a particle position array).

-In addition, required parameters are the kernel radius and particle radius (to compute the volume of particles) used for the original SPH simulation as well as the surface threshold.

-

-By default, a domain decomposition of the particle set is performed using octree-based subdivision.

-The implementation first computes the density of each particle using the typical SPH approach with a cubic kernel.

-This density is then evaluated or mapped onto a sparse grid using spatial hashing in the support radius of each particle.

-This implies that memory is only allocated in areas where the fluid density is non-zero. This is in contrast to a naive approach where the marching cubes background grid is allocated for the whole domain.

-The marching cubes reconstruction is performed only in the narrowband of grid cells where the density values cross the surface threshold. Cells completely in the interior of the fluid are skipped. For more details, please refer to the [readme of the library]((https://github.com/InteractiveComputerGraphics/splashsurf/blob/main/splashsurf_lib/README.md)).

+To get rid of the typical bumps from SPH simulations, it supports a weighted Laplacian smoothing approach [detailed below](#weighted-surface-smoothing).

+As input, it supports reading particle positions from `.vtk`/`.vtu`, `.bgeo`, `.ply`, `.json` and binary `.xyz` (i.e. files containing a binary dump of a particle position array) files.

+Required parameters to perform a reconstruction are the kernel radius and particle radius (to compute the volume of particles) used for the original SPH simulation as well as the marching cubes resolution (a default iso-surface threshold is pre-configured).

+

+## Domain decomposition

+

+A naive dense marching cubes reconstruction allocating a full 3D array over the entire fulid domain quickly becomes infeasible for larger simulations.

+Instead, one could use a global hashmap where only cubes that contain non-zero fluid density values are allocated.

+This approach is used in `splashsurf` if domain decomposition is disabled completely.

+However, the global hashmap approach does not lead to good cache locality and is not well suited for parallelization (even specialized parallel map implementations like [`dashmap`](https://github.com/xacrimon/dashmap) have their performance limitations).

+To improve on this situation `splashsurf` currently implements two domain decomposition approaches.

+

+### Octree-based decomposition

+The octree-based decomposition is currently the default approach if no other option is specified but will probably be replaced by the grid-based approach described below.

+For the octree-based decomposition an octree is built over all particles with an automatically determined target number of particles per leaf node.

+For each leaf node, a hashmap is used like outlined above.

+As each hashmap is smaller, cache locality is improved and due to the decomposition, each thread can work on its own local hashmap.

Finally, all surface patches are stitched together by walking the octree back up, resulting in a closed surface.

+Downsides of this approach are that the octree construction starting from the root and stitching back towards the root limit the amount of paralleism during some stages.

+

+### Subdomain grid-based decomposition

+

+Since version 0.10.0, `splashsurf` implements a new domain decomposition approach called the "subdomain grid" approach, toggeled with the `--subdomain-grid=on` flag.

+Here, the goal is to divide the fluid domain into subdomains with a fixed number of marching cubes cells, by default `64x64x64` cubes.

+For each subdomain a dense 3D array is allocated for the marching cubes cells.

+Of course, only subdomains that contain fluid particles are actually allocated.

+For subdomains that contain only a very small number of fluid particles (less th 5% of the largest subdomain) a hashmap is used instead to not waste too much storage.

+As most domains are dense however, the marching cubes triangulation per subdomain is very fast as it can make full use of cache locality and the entire procedure is trivially parallelizable.

+For the stitching we ensure that we perform floating point operations in the same order at the subdomain boundaries (this can be ensured without synchronization).

+If the field values on the subdomain boundaries are identical from both sides, the marching cubes triangulations will be topologically compatible and can be merged in a post-processing step that is also parallelizable.

+Overall, this approach should almost always be faster than the previous octree-based aproach.

+

## Notes

-For small numbers of fluid particles (i.e. in the low thousands or less) the multithreaded implementation may have worse performance due to the task based parallelism and the additional overhead of domain decomposition and stitching.

+For small numbers of fluid particles (i.e. in the low thousands or less) the domain decomposition implementation may have worse performance due to the task based parallelism and the additional overhead of domain decomposition and stitching.

In this case, you can try to disable the domain decomposition. The reconstruction will then use a global approach that is parallelized using thread-local hashmaps.

For larger quantities of particles the decomposition approach is expected to be always faster.

Due to the use of hash maps and multi-threading (if enabled), the output of this implementation is not deterministic.

-In the future, flags may be added to switch the internal data structures to use binary trees for debugging purposes.

As shown below, the tool can handle the output of large simulations.

However, it was not tested with a wide range of parameters and may not be totally robust against corner-cases or extreme parameters.

@@ -103,6 +133,29 @@ Good settings for the surface reconstruction depend on the original simulation a

- `surface-threshold`: a good value depends on the selected `particle-radius` and `smoothing-length` and can be used to counteract a fluid volume increase e.g. due to a larger particle radius. In combination with the other recommended values a threshold of `0.6` seemed to work well.

- `cube-size` usually should not be chosen larger than `1.0` to avoid artifacts (e.g. single particles decaying into rhomboids), start with a value in the range of `0.75` to `0.5` and decrease/increase it if the result is too coarse or the reconstruction takes too long.

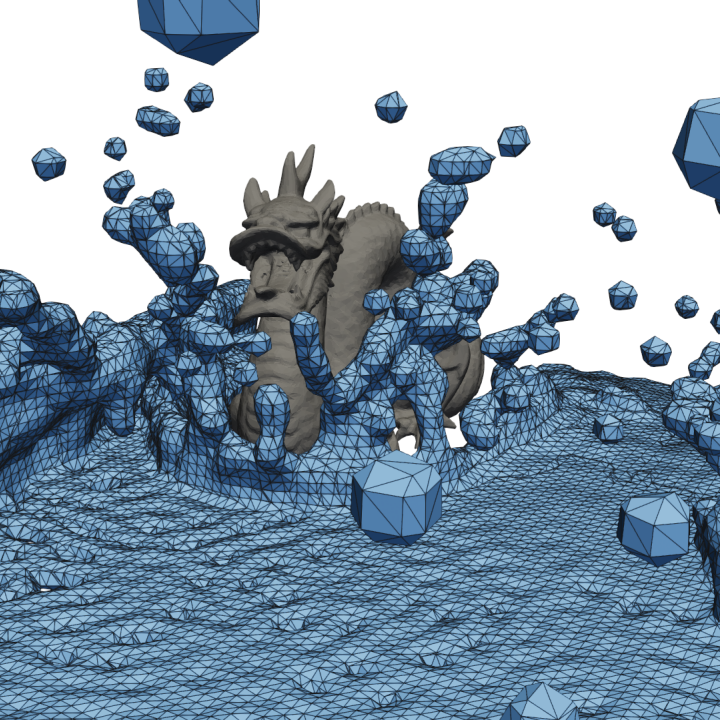

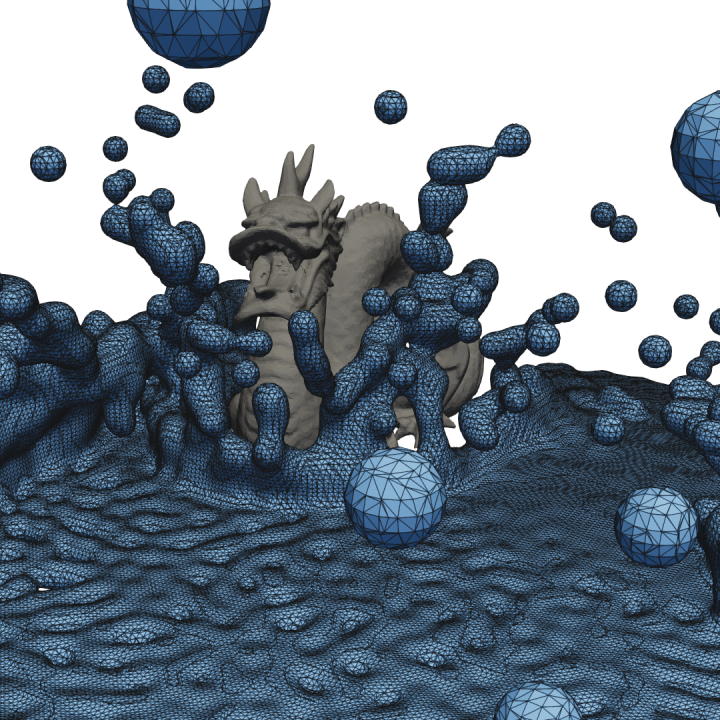

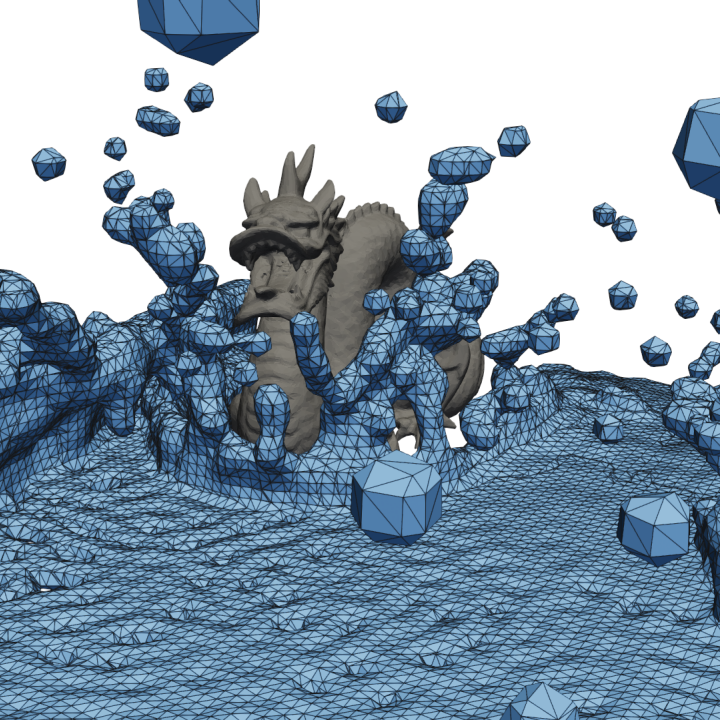

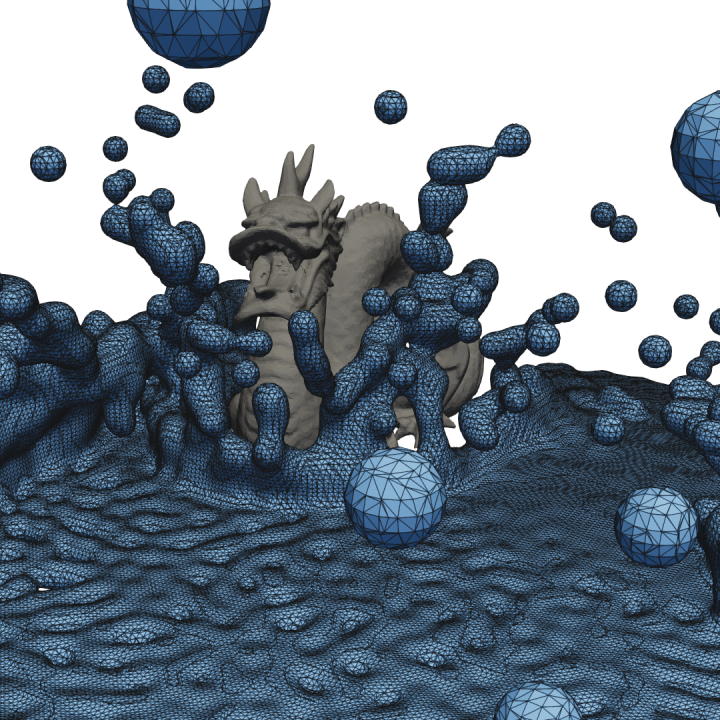

+### Weighted surface smoothing

+The CLI implements the paper ["Weighted Laplacian Smoothing for Surface Reconstruction of Particle-based Fluids" (Löschner, Böttcher, Jeske, Bender; 2023)](https://animation.rwth-aachen.de/publication/0583/) which proposes a fast smoothing approach to avoid typical bumpy surfaces while preventing loss of volume that typically occurs with simple smoothing methods.

+The following images show a rendering of a typical surface reconstruction (on the right) with visible bumps due to the particles compared to the same surface reconstruction with weighted smoothing applied (on the left):

+

+

+

+

+

+

+You can see this rendering in motion in [this video](https://youtu.be/2bYvaUXlBQs).

+To apply this smoothing, we recommend the following settings:

+ - `--mesh-smoothing-weights=on`: This enables the use of special weights during the smoothing process that preserve fluid details. For more information we refer to the [paper](https://animation.rwth-aachen.de/publication/0583/).

+ - `--mesh-smoothing-iters=25`: This enables smoothing of the output mesh. The individual iterations are relatively fast and 25 iterations appeared to strike a good balance between an initially bumpy surface and potential over-smoothing.

+ - `--mesh-cleanup=on`/`--decimate-barnacles=on`: On of the options should be used when applying smoothing, otherwise artifacts can appear on the surface (for more details see the paper). The `mesh-cleanup` flag enables a general purpose marching cubes mesh cleanup procedure that removes small sliver triangles everywhere on the mesh. The `decimate-barnacles` enables a more targeted decimation that only removes specific triangle configurations that are problematic for the smoothing. The former approach results in a "nicer" mesh overall but can be slower than the latter.

+ - `--normals-smoothing-iters=10`: If normals are being exported (with `--normals=on`), this results in an even smoother appearance during rendering.

+

+For the reconstruction parameters in conjunction with the weighted smoothing we recommend parameters close to the simulation parameters.

+That means selecting the same particle radius as in the simulation, a corresponding smoothing length (e.g. for SPlisHSPlasH a value of `2.0`), a surface-threshold between `0.6` and `0.7` and a cube size usually between `0.5` and `1.0`.

+

+A full invocation of the tool might look like this:

+```

+splashsurf reconstruct particles.vtk -r=0.025 -l=2.0 -c=0.5 -t=0.6 --subdomain-grid=on --mesh-cleanup=on --mesh-smoothing-weights=on --mesh-smoothing-iters=25 --normals=on --normals-smoothing-iters=10

+```

+

### Benchmark example

For example:

```

@@ -179,9 +232,19 @@ Note that the tool collects all existing filenames as soon as the command is inv

The first and last file of a sequences that should be processed can be specified with the `-s`/`--start-index` and/or `-e`/`--end-index` arguments.

By specifying the flag `--mt-files=on`, several files can be processed in parallel.

-If this is enabled, you should ideally also set `--mt-particles=off` as enabling both will probably degrade performance.

+If this is enabled, you should also set `--mt-particles=off` as enabling both will probably degrade performance.

The combination of `--mt-files=on` and `--mt-particles=off` can be faster if many files with only few particles have to be processed.

+The number of threads can be influenced using the `--num-threads`/`-n` argument or the `RAYON_NUM_THREADS` environment variable

+

+**NOTE:** Currently, some functions do not have a sequential implementation and always parallelize over the particles or the mesh/domain.

+This includes:

+ - the new "subdomain-grid" domain decomposition approach, as an alternative to the previous octree-based approach

+ - some post-processing functionality (interpolation of smoothing weights, interpolation of normals & other fluid attributes)

+

+Using the `--mt-particles=off` argument does not have an effect on these parts of the surface reconstruction.

+For now, it is therefore recommended to not parallelize over multiple files if this functionality is used.

+

## Input file formats

### VTK

@@ -242,16 +305,18 @@ The file format is inferred from the extension of output filename.

### The `reconstruct` command

```

-splashsurf-reconstruct (v0.9.3) - Reconstruct a surface from particle data

+splashsurf-reconstruct (v0.10.0) - Reconstruct a surface from particle data

Usage: splashsurf reconstruct [OPTIONS] --particle-radius --smoothing-length --cube-size

Options:

+ -q, --quiet Enable quiet mode (no output except for severe panic messages), overrides verbosity level

+ -v... Print more verbose output, use multiple "v"s for even more verbose output (-v, -vv)

-h, --help Print help

-V, --version Print version

Input/output:

- -o, --output-file Filename for writing the reconstructed surface to disk (default: "{original_filename}_surface.vtk")

+ -o, --output-file Filename for writing the reconstructed surface to disk (supported formats: VTK, PLY, OBJ, default: "{original_filename}_surface.vtk")

--output-dir Optional base directory for all output files (default: current working directory)

-s, --start-index Index of the first input file to process when processing a sequence of files (default: lowest index of the sequence)

-e, --end-index Index of the last input file to process when processing a sequence of files (default: highest index of the sequence)

@@ -268,39 +333,79 @@ Numerical reconstruction parameters:

The cube edge length used for marching cubes in multiplies of the particle radius, corresponds to the cell size of the implicit background grid

-t, --surface-threshold

The iso-surface threshold for the density, i.e. the normalized value of the reconstructed density level that indicates the fluid surface (in multiplies of the rest density) [default: 0.6]

- --domain-min

+ --particle-aabb-min

Lower corner of the domain where surface reconstruction should be performed (requires domain-max to be specified)

- --domain-max

+ --particle-aabb-max

Upper corner of the domain where surface reconstruction should be performed (requires domain-min to be specified)

Advanced parameters:

- -d, --double-precision= Whether to enable the use of double precision for all computations [default: off] [possible values: off, on]

- --mt-files= Flag to enable multi-threading to process multiple input files in parallel [default: off] [possible values: off, on]

- --mt-particles= Flag to enable multi-threading for a single input file by processing chunks of particles in parallel [default: on] [possible values: off, on]

+ -d, --double-precision= Enable the use of double precision for all computations [default: off] [possible values: off, on]

+ --mt-files= Enable multi-threading to process multiple input files in parallel (NOTE: Currently, the subdomain-grid domain decomposition approach and some post-processing functions including interpolation do not have sequential versions and therefore do not work well with this option enabled) [default: off] [possible values: off, on]

+ --mt-particles= Enable multi-threading for a single input file by processing chunks of particles in parallel [default: on] [possible values: off, on]

-n, --num-threads Set the number of threads for the worker thread pool

-Octree (domain decomposition) parameters:

+Domain decomposition (octree or grid) parameters:

+ --subdomain-grid=

+ Enable spatial decomposition using a regular grid-based approach [default: off] [possible values: off, on]

+ --subdomain-cubes

+ Each subdomain will be a cube consisting of this number of MC cube cells along each coordinate axis [default: 64]

--octree-decomposition=

- Whether to enable spatial decomposition using an octree (faster) instead of a global approach [default: on] [possible values: off, on]

+ Enable spatial decomposition using an octree (faster) instead of a global approach [default: on] [possible values: off, on]

--octree-stitch-subdomains=

- Whether to enable stitching of the disconnected local meshes resulting from the reconstruction when spatial decomposition is enabled (slower, but without stitching meshes will not be closed) [default: on] [possible values: off, on]

+ Enable stitching of the disconnected local meshes resulting from the reconstruction when spatial decomposition is enabled (slower, but without stitching meshes will not be closed) [default: on] [possible values: off, on]

--octree-max-particles

The maximum number of particles for leaf nodes of the octree, default is to compute it based on the number of threads and particles

--octree-ghost-margin-factor

Safety factor applied to the kernel compact support radius when it's used as a margin to collect ghost particles in the leaf nodes when performing the spatial decomposition

--octree-global-density=

- Whether to compute particle densities in a global step before domain decomposition (slower) [default: off] [possible values: off, on]

+ Enable computing particle densities in a global step before domain decomposition (slower) [default: off] [possible values: off, on]

--octree-sync-local-density=

- Whether to compute particle densities per subdomain but synchronize densities for ghost-particles (faster, recommended). Note: if both this and global particle density computation is disabled the ghost particle margin has to be increased to at least 2.0 to compute correct density values for ghost particles [default: on] [possible values: off, on]

+ Enable computing particle densities per subdomain but synchronize densities for ghost-particles (faster, recommended). Note: if both this and global particle density computation is disabled the ghost particle margin has to be increased to at least 2.0 to compute correct density values for ghost particles [default: on] [possible values: off, on]

-Interpolation:

+Interpolation & normals:

--normals=

- Whether to compute surface normals at the mesh vertices and write them to the output file [default: off] [possible values: off, on]

+ Enable omputing surface normals at the mesh vertices and write them to the output file [default: off] [possible values: off, on]

--sph-normals=

- Whether to compute the normals using SPH interpolation (smoother and more true to actual fluid surface, but slower) instead of just using area weighted triangle normals [default: on] [possible values: off, on]

+ Enable computing the normals using SPH interpolation instead of using the area weighted triangle normals [default: off] [possible values: off, on]

+ --normals-smoothing-iters

+ Number of smoothing iterations to run on the normal field if normal interpolation is enabled (disabled by default)

+ --output-raw-normals=

+ Enable writing raw normals without smoothing to the output mesh if normal smoothing is enabled [default: off] [possible values: off, on]

--interpolate-attributes

List of point attribute field names from the input file that should be interpolated to the reconstructed surface. Currently this is only supported for VTK and VTU input files

+Postprocessing:

+ --mesh-cleanup=

+ Enable MC specific mesh decimation/simplification which removes bad quality triangles typically generated by MC [default: off] [possible values: off, on]

+ --decimate-barnacles=

+ Enable decimation of some typical bad marching cubes triangle configurations (resulting in "barnacles" after Laplacian smoothing) [default: off] [possible values: off, on]

+ --keep-verts=

+ Enable keeping vertices without connectivity during decimation instead of filtering them out (faster and helps with debugging) [default: off] [possible values: off, on]

+ --mesh-smoothing-iters

+ Number of smoothing iterations to run on the reconstructed mesh

+ --mesh-smoothing-weights=

+ Enable feature weights for mesh smoothing if mesh smoothing enabled. Preserves isolated particles even under strong smoothing [default: off] [possible values: off, on]

+ --mesh-smoothing-weights-normalization

+ Normalization value from weighted number of neighbors to mesh smoothing weights [default: 13.0]

+ --output-smoothing-weights=

+ Enable writing the smoothing weights as a vertex attribute to the output mesh file [default: off] [possible values: off, on]

+ --generate-quads=

+ Enable trying to convert triangles to quads if they meet quality criteria [default: off] [possible values: off, on]

+ --quad-max-edge-diag-ratio

+ Maximum allowed ratio of quad edge lengths to its diagonals to merge two triangles to a quad (inverse is used for minimum) [default: 1.75]

+ --quad-max-normal-angle

+ Maximum allowed angle (in degrees) between triangle normals to merge them to a quad [default: 10]

+ --quad-max-interior-angle

+ Maximum allowed vertex interior angle (in degrees) inside of a quad to merge two triangles to a quad [default: 135]

+ --mesh-aabb-min

+ Lower corner of the bounding-box for the surface mesh, triangles completely outside are removed (requires mesh-aabb-max to be specified)

+ --mesh-aabb-max

+ Upper corner of the bounding-box for the surface mesh, triangles completely outside are removed (requires mesh-aabb-min to be specified)

+ --mesh-aabb-clamp-verts=

+ Enable clamping of vertices outside of the specified mesh AABB to the AABB (only has an effect if mesh-aabb-min/max are specified) [default: off] [possible values: off, on]

+ --output-raw-mesh=

+ Enable writing the raw reconstructed mesh before applying any post-processing steps [default: off] [possible values: off, on]

+

Debug options:

--output-dm-points

Optional filename for writing the point cloud representation of the intermediate density map to disk

@@ -309,7 +414,13 @@ Debug options:

--output-octree

Optional filename for writing the octree used to partition the particles to disk

--check-mesh=

- Whether to check the final mesh for topological problems such as holes (note that when stitching is disabled this will lead to a lot of reported problems) [default: off] [possible values: off, on]

+ Enable checking the final mesh for holes and non-manifold edges and vertices [default: off] [possible values: off, on]

+ --check-mesh-closed=

+ Enable checking the final mesh for holes [default: off] [possible values: off, on]

+ --check-mesh-manifold=

+ Enable checking the final mesh for non-manifold edges and vertices [default: off] [possible values: off, on]

+ --check-mesh-debug=

+ Enable debug output for the check-mesh operations (has no effect if no other check-mesh option is enabled) [default: off] [possible values: off, on]

```

### The `convert` subcommand

@@ -343,6 +454,6 @@ Options:

# License

-For license information of this project, see the LICENSE file.

+For license information of this project, see the [LICENSE](LICENSE) file.

The splashsurf logo is based on two graphics ([1](https://www.svgrepo.com/svg/295647/wave), [2](https://www.svgrepo.com/svg/295652/surfboard-surfboard)) published on SVG Repo under a CC0 ("No Rights Reserved") license.

The dragon model shown in the images on this page are part of the ["Stanford 3D Scanning Repository"](https://graphics.stanford.edu/data/3Dscanrep/).

diff --git a/data/icosphere.obj b/data/icosphere.obj

new file mode 100644

index 0000000..b374c99

--- /dev/null

+++ b/data/icosphere.obj

@@ -0,0 +1,126 @@

+# Blender 3.5.0

+# www.blender.org

+o Icosphere

+v 0.000000 -1.000000 0.000000

+v 0.723607 -0.447220 0.525725

+v -0.276388 -0.447220 0.850649

+v -0.894426 -0.447216 0.000000

+v -0.276388 -0.447220 -0.850649

+v 0.723607 -0.447220 -0.525725

+v 0.276388 0.447220 0.850649

+v -0.723607 0.447220 0.525725

+v -0.723607 0.447220 -0.525725

+v 0.276388 0.447220 -0.850649

+v 0.894426 0.447216 0.000000

+v 0.000000 1.000000 0.000000

+v -0.162456 -0.850654 0.499995

+v 0.425323 -0.850654 0.309011

+v 0.262869 -0.525738 0.809012

+v 0.850648 -0.525736 0.000000

+v 0.425323 -0.850654 -0.309011

+v -0.525730 -0.850652 0.000000

+v -0.688189 -0.525736 0.499997

+v -0.162456 -0.850654 -0.499995

+v -0.688189 -0.525736 -0.499997

+v 0.262869 -0.525738 -0.809012

+v 0.951058 0.000000 0.309013

+v 0.951058 0.000000 -0.309013

+v 0.000000 0.000000 1.000000

+v 0.587786 0.000000 0.809017

+v -0.951058 0.000000 0.309013

+v -0.587786 0.000000 0.809017

+v -0.587786 0.000000 -0.809017

+v -0.951058 0.000000 -0.309013

+v 0.587786 0.000000 -0.809017

+v 0.000000 0.000000 -1.000000

+v 0.688189 0.525736 0.499997

+v -0.262869 0.525738 0.809012

+v -0.850648 0.525736 0.000000

+v -0.262869 0.525738 -0.809012

+v 0.688189 0.525736 -0.499997

+v 0.162456 0.850654 0.499995

+v 0.525730 0.850652 0.000000

+v -0.425323 0.850654 0.309011

+v -0.425323 0.850654 -0.309011

+v 0.162456 0.850654 -0.499995

+s 0

+f 1 14 13

+f 2 14 16

+f 1 13 18

+f 1 18 20

+f 1 20 17

+f 2 16 23

+f 3 15 25

+f 4 19 27

+f 5 21 29

+f 6 22 31

+f 2 23 26

+f 3 25 28

+f 4 27 30

+f 5 29 32

+f 6 31 24

+f 7 33 38

+f 8 34 40

+f 9 35 41

+f 10 36 42

+f 11 37 39

+f 39 42 12

+f 39 37 42

+f 37 10 42

+f 42 41 12

+f 42 36 41

+f 36 9 41

+f 41 40 12

+f 41 35 40

+f 35 8 40

+f 40 38 12

+f 40 34 38

+f 34 7 38

+f 38 39 12

+f 38 33 39

+f 33 11 39

+f 24 37 11

+f 24 31 37

+f 31 10 37

+f 32 36 10

+f 32 29 36

+f 29 9 36

+f 30 35 9

+f 30 27 35

+f 27 8 35

+f 28 34 8

+f 28 25 34

+f 25 7 34

+f 26 33 7

+f 26 23 33

+f 23 11 33

+f 31 32 10

+f 31 22 32

+f 22 5 32

+f 29 30 9

+f 29 21 30

+f 21 4 30

+f 27 28 8

+f 27 19 28

+f 19 3 28

+f 25 26 7

+f 25 15 26

+f 15 2 26

+f 23 24 11

+f 23 16 24

+f 16 6 24

+f 17 22 6

+f 17 20 22

+f 20 5 22

+f 20 21 5

+f 20 18 21

+f 18 4 21

+f 18 19 4

+f 18 13 19

+f 13 3 19

+f 16 17 6

+f 16 14 17

+f 14 1 17

+f 13 15 3

+f 13 14 15

+f 14 2 15

diff --git a/data/icosphere_normals.obj b/data/icosphere_normals.obj

new file mode 100644

index 0000000..5fadb4f

--- /dev/null

+++ b/data/icosphere_normals.obj

@@ -0,0 +1,206 @@

+# Blender 3.5.0

+# www.blender.org

+o Icosphere

+v 0.000000 -1.000000 0.000000

+v 0.723607 -0.447220 0.525725

+v -0.276388 -0.447220 0.850649

+v -0.894426 -0.447216 0.000000

+v -0.276388 -0.447220 -0.850649

+v 0.723607 -0.447220 -0.525725

+v 0.276388 0.447220 0.850649

+v -0.723607 0.447220 0.525725

+v -0.723607 0.447220 -0.525725

+v 0.276388 0.447220 -0.850649

+v 0.894426 0.447216 0.000000

+v 0.000000 1.000000 0.000000

+v -0.162456 -0.850654 0.499995

+v 0.425323 -0.850654 0.309011

+v 0.262869 -0.525738 0.809012

+v 0.850648 -0.525736 0.000000

+v 0.425323 -0.850654 -0.309011

+v -0.525730 -0.850652 0.000000

+v -0.688189 -0.525736 0.499997

+v -0.162456 -0.850654 -0.499995

+v -0.688189 -0.525736 -0.499997

+v 0.262869 -0.525738 -0.809012

+v 0.951058 0.000000 0.309013

+v 0.951058 0.000000 -0.309013

+v 0.000000 0.000000 1.000000

+v 0.587786 0.000000 0.809017

+v -0.951058 0.000000 0.309013

+v -0.587786 0.000000 0.809017

+v -0.587786 0.000000 -0.809017

+v -0.951058 0.000000 -0.309013

+v 0.587786 0.000000 -0.809017

+v 0.000000 0.000000 -1.000000

+v 0.688189 0.525736 0.499997

+v -0.262869 0.525738 0.809012

+v -0.850648 0.525736 0.000000

+v -0.262869 0.525738 -0.809012

+v 0.688189 0.525736 -0.499997

+v 0.162456 0.850654 0.499995

+v 0.525730 0.850652 0.000000

+v -0.425323 0.850654 0.309011

+v -0.425323 0.850654 -0.309011

+v 0.162456 0.850654 -0.499995

+vn 0.1024 -0.9435 0.3151

+vn 0.7002 -0.6617 0.2680

+vn -0.2680 -0.9435 0.1947

+vn -0.2680 -0.9435 -0.1947

+vn 0.1024 -0.9435 -0.3151

+vn 0.9050 -0.3304 0.2680

+vn 0.0247 -0.3304 0.9435

+vn -0.8897 -0.3304 0.3151

+vn -0.5746 -0.3304 -0.7488

+vn 0.5346 -0.3304 -0.7779

+vn 0.8026 -0.1256 0.5831

+vn -0.3066 -0.1256 0.9435

+vn -0.9921 -0.1256 -0.0000

+vn -0.3066 -0.1256 -0.9435

+vn 0.8026 -0.1256 -0.5831

+vn 0.4089 0.6617 0.6284

+vn -0.4713 0.6617 0.5831

+vn -0.7002 0.6617 -0.2680

+vn 0.0385 0.6617 -0.7488

+vn 0.7240 0.6617 -0.1947

+vn 0.2680 0.9435 -0.1947

+vn 0.4911 0.7947 -0.3568

+vn 0.4089 0.6617 -0.6284

+vn -0.1024 0.9435 -0.3151

+vn -0.1876 0.7947 -0.5773

+vn -0.4713 0.6617 -0.5831

+vn -0.3313 0.9435 -0.0000

+vn -0.6071 0.7947 -0.0000

+vn -0.7002 0.6617 0.2680

+vn -0.1024 0.9435 0.3151

+vn -0.1876 0.7947 0.5773

+vn 0.0385 0.6617 0.7488

+vn 0.2680 0.9435 0.1947

+vn 0.4911 0.7947 0.3568

+vn 0.7240 0.6617 0.1947

+vn 0.8897 0.3304 -0.3151

+vn 0.7947 0.1876 -0.5773

+vn 0.5746 0.3304 -0.7488

+vn -0.0247 0.3304 -0.9435

+vn -0.3035 0.1876 -0.9342

+vn -0.5346 0.3304 -0.7779

+vn -0.9050 0.3304 -0.2680

+vn -0.9822 0.1876 -0.0000

+vn -0.9050 0.3304 0.2680

+vn -0.5346 0.3304 0.7779

+vn -0.3035 0.1876 0.9342

+vn -0.0247 0.3304 0.9435

+vn 0.5746 0.3304 0.7488

+vn 0.7947 0.1876 0.5773

+vn 0.8897 0.3304 0.3151

+vn 0.3066 0.1256 -0.9435

+vn 0.3035 -0.1876 -0.9342

+vn 0.0247 -0.3304 -0.9435

+vn -0.8026 0.1256 -0.5831

+vn -0.7947 -0.1876 -0.5773

+vn -0.8897 -0.3304 -0.3151

+vn -0.8026 0.1256 0.5831

+vn -0.7947 -0.1876 0.5773

+vn -0.5746 -0.3304 0.7488

+vn 0.3066 0.1256 0.9435

+vn 0.3035 -0.1876 0.9342

+vn 0.5346 -0.3304 0.7779

+vn 0.9921 0.1256 -0.0000

+vn 0.9822 -0.1876 -0.0000

+vn 0.9050 -0.3304 -0.2680

+vn 0.4713 -0.6617 -0.5831

+vn 0.1876 -0.7947 -0.5773

+vn -0.0385 -0.6617 -0.7488

+vn -0.4089 -0.6617 -0.6284

+vn -0.4911 -0.7947 -0.3568

+vn -0.7240 -0.6617 -0.1947

+vn -0.7240 -0.6617 0.1947

+vn -0.4911 -0.7947 0.3568

+vn -0.4089 -0.6617 0.6284

+vn 0.7002 -0.6617 -0.2680

+vn 0.6071 -0.7947 -0.0000

+vn 0.3313 -0.9435 -0.0000

+vn -0.0385 -0.6617 0.7488

+vn 0.1876 -0.7947 0.5773

+vn 0.4713 -0.6617 0.5831

+s 0

+f 1//1 14//1 13//1

+f 2//2 14//2 16//2

+f 1//3 13//3 18//3

+f 1//4 18//4 20//4

+f 1//5 20//5 17//5

+f 2//6 16//6 23//6

+f 3//7 15//7 25//7

+f 4//8 19//8 27//8

+f 5//9 21//9 29//9

+f 6//10 22//10 31//10

+f 2//11 23//11 26//11

+f 3//12 25//12 28//12

+f 4//13 27//13 30//13

+f 5//14 29//14 32//14

+f 6//15 31//15 24//15

+f 7//16 33//16 38//16

+f 8//17 34//17 40//17

+f 9//18 35//18 41//18

+f 10//19 36//19 42//19

+f 11//20 37//20 39//20

+f 39//21 42//21 12//21

+f 39//22 37//22 42//22

+f 37//23 10//23 42//23

+f 42//24 41//24 12//24

+f 42//25 36//25 41//25

+f 36//26 9//26 41//26

+f 41//27 40//27 12//27

+f 41//28 35//28 40//28

+f 35//29 8//29 40//29

+f 40//30 38//30 12//30

+f 40//31 34//31 38//31

+f 34//32 7//32 38//32

+f 38//33 39//33 12//33

+f 38//34 33//34 39//34

+f 33//35 11//35 39//35

+f 24//36 37//36 11//36

+f 24//37 31//37 37//37

+f 31//38 10//38 37//38

+f 32//39 36//39 10//39

+f 32//40 29//40 36//40

+f 29//41 9//41 36//41

+f 30//42 35//42 9//42

+f 30//43 27//43 35//43

+f 27//44 8//44 35//44

+f 28//45 34//45 8//45

+f 28//46 25//46 34//46

+f 25//47 7//47 34//47

+f 26//48 33//48 7//48

+f 26//49 23//49 33//49

+f 23//50 11//50 33//50

+f 31//51 32//51 10//51

+f 31//52 22//52 32//52

+f 22//53 5//53 32//53

+f 29//54 30//54 9//54

+f 29//55 21//55 30//55

+f 21//56 4//56 30//56

+f 27//57 28//57 8//57

+f 27//58 19//58 28//58

+f 19//59 3//59 28//59

+f 25//60 26//60 7//60

+f 25//61 15//61 26//61

+f 15//62 2//62 26//62

+f 23//63 24//63 11//63

+f 23//64 16//64 24//64

+f 16//65 6//65 24//65

+f 17//66 22//66 6//66

+f 17//67 20//67 22//67

+f 20//68 5//68 22//68

+f 20//69 21//69 5//69

+f 20//70 18//70 21//70

+f 18//71 4//71 21//71

+f 18//72 19//72 4//72

+f 18//73 13//73 19//73

+f 13//74 3//74 19//74

+f 16//75 17//75 6//75

+f 16//76 14//76 17//76

+f 14//77 1//77 17//77

+f 13//78 15//78 3//78

+f 13//79 14//79 15//79

+f 14//80 2//80 15//80

diff --git a/data/plane.obj b/data/plane.obj

new file mode 100644

index 0000000..98e138e

--- /dev/null

+++ b/data/plane.obj

@@ -0,0 +1,1245 @@

+# Blender 3.5.0

+# www.blender.org

+o Grid

+v -1.000000 0.000000 1.000000

+v -0.900000 0.000000 1.000000

+v -0.800000 0.000000 1.000000

+v -0.700000 0.000000 1.000000

+v -0.600000 0.000000 1.000000

+v -0.500000 0.000000 1.000000

+v -0.400000 0.000000 1.000000

+v -0.300000 0.000000 1.000000

+v -0.200000 0.000000 1.000000

+v -0.100000 0.000000 1.000000

+v 0.000000 0.000000 1.000000

+v 0.100000 0.000000 1.000000

+v 0.200000 0.000000 1.000000

+v 0.300000 0.000000 1.000000

+v 0.400000 0.000000 1.000000

+v 0.500000 0.000000 1.000000

+v 0.600000 0.000000 1.000000

+v 0.700000 0.000000 1.000000

+v 0.800000 0.000000 1.000000

+v 0.900000 0.000000 1.000000

+v 1.000000 0.000000 1.000000

+v -1.000000 0.000000 0.900000

+v -0.900000 0.000000 0.900000

+v -0.800000 0.000000 0.900000

+v -0.700000 0.000000 0.900000

+v -0.600000 0.000000 0.900000

+v -0.500000 0.000000 0.900000

+v -0.400000 0.000000 0.900000

+v -0.300000 0.000000 0.900000

+v -0.200000 0.000000 0.900000

+v -0.100000 0.000000 0.900000

+v 0.000000 0.000000 0.900000

+v 0.100000 0.000000 0.900000

+v 0.200000 0.000000 0.900000

+v 0.300000 0.000000 0.900000

+v 0.400000 0.000000 0.900000

+v 0.500000 0.000000 0.900000

+v 0.600000 0.000000 0.900000

+v 0.700000 0.000000 0.900000

+v 0.800000 0.000000 0.900000

+v 0.900000 0.000000 0.900000

+v 1.000000 0.000000 0.900000

+v -1.000000 0.000000 0.800000

+v -0.900000 0.000000 0.800000

+v -0.800000 0.000000 0.800000

+v -0.700000 0.000000 0.800000

+v -0.600000 0.000000 0.800000

+v -0.500000 0.000000 0.800000

+v -0.400000 0.000000 0.800000

+v -0.300000 0.000000 0.800000

+v -0.200000 0.000000 0.800000

+v -0.100000 0.000000 0.800000

+v 0.000000 0.000000 0.800000

+v 0.100000 0.000000 0.800000

+v 0.200000 0.000000 0.800000

+v 0.300000 0.000000 0.800000

+v 0.400000 0.000000 0.800000

+v 0.500000 0.000000 0.800000

+v 0.600000 0.000000 0.800000

+v 0.700000 0.000000 0.800000

+v 0.800000 0.000000 0.800000

+v 0.900000 0.000000 0.800000

+v 1.000000 0.000000 0.800000

+v -1.000000 0.000000 0.700000

+v -0.900000 0.000000 0.700000

+v -0.800000 0.000000 0.700000

+v -0.700000 0.000000 0.700000

+v -0.600000 0.000000 0.700000

+v -0.500000 0.000000 0.700000

+v -0.400000 0.000000 0.700000

+v -0.300000 0.000000 0.700000

+v -0.200000 0.000000 0.700000

+v -0.100000 0.000000 0.700000

+v 0.000000 0.000000 0.700000

+v 0.100000 0.000000 0.700000

+v 0.200000 0.000000 0.700000

+v 0.300000 0.000000 0.700000

+v 0.400000 0.000000 0.700000

+v 0.500000 0.000000 0.700000

+v 0.600000 0.000000 0.700000

+v 0.700000 0.000000 0.700000

+v 0.800000 0.000000 0.700000

+v 0.900000 0.000000 0.700000

+v 1.000000 0.000000 0.700000

+v -1.000000 0.000000 0.600000

+v -0.900000 0.000000 0.600000

+v -0.800000 0.000000 0.600000

+v -0.700000 0.000000 0.600000

+v -0.600000 0.000000 0.600000

+v -0.500000 0.000000 0.600000

+v -0.400000 0.000000 0.600000

+v -0.300000 0.000000 0.600000

+v -0.200000 0.000000 0.600000

+v -0.100000 0.000000 0.600000

+v 0.000000 0.000000 0.600000

+v 0.100000 0.000000 0.600000

+v 0.200000 0.000000 0.600000

+v 0.300000 0.000000 0.600000

+v 0.400000 0.000000 0.600000

+v 0.500000 0.000000 0.600000

+v 0.600000 0.000000 0.600000

+v 0.700000 0.000000 0.600000

+v 0.800000 0.000000 0.600000

+v 0.900000 0.000000 0.600000

+v 1.000000 0.000000 0.600000

+v -1.000000 0.000000 0.500000

+v -0.900000 0.000000 0.500000

+v -0.800000 0.000000 0.500000

+v -0.700000 0.000000 0.500000

+v -0.600000 0.000000 0.500000

+v -0.500000 0.000000 0.500000

+v -0.400000 0.000000 0.500000

+v -0.300000 0.000000 0.500000

+v -0.200000 0.000000 0.500000

+v -0.100000 0.000000 0.500000

+v 0.000000 0.000000 0.500000

+v 0.100000 0.000000 0.500000

+v 0.200000 0.000000 0.500000

+v 0.300000 0.000000 0.500000

+v 0.400000 0.000000 0.500000

+v 0.500000 0.000000 0.500000

+v 0.600000 0.000000 0.500000

+v 0.700000 0.000000 0.500000

+v 0.800000 0.000000 0.500000

+v 0.900000 0.000000 0.500000

+v 1.000000 0.000000 0.500000

+v -1.000000 0.000000 0.400000

+v -0.900000 0.000000 0.400000

+v -0.800000 0.000000 0.400000

+v -0.700000 0.000000 0.400000

+v -0.600000 0.000000 0.400000

+v -0.500000 0.000000 0.400000

+v -0.400000 0.000000 0.400000

+v -0.300000 0.000000 0.400000

+v -0.200000 0.000000 0.400000

+v -0.100000 0.000000 0.400000

+v 0.000000 0.000000 0.400000

+v 0.100000 0.000000 0.400000

+v 0.200000 0.000000 0.400000

+v 0.300000 0.000000 0.400000

+v 0.400000 0.000000 0.400000

+v 0.500000 0.000000 0.400000

+v 0.600000 0.000000 0.400000

+v 0.700000 0.000000 0.400000

+v 0.800000 0.000000 0.400000

+v 0.900000 0.000000 0.400000

+v 1.000000 0.000000 0.400000

+v -1.000000 0.000000 0.300000

+v -0.900000 0.000000 0.300000

+v -0.800000 0.000000 0.300000

+v -0.700000 0.000000 0.300000

+v -0.600000 0.000000 0.300000

+v -0.500000 0.000000 0.300000

+v -0.400000 0.000000 0.300000

+v -0.300000 0.000000 0.300000

+v -0.200000 0.000000 0.300000

+v -0.100000 0.000000 0.300000

+v 0.000000 0.000000 0.300000

+v 0.100000 0.000000 0.300000

+v 0.200000 0.000000 0.300000

+v 0.300000 0.000000 0.300000

+v 0.400000 0.000000 0.300000

+v 0.500000 0.000000 0.300000

+v 0.600000 0.000000 0.300000

+v 0.700000 0.000000 0.300000

+v 0.800000 0.000000 0.300000

+v 0.900000 0.000000 0.300000

+v 1.000000 0.000000 0.300000

+v -1.000000 0.000000 0.200000

+v -0.900000 0.000000 0.200000

+v -0.800000 0.000000 0.200000

+v -0.700000 0.000000 0.200000

+v -0.600000 0.000000 0.200000

+v -0.500000 0.000000 0.200000

+v -0.400000 0.000000 0.200000

+v -0.300000 0.000000 0.200000

+v -0.200000 0.000000 0.200000

+v -0.100000 0.000000 0.200000

+v 0.000000 0.000000 0.200000

+v 0.100000 0.000000 0.200000

+v 0.200000 0.000000 0.200000

+v 0.300000 0.000000 0.200000

+v 0.400000 0.000000 0.200000

+v 0.500000 0.000000 0.200000

+v 0.600000 0.000000 0.200000

+v 0.700000 0.000000 0.200000

+v 0.800000 0.000000 0.200000

+v 0.900000 0.000000 0.200000

+v 1.000000 0.000000 0.200000

+v -1.000000 0.000000 0.100000

+v -0.900000 0.000000 0.100000

+v -0.800000 0.000000 0.100000

+v -0.700000 0.000000 0.100000

+v -0.600000 0.000000 0.100000

+v -0.500000 0.000000 0.100000

+v -0.400000 0.000000 0.100000

+v -0.300000 0.000000 0.100000

+v -0.200000 0.000000 0.100000

+v -0.100000 0.000000 0.100000

+v 0.000000 0.000000 0.100000

+v 0.100000 0.000000 0.100000

+v 0.200000 0.000000 0.100000

+v 0.300000 0.000000 0.100000

+v 0.400000 0.000000 0.100000

+v 0.500000 0.000000 0.100000

+v 0.600000 0.000000 0.100000

+v 0.700000 0.000000 0.100000

+v 0.800000 0.000000 0.100000

+v 0.900000 0.000000 0.100000

+v 1.000000 0.000000 0.100000

+v -1.000000 0.000000 0.000000

+v -0.900000 0.000000 0.000000

+v -0.800000 0.000000 0.000000

+v -0.700000 0.000000 0.000000

+v -0.600000 0.000000 0.000000

+v -0.500000 0.000000 0.000000

+v -0.400000 0.000000 0.000000

+v -0.300000 0.000000 0.000000

+v -0.200000 0.000000 0.000000

+v -0.100000 0.000000 0.000000

+v 0.000000 0.000000 0.000000

+v 0.100000 0.000000 0.000000

+v 0.200000 0.000000 0.000000

+v 0.300000 0.000000 0.000000

+v 0.400000 0.000000 0.000000

+v 0.500000 0.000000 0.000000

+v 0.600000 0.000000 0.000000

+v 0.700000 0.000000 0.000000

+v 0.800000 0.000000 0.000000

+v 0.900000 0.000000 0.000000

+v 1.000000 0.000000 0.000000

+v -1.000000 0.000000 -0.100000

+v -0.900000 0.000000 -0.100000

+v -0.800000 0.000000 -0.100000

+v -0.700000 0.000000 -0.100000

+v -0.600000 0.000000 -0.100000

+v -0.500000 0.000000 -0.100000

+v -0.400000 0.000000 -0.100000

+v -0.300000 0.000000 -0.100000

+v -0.200000 0.000000 -0.100000

+v -0.100000 0.000000 -0.100000

+v 0.000000 0.000000 -0.100000

+v 0.100000 0.000000 -0.100000

+v 0.200000 0.000000 -0.100000

+v 0.300000 0.000000 -0.100000

+v 0.400000 0.000000 -0.100000

+v 0.500000 0.000000 -0.100000

+v 0.600000 0.000000 -0.100000

+v 0.700000 0.000000 -0.100000

+v 0.800000 0.000000 -0.100000

+v 0.900000 0.000000 -0.100000

+v 1.000000 0.000000 -0.100000

+v -1.000000 0.000000 -0.200000

+v -0.900000 0.000000 -0.200000

+v -0.800000 0.000000 -0.200000

+v -0.700000 0.000000 -0.200000

+v -0.600000 0.000000 -0.200000

+v -0.500000 0.000000 -0.200000

+v -0.400000 0.000000 -0.200000

+v -0.300000 0.000000 -0.200000

+v -0.200000 0.000000 -0.200000

+v -0.100000 0.000000 -0.200000

+v 0.000000 0.000000 -0.200000

+v 0.100000 0.000000 -0.200000

+v 0.200000 0.000000 -0.200000

+v 0.300000 0.000000 -0.200000

+v 0.400000 0.000000 -0.200000

+v 0.500000 0.000000 -0.200000

+v 0.600000 0.000000 -0.200000

+v 0.700000 0.000000 -0.200000

+v 0.800000 0.000000 -0.200000

+v 0.900000 0.000000 -0.200000

+v 1.000000 0.000000 -0.200000

+v -1.000000 0.000000 -0.300000

+v -0.900000 0.000000 -0.300000

+v -0.800000 0.000000 -0.300000

+v -0.700000 0.000000 -0.300000

+v -0.600000 0.000000 -0.300000

+v -0.500000 0.000000 -0.300000

+v -0.400000 0.000000 -0.300000

+v -0.300000 0.000000 -0.300000

+v -0.200000 0.000000 -0.300000

+v -0.100000 0.000000 -0.300000

+v 0.000000 0.000000 -0.300000

+v 0.100000 0.000000 -0.300000

+v 0.200000 0.000000 -0.300000

+v 0.300000 0.000000 -0.300000

+v 0.400000 0.000000 -0.300000

+v 0.500000 0.000000 -0.300000

+v 0.600000 0.000000 -0.300000

+v 0.700000 0.000000 -0.300000

+v 0.800000 0.000000 -0.300000

+v 0.900000 0.000000 -0.300000

+v 1.000000 0.000000 -0.300000

+v -1.000000 0.000000 -0.400000

+v -0.900000 0.000000 -0.400000

+v -0.800000 0.000000 -0.400000

+v -0.700000 0.000000 -0.400000

+v -0.600000 0.000000 -0.400000

+v -0.500000 0.000000 -0.400000

+v -0.400000 0.000000 -0.400000

+v -0.300000 0.000000 -0.400000

+v -0.200000 0.000000 -0.400000

+v -0.100000 0.000000 -0.400000

+v 0.000000 0.000000 -0.400000

+v 0.100000 0.000000 -0.400000

+v 0.200000 0.000000 -0.400000

+v 0.300000 0.000000 -0.400000

+v 0.400000 0.000000 -0.400000

+v 0.500000 0.000000 -0.400000

+v 0.600000 0.000000 -0.400000

+v 0.700000 0.000000 -0.400000

+v 0.800000 0.000000 -0.400000

+v 0.900000 0.000000 -0.400000

+v 1.000000 0.000000 -0.400000

+v -1.000000 0.000000 -0.500000

+v -0.900000 0.000000 -0.500000

+v -0.800000 0.000000 -0.500000

+v -0.700000 0.000000 -0.500000

+v -0.600000 0.000000 -0.500000

+v -0.500000 0.000000 -0.500000

+v -0.400000 0.000000 -0.500000

+v -0.300000 0.000000 -0.500000

+v -0.200000 0.000000 -0.500000

+v -0.100000 0.000000 -0.500000

+v 0.000000 0.000000 -0.500000

+v 0.100000 0.000000 -0.500000

+v 0.200000 0.000000 -0.500000

+v 0.300000 0.000000 -0.500000

+v 0.400000 0.000000 -0.500000

+v 0.500000 0.000000 -0.500000

+v 0.600000 0.000000 -0.500000

+v 0.700000 0.000000 -0.500000

+v 0.800000 0.000000 -0.500000

+v 0.900000 0.000000 -0.500000

+v 1.000000 0.000000 -0.500000

+v -1.000000 0.000000 -0.600000

+v -0.900000 0.000000 -0.600000

+v -0.800000 0.000000 -0.600000

+v -0.700000 0.000000 -0.600000

+v -0.600000 0.000000 -0.600000

+v -0.500000 0.000000 -0.600000

+v -0.400000 0.000000 -0.600000

+v -0.300000 0.000000 -0.600000

+v -0.200000 0.000000 -0.600000

+v -0.100000 0.000000 -0.600000

+v 0.000000 0.000000 -0.600000

+v 0.100000 0.000000 -0.600000

+v 0.200000 0.000000 -0.600000

+v 0.300000 0.000000 -0.600000

+v 0.400000 0.000000 -0.600000

+v 0.500000 0.000000 -0.600000

+v 0.600000 0.000000 -0.600000

+v 0.700000 0.000000 -0.600000

+v 0.800000 0.000000 -0.600000

+v 0.900000 0.000000 -0.600000

+v 1.000000 0.000000 -0.600000

+v -1.000000 0.000000 -0.700000

+v -0.900000 0.000000 -0.700000

+v -0.800000 0.000000 -0.700000

+v -0.700000 0.000000 -0.700000

+v -0.600000 0.000000 -0.700000

+v -0.500000 0.000000 -0.700000

+v -0.400000 0.000000 -0.700000

+v -0.300000 0.000000 -0.700000

+v -0.200000 0.000000 -0.700000

+v -0.100000 0.000000 -0.700000

+v 0.000000 0.000000 -0.700000

+v 0.100000 0.000000 -0.700000

+v 0.200000 0.000000 -0.700000

+v 0.300000 0.000000 -0.700000

+v 0.400000 0.000000 -0.700000

+v 0.500000 0.000000 -0.700000

+v 0.600000 0.000000 -0.700000

+v 0.700000 0.000000 -0.700000

+v 0.800000 0.000000 -0.700000

+v 0.900000 0.000000 -0.700000

+v 1.000000 0.000000 -0.700000

+v -1.000000 0.000000 -0.800000

+v -0.900000 0.000000 -0.800000

+v -0.800000 0.000000 -0.800000

+v -0.700000 0.000000 -0.800000

+v -0.600000 0.000000 -0.800000

+v -0.500000 0.000000 -0.800000

+v -0.400000 0.000000 -0.800000

+v -0.300000 0.000000 -0.800000

+v -0.200000 0.000000 -0.800000

+v -0.100000 0.000000 -0.800000

+v 0.000000 0.000000 -0.800000

+v 0.100000 0.000000 -0.800000

+v 0.200000 0.000000 -0.800000

+v 0.300000 0.000000 -0.800000

+v 0.400000 0.000000 -0.800000

+v 0.500000 0.000000 -0.800000

+v 0.600000 0.000000 -0.800000

+v 0.700000 0.000000 -0.800000

+v 0.800000 0.000000 -0.800000

+v 0.900000 0.000000 -0.800000

+v 1.000000 0.000000 -0.800000

+v -1.000000 0.000000 -0.900000

+v -0.900000 0.000000 -0.900000

+v -0.800000 0.000000 -0.900000

+v -0.700000 0.000000 -0.900000

+v -0.600000 0.000000 -0.900000

+v -0.500000 0.000000 -0.900000

+v -0.400000 0.000000 -0.900000

+v -0.300000 0.000000 -0.900000

+v -0.200000 0.000000 -0.900000

+v -0.100000 0.000000 -0.900000

+v 0.000000 0.000000 -0.900000

+v 0.100000 0.000000 -0.900000

+v 0.200000 0.000000 -0.900000

+v 0.300000 0.000000 -0.900000

+v 0.400000 0.000000 -0.900000

+v 0.500000 0.000000 -0.900000

+v 0.600000 0.000000 -0.900000

+v 0.700000 0.000000 -0.900000

+v 0.800000 0.000000 -0.900000

+v 0.900000 0.000000 -0.900000

+v 1.000000 0.000000 -0.900000

+v -1.000000 0.000000 -1.000000

+v -0.900000 0.000000 -1.000000

+v -0.800000 0.000000 -1.000000

+v -0.700000 0.000000 -1.000000

+v -0.600000 0.000000 -1.000000

+v -0.500000 0.000000 -1.000000

+v -0.400000 0.000000 -1.000000

+v -0.300000 0.000000 -1.000000

+v -0.200000 0.000000 -1.000000

+v -0.100000 0.000000 -1.000000

+v 0.000000 0.000000 -1.000000

+v 0.100000 0.000000 -1.000000

+v 0.200000 0.000000 -1.000000

+v 0.300000 0.000000 -1.000000

+v 0.400000 0.000000 -1.000000

+v 0.500000 0.000000 -1.000000

+v 0.600000 0.000000 -1.000000

+v 0.700000 0.000000 -1.000000

+v 0.800000 0.000000 -1.000000

+v 0.900000 0.000000 -1.000000

+v 1.000000 0.000000 -1.000000

+s 0

+f 2 22 1

+f 3 23 2

+f 4 24 3

+f 5 25 4

+f 6 26 5

+f 7 27 6

+f 8 28 7

+f 9 29 8

+f 10 30 9

+f 11 31 10

+f 12 32 11

+f 13 33 12

+f 14 34 13

+f 15 35 14

+f 16 36 15

+f 17 37 16

+f 18 38 17

+f 19 39 18

+f 20 40 19

+f 21 41 20

+f 23 43 22

+f 24 44 23

+f 25 45 24

+f 26 46 25

+f 27 47 26

+f 28 48 27

+f 29 49 28

+f 30 50 29

+f 31 51 30

+f 32 52 31

+f 33 53 32

+f 34 54 33

+f 35 55 34

+f 36 56 35

+f 37 57 36

+f 38 58 37

+f 39 59 38

+f 40 60 39

+f 41 61 40

+f 42 62 41

+f 44 64 43

+f 45 65 44

+f 46 66 45

+f 47 67 46

+f 48 68 47

+f 49 69 48

+f 50 70 49

+f 51 71 50

+f 52 72 51

+f 53 73 52

+f 54 74 53

+f 55 75 54

+f 56 76 55

+f 57 77 56

+f 58 78 57

+f 59 79 58

+f 60 80 59

+f 61 81 60

+f 62 82 61

+f 63 83 62

+f 65 85 64

+f 66 86 65

+f 67 87 66

+f 68 88 67

+f 69 89 68

+f 70 90 69

+f 71 91 70

+f 72 92 71

+f 73 93 72

+f 74 94 73

+f 75 95 74

+f 76 96 75

+f 77 97 76

+f 78 98 77

+f 79 99 78

+f 80 100 79

+f 81 101 80

+f 82 102 81

+f 83 103 82

+f 84 104 83

+f 86 106 85

+f 87 107 86

+f 88 108 87

+f 89 109 88

+f 90 110 89

+f 91 111 90

+f 92 112 91

+f 93 113 92

+f 94 114 93

+f 95 115 94

+f 96 116 95

+f 97 117 96

+f 98 118 97

+f 99 119 98

+f 100 120 99

+f 101 121 100

+f 102 122 101

+f 103 123 102

+f 104 124 103

+f 105 125 104

+f 107 127 106

+f 108 128 107

+f 109 129 108

+f 110 130 109

+f 111 131 110

+f 112 132 111

+f 113 133 112

+f 114 134 113

+f 115 135 114

+f 116 136 115

+f 117 137 116

+f 118 138 117

+f 119 139 118

+f 120 140 119

+f 121 141 120

+f 122 142 121

+f 123 143 122

+f 124 144 123

+f 125 145 124

+f 126 146 125

+f 128 148 127

+f 129 149 128

+f 130 150 129

+f 131 151 130

+f 132 152 131

+f 133 153 132

+f 134 154 133

+f 135 155 134

+f 136 156 135

+f 137 157 136

+f 138 158 137

+f 139 159 138

+f 140 160 139

+f 141 161 140

+f 142 162 141

+f 143 163 142

+f 144 164 143

+f 145 165 144

+f 146 166 145

+f 147 167 146

+f 149 169 148

+f 150 170 149

+f 151 171 150

+f 152 172 151

+f 153 173 152

+f 154 174 153

+f 155 175 154

+f 156 176 155

+f 157 177 156

+f 158 178 157

+f 159 179 158

+f 160 180 159

+f 161 181 160

+f 162 182 161

+f 163 183 162

+f 164 184 163

+f 165 185 164

+f 166 186 165

+f 167 187 166

+f 168 188 167

+f 170 190 169

+f 171 191 170

+f 172 192 171

+f 173 193 172

+f 174 194 173

+f 175 195 174

+f 176 196 175

+f 177 197 176

+f 178 198 177

+f 179 199 178

+f 180 200 179

+f 181 201 180

+f 182 202 181

+f 183 203 182

+f 184 204 183

+f 185 205 184

+f 186 206 185

+f 187 207 186

+f 188 208 187

+f 189 209 188

+f 191 211 190

+f 192 212 191

+f 193 213 192

+f 194 214 193

+f 195 215 194

+f 196 216 195

+f 197 217 196

+f 198 218 197

+f 199 219 198

+f 200 220 199

+f 201 221 200

+f 202 222 201

+f 203 223 202

+f 204 224 203

+f 205 225 204

+f 206 226 205

+f 207 227 206

+f 208 228 207

+f 209 229 208

+f 210 230 209

+f 212 232 211

+f 213 233 212

+f 214 234 213

+f 215 235 214

+f 216 236 215

+f 217 237 216

+f 218 238 217

+f 219 239 218

+f 220 240 219

+f 221 241 220

+f 222 242 221

+f 223 243 222

+f 224 244 223

+f 225 245 224

+f 226 246 225

+f 227 247 226

+f 228 248 227

+f 229 249 228

+f 230 250 229

+f 231 251 230

+f 233 253 232

+f 234 254 233

+f 235 255 234

+f 236 256 235

+f 237 257 236

+f 238 258 237

+f 239 259 238

+f 240 260 239

+f 241 261 240

+f 242 262 241

+f 243 263 242

+f 244 264 243

+f 245 265 244

+f 246 266 245

+f 247 267 246

+f 248 268 247

+f 249 269 248

+f 250 270 249

+f 251 271 250

+f 252 272 251

+f 254 274 253

+f 255 275 254

+f 256 276 255

+f 257 277 256

+f 258 278 257

+f 259 279 258

+f 260 280 259

+f 261 281 260

+f 262 282 261

+f 263 283 262

+f 264 284 263

+f 265 285 264

+f 266 286 265

+f 267 287 266

+f 268 288 267

+f 269 289 268

+f 270 290 269

+f 271 291 270

+f 272 292 271

+f 273 293 272

+f 275 295 274

+f 276 296 275

+f 277 297 276

+f 278 298 277

+f 279 299 278

+f 280 300 279

+f 281 301 280

+f 282 302 281

+f 283 303 282

+f 284 304 283

+f 285 305 284

+f 286 306 285

+f 287 307 286

+f 288 308 287

+f 289 309 288

+f 290 310 289

+f 291 311 290

+f 292 312 291

+f 293 313 292

+f 294 314 293

+f 296 316 295

+f 297 317 296

+f 298 318 297

+f 299 319 298

+f 300 320 299

+f 301 321 300

+f 302 322 301

+f 303 323 302

+f 304 324 303

+f 305 325 304

+f 306 326 305

+f 307 327 306

+f 308 328 307

+f 309 329 308

+f 310 330 309

+f 311 331 310

+f 312 332 311

+f 313 333 312

+f 314 334 313

+f 315 335 314

+f 317 337 316

+f 318 338 317

+f 319 339 318

+f 320 340 319

+f 321 341 320

+f 322 342 321

+f 323 343 322

+f 324 344 323

+f 325 345 324

+f 326 346 325

+f 327 347 326

+f 328 348 327

+f 329 349 328

+f 330 350 329

+f 331 351 330

+f 332 352 331

+f 333 353 332

+f 334 354 333

+f 335 355 334

+f 336 356 335

+f 338 358 337

+f 339 359 338

+f 340 360 339

+f 341 361 340

+f 342 362 341

+f 343 363 342

+f 344 364 343

+f 345 365 344

+f 346 366 345

+f 347 367 346

+f 348 368 347

+f 349 369 348

+f 350 370 349

+f 351 371 350

+f 352 372 351

+f 353 373 352

+f 354 374 353

+f 355 375 354

+f 356 376 355

+f 357 377 356

+f 359 379 358

+f 360 380 359

+f 361 381 360

+f 362 382 361

+f 363 383 362

+f 364 384 363

+f 365 385 364

+f 366 386 365

+f 367 387 366

+f 368 388 367

+f 369 389 368

+f 370 390 369

+f 371 391 370

+f 372 392 371

+f 373 393 372