For the original AirSim repository and its README, please go to AirSim git.

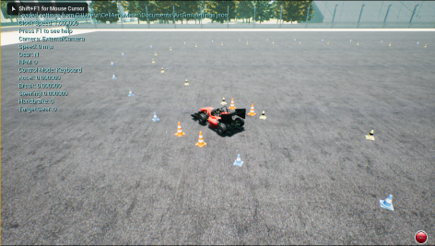

This project is about training and implementing a self-driving algorithm for Formula Student Driverless competitions. In such competitions, a formula race car, designed and built by students, is challenged to drive through previously unseen tracks that are marked by traffic cones.

We present a simulator for a Formula Student car and the environment of a driverless competition. The simulator is based on AirSim.

The Technion Formula Student car. Actual car (left), simulated car (right)

AirSim is a simulator for drones, cars and more, built on Unreal Engine. It is open-source, cross-platform and supports hardware-in-the-loop with popular flight controllers such as PX4 for physically and visually realistic simulations. It is developed as an Unreal plugin that can simply be dropped into any Unreal environment you want.

Our goal is to provide a platform for AI research to experiment with deep learning, in particular imitation learning, for Formula Student Driverless cars.

The model of the Formula Student Technion car is provided by Ryan Pourati.

The environment scene is provided by PolyPixel.

Driving in the real world using an imitation learning model trained on AirSim data only

- Operating system: Windows 10

- GPU: Nvidia GTX 1080 or higher (recommended)

- Software: Unreal Engine 4.18 and Visual Studio 2017 (see upgrade instructions)

- Note: This repository is forked from AirSim 1.2

By default, AirSim prompts you to choose Car or Multirotor mode. You can use the SimMode setting to make the car (the Technion Formula Student car) the default vehicle.
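
As a minimal sketch of setting SimMode, the helper below writes `"SimMode": "Car"` into an AirSim `settings.json` file while keeping any other keys already present. The function name `write_sim_mode` is hypothetical, and the `SettingsVersion` value of 1.2 and the file location are assumptions based on upstream AirSim 1.2; check the settings documentation of this fork before relying on them.

```python
import json
from pathlib import Path


def write_sim_mode(settings_path, sim_mode="Car"):
    """Set SimMode in an AirSim settings file, preserving other keys.

    Hypothetical helper: it only edits JSON and does not talk to the simulator.
    """
    settings_path = Path(settings_path)
    settings = {}
    if settings_path.exists():
        text = settings_path.read_text(encoding="utf-8")
        if text.strip():
            settings = json.loads(text)

    # Assumption: upstream AirSim 1.2 expects SettingsVersion 1.2.
    settings.setdefault("SettingsVersion", 1.2)
    settings["SimMode"] = sim_mode

    settings_path.parent.mkdir(parents=True, exist_ok=True)
    settings_path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
    return settings
```

On Windows, upstream AirSim reads its settings from `Documents\AirSim\settings.json`, so a call such as `write_sim_mode(Path.home() / "Documents" / "AirSim" / "settings.json")` would be the typical usage, assuming this fork keeps that location. Restart the simulator after changing the file.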

If you have a steering wheel (Logitech G920) as shown below, you can manually control the car in the simulator. You can also drive manually with the arrow keys.

Using imitation learning, we trained a deep learning model to steer a Formula Student car using input from a single camera. Our code files for the training procedure are available here and are based on the AirSim cookbook.

We added a few graphical features to make recording data easier.

You can change the positions of the cameras using this tutorial.

There are two ways to generate training data from AirSim for deep learning. The easiest way is to press the record button in the lower-right corner. This starts writing the pose and images for each frame. The data logging code is fairly simple, and you can modify it as you see fit.

A better way to generate training data exactly the way you want is by accessing the APIs. This allows you to be in full control of how, what, where and when you want to log data.
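
As a rough sketch of API-based logging, the function below polls a client for a camera image and the car state, saves each image, and appends one CSV row per frame. The names `record_frames` and `main`, the CSV columns, and the output layout are hypothetical. The client calls (`CarClient`, `simGetImages`, `getCarState`, `ImageRequest`) and the state fields (`speed`, `kinematics_estimated.position`) follow the upstream AirSim Python client and are assumed to be unchanged in this fork.

```python
import csv
import os
import time


def record_frames(client, image_request, out_dir, n_frames=10, period_s=0.1):
    """Save n_frames images plus one CSV row of car state per frame."""
    os.makedirs(out_dir, exist_ok=True)
    log_path = os.path.join(out_dir, "log.csv")
    with open(log_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["frame", "image_file", "speed", "x", "y", "z"])
        for i in range(n_frames):
            # One request in, one response out.
            response = client.simGetImages([image_request])[0]
            state = client.getCarState()
            pos = state.kinematics_estimated.position

            image_file = "img_%05d.png" % i
            with open(os.path.join(out_dir, image_file), "wb") as img:
                # Assumes a compressed (PNG) response, written as raw bytes.
                img.write(bytes(response.image_data_uint8))

            writer.writerow(
                [i, image_file, state.speed, pos.x_val, pos.y_val, pos.z_val]
            )
            time.sleep(period_s)
    return log_path


def main():
    # Requires the airsim Python package and a running simulator.
    import airsim

    client = airsim.CarClient()
    client.confirmConnection()
    # Camera "0", scene image, not float pixels, PNG-compressed.
    request = airsim.ImageRequest("0", airsim.ImageType.Scene, False, True)
    record_frames(client, request, "recordings", n_frames=100)
```

Calling `main()` while the simulator is running and the car is being driven manually would fill a `recordings` folder with images and a `log.csv` file. Passing the client and request in as arguments keeps the logging loop independent of the simulator, so it can be exercised with a stub client.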

Our implementation of the algorithm on the Nvidia Jetson TX2 can be found in this repository.

Tom Hirshberg, Dean Zadok and Amir Biran.

We would like to thank our advisors: Dr. Kira Radinsky, Dr. Ashish Kapoor and Boaz Sternfeld.

Thanks to the Intelligent Systems Lab (ISL) at the Technion for its support.