diff --git a/docs/demo_guides/amlogic_npu.md b/docs/demo_guides/amlogic_npu.md

index 19396472882..8941b001cc0 100644

--- a/docs/demo_guides/amlogic_npu.md

+++ b/docs/demo_guides/amlogic_npu.md

@@ -223,12 +223,13 @@ Paddle Lite 已支持晶晨 NPU 的预测部署。

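3) `build.sh` builds binaries for different operating systems and architectures according to its arguments; check the comments in the script to set the correct values.

4) `run_with_adb.sh` takes the model name, operating system, architecture, target device, device serial number, and so on; check the comments in the script to set the correct values.

5) `run_with_ssh.sh` takes the model name, operating system, architecture, target device, IP address, user name, password, and so on; check the comments in the script to set the correct values.

+ 6) The IP addresses, SSH accounts and passwords, and device serial numbers in the command-line examples below come from a sample environment; replace them with the values for your own device.

Run the fully quantized mobilenet_v1_int8_224_per_layer model on the ARM CPU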

$ cd PaddleLite-generic-demo/image_classification_demo/shell

For C308X

- $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer linux arm64 cpu

+ $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer linux arm64 cpu 192.168.100.244 22 root 123456

(C308X)

warmup: 1 repeat: 5, average: 167.6916 ms, max: 207.458000 ms, min: 159.823239 ms

results: 3

@@ -240,7 +241,7 @@ Paddle Lite 已支持晶晨 NPU 的预测部署。

Postprocess time: 0.542000 ms

For A311D

- $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer linux arm64 cpu

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer linux arm64 cpu 0123456789ABCDEF

(A311D)

warmup: 1 repeat: 15, average: 81.678067 ms, max: 81.945999 ms, min: 81.591003 ms

results: 3

@@ -252,7 +253,7 @@ Paddle Lite 已支持晶晨 NPU 的预测部署。

Postprocess time: 0.407000 ms

For S905D3(Android版)

- $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a cpu

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a cpu c8631471d5cd

(S905D3(Android版))

warmup: 1 repeat: 5, average: 280.465997 ms, max: 358.815002 ms, min: 268.549812 ms

results: 3

@@ -269,7 +270,7 @@ Paddle Lite 已支持晶晨 NPU 的预测部署。

$ cd PaddleLite-generic-demo/image_classification_demo/shell

For C308X

- $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer linux arm64 amlogic_npu

+ $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer linux arm64 amlogic_npu 192.168.100.244 22 root 123456

(C308X)

warmup: 1 repeat: 5, average: 6.982800 ms, max: 7.045000 ms, min: 6.951000 ms

results: 3

@@ -281,7 +282,7 @@ Paddle Lite 已支持晶晨 NPU 的预测部署。

Postprocess time: 0.509000 ms

For A311D

- $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer linux arm64 amlogic_npu

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer linux arm64 amlogic_npu 0123456789ABCDEF

( A311D)

warmup: 1 repeat: 15, average: 5.567867 ms, max: 5.723000 ms, min: 5.461000 ms

results: 3

@@ -293,7 +294,7 @@ Paddle Lite 已支持晶晨 NPU 的预测部署。

Postprocess time: 0.411000 ms

For S905D3(Android版)

- $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a amlogic_npu

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a amlogic_npu c8631471d5cd

(S905D3(Android版))

warmup: 1 repeat: 5, average: 13.4116 ms, max: 15.751210 ms, min: 12.433400 ms

results: 3

diff --git a/docs/demo_guides/rockchip_npu.md b/docs/demo_guides/rockchip_npu.md

index 4906745c637..ae92f94442e 100644

--- a/docs/demo_guides/rockchip_npu.md

+++ b/docs/demo_guides/rockchip_npu.md

@@ -208,6 +208,7 @@ Paddle Lite 已支持 Rockchip NPU 的预测部署。

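3) `build.sh` builds binaries for different operating systems and architectures according to its arguments; check the comments in the script to set the correct values.

4) `run_with_adb.sh` takes the model name, operating system, architecture, target device, device serial number, and so on; check the comments in the script to set the correct values.

5) `run_with_ssh.sh` takes the model name, operating system, architecture, target device, IP address, user name, password, and so on; check the comments in the script to set the correct values.

+ 6) The IP addresses, SSH accounts and passwords, and device serial numbers in the command-line examples below come from a sample environment; replace them with the values for your own device.

Run the fully quantized mobilenet_v1_int8_224_per_layer model on the ARM CPU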

$ cd PaddleLite-generic-demo/image_classification_demo/shell

diff --git a/docs/demo_guides/verisilicon_timvx.md b/docs/demo_guides/verisilicon_timvx.md

new file mode 100644

index 00000000000..011fd95f7af

--- /dev/null

+++ b/docs/demo_guides/verisilicon_timvx.md

@@ -0,0 +1,434 @@

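+# VeriSilicon TIM-VX Deployment Example

+

+Paddle Lite supports inference deployment on VeriSilicon NPUs through TIM-VX.

+As with other new hardware integrated into Paddle Lite, it loads and analyzes the Paddle model, first converts the Paddle operators into NNAdapter standard operators, then builds the network through the TIM-VX graph-construction API, and finally compiles the model online and executes it.

+

+Note that VeriSilicon is an IP design vendor: it does not ship SoC products itself but licenses its IP to chip vendors such as Amlogic and Rockchip. This document therefore applies to chips that license VeriSilicon's NPU IP. As long as the chip vendor has not substantially modified VeriSilicon's low-level libraries, the chip can use this document as a reference and tutorial for Paddle Lite inference deployment. In this document, the NPUs in Amlogic SoCs and in Rockchip SoCs are collectively called VeriSilicon NPUs.

+

+Some of the chips covered here also appear in the [Amlogic NPU deployment example](./amlogic_npu) and the [Rockchip NPU deployment example](./rockchip_npu). The difference is the integration path: this document integrates with Paddle Lite through the TIM-VX framework from the IP vendor VeriSilicon, whereas those two documents integrate through each chip vendor's own DDK. Because the integration paths differ, the supported operators and models differ as well.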

+

+## Support status

+

+### Supported chips

+

+- Amlogic A311D

+

+- Amlogic S905D3

+

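+ Note: in principle, every SoC that licenses VeriSilicon's NPU IP is supported, provided a matching NPU driver version is installed (described below); the chips listed above are the ones that have been tested.

+

+### Supported Paddle models

+

+#### Models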

+

+- [mobilenet_v1_int8_224_per_layer](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v1_int8_224_per_layer.tar.gz)

+- [resnet50_int8_224_per_layer](https://paddlelite-demo.bj.bcebos.com/models/resnet50_int8_224_per_layer.tar.gz)

+- [ssd_mobilenet_v1_relu_voc_int8_300_per_layer](https://paddlelite-demo.bj.bcebos.com/models/ssd_mobilenet_v1_relu_voc_int8_300_per_layer.tar.gz)

+

+#### Performance

+

+- Test environment

+ - Build environment

+ - Ubuntu 16.04, GCC 5.4 for ARMLinux armhf and aarch64

+

+ - Hardware

+ - Amlogic A311D

+ - CPU: 4 x ARM Cortex-A73 \+ 2 x ARM Cortex-A53

+ - NPU: 5 TOPS for INT8

+ - Amlogic S905D3 (Android)

+ - CPU: 2 x ARM Cortex-A55

+ - NPU: 1.2 TOPS for INT8

+

+- Test method

+ - warmup=1, repeats=5; the average time is reported in ms

+ - One thread, with `paddle::lite_api::PowerMode CPU_POWER_MODE` set to `paddle::lite_api::PowerMode::LITE_POWER_HIGH`

+ - Input shape for the classification models: {1, 3, 224, 224}

+

+- Test results

+

+ | Model | A311D CPU (ms) | A311D NPU (ms) | S905D3 (Android) CPU (ms) | S905D3 (Android) NPU (ms) |

+ |---|---|---|---|---|

+ | mobilenet_v1_int8_224_per_layer | 81.632133 | 5.112500 | 280.465997 | 12.808100 |

+ | resnet50_int8_224_per_layer | 390.498300 | 17.583200 | 787.532340 | 41.313999 |

+ | ssd_mobilenet_v1_relu_voc_int8_300_per_layer | 134.991560 | 15.216700 | 295.48919 | 40.108970 |

+

+### Paddle operators supported (or partially supported) by NNAdapter

+

+See the [NNAdapter operator support list](https://github.com/PaddlePaddle/Paddle-Lite/blob/develop/lite/kernels/nnadapter/converter/all.h) for the latest operator support on each new hardware target.

+

+## Reference demo

+

+### 测试设备

+

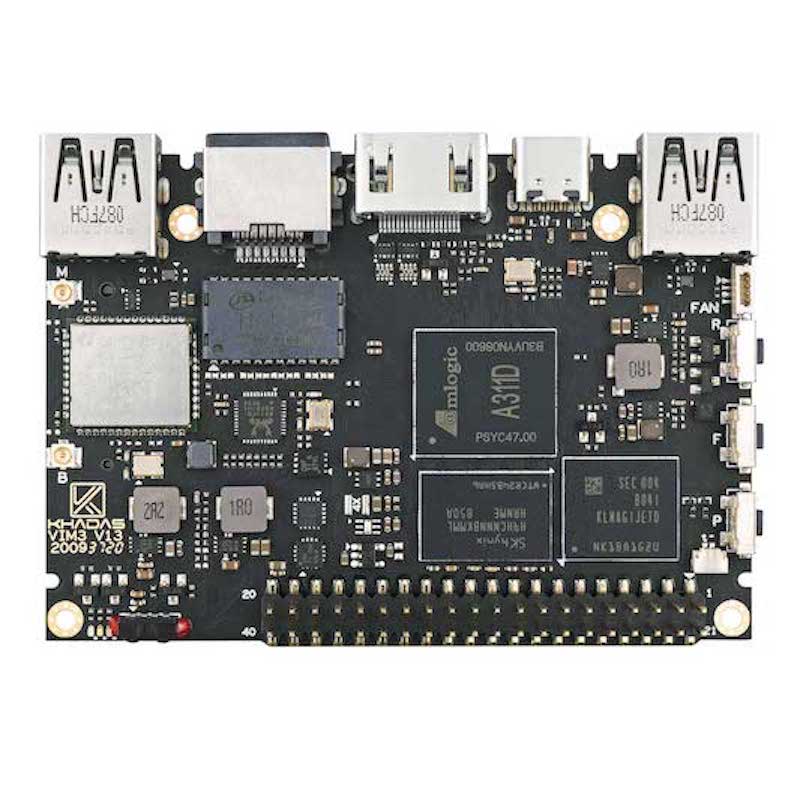

+- Khadas VIM3 开发板(SoC 为 Amlogic A311D)

+

+  +

+

+

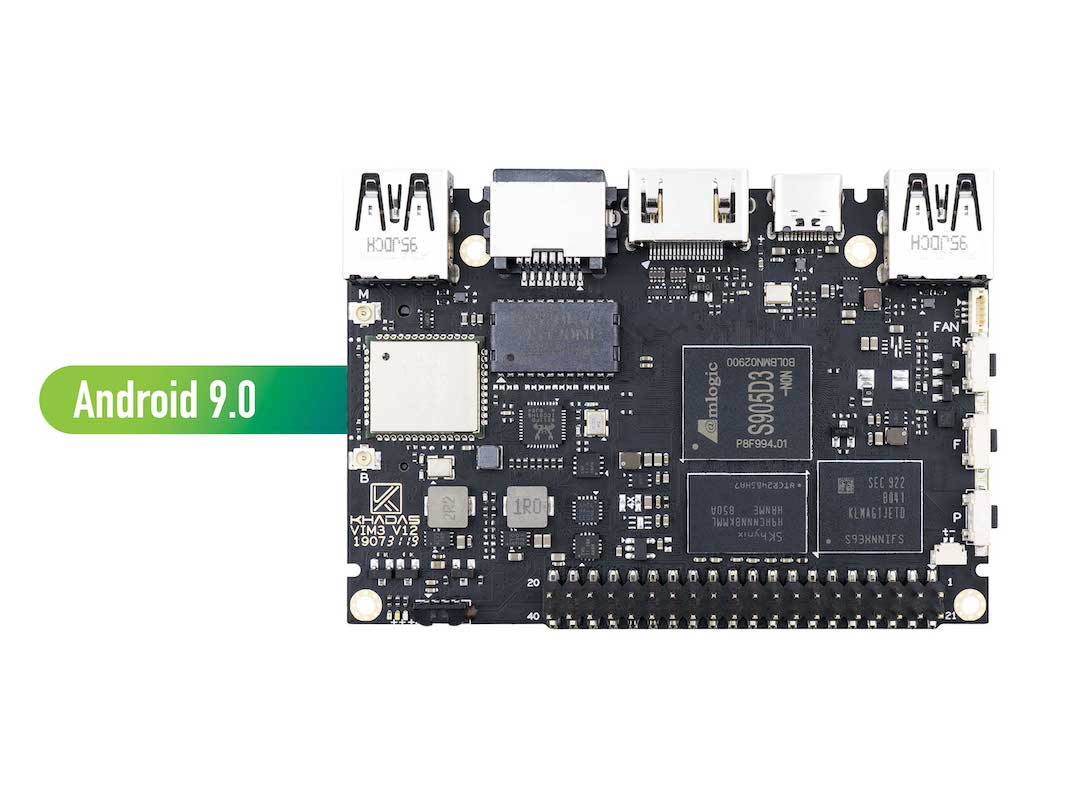

+- Khadas VIM3L 开发板(SoC 为 Amlogic S905D3)

+

+

+

+

+

+- Khadas VIM3L 开发板(SoC 为 Amlogic S905D3)

+

+  +

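+### Prepare the device environment

+

+- A311D

+

+ - Driver version 6.4.4.3 is required (contact the board vendor to download the driver).

+

+ - Note that the system is 64-bit.

+

+ - SSH login over the network is available; some system images also support adb.

+

+ - Check the driver version with `dmesg | grep Galcore`: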

+

+ ```shell

+ $ dmesg | grep Galcore

+ [ 24.140820] Galcore version 6.4.4.3.310723AAA

+ ```

+

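+- S905D3 (Android)

+

+ - Driver version 6.4.4.3 is required (contact the board vendor to download the driver).

+ - Note that the system is 32-bit.

+ - Run `adb root` and `adb remount` to gain permission to modify system libraries.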

+

+ ```shell

+ $ dmesg | grep Galcore

+ [ 9.020168] <6>[ 9.020168@0] Galcore version 6.4.4.3.310723a

+ ```

+

+ - The demo program and the Paddle Lite library must be cross-compiled; use `adb` or `ssh` to interact with the device and run the demo.
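
The driver check above can also be scripted. The following is a minimal sketch, not part of the demo package: `galcore_version` and `check_galcore` are hypothetical helper names, and the only assumption about the log format is the `Galcore version ...` line shown in the `dmesg` output above.

```shell
#!/bin/sh
# Driver version required by this guide.
required="6.4.4.3"

# Read dmesg-style text on stdin and print the first Galcore version found,
# e.g. "6.4.4.3.310723AAA". Prints nothing if no such line exists.
galcore_version() {
  sed -n 's/.*Galcore version \([0-9A-Za-z.]*\).*/\1/p' | head -n 1
}

# Read dmesg-style text on stdin and report whether the driver matches.
# Returns non-zero when the version is missing or does not match.
check_galcore() {
  version="$(galcore_version)"
  case "$version" in
    "$required"|"$required".*) echo "ok: $version" ;;
    "") echo "Galcore version not found"; return 1 ;;
    *) echo "mismatch: $version (need $required.x)"; return 1 ;;
  esac
}
```

On the device, `dmesg | check_galcore` reports whether the loaded driver is a 6.4.4.3 build before any libraries are replaced.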

+

+

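+### Prepare the cross-compilation environment

+

+- To keep the build environment consistent, set it up following the Docker development environment in [Docker environment setup](../source_compile/docker_environment).

+- Some devices can only be reached over the network (depending on the board), so the cross-compiled Paddle Lite library and demo program must be transferred to the device and run there with `scp` and `ssh`. After entering the Docker container, also install the following packages: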

+

+ ```

+ # apt-get install openssh-client sshpass

+ ```

+

+### Run the image classification demo

+

+- Download the Paddle Lite generic demo [PaddleLite-generic-demo.tar.gz](https://paddlelite-demo.bj.bcebos.com/devices/generic/PaddleLite-generic-demo.tar.gz) and extract it. The main directory structure is:

+

+ ```shell

+ - PaddleLite-generic-demo

+ - image_classification_demo

+ - assets

+ - images

+ - tabby_cat.jpg # test image

+ - tabby_cat.raw # RGB raw image produced by convert_to_raw_image.py

+ - labels

+ - synset_words.txt # label file for the 1000 classes

+ - models

+ - mobilenet_v1_int8_224_per_layer

+ - __model__ # Paddle fluid model topology file; view the network structure with netron

+ - conv1_weights # Paddle fluid model parameter file

+ - batch_norm_0.tmp_2.quant_dequant.scale # Paddle fluid model quantization parameter file

+ - subgraph_partition_config_file.txt # custom subgraph partition config file

+ ...

+ - shell

+ - CMakeLists.txt # CMake script for the demo

+ - build.linux.arm64 # arm64 build directory

+ - image_classification_demo # prebuilt demo for arm64

+ - build.linux.armhf # armhf build directory

+ - image_classification_demo # prebuilt demo for armhf

+ - build.android.armeabi-v7a # Android armv7 build directory

+ - image_classification_demo # prebuilt demo for Android armv7

+ ...

+ - image_classification_demo.cc # demo source code

+ - build.sh # demo build script

+ - run.sh # script to run the demo locally

+ - run_with_ssh.sh # script to run the demo over ssh

+ - run_with_adb.sh # script to run the demo over adb

+ - libs

+ - PaddleLite

+ - linux

+ - arm64 # 64-bit Linux

+ - include # Paddle Lite headers

+ - lib # Paddle Lite libraries

+ - verisilicon_timvx # VeriSilicon TIM-VX DDK, NNAdapter runtime library, device HAL library

+ - libnnadapter.so # NNAdapter runtime library

+ - libGAL.so # VeriSilicon DDK

+ - libVSC.so # VeriSilicon DDK

+ - libOpenVX.so # VeriSilicon DDK

+ - libarchmodelSw.so # VeriSilicon DDK

+ - libNNArchPerf.so # VeriSilicon DDK

+ - libOvx12VXCBinary.so # VeriSilicon DDK

+ - libNNVXCBinary.so # VeriSilicon DDK

+ - libOpenVXU.so # VeriSilicon DDK

+ - libNNGPUBinary.so # VeriSilicon DDK

+ - libovxlib.so # VeriSilicon DDK

+ - libOpenCL.so # OpenCL

+ - libverisilicon_timvx.so # NNAdapter device HAL library

+ - libtim-vx.so # VeriSilicon TIM-VX

+ - libgomp.so.1 # GNU OpenMP library

+ - libpaddle_full_api_shared.so # prebuilt Paddle Lite full API library

+ - libpaddle_light_api_shared.so # prebuilt Paddle Lite light API library

+ ...

+ - android

+ - armeabi-v7a # 32-bit Android

+ - include # Paddle Lite headers

+ - lib # Paddle Lite libraries

+ - verisilicon_timvx # VeriSilicon TIM-VX DDK, NNAdapter runtime library, device HAL library

+ - libnnadapter.so # NNAdapter runtime library

+ - libGAL.so # VeriSilicon DDK

+ - libVSC.so # VeriSilicon DDK

+ - libOpenVX.so # VeriSilicon DDK

+ - libarchmodelSw.so # VeriSilicon DDK

+ - libNNArchPerf.so # VeriSilicon DDK

+ - libOvx12VXCBinary.so # VeriSilicon DDK

+ - libNNVXCBinary.so # VeriSilicon DDK

+ - libOpenVXU.so # VeriSilicon DDK

+ - libNNGPUBinary.so # VeriSilicon DDK

+ - libovxlib.so # VeriSilicon DDK

+ - libOpenCL.so # OpenCL

+ - libverisilicon_timvx.so # NNAdapter device HAL library

+ - libtim-vx.so # VeriSilicon TIM-VX

+ - libgomp.so.1 # GNU OpenMP library

+ - libc++_shared.so

+ - libpaddle_full_api_shared.so # prebuilt Paddle Lite full API library

+ - libpaddle_light_api_shared.so # prebuilt Paddle Lite light API library

+ - OpenCV # prebuilt OpenCV libraries

+ - ssd_detection_demo # SSD-based object detection demo

+ ```

+

+- Run the converted ARM CPU model and the VeriSilicon TIM-VX model with the commands below and compare their performance and results:

+

+ ```shell

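+ Notes:

+ 1) Do not run `run_with_adb.sh` inside the Docker container, where the device may not be found, and do not run it on the device itself.

+ 2) Do not run `run_with_ssh.sh` on the device itself; before running it, set the target device's IP address, SSH account, and password.

+ 3) `build.sh` builds binaries for different operating systems and architectures according to its arguments; check the comments in the script to set the correct values.

+ 4) `run_with_adb.sh` takes the model name, operating system, architecture, target device, device serial number, and so on; check the comments in the script to set the correct values.

+ 5) `run_with_ssh.sh` takes the model name, operating system, architecture, target device, IP address, user name, password, and so on; check the comments in the script to set the correct values.

+ 6) The IP addresses, SSH accounts and passwords, and device serial numbers in the command-line examples below come from a sample environment; replace them with the values for your own device.

+

+ Run the fully quantized mobilenet_v1_int8_224_per_layer model on the ARM CPU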

+ $ cd PaddleLite-generic-demo/image_classification_demo/shell

+

+ For A311D

+ $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer linux arm64 cpu 192.168.100.30 22 khadas khadas

+ (A311D)

+ warmup: 1 repeat: 15, average: 81.678067 ms, max: 81.945999 ms, min: 81.591003 ms

+ results: 3

+ Top0 Egyptian cat - 0.512545

+ Top1 tabby, tabby cat - 0.402567

+ Top2 tiger cat - 0.067904

+ Preprocess time: 1.352000 ms

+ Prediction time: 81.678067 ms

+ Postprocess time: 0.407000 ms

+

+ For S905D3 (Android)

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a cpu c8631471d5cd

+ (S905D3 (Android))

+ warmup: 1 repeat: 5, average: 280.465997 ms, max: 358.815002 ms, min: 268.549812 ms

+ results: 3

+ Top0 Egyptian cat - 0.512545

+ Top1 tabby, tabby cat - 0.402567

+ Top2 tiger cat - 0.067904

+ Preprocess time: 3.199000 ms

+ Prediction time: 280.465997 ms

+ Postprocess time: 0.596000 ms

+

+ ------------------------------

+

+ Run the fully quantized mobilenet_v1_int8_224_per_layer model on the VeriSilicon NPU

+ $ cd PaddleLite-generic-demo/image_classification_demo/shell

+

+ For A311D

+ $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer linux arm64 verisilicon_timvx 192.168.100.30 22 khadas khadas

+ (A311D)

+ warmup: 1 repeat: 15, average: 5.112500 ms, max: 5.223000 ms, min: 5.009130 ms

+ results: 3

+ Top0 Egyptian cat - 0.508929

+ Top1 tabby, tabby cat - 0.415333

+ Top2 tiger cat - 0.064347

+ Preprocess time: 1.356000 ms

+ Prediction time: 5.112500 ms

+ Postprocess time: 0.411000 ms

+

+ For S905D3 (Android)

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a verisilicon_timvx c8631471d5cd

+ (S905D3 (Android))

+ warmup: 1 repeat: 5, average: 13.4116 ms, max: 14.7615 ms, min: 12.80810 ms

+ results: 3

+ Top0 Egyptian cat - 0.508929

+ Top1 tabby, tabby cat - 0.415333

+ Top2 tiger cat - 0.064347

+ Preprocess time: 3.170000 ms

+ Prediction time: 13.4116 ms

+ Postprocess time: 0.634000 ms

+ ```
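
To compare the CPU and NPU runs above without reading the numbers by hand, the `average` field can be pulled out of each log. This is a minimal sketch that assumes only the `warmup: ... average: ... ms` line format shown above; `average_ms` and `speedup` are hypothetical helper names, not part of the demo scripts.

```shell
#!/bin/sh
# Read demo output on stdin and print the first "average" latency in ms,
# e.g. "5.112500" for "warmup: 1 repeat: 15, average: 5.112500 ms, ...".
average_ms() {
  sed -n 's/.*average: \([0-9.]*\) ms.*/\1/p' | head -n 1
}

# Print the CPU/NPU latency ratio with two decimals.
# $1: CPU average in ms, $2: NPU average in ms.
speedup() {
  awk -v cpu="$1" -v npu="$2" 'BEGIN {
    if (npu == 0) exit 1          # avoid dividing by zero on a bad log
    printf "%.2f\n", cpu / npu
  }'
}
```

With the A311D averages printed above, `speedup 81.678067 5.112500` gives `15.98`, so for this model the NPU run is roughly 16 times faster than the ARM CPU run.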

+

+- To use a different test image, copy it into `PaddleLite-generic-demo/image_classification_demo/assets/images`, run `convert_to_raw_image.py` to generate the corresponding RGB raw image, and then update the IMAGE_NAME variable in `run_with_adb.sh` and `run_with_ssh.sh`.

+- Rebuild the demo program:

+ ```shell

+ Notes:

+ 1) Set the correct arguments as described in `build.sh`.

+ 2) Build inside the Docker environment.

+

+ # For A311D

+ ./build.sh linux arm64

+

+ # For S905D3 (Android)

+ ./build.sh android armeabi-v7a

+ ```

+

+### Update the model

+- Obtain the MobileNetV1 float32 model [mobilenet_v1_fp32_224](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v1_fp32_224_fluid.tar.gz) by training with Paddle or converting with X2Paddle.

+- Generate the [mobilenet_v1_int8_224_per_layer quantized model](https://paddlelite-demo.bj.bcebos.com/devices/rockchip/mobilenet_v1_int8_224_fluid.tar.gz) with Paddle + PaddleSlim post-training quantization.

+- Download [PaddleSlim-quant-demo.tar.gz](https://paddlelite-demo.bj.bcebos.com/tools/PaddleSlim-quant-demo.tar.gz); after extraction its contents are:

+ ```shell

+ - PaddleSlim-quant-demo

+ - image_classification_demo

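+ - quant_post # post-training quantization

+ - quant_post_rockchip_npu.sh # one-step quantization script; Amlogic and Rockchip both build on VeriSilicon NPUs, so the same script applies

+ - README.md # environment setup notes: choosing PaddlePaddle and PaddleSlim versions, building, and installing

+ - datasets # calibration datasets for quantization

+ - ILSVRC2012_val_100 # 100 images selected from the ImageNet2012 validation set

+ - inputs # fp32 models to quantize

+ - mobilenet_v1

+ - resnet50

+ - outputs # generated fully quantized models

+ - scripts # internal post-training quantization scripts

+ ```

+- Follow `README.md` to install PaddlePaddle and PaddleSlim.

+- Run `./quant_post_rockchip_npu.sh` to generate the mobilenet_v1_int8_224_per_layer quantized model in the `outputs` directory: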

+ ```shell

+ ----------- Configuration Arguments -----------

+ activation_bits: 8

+ activation_quantize_type: moving_average_abs_max

+ algo: KL

+ batch_nums: 10

+ batch_size: 10

+ data_dir: ../dataset/ILSVRC2012_val_100

+ is_full_quantize: 1

+ is_use_cache_file: 0

+ model_path: ../models/mobilenet_v1

+ optimize_model: 1

+ output_path: ../outputs/mobilenet_v1

+ quantizable_op_type: conv2d,depthwise_conv2d,mul

+ use_gpu: 0

+ use_slim: 1

+ weight_bits: 8

+ weight_quantize_type: abs_max

+ ------------------------------------------------

+ quantizable_op_type:['conv2d', 'depthwise_conv2d', 'mul']

+ 2021-08-30 05:52:10,048-INFO: Load model and set data loader ...

+ 2021-08-30 05:52:10,129-INFO: Optimize FP32 model ...

+ I0830 05:52:10.139564 14447 graph_pattern_detector.cc:91] --- detected 14 subgraphs

+ I0830 05:52:10.148236 14447 graph_pattern_detector.cc:91] --- detected 13 subgraphs

+ 2021-08-30 05:52:10,167-INFO: Collect quantized variable names ...

+ 2021-08-30 05:52:10,168-WARNING: feed is not supported for quantization.

+ 2021-08-30 05:52:10,169-WARNING: fetch is not supported for quantization.

+ 2021-08-30 05:52:10,170-INFO: Preparation stage ...

+ 2021-08-30 05:52:11,853-INFO: Run batch: 0

+ 2021-08-30 05:52:16,963-INFO: Run batch: 5

+ 2021-08-30 05:52:21,037-INFO: Finish preparation stage, all batch:10

+ 2021-08-30 05:52:21,048-INFO: Sampling stage ...

+ 2021-08-30 05:52:31,800-INFO: Run batch: 0

+ 2021-08-30 05:53:23,443-INFO: Run batch: 5

+ 2021-08-30 05:54:03,773-INFO: Finish sampling stage, all batch: 10

+ 2021-08-30 05:54:03,774-INFO: Calculate KL threshold ...

+ 2021-08-30 05:54:28,580-INFO: Update the program ...

+ 2021-08-30 05:54:29,194-INFO: The quantized model is saved in ../outputs/mobilenet_v1

+ post training quantization finish, and it takes 139.42292165756226.

+

+ ----------- Configuration Arguments -----------

+ batch_size: 20

+ class_dim: 1000

+ data_dir: ../dataset/ILSVRC2012_val_100

+ image_shape: 3,224,224

+ inference_model: ../outputs/mobilenet_v1

+ input_img_save_path: ./img_txt

+ save_input_img: False

+ test_samples: -1

+ use_gpu: 0

+ ------------------------------------------------

+ Testbatch 0, acc1 0.8, acc5 1.0, time 1.63 sec

+ End test: test_acc1 0.76, test_acc5 0.92

+ --------finish eval int8 model: mobilenet_v1-------------

+ ```

+ - Following the [model conversion guide](../user_guides/model_optimize_tool), use the opt tool to generate the TIM-VX model; only `valid_targets` needs to be set, to `verisilicon_timvx,arm`.

+ ```shell

+ $ ./opt --model_dir=mobilenet_v1_int8_224_per_layer \

+ --optimize_out_type=naive_buffer \

+ --optimize_out=opt_model \

+ --valid_targets=verisilicon_timvx,arm

+ ```

+### Update the Paddle Lite library with TIM-VX support

+

+- Download the Paddle Lite source code

+

+ ```shell

+ $ git clone https://github.com/PaddlePaddle/Paddle-Lite.git

+ $ cd Paddle-Lite

+ $ git checkout

+ # Note: the verisilicon_timvx code and dependencies needed for the build are downloaded automatically by the build scripts; no manual download is required.

+ ```

+

+- Build the `Paddle Lite+Verisilicon_TIMVX` deployment library

+

+ - For A311D

+ - tiny_publish build

+ ```shell

+ $ ./lite/tools/build_linux.sh --with_extra=ON --with_log=ON --with_nnadapter=ON --nnadapter_with_verisilicon_timvx=ON --nnadapter_verisilicon_timvx_src_git_tag=main --nnadapter_verisilicon_timvx_viv_sdk_url=http://paddlelite-demo.bj.bcebos.com/devices/verisilicon/sdk/viv_sdk_linux_arm64_6_4_4_3_generic.tgz

+

+ ```

+ - full_publish build

+ ```shell

+ $ ./lite/tools/build_linux.sh --with_extra=ON --with_log=ON --with_nnadapter=ON --nnadapter_with_verisilicon_timvx=ON --nnadapter_verisilicon_timvx_src_git_tag=main --nnadapter_verisilicon_timvx_viv_sdk_url=http://paddlelite-demo.bj.bcebos.com/devices/verisilicon/sdk/viv_sdk_linux_arm64_6_4_4_3_generic.tgz full_publish

+

+ ```

+ - Replace the headers and libraries

+ ```shell

+ # Replace the include directory

+ $ cp -rf build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx/include/ PaddleLite-generic-demo/libs/PaddleLite/linux/arm64/include/

+ # Replace the NNAdapter runtime library

+ $ cp -rf build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx/lib/libnnadapter.so PaddleLite-generic-demo/libs/PaddleLite/linux/arm64/lib/verisilicon_timvx/

+ # Replace the NNAdapter device HAL library

+ $ cp -rf build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx/lib/libverisilicon_timvx.so PaddleLite-generic-demo/libs/PaddleLite/linux/arm64/lib/verisilicon_timvx/

+ # Replace the VeriSilicon TIM-VX library

+ $ cp -rf build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx/lib/libtim-vx.so PaddleLite-generic-demo/libs/PaddleLite/linux/arm64/lib/verisilicon_timvx/

+ # Replace libpaddle_light_api_shared.so

+ $ cp -rf build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx/lib/libpaddle_light_api_shared.so PaddleLite-generic-demo/libs/PaddleLite/linux/arm64/lib/

+ # Replace libpaddle_full_api_shared.so (full_publish builds only)

+ $ cp -rf build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx/lib/libpaddle_full_api_shared.so PaddleLite-generic-demo/libs/PaddleLite/linux/arm64/lib/

+ ```

+

+ - For S905D3 (Android)

+ - tiny_publish build

+ ```shell

+ $ ./lite/tools/build_android.sh --arch=armv7 --toolchain=clang --android_stl=c++_shared --with_extra=ON --with_exception=ON --with_cv=ON --with_log=ON --with_nnadapter=ON --nnadapter_with_verisilicon_timvx=ON --nnadapter_verisilicon_timvx_src_git_tag=main --nnadapter_verisilicon_timvx_viv_sdk_url=http://paddlelite-demo.bj.bcebos.com/devices/verisilicon/sdk/viv_sdk_android_9_armeabi_v7a_6_4_4_3_generic.tgz

+ ```

+

+ - full_publish build

+ ```shell

+ $ ./lite/tools/build_android.sh --arch=armv7 --toolchain=clang --android_stl=c++_shared --with_extra=ON --with_exception=ON --with_cv=ON --with_log=ON --with_nnadapter=ON --nnadapter_with_verisilicon_timvx=ON --nnadapter_verisilicon_timvx_src_git_tag=main --nnadapter_verisilicon_timvx_viv_sdk_url=http://paddlelite-demo.bj.bcebos.com/devices/verisilicon/sdk/viv_sdk_android_9_armeabi_v7a_6_4_4_3_generic.tgz full_publish

+ ```

+ - Replace the headers and libraries

+ ```shell

+ # Replace the include directory

+ $ cp -rf build.lite.android.armv7.clang/inference_lite_lib.android.armv7.nnadapter/cxx/include/ PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a/include/

+ # Replace the NNAdapter runtime library

+ $ cp -rf build.lite.android.armv7.clang/inference_lite_lib.android.armv7.nnadapter/cxx/lib/libnnadapter.so PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a/lib/verisilicon_timvx/

+ # Replace the NNAdapter device HAL library

+ $ cp -rf build.lite.android.armv7.clang/inference_lite_lib.android.armv7.nnadapter/cxx/lib/libverisilicon_timvx.so PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a/lib/verisilicon_timvx/

+ # Replace the VeriSilicon TIM-VX library

+ $ cp -rf build.lite.android.armv7.clang/inference_lite_lib.android.armv7.nnadapter/cxx/lib/libtim-vx.so PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a/lib/verisilicon_timvx/

+ # Replace libpaddle_light_api_shared.so

+ $ cp -rf build.lite.android.armv7.clang/inference_lite_lib.android.armv7.nnadapter/cxx/lib/libpaddle_light_api_shared.so PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a/lib/

+ # Replace libpaddle_full_api_shared.so (full_publish builds only)

+ $ cp -rf build.lite.android.armv7.clang/inference_lite_lib.android.armv7.nnadapter/cxx/lib/libpaddle_full_api_shared.so PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a/lib/

+ ```

+

+- After replacing the headers, rebuild the demo program.
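
The per-file copy commands above follow one pattern, so they can be folded into a small helper. This is a sketch under stated assumptions: `update_timvx_libs` is a hypothetical function name, the source argument is the `cxx` directory of a build output, and the destination argument is one platform directory such as `PaddleLite-generic-demo/libs/PaddleLite/linux/arm64`. The file names are the ones listed in the commands above.

```shell
#!/bin/sh
# Copy freshly built headers and libraries into the demo's libs tree.
# $1: build output, e.g. build.lite.linux.armv8.gcc/inference_lite_lib.armlinux.armv8.nnadapter/cxx
# $2: demo platform directory, e.g. PaddleLite-generic-demo/libs/PaddleLite/linux/arm64
update_timvx_libs() {
  src="$1"
  dst="$2"
  mkdir -p "$dst/include" "$dst/lib/verisilicon_timvx" || return 1

  # Headers: copy the directory contents rather than nesting include/include.
  cp -rf "$src/include/." "$dst/include/" || return 1

  # NNAdapter runtime, device HAL, and TIM-VX libraries.
  for lib in libnnadapter.so libverisilicon_timvx.so libtim-vx.so; do
    cp -f "$src/lib/$lib" "$dst/lib/verisilicon_timvx/" || return 1
  done

  cp -f "$src/lib/libpaddle_light_api_shared.so" "$dst/lib/" || return 1

  # The full API library exists only after a full_publish build.
  if [ -f "$src/lib/libpaddle_full_api_shared.so" ]; then
    cp -f "$src/lib/libpaddle_full_api_shared.so" "$dst/lib/" || return 1
  fi
}
```

The same call works for the Android build by passing the `build.lite.android.armv7.clang/.../cxx` directory and `PaddleLite-generic-demo/libs/PaddleLite/android/armeabi-v7a` as arguments.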

+

+## Other notes

+

+- The Paddle Lite team is continuing to extend the operators and models supported through TIM-VX.

diff --git a/docs/index.rst b/docs/index.rst

index 37a923d3a1d..7bc950b092a 100644

--- a/docs/index.rst

+++ b/docs/index.rst

@@ -78,6 +78,7 @@ Welcome to Paddle-Lite's documentation!

demo_guides/baidu_xpu

demo_guides/rockchip_npu

demo_guides/amlogic_npu

+ demo_guides/verisilicon_timvx

demo_guides/mediatek_apu

demo_guides/imagination_nna

demo_guides/bitmain

diff --git a/docs/performance/benchmark.md b/docs/performance/benchmark.md

index 60f13c0c2e2..db19374b1f3 100644

--- a/docs/performance/benchmark.md

+++ b/docs/performance/benchmark.md

@@ -137,7 +137,10 @@

请参考 [Paddle Lite 使用瑞芯微 NPU 预测部署](../demo_guides/rockchip_npu)的最新性能数据

## 晶晨 NPU 的性能数据

-请参考 [Paddle Lite 使用晶晨NPU 预测部署](../demo_guides/amlogic_npu)的最新性能数据

+请参考 [Paddle Lite 使用晶晨 NPU 预测部署](../demo_guides/amlogic_npu)的最新性能数据

+

+## VeriSilicon TIM-VX performance data

+See [Paddle Lite inference deployment with VeriSilicon TIM-VX](../demo_guides/verisilicon_timvx) for the latest performance data.

## 联发科 APU 的性能数据

请参考 [Paddle Lite 使用联发科 APU 预测部署](../demo_guides/mediatek_apu)的最新性能数据

diff --git a/docs/quick_start/support_model_list.md b/docs/quick_start/support_model_list.md

index fd24a5b5d78..5c65c3176ff 100644

--- a/docs/quick_start/support_model_list.md

+++ b/docs/quick_start/support_model_list.md

@@ -6,7 +6,7 @@

| 类别 | 类别细分 | 模型 | 支持平台 |

|-|-|:-|:-|

-| CV | 分类 | [MobileNetV1](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v1_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, RockchipNPU, MediatekAPU, KunlunxinXPU, HuaweiAscendNPU |

+| CV | 分类 | [MobileNetV1](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v1_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, RockchipNPU, MediatekAPU, KunlunxinXPU, HuaweiAscendNPU, VerisiliconTIMVX |

| CV | 分类 | [MobileNetV2](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v2_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, KunlunxinXPU, HuaweiAscendNPU |

| CV | 分类 | [MobileNetV3_large](https://paddle-inference-dist.bj.bcebos.com/AI-Rank/mobile/MobileNetV3_large_x1_0.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiAscendNPU |

| CV | 分类 | [MobileNetV3_small](https://paddle-inference-dist.bj.bcebos.com/AI-Rank/mobile/MobileNetV3_small_x1_0.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiAscendNPU |

@@ -19,8 +19,8 @@

| CV | 分类 | HRNet_W18_C | ARM, X86 |

| CV | 分类 | RegNetX_4GF | ARM, X86 |

| CV | 分类 | Xception41 | ARM, X86 |

-| CV | 分类 | [ResNet18](https://paddlelite-demo.bj.bcebos.com/models/resnet18_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, RockchipNPU, KunlunxinXPU, HuaweiAscendNPU |

-| CV | 分类 | [ResNet50](https://paddlelite-demo.bj.bcebos.com/models/resnet50_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, RockchipNPU, KunlunxinXPU, HuaweiAscendNPU |

+| CV | 分类 | [ResNet18](https://paddlelite-demo.bj.bcebos.com/models/resnet18_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, RockchipNPU, KunlunxinXPU, HuaweiAscendNPU, VerisiliconTIMVX |

+| CV | 分类 | [ResNet50](https://paddlelite-demo.bj.bcebos.com/models/resnet50_fp32_224_fluid.tar.gz) | ARM, X86, GPU(OPENCL,METAL), HuaweiKirinNPU, RockchipNPU, KunlunxinXPU, HuaweiAscendNPU, VerisiliconTIMVX |

| CV | 分类 | [ResNet101](https://paddlelite-demo.bj.bcebos.com/NNAdapter/models/PaddleClas/ResNet101.tgz) | ARM, X86, HuaweiKirinNPU, RockchipNPU, KunlunxinXPU, HuaweiAscendNPU |

| CV | 分类 | [ResNeXt50](https://paddlelite-demo.bj.bcebos.com/NNAdapter/models/PaddleClas/ResNeXt50_32x4d.tgz) | ARM, X86, HuaweiAscendNPU |

| CV | 分类 | [MnasNet](https://paddlelite-demo.bj.bcebos.com/models/mnasnet_fp32_224_fluid.tar.gz)| ARM, HuaweiKirinNPU, HuaweiAscendNPU |

@@ -32,7 +32,7 @@

| CV | 分类 | VGG16 | ARM, X86, GPU(OPENCL), KunlunxinXPU, HuaweiAscendNPU |

| CV | 分类 | VGG19 | ARM, X86, GPU(OPENCL,METAL), KunlunxinXPU, HuaweiAscendNPU|

| CV | 分类 | GoogleNet | ARM, X86, KunlunxinXPU, HuaweiAscendNPU |

-| CV | 检测 | [SSD-MobileNetV1](https://paddlelite-demo.bj.bcebos.com/models/ssd_mobilenet_v1_pascalvoc_fp32_300_fluid.tar.gz) | ARM, HuaweiKirinNPU*, HuaweiAscendNPU* |

+| CV | 检测 | [SSD-MobileNetV1](https://paddlelite-demo.bj.bcebos.com/models/ssd_mobilenet_v1_pascalvoc_fp32_300_fluid.tar.gz) | ARM, HuaweiKirinNPU*, HuaweiAscendNPU*, VerisiliconTIMVX* |

| CV | 检测 | [SSD-MobileNetV3-large](https://paddle-inference-dist.bj.bcebos.com/AI-Rank/mobile/ssdlite_mobilenet_v3_large.tar.gz) | ARM, X86, GPU(OPENCL,METAL) |

| CV | 检测 | [SSD-VGG16](https://paddlelite-demo.bj.bcebos.com/NNAdapter/models/PaddleDetection/ssd_vgg16_300_240e_voc.tgz) | ARM, X86, HuaweiAscendNPU* |

| CV | 检测 | [YOLOv3-DarkNet53](https://paddlelite-demo.bj.bcebos.com/NNAdapter/models/PaddleDetection/yolov3_darknet53_270e_coco.tgz) | ARM, X86, HuaweiAscendNPU* |

diff --git a/docs/quick_start/support_operation_list.md b/docs/quick_start/support_operation_list.md

index a85d9b708ff..2c98239f2a6 100644

--- a/docs/quick_start/support_operation_list.md

+++ b/docs/quick_start/support_operation_list.md

@@ -10,99 +10,99 @@ Host 端 Kernel 是算子在任意 CPU 上纯 C/C++ 的具体实现,具有可

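Taking the ARM CPU as an example: if an operator in the model has no ARM kernel but does have a Host kernel, the model optimization stage selects the Host kernel for that operator, and the model can still be deployed.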

-| OP_name| ARM | OpenCL | Metal | 昆仑芯XPU | Host | X86 | 比特大陆 | 英特尔FPGA | 寒武纪mlu | 华为昇腾NPU | 联发科APU | 瑞芯微NPU | 华为麒麟NPU | 颖脉NNA | 晶晨NPU |

-|-:|-| -| -| -| -| -| -| -| -| -| -| -| -| -| -|

-| affine_channel|Y| | | | | | | | | | | | | | |

-| affine_grid|Y| | | | | | | | | | | | | | |

-| arg_max|Y|Y| |Y|Y| | | |Y|Y| | | | | |

-| assign_value| | | |Y|Y| |Y| | |Y| | | | | |

-| batch_norm|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| bilinear_interp|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |

-| bilinear_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |

-| box_coder|Y|Y|Y|Y|Y|Y|Y| | | | | | | | |

-| calib|Y| | |Y| | | | |Y| | | | | | |

-| cast| | | |Y|Y| |Y| |Y|Y| | | | | |

-| concat|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

-| conv2d_transpose|Y|Y|Y|Y| |Y|Y| | |Y| | | | |Y|

-| density_prior_box| | | |Y|Y|Y|Y| | | | | | | | |

-| depthwise_conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

-| depthwise_conv2d_transpose| |Y| | | | |Y| | | | | | | | |

-| dropout|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| elementwise_add|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| elementwise_div|Y| | |Y| |Y|Y| | |Y|Y|Y|Y| |Y|

-| elementwise_floordiv|Y| | | | |Y| | | | | | | | | |

-| elementwise_max|Y| | |Y| |Y| | | |Y| | | | | |

-| elementwise_min|Y| | | | |Y| | | |Y| | | | | |

-| elementwise_mod|Y| | | | |Y| | | | | | | | | |

-| elementwise_mul|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| elementwise_pow|Y| | | | |Y| | | |Y| | | | | |

-| elementwise_sub|Y|Y|Y|Y| |Y|Y| | |Y|Y|Y|Y| |Y|

-| elu|Y| | | |Y| | | | | | | | | | |

-| erf|Y| | | | | | | | | | | | | | |

-| expand| |Y| | |Y| | | | | | | | | | |

-| expand_as| | | | |Y| | | | | | | | | | |

-| fc|Y|Y|Y| | |Y| | |Y|Y|Y|Y|Y|Y|Y|

-| feed| | |Y| |Y| | | | | | | | | | |

-| fetch| | |Y| |Y| | | | | | | | | | |

-| fill_constant| | | |Y|Y| |Y| | |Y| | | | | |

-| fill_constant_batch_size_like| | | |Y|Y| | | | | | | | | | |

-| flatten| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| flatten2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| flatten_contiguous_range| | | |Y|Y| | | | |Y|Y|Y|Y| |Y|

-|fusion_elementwise_add_activation|Y|Y|Y| | |Y| | | |Y|Y|Y|Y| |Y|

-|fusion_elementwise_div_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y|

-|fusion_elementwise_max_activation|Y| | | | |Y| | | |Y| | | | | |

-|fusion_elementwise_min_activation|Y| | | | |Y| | | |Y| | | | | |

-|fusion_elementwise_mul_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y|

-|fusion_elementwise_pow_activation| | | | | | | | | |Y| | | | | |

-|fusion_elementwise_sub_activation|Y|Y| | | |Y| | | |Y|Y|Y|Y| |Y|

-| grid_sampler|Y|Y| |Y| |Y| | | | | | | | | |

-| instance_norm|Y|Y| |Y| |Y| | | |Y| | | | | |

-| io_copy| |Y|Y|Y| | | | |Y| | | | | | |

-| io_copy_once| |Y|Y|Y| | | | | | | | | | | |

-| layout|Y|Y| | | |Y| | |Y| | | | | | |

-| layout_once|Y|Y| | | | | | | | | | | | | |

-| leaky_relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y| | | | | |

-| lod_array_length| | | | |Y| | | | | | | | | | |

-| matmul|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |

-| mul|Y| | |Y| |Y|Y| | | | | | | | |

-| multiclass_nms|Y| | | |Y| |Y| | | | | | | | |

-| multiclass_nms2|Y| | | |Y| |Y| | | | | | | | |

-| multiclass_nms3|Y| | | |Y| | | | | | | | | | |

-| nearest_interp|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| nearest_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |

-| pad2d|Y|Y|Y|Y|Y| | | | |Y| | | | | |

-| pool2d|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|

-| prelu|Y|Y| |Y|Y| | | | |Y| | | | | |

-| prior_box|Y| | |Y|Y| |Y| | | | | | | | |

-| range| | | | |Y| | | | |Y| | | | | |

-| reduce_mean|Y|Y| |Y| |Y|Y| | |Y| | | | | |

-| relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|

-| relu6|Y|Y|Y|Y|Y|Y| | |Y|Y|Y|Y|Y|Y|Y|

-| reshape| |Y| |Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| reshape2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| scale|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| search_fc| | | |Y| | | | | | | | | | | |

-| sequence_topk_avg_pooling| | | |Y| |Y| | | | | | | | | |

-| shuffle_channel|Y|Y|Y| |Y| | | | | | | | | | |

-| sigmoid|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y| |Y|

-| slice|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| softmax|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|

-| softplus|Y| | | | | | | | | | | | | | |

-| squeeze| |Y| |Y|Y| |Y| |Y|Y| | | | | |

-| squeeze2| |Y| |Y|Y| |Y| |Y|Y| | | | | |

-| stack| | | |Y|Y|Y| | | |Y| | | | | |

-| subgraph| | | | | | | | |Y| | | | | | |

-| sync_batch_norm|Y|Y| | | |Y| | | | | | | | | |

-| tanh|Y|Y| |Y|Y|Y| | | |Y|Y|Y|Y| |Y|

-| thresholded_relu|Y| | | |Y| | | | | | | | | | |

-| transpose|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| transpose2|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| unsqueeze| |Y| |Y|Y| | | | |Y| | | | | |

-| unsqueeze2| |Y| |Y|Y| | | | |Y| | | | | |

-| write_back| | | | |Y| | | | | | | | | | |

-| yolo_box|Y|Y|Y|Y|Y| |Y| | | | | | | | |

+| OP_name| ARM | OpenCL | Metal | Kunlunxin XPU | Host | X86 | Bitmain | Intel FPGA | Cambricon MLU | Huawei Ascend NPU | MediaTek APU | Rockchip NPU | Huawei Kirin NPU | Imagination NNA | Amlogic NPU | VeriSilicon TIM-VX |

+|-:|-| -| -| -| -| -| -| -| -| -| -| -| -| -| -| -|

+| affine_channel|Y| | | | | | | | | | | | | | | |

+| affine_grid|Y| | | | | | | | | | | | | | | |

+| arg_max|Y|Y| |Y|Y| | | |Y|Y| | | | | | |

+| assign_value| | | |Y|Y| |Y| | |Y| | | | | | |

+| batch_norm|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| bilinear_interp|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |Y|

+| bilinear_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |Y|

+| box_coder|Y|Y|Y|Y|Y|Y|Y| | | | | | | | | |

+| calib|Y| | |Y| | | | |Y| | | | | | | |

+| cast| | | |Y|Y| |Y| |Y|Y| | | | | | |

+| concat|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

+| conv2d_transpose|Y|Y|Y|Y| |Y|Y| | |Y| | | | |Y|Y|

+| density_prior_box| | | |Y|Y|Y|Y| | | | | | | | | |

+| depthwise_conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

+| depthwise_conv2d_transpose| |Y| | | | |Y| | | | | | | | | |

+| dropout|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| elementwise_add|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| elementwise_div|Y| | |Y| |Y|Y| | |Y|Y|Y|Y| |Y|Y|

+| elementwise_floordiv|Y| | | | |Y| | | | | | | | | | |

+| elementwise_max|Y| | |Y| |Y| | | |Y| | | | | |Y|

+| elementwise_min|Y| | | | |Y| | | |Y| | | | | |Y|

+| elementwise_mod|Y| | | | |Y| | | | | | | | | | |

+| elementwise_mul|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| elementwise_pow|Y| | | | |Y| | | |Y| | | | | |Y|

+| elementwise_sub|Y|Y|Y|Y| |Y|Y| | |Y|Y|Y|Y| |Y|Y|

+| elu|Y| | | |Y| | | | | | | | | | | |

+| erf|Y| | | | | | | | | | | | | | | |

+| expand| |Y| | |Y| | | | | | | | | | | |

+| expand_as| | | | |Y| | | | | | | | | | | |

+| fc|Y|Y|Y| | |Y| | |Y|Y|Y|Y|Y|Y|Y|Y|

+| feed| | |Y| |Y| | | | | | | | | | | |

+| fetch| | |Y| |Y| | | | | | | | | | | |

+| fill_constant| | | |Y|Y| |Y| | |Y| | | | | | |

+| fill_constant_batch_size_like| | | |Y|Y| | | | | | | | | | |Y|

+| flatten| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| flatten2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| flatten_contiguous_range| | | |Y|Y| | | | |Y|Y|Y|Y| |Y|Y|

+|fusion_elementwise_add_activation|Y|Y|Y| | |Y| | | |Y|Y|Y|Y| |Y| |

+|fusion_elementwise_div_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y| |

+|fusion_elementwise_max_activation|Y| | | | |Y| | | |Y| | | | | | |

+|fusion_elementwise_min_activation|Y| | | | |Y| | | |Y| | | | | | |

+|fusion_elementwise_mul_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y| |

+|fusion_elementwise_pow_activation| | | | | | | | | |Y| | | | | | |

+|fusion_elementwise_sub_activation|Y|Y| | | |Y| | | |Y|Y|Y|Y| |Y| |

+| grid_sampler|Y|Y| |Y| |Y| | | | | | | | | | |

+| instance_norm|Y|Y| |Y| |Y| | | |Y| | | | | | |

+| io_copy| |Y|Y|Y| | | | |Y| | | | | | | |

+| io_copy_once| |Y|Y|Y| | | | | | | | | | | | |

+| layout|Y|Y| | | |Y| | |Y| | | | | | | |

+| layout_once|Y|Y| | | | | | | | | | | | | | |

+| leaky_relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y| | | | | |Y|

+| lod_array_length| | | | |Y| | | | | | | | | | | |

+| matmul|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |Y|

+| mul|Y| | |Y| |Y|Y| | | | | | | | | |

+| multiclass_nms|Y| | | |Y| |Y| | | | | | | | | |

+| multiclass_nms2|Y| | | |Y| |Y| | | | | | | | | |

+| multiclass_nms3|Y| | | |Y| | | | | | | | | | | |

+| nearest_interp|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| nearest_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |Y|

+| pad2d|Y|Y|Y|Y|Y| | | | |Y| | | | | | |

+| pool2d|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|

+| prelu|Y|Y| |Y|Y| | | | |Y| | | | | | |

+| prior_box|Y| | |Y|Y| |Y| | | | | | | | | |

+| range| | | | |Y| | | | |Y| | | | | | |

+| reduce_mean|Y|Y| |Y| |Y|Y| | |Y| | | | | | |

+| relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|

+| relu6|Y|Y|Y|Y|Y|Y| | |Y|Y|Y|Y|Y|Y|Y|Y|

+| reshape| |Y| |Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| reshape2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| scale|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| search_fc| | | |Y| | | | | | | | | | | | |

+| sequence_topk_avg_pooling| | | |Y| |Y| | | | | | | | | | |

+| shuffle_channel|Y|Y|Y| |Y| | | | | | | | | | |Y|

+| sigmoid|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| slice|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| softmax|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|

+| softplus|Y| | | | | | | | | | | | | | | |

+| squeeze| |Y| |Y|Y| |Y| |Y|Y| | | | | |Y|

+| squeeze2| |Y| |Y|Y| |Y| |Y|Y| | | | | |Y|

+| stack| | | |Y|Y|Y| | | |Y| | | | | | |

+| subgraph| | | | | | | | |Y| | | | | | | |

+| sync_batch_norm|Y|Y| | | |Y| | | | | | | | | | |

+| tanh|Y|Y| |Y|Y|Y| | | |Y|Y|Y|Y| |Y| |

+| thresholded_relu|Y| | | |Y| | | | | | | | | | | |

+| transpose|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| transpose2|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| unsqueeze| |Y| |Y|Y| | | | |Y| | | | | |Y|

+| unsqueeze2| |Y| |Y|Y| | | | |Y| | | | | |Y|

+| write_back| | | | |Y| | | | | | | | | | | |

+| yolo_box|Y|Y|Y|Y|Y| |Y| | | | | | | | | |

### Extra operators

@@ -110,273 +110,273 @@ Host 端 Kernel 是算子在任意 CPU 上纯 C/C++ 的具体实现,具有可

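Including the extra operators, there are 269 operators in total. The extra operators are built only when the `--with_extra=ON` switch is enabled at compile time; see [compile options](../source_compile/compile_options) for details.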

-| OP_name| ARM | OpenCL | Metal | Kunlunxin XPU | Host | X86 | Bitmain | Intel FPGA | Cambricon MLU | Huawei Ascend NPU | MediaTek APU | Rockchip NPU | Huawei Kirin NPU | Imagination NNA | Amlogic NPU |

-|-:|-| -| -| -| -| -| -| -| -| -| -| -| -| -| -|

-| abs|Y|Y| |Y|Y| | | | |Y| | | | | |

-| affine_channel|Y| | | | | | | | | | | | | | |

-| affine_grid|Y| | | | | | | | | | | | | | |

-| anchor_generator| | | |Y|Y| | | | | | | | | | |

-| arg_max|Y|Y| |Y|Y| | | |Y|Y| | | | | |

-| arg_min| | | | | | | | | |Y| | | | | |

-| argsort| | | | |Y| | | | | | | | | | |

-| assign| | | |Y|Y| | | | |Y| | | | | |

-| assign_value| | | |Y|Y| |Y| | |Y| | | | | |

-| attention_padding_mask| | | | | | | | | | | | | | | |

-| axpy|Y| | | | | | | | | | | | | | |

-| batch_norm|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| beam_search| | | | |Y| | | | | | | | | | |

-| beam_search_decode| | | | |Y| | | | | | | | | | |

-| bilinear_interp|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |

-| bilinear_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |

-| bmm| | | |Y| | | | | | | | | | | |

-| box_clip| | | |Y|Y| | | | | | | | | | |

-| box_coder|Y|Y|Y|Y|Y|Y|Y| | | | | | | | |

-| calib|Y| | |Y| | | | |Y| | | | | | |

-| calib_once|Y| | |Y| | | | |Y| | | | | | |

-| cast| | | |Y|Y| |Y| |Y|Y| | | | | |

-| clip|Y|Y| |Y| |Y| | | |Y| | | | | |

-| collect_fpn_proposals| | | | |Y| | | | | | | | | | |

-| concat|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| conditional_block| | | | |Y| | | | | | | | | | |

-| conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

-| conv2d_transpose|Y|Y|Y|Y| |Y|Y| | |Y| | | | |Y|

-| correlation| | | |Y|Y| | | | | | | | | | |

-| cos| |Y| | |Y| | | | | | | | | | |

-| cos_sim| | | | |Y| | | | | | | | | | |

-| crf_decoding| | | | |Y| | | | | | | | | | |

-| crop| | | | |Y| | | | | | | | | | |

-| crop_tensor| | | | |Y| | | | | | | | | | |

-| ctc_align| | | | |Y| | | | | | | | | | |

-| cumsum| | | | |Y| | | | |Y| | | | | |

-| decode_bboxes|Y| | | | | | | | | | | | | | |

-| deformable_conv|Y| | | |Y| | | | |Y| | | | | |

-| density_prior_box| | | |Y|Y|Y|Y| | | | | | | | |

-| depthwise_conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

-| depthwise_conv2d_transpose| |Y| | | | |Y| | | | | | | | |

-| distribute_fpn_proposals| | | | |Y| | | | | | | | | | |

-| dropout|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| elementwise_add|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| elementwise_div|Y| | |Y| |Y|Y| | |Y|Y|Y|Y| |Y|

-| elementwise_floordiv|Y| | | | |Y| | | | | | | | | |

-| elementwise_max|Y| | |Y| |Y| | | |Y| | | | | |

-| elementwise_min|Y| | | | |Y| | | |Y| | | | | |

-| elementwise_mod|Y| | | | |Y| | | | | | | | | |

-| elementwise_mul|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| elementwise_pow|Y| | | | |Y| | | |Y| | | | | |

-| elementwise_sub|Y|Y|Y|Y| |Y|Y| | |Y|Y|Y|Y| |Y|

-| elu|Y| | | |Y| | | | | | | | | | |

-| equal| | | | |Y| | | | |Y| | | | | |

-| erf|Y| | | | | | | | | | | | | | |

-| exp|Y|Y|Y|Y|Y| | | | |Y| | | | | |

-| expand| |Y| | |Y| | | | | | | | | | |

-| expand_as| | | | |Y| | | | | | | | | | |

-| expand_v2| | | |Y|Y| | | | |Y| | | | | |

-| fake_channel_wise_dequantize_max_abs| | | | | | | | | | | | | | | |

-| fake_channel_wise_quantize_dequantize_abs_max| | | | | | | | | | | | | | | |

-| fake_dequantize_max_abs| | | | | | | | | | | | | | | |

-| fake_quantize_abs_max| | | | | | | | | | | | | | | |

-| fake_quantize_dequantize_abs_max| | | | | | | | | | | | | | | |

-|fake_quantize_dequantize_moving_average_abs_max| | | | | | | | | | | | | | | |

-| fake_quantize_moving_average_abs_max| | | | | | | | | | | | | | | |

-| fake_quantize_range_abs_max| | | | | | | | | | | | | | | |

-| fc|Y|Y|Y| | |Y| | |Y|Y|Y|Y|Y|Y|Y|

-| feed| | |Y| |Y| | | | | | | | | | |

-| fetch| | |Y| |Y| | | | | | | | | | |

-| fill_any_like| | | |Y|Y| | | | |Y| | | | | |

-| fill_constant| | | |Y|Y| |Y| | |Y| | | | | |

-| fill_constant_batch_size_like| | | |Y|Y| | | | | | | | | | |

-| fill_zeros_like| | | |Y|Y| | | | | | | | | | |

-| flatten| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| flatten2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| flatten_contiguous_range| | | |Y|Y| | | | |Y|Y|Y|Y| |Y|

-| flip| | | | |Y| | | | | | | | | | |

-| floor|Y| | | |Y| | | | | | | | | | |

-| fusion_elementwise_add_activation|Y|Y|Y| | |Y| | | |Y|Y|Y|Y| |Y|

-| fusion_elementwise_div_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y|

-| fusion_elementwise_max_activation|Y| | | | |Y| | | |Y| | | | | |

-| fusion_elementwise_min_activation|Y| | | | |Y| | | |Y| | | | | |

-| fusion_elementwise_mul_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y|

-| fusion_elementwise_pow_activation| | | | | | | | | |Y| | | | | |

-| fusion_elementwise_sub_activation|Y|Y| | | |Y| | | |Y|Y|Y|Y| |Y|

-| gather|Y| | |Y|Y|Y| | |Y| | | | | | |

-| gather_nd| | | | |Y| | | | | | | | | | |

-| gather_tree| | | | |Y| | | | | | | | | | |

-| gelu|Y| | |Y| |Y| | | |Y| | | | | |

-| generate_proposals| | | |Y|Y| | | | | | | | | | |

-| generate_proposals_v2|Y| | | | | | | | | | | | | | |

-| greater_equal| | | | |Y| | | | |Y| | | | | |

-| greater_than| |Y| | |Y| | | | |Y| | | | | |

-| grid_sampler|Y|Y| |Y| |Y| | | | | | | | | |

-| group_norm|Y| | | | |Y| | | | | | | | | |

-| gru|Y| | |Y| |Y| | | | | | | | | |

-| gru_unit|Y| | |Y| |Y| | | | | | | | | |

-| hard_sigmoid|Y|Y|Y|Y|Y| |Y| | |Y| | | | | |

-| hard_swish|Y|Y|Y|Y|Y|Y|Y| | |Y| | | | | |

-| im2sequence|Y| | |Y| | |Y| | | | | | | | |

-| increment| | | |Y|Y| | | | | | | | | | |

-| index_select| | | | |Y| | | | | | | | | | |

-| instance_norm|Y|Y| |Y| |Y| | | |Y| | | | | |

-| inverse| | | | |Y| | | | | | | | | | |

-| io_copy| |Y|Y|Y| | | | |Y| | | | | | |

-| io_copy_once| |Y|Y|Y| | | | | | | | | | | |

-| is_empty| | | |Y|Y| | | | | | | | | | |

-| layer_norm|Y| | |Y| |Y| | | |Y| | | | | |

-| layout|Y|Y| | | |Y| | |Y| | | | | | |

-| layout_once|Y|Y| | | | | | | | | | | | | |

-| leaky_relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y| | | | | |

-| less_equal| | | | |Y| | | | |Y| | | | | |

-| less_than| | | |Y|Y| | | | |Y| | | | | |

-| linspace| | | | |Y| | | | | | | | | | |

-| lod_array_length| | | | |Y| | | | | | | | | | |

-| lod_reset| | | | |Y| | | | | | | | | | |

-| log|Y| | |Y|Y| | | | |Y| | | | | |

-| logical_and| | | |Y|Y| | | | | | | | | | |

-| logical_not| | | |Y|Y| | | | | | | | | | |

-| logical_or| | | | |Y| | | | | | | | | | |

-| logical_xor| | | | |Y| | | | | | | | | | |

-| lookup_table|Y| | |Y| |Y| | | | | | | | | |

-| lookup_table_dequant|Y| | | | | | | | | | | | | | |

-| lookup_table_v2|Y| | |Y| |Y| | | |Y| | | | | |

-| lrn|Y|Y| |Y| | | | |Y| | | | | | |

-| lstm|Y| | | | | | | | | | | | | | |

-| match_matrix_tensor| | | |Y| |Y| | | | | | | | | |

-| matmul|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |

-| matmul_v2|Y|Y| |Y| | | | | |Y| | | | | |

-| matrix_nms| | | | |Y| | | | | | | | | | |

-| max_pool2d_with_index| | | |Y| | |Y| | | | | | | | |

-| mean|Y| | | | | | | | | | | | | | |

-| merge_lod_tensor|Y| | | | | | | | | | | | | | |

-| meshgrid| | | | |Y| | | | | | | | | | |

-| mish|Y| | | | |Y| | | | | | | | | |

-| mul|Y| | |Y| |Y|Y| | | | | | | | |

-| multiclass_nms|Y| | | |Y| |Y| | | | | | | | |

-| multiclass_nms2|Y| | | |Y| |Y| | | | | | | | |

-| multiclass_nms3|Y| | | |Y| | | | | | | | | | |

-| nearest_interp|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| nearest_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |

-| negative|Y| | | | | | | | | | | | | | |

-| norm|Y| | |Y|Y| |Y| |Y|Y| | | | | |

-| not_equal| | | | |Y| | | | |Y| | | | | |

-| one_hot| | | | |Y| | | | | | | | | | |

-| one_hot_v2| | | | |Y| | | | | | | | | | |

-| p_norm|Y| | | |Y| | | | |Y| | | | | |

-| pad2d|Y|Y|Y|Y|Y| | | | |Y| | | | | |

-| pad3d| | | | |Y| | | | |Y| | | | | |

-| pixel_shuffle|Y|Y| |Y|Y| | | | | | | | | | |

-| polygon_box_transform| | | | |Y| | | | | | | | | | |

-| pool2d|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|

-| pow|Y| | |Y| |Y| | | |Y| | | | | |

-| prelu|Y|Y| |Y|Y| | | | |Y| | | | | |

-| print| | | | |Y| | | | | | | | | | |

-| prior_box|Y| | |Y|Y| |Y| | | | | | | | |

-| range| | | | |Y| | | | |Y| | | | | |

-| read_from_array| | | |Y|Y| | | | | | | | | | |

-| reciprocal|Y| | |Y|Y| | | | | | | | | | |

-| reduce_all| | | |Y|Y| | | | | | | | | | |

-| reduce_any| | | |Y|Y| | | | | | | | | | |

-| reduce_max|Y|Y| |Y| |Y|Y| | | | | | | | |

-| reduce_mean|Y|Y| |Y| |Y|Y| | |Y| | | | | |

-| reduce_min|Y| | |Y| |Y| | | | | | | | | |

-| reduce_prod|Y| | |Y| |Y| | | | | | | | | |

-| reduce_sum|Y| | |Y| |Y|Y| | | | | | | | |

-| relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|

-| relu6|Y|Y|Y|Y|Y|Y| | |Y|Y|Y|Y|Y|Y|Y|

-| relu_clipped|Y| | | |Y| | | | | | | | | | |

-| reshape| |Y| |Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| reshape2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|

-| retinanet_detection_output| | | | |Y| | | | | | | | | | |

-| reverse| | | | |Y| | | | | | | | | | |

-| rnn|Y| | |Y| |Y| | | | | | | | | |

-| roi_align| | | |Y|Y| | | | | | | | | | |

-| roi_perspective_transform| | | | |Y| | | | | | | | | | |

-| rsqrt|Y|Y| |Y|Y|Y| | | | | | | | | |

-| scale|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| scatter|Y| | | | | | | | | | | | | | |

-| scatter_nd_add| | | | |Y| | | | | | | | | | |

-| search_aligned_mat_mul| | | | | |Y| | | | | | | | | |

-| search_attention_padding_mask| | | | | |Y| | | | | | | | | |

-| search_fc| | | |Y| | | | | | | | | | | |

-| search_grnn| | | |Y| |Y| | | | | | | | | |

-| search_group_padding| | | | | |Y| | | | | | | | | |

-| search_seq_arithmetic| | | |Y| |Y| | | | | | | | | |

-| search_seq_depadding| | | | | |Y| | | | | | | | | |

-| search_seq_fc| | | | | |Y| | | | | | | | | |

-| search_seq_softmax| | | | | |Y| | | | | | | | | |

-| select_input| | | | |Y| | | | | | | | | | |

-| sequence_arithmetic| | | |Y| |Y| | | | | | | | | |

-| sequence_concat| | | |Y| |Y| | | | | | | | | |

-| sequence_conv|Y| | | | |Y| | | | | | | | | |

-| sequence_expand| | | | |Y| | | | | | | | | | |

-| sequence_expand_as|Y| | | | |Y| | | | | | | | | |

-| sequence_mask| | | |Y|Y| | | | | | | | | | |

-| sequence_pad| | | |Y|Y| | | | | | | | | | |

-| sequence_pool|Y| | |Y| |Y| | | | | | | | | |

-| sequence_reshape| | | | | |Y| | | | | | | | | |

-| sequence_reverse| | | |Y| |Y| | | | | | | | | |

-| sequence_softmax| | | | |Y| | | | | | | | | | |

-| sequence_topk_avg_pooling| | | |Y| |Y| | | | | | | | | |

-| sequence_unpad| | | |Y|Y| | | | | | | | | | |

-| shape| |Y| |Y|Y| |Y| | |Y| | | | | |

-| shuffle_channel|Y|Y|Y| |Y| | | | | | | | | | |

-| sigmoid|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y| |Y|

-| sign|Y| | |Y| | | | | | | | | | | |

-| sin| |Y| | |Y| | | | | | | | | | |

-| slice|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |

-| softmax|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|

-| softplus|Y| | | | | | | | | | | | | | |

-| softsign| | | |Y| |Y| | | | | | | | | |

-| sparse_conv2d|Y| | | | | | | | | | | | | | |

-| split| |Y|Y|Y|Y| |Y| |Y|Y| | |Y| | |

-| split_lod_tensor|Y| | | | | | | | | | | | | | |

-| sqrt|Y|Y| |Y| |Y|Y| | | | | | | | |

-| square|Y|Y| |Y|Y|Y|Y| | | | | | | | |

-| squeeze| |Y| |Y|Y| |Y| |Y|Y| | | | | |

-| squeeze2| |Y| |Y|Y| |Y| |Y|Y| | | | | |

-| stack| | | |Y|Y|Y| | | |Y| | | | | |

-| strided_slice| | | | |Y| | | | | | | | | | |

-| subgraph| | | | | | | | |Y| | | | | | |

-| sum|Y| | |Y| | | | | | | | | | | |

-| swish|Y|Y|Y|Y|Y| |Y| | |Y| | | | | |

-| sync_batch_norm|Y|Y| | | |Y| | | | | | | | | |

-| tanh|Y|Y| |Y|Y|Y| | | |Y|Y|Y|Y| |Y|

-| tensor_array_to_tensor| | | | |Y| | | | | | | | | | |

-| thresholded_relu|Y| | | |Y| | | | | | | | | | |

-| tile| | | | |Y| | | | | | | | | | |

-| top_k| | | |Y|Y| | | | |Y| | | | | |

-| top_k_v2| | | | |Y| | | | |Y| | | | | |

-| transpose|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| transpose2|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|

-| tril_triu| | | | |Y| | | | | | | | | | |

-| uniform_random| | | | |Y| | | | | | | | | | |

-| unique_with_counts| | | | |Y| | | | | | | | | | |

-| unsqueeze| |Y| |Y|Y| | | | |Y| | | | | |

-| unsqueeze2| |Y| |Y|Y| | | | |Y| | | | | |

-| unstack| | | |Y|Y| | | | | | | | | | |

-| var_conv_2d| | | |Y| |Y| | | | | | | | | |

-| where| | | | |Y| | | | | | | | | | |

-| where_index| | | | |Y| | | | | | | | | | |

-| while| | | | |Y| | | | | | | | | | |

-| write_back| | | | |Y| | | | | | | | | | |

-| write_to_array| | | |Y|Y| | | | | | | | | | |

-| yolo_box|Y|Y|Y|Y|Y| |Y| | | | | | | | |

-| __xpu__bigru| | | |Y| | | | | | | | | | | |

-| __xpu__conv2d| | | |Y| | | | | | | | | | | |

-| __xpu__dynamic_lstm_fuse_op| | | |Y| | | | | | | | | | | |

-| __xpu__embedding_with_eltwise_add| | | |Y| | | | | | | | | | | |

-| __xpu__fc| | | |Y| | | | | | | | | | | |

-| __xpu__generate_sequence| | | |Y| | | | | | | | | | | |

-| __xpu__logit| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_bid_emb_att| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_bid_emb_grnn_att| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_bid_emb_grnn_att2| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_match_conv_topk| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_merge_all| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_search_attention| | | |Y| | | | | | | | | | | |

-| __xpu__mmdnn_search_attention2| | | |Y| | | | | | | | | | | |

-| __xpu__multi_encoder| | | |Y| | | | | | | | | | | |

-| __xpu__multi_softmax| | | |Y| | | | | | | | | | | |

-| __xpu__resnet50| | | |Y| | | | | | | | | | | |

-| __xpu__resnet_cbam| | | |Y| | | | | | | | | | | |

-| __xpu__sfa_head| | | |Y| | | | | | | | | | | |

-| __xpu__softmax_topk| | | |Y| | | | | | | | | | | |

-| __xpu__squeeze_excitation_block| | | |Y| | | | | | | | | | | |

+| OP_name| ARM | OpenCL | Metal | Kunlunxin XPU | Host | X86 | Bitmain | Intel FPGA | Cambricon MLU | Huawei Ascend NPU | MediaTek APU | Rockchip NPU | Huawei Kirin NPU | Imagination NNA | Amlogic NPU | Verisilicon TIM-VX |

+|-:|-| -| -| -| -| -| -| -| -| -| -| -| -| -| -| -|

+| abs|Y|Y| |Y|Y| | | | |Y| | | | | | |

+| affine_channel|Y| | | | | | | | | | | | | | | |

+| affine_grid|Y| | | | | | | | | | | | | | | |

+| anchor_generator| | | |Y|Y| | | | | | | | | | | |

+| arg_max|Y|Y| |Y|Y| | | |Y|Y| | | | | | |

+| arg_min| | | | | | | | | |Y| | | | | | |

+| argsort| | | | |Y| | | | | | | | | | | |

+| assign| | | |Y|Y| | | | |Y| | | | | | |

+| assign_value| | | |Y|Y| |Y| | |Y| | | | | | |

+| attention_padding_mask| | | | | | | | | | | | | | | | |

+| axpy|Y| | | | | | | | | | | | | | | |

+| batch_norm|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| beam_search| | | | |Y| | | | | | | | | | | |

+| beam_search_decode| | | | |Y| | | | | | | | | | | |

+| bilinear_interp|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |Y|

+| bilinear_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |Y|

+| bmm| | | |Y| | | | | | | | | | | | |

+| box_clip| | | |Y|Y| | | | | | | | | | | |

+| box_coder|Y|Y|Y|Y|Y|Y|Y| | | | | | | | | |

+| calib|Y| | |Y| | | | |Y| | | | | | | |

+| calib_once|Y| | |Y| | | | |Y| | | | | | | |

+| cast| | | |Y|Y| |Y| |Y|Y| | | | | | |

+| clip|Y|Y| |Y| |Y| | | |Y| | | | | | |

+| collect_fpn_proposals| | | | |Y| | | | | | | | | | | |

+| concat|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| conditional_block| | | | |Y| | | | | | | | | | | |

+| conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

+| conv2d_transpose|Y|Y|Y|Y| |Y|Y| | |Y| | | | |Y|Y|

+| correlation| | | |Y|Y| | | | | | | | | | | |

+| cos| |Y| | |Y| | | | | | | | | | | |

+| cos_sim| | | | |Y| | | | | | | | | | | |

+| crf_decoding| | | | |Y| | | | | | | | | | | |

+| crop| | | | |Y| | | | | | | | | | | |

+| crop_tensor| | | | |Y| | | | | | | | | | | |

+| ctc_align| | | | |Y| | | | | | | | | | | |

+| cumsum| | | | |Y| | | | |Y| | | | | | |

+| decode_bboxes|Y| | | | | | | | | | | | | | | |

+| deformable_conv|Y| | | |Y| | | | |Y| | | | | | |

+| density_prior_box| | | |Y|Y|Y|Y| | | | | | | | | |

+| depthwise_conv2d|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|Y|

+| depthwise_conv2d_transpose| |Y| | | | |Y| | | | | | | | | |

+| distribute_fpn_proposals| | | | |Y| | | | | | | | | | | |

+| dropout|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| elementwise_add|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| elementwise_div|Y| | |Y| |Y|Y| | |Y|Y|Y|Y| |Y|Y|

+| elementwise_floordiv|Y| | | | |Y| | | | | | | | | | |

+| elementwise_max|Y| | |Y| |Y| | | |Y| | | | | |Y|

+| elementwise_min|Y| | | | |Y| | | |Y| | | | | |Y|

+| elementwise_mod|Y| | | | |Y| | | | | | | | | | |

+| elementwise_mul|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| elementwise_pow|Y| | | | |Y| | | |Y| | | | | |Y|

+| elementwise_sub|Y|Y|Y|Y| |Y|Y| | |Y|Y|Y|Y| |Y|Y|

+| elu|Y| | | |Y| | | | | | | | | | | |

+| equal| | | | |Y| | | | |Y| | | | | | |

+| erf|Y| | | | | | | | | | | | | | | |

+| exp|Y|Y|Y|Y|Y| | | | |Y| | | | | | |

+| expand| |Y| | |Y| | | | | | | | | | | |

+| expand_as| | | | |Y| | | | | | | | | | | |

+| expand_v2| | | |Y|Y| | | | |Y| | | | | | |

+| fake_channel_wise_dequantize_max_abs| | | | | | | | | | | | | | | | |

+| fake_channel_wise_quantize_dequantize_abs_max| | | | | | | | | | | | | | | | |

+| fake_dequantize_max_abs| | | | | | | | | | | | | | | | |

+| fake_quantize_abs_max| | | | | | | | | | | | | | | | |

+| fake_quantize_dequantize_abs_max| | | | | | | | | | | | | | | | |

+|fake_quantize_dequantize_moving_average_abs_max| | | | | | | | | | | | | | | | |

+| fake_quantize_moving_average_abs_max| | | | | | | | | | | | | | | | |

+| fake_quantize_range_abs_max| | | | | | | | | | | | | | | | |

+| fc|Y|Y|Y| | |Y| | |Y|Y|Y|Y|Y|Y|Y|Y|

+| feed| | |Y| |Y| | | | | | | | | | | |

+| fetch| | |Y| |Y| | | | | | | | | | | |

+| fill_any_like| | | |Y|Y| | | | |Y| | | | | |Y|

+| fill_constant| | | |Y|Y| |Y| | |Y| | | | | | |

+| fill_constant_batch_size_like| | | |Y|Y| | | | | | | | | | |Y|

+| fill_zeros_like| | | |Y|Y| | | | | | | | | | | |

+| flatten| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| flatten2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| flatten_contiguous_range| | | |Y|Y| | | | |Y|Y|Y|Y| |Y|Y|

+| flip| | | | |Y| | | | | | | | | | | |

+| floor|Y| | | |Y| | | | | | | | | | | |

+| fusion_elementwise_add_activation|Y|Y|Y| | |Y| | | |Y|Y|Y|Y| |Y| |

+| fusion_elementwise_div_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y| |

+| fusion_elementwise_max_activation|Y| | | | |Y| | | |Y| | | | | | |

+| fusion_elementwise_min_activation|Y| | | | |Y| | | |Y| | | | | | |

+| fusion_elementwise_mul_activation|Y| | | | |Y| | | |Y|Y|Y|Y| |Y| |

+| fusion_elementwise_pow_activation| | | | | | | | | |Y| | | | | | |

+| fusion_elementwise_sub_activation|Y|Y| | | |Y| | | |Y|Y|Y|Y| |Y| |

+| gather|Y| | |Y|Y|Y| | |Y| | | | | | | |

+| gather_nd| | | | |Y| | | | | | | | | | | |

+| gather_tree| | | | |Y| | | | | | | | | | | |

+| gelu|Y| | |Y| |Y| | | |Y| | | | | | |

+| generate_proposals| | | |Y|Y| | | | | | | | | | | |

+| generate_proposals_v2|Y| | | | | | | | | | | | | | | |

+| greater_equal| | | | |Y| | | | |Y| | | | | | |

+| greater_than| |Y| | |Y| | | | |Y| | | | | | |

+| grid_sampler|Y|Y| |Y| |Y| | | | | | | | | | |

+| group_norm|Y| | | | |Y| | | | | | | | | | |

+| gru|Y| | |Y| |Y| | | | | | | | | | |

+| gru_unit|Y| | |Y| |Y| | | | | | | | | | |

+| hard_sigmoid|Y|Y|Y|Y|Y| |Y| | |Y| | | | | |Y|

+| hard_swish|Y|Y|Y|Y|Y|Y|Y| | |Y| | | | | |Y|

+| im2sequence|Y| | |Y| | |Y| | | | | | | | | |

+| increment| | | |Y|Y| | | | | | | | | | | |

+| index_select| | | | |Y| | | | | | | | | | | |

+| instance_norm|Y|Y| |Y| |Y| | | |Y| | | | | | |

+| inverse| | | | |Y| | | | | | | | | | | |

+| io_copy| |Y|Y|Y| | | | |Y| | | | | | | |

+| io_copy_once| |Y|Y|Y| | | | | | | | | | | | |

+| is_empty| | | |Y|Y| | | | | | | | | | | |

+| layer_norm|Y| | |Y| |Y| | | |Y| | | | | | |

+| layout|Y|Y| | | |Y| | |Y| | | | | | | |

+| layout_once|Y|Y| | | | | | | | | | | | | | |

+| leaky_relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y| | | | | |Y|

+| less_equal| | | | |Y| | | | |Y| | | | | | |

+| less_than| | | |Y|Y| | | | |Y| | | | | | |

+| linspace| | | | |Y| | | | | | | | | | | |

+| lod_array_length| | | | |Y| | | | | | | | | | | |

+| lod_reset| | | | |Y| | | | | | | | | | | |

+| log|Y| | |Y|Y| | | | |Y| | | | | | |

+| logical_and| | | |Y|Y| | | | | | | | | | | |

+| logical_not| | | |Y|Y| | | | | | | | | | | |

+| logical_or| | | | |Y| | | | | | | | | | | |

+| logical_xor| | | | |Y| | | | | | | | | | | |

+| lookup_table|Y| | |Y| |Y| | | | | | | | | | |

+| lookup_table_dequant|Y| | | | | | | | | | | | | | | |

+| lookup_table_v2|Y| | |Y| |Y| | | |Y| | | | | | |

+| lrn|Y|Y| |Y| | | | |Y| | | | | | | |

+| lstm|Y| | | | | | | | | | | | | | | |

+| match_matrix_tensor| | | |Y| |Y| | | | | | | | | | |

+| matmul|Y|Y|Y|Y| |Y|Y| | |Y| | | | | |Y|

+| matmul_v2|Y|Y| |Y| | | | | |Y| | | | | |Y|

+| matrix_nms| | | | |Y| | | | | | | | | | | |

+| max_pool2d_with_index| | | |Y| | |Y| | | | | | | | | |

+| mean|Y| | | | | | | | | | | | | | | |

+| merge_lod_tensor|Y| | | | | | | | | | | | | | | |

+| meshgrid| | | | |Y| | | | | | | | | | | |

+| mish|Y| | | | |Y| | | | | | | | | | |

+| mul|Y| | |Y| |Y|Y| | | | | | | | | |

+| multiclass_nms|Y| | | |Y| |Y| | | | | | | | | |

+| multiclass_nms2|Y| | | |Y| |Y| | | | | | | | | |

+| multiclass_nms3|Y| | | |Y| | | | | | | | | | | |

+| nearest_interp|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| nearest_interp_v2|Y|Y|Y|Y| |Y| | | |Y| | | | | |Y|

+| negative|Y| | | | | | | | | | | | | | | |

+| norm|Y| | |Y|Y| |Y| |Y|Y| | | | | | |

+| not_equal| | | | |Y| | | | |Y| | | | | | |

+| one_hot| | | | |Y| | | | | | | | | | | |

+| one_hot_v2| | | | |Y| | | | | | | | | | | |

+| p_norm|Y| | | |Y| | | | |Y| | | | | | |

+| pad2d|Y|Y|Y|Y|Y| | | | |Y| | | | | | |

+| pad3d| | | | |Y| | | | |Y| | | | | | |

+| pixel_shuffle|Y|Y| |Y|Y| | | | | | | | | | | |

+| polygon_box_transform| | | | |Y| | | | | | | | | | | |

+| pool2d|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|

+| pow|Y| | |Y| |Y| | | |Y| | | | | | |

+| prelu|Y|Y| |Y|Y| | | | |Y| | | | | | |

+| print| | | | |Y| | | | | | | | | | | |

+| prior_box|Y| | |Y|Y| |Y| | | | | | | | | |

+| range| | | | |Y| | | | |Y| | | | | | |

+| read_from_array| | | |Y|Y| | | | | | | | | | | |

+| reciprocal|Y| | |Y|Y| | | | | | | | | | | |

+| reduce_all| | | |Y|Y| | | | | | | | | | | |

+| reduce_any| | | |Y|Y| | | | | | | | | | | |

+| reduce_max|Y|Y| |Y| |Y|Y| | | | | | | | | |

+| reduce_mean|Y|Y| |Y| |Y|Y| | |Y| | | | | | |

+| reduce_min|Y| | |Y| |Y| | | | | | | | | | |

+| reduce_prod|Y| | |Y| |Y| | | | | | | | | | |

+| reduce_sum|Y| | |Y| |Y|Y| | | | | | | | | |

+| relu|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|

+| relu6|Y|Y|Y|Y|Y|Y| | |Y|Y|Y|Y|Y|Y|Y|Y|

+| relu_clipped|Y| | | |Y| | | | | | | | | | | |

+| reshape| |Y| |Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| reshape2| |Y|Y|Y|Y| |Y| |Y|Y|Y|Y|Y| |Y|Y|

+| retinanet_detection_output| | | | |Y| | | | | | | | | | | |

+| reverse| | | | |Y| | | | | | | | | | | |

+| rnn|Y| | |Y| |Y| | | | | | | | | | |

+| roi_align| | | |Y|Y| | | | | | | | | | | |

+| roi_perspective_transform| | | | |Y| | | | | | | | | | | |

+| rsqrt|Y|Y| |Y|Y|Y| | | | | | | | | | |

+| scale|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| scatter|Y| | | | | | | | | | | | | | | |

+| scatter_nd_add| | | | |Y| | | | | | | | | | | |

+| search_aligned_mat_mul| | | | | |Y| | | | | | | | | | |

+| search_attention_padding_mask| | | | | |Y| | | | | | | | | | |

+| search_fc| | | |Y| | | | | | | | | | | | |

+| search_grnn| | | |Y| |Y| | | | | | | | | | |

+| search_group_padding| | | | | |Y| | | | | | | | | | |

+| search_seq_arithmetic| | | |Y| |Y| | | | | | | | | | |

+| search_seq_depadding| | | | | |Y| | | | | | | | | | |

+| search_seq_fc| | | | | |Y| | | | | | | | | | |

+| search_seq_softmax| | | | | |Y| | | | | | | | | | |

+| select_input| | | | |Y| | | | | | | | | | | |

+| sequence_arithmetic| | | |Y| |Y| | | | | | | | | | |

+| sequence_concat| | | |Y| |Y| | | | | | | | | | |

+| sequence_conv|Y| | | | |Y| | | | | | | | | | |

+| sequence_expand| | | | |Y| | | | | | | | | | | |

+| sequence_expand_as|Y| | | | |Y| | | | | | | | | | |

+| sequence_mask| | | |Y|Y| | | | | | | | | | | |

+| sequence_pad| | | |Y|Y| | | | | | | | | | | |

+| sequence_pool|Y| | |Y| |Y| | | | | | | | | | |

+| sequence_reshape| | | | | |Y| | | | | | | | | | |

+| sequence_reverse| | | |Y| |Y| | | | | | | | | | |

+| sequence_softmax| | | | |Y| | | | | | | | | | | |

+| sequence_topk_avg_pooling| | | |Y| |Y| | | | | | | | | | |

+| sequence_unpad| | | |Y|Y| | | | | | | | | | | |

+| shape| |Y| |Y|Y| |Y| | |Y| | | | | | |

+| shuffle_channel|Y|Y|Y| |Y| | | | | | | | | | |Y|

+| sigmoid|Y|Y|Y|Y|Y|Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| sign|Y| | |Y| | | | | | | | | | | | |

+| sin| |Y| | |Y| | | | | | | | | | | |

+| slice|Y|Y|Y|Y| |Y|Y| |Y|Y| | | | | |Y|

+| softmax|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y|Y|Y|Y|

+| softplus|Y| | | | | | | | | | | | | | | |

+| softsign| | | |Y| |Y| | | | | | | | | | |

+| sparse_conv2d|Y| | | | | | | | | | | | | | | |

+| split| |Y|Y|Y|Y| |Y| |Y|Y| | |Y| | |Y|

+| split_lod_tensor|Y| | | | | | | | | | | | | | | |

+| sqrt|Y|Y| |Y| |Y|Y| | | | | | | | | |

+| square|Y|Y| |Y|Y|Y|Y| | | | | | | | | |

+| squeeze| |Y| |Y|Y| |Y| |Y|Y| | | | | |Y|

+| squeeze2| |Y| |Y|Y| |Y| |Y|Y| | | | | |Y|

+| stack| | | |Y|Y|Y| | | |Y| | | | | | |

+| strided_slice| | | | |Y| | | | | | | | | | | |

+| subgraph| | | | | | | | |Y| | | | | | | |

+| sum|Y| | |Y| | | | | | | | | | | | |

+| swish|Y|Y|Y|Y|Y| |Y| | |Y| | | | | | |

+| sync_batch_norm|Y|Y| | | |Y| | | | | | | | | | |

+| tanh|Y|Y| |Y|Y|Y| | | |Y|Y|Y|Y| |Y| |

+| tensor_array_to_tensor| | | | |Y| | | | | | | | | | | |

+| thresholded_relu|Y| | | |Y| | | | | | | | | | | |

+| tile| | | | |Y| | | | | | | | | | | |

+| top_k| | | |Y|Y| | | | |Y| | | | | | |

+| top_k_v2| | | | |Y| | | | |Y| | | | | | |

+| transpose|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| transpose2|Y|Y|Y|Y| |Y|Y| |Y|Y|Y|Y|Y| |Y|Y|

+| tril_triu| | | | |Y| | | | | | | | | | | |

+| uniform_random| | | | |Y| | | | | | | | | | | |

+| unique_with_counts| | | | |Y| | | | | | | | | | | |

+| unsqueeze| |Y| |Y|Y| | | | |Y| | | | | |Y|

+| unsqueeze2| |Y| |Y|Y| | | | |Y| | | | | |Y|

+| unstack| | | |Y|Y| | | | | | | | | | | |

+| var_conv_2d| | | |Y| |Y| | | | | | | | | | |

+| where| | | | |Y| | | | | | | | | | | |

+| where_index| | | | |Y| | | | | | | | | | | |

+| while| | | | |Y| | | | | | | | | | | |

+| write_back| | | | |Y| | | | | | | | | | | |

+| write_to_array| | | |Y|Y| | | | | | | | | | | |

+| yolo_box|Y|Y|Y|Y|Y| |Y| | | | | | | | | |

+| __xpu__bigru| | | |Y| | | | | | | | | | | | |

+| __xpu__conv2d| | | |Y| | | | | | | | | | | | |

+| __xpu__dynamic_lstm_fuse_op| | | |Y| | | | | | | | | | | | |

+| __xpu__embedding_with_eltwise_add| | | |Y| | | | | | | | | | | | |

+| __xpu__fc| | | |Y| | | | | | | | | | | | |

+| __xpu__generate_sequence| | | |Y| | | | | | | | | | | | |

+| __xpu__logit| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_bid_emb_att| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_bid_emb_grnn_att| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_bid_emb_grnn_att2| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_match_conv_topk| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_merge_all| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_search_attention| | | |Y| | | | | | | | | | | | |

+| __xpu__mmdnn_search_attention2| | | |Y| | | | | | | | | | | | |

+| __xpu__multi_encoder| | | |Y| | | | | | | | | | | | |

+| __xpu__multi_softmax| | | |Y| | | | | | | | | | | | |

+| __xpu__resnet50| | | |Y| | | | | | | | | | | | |

+| __xpu__resnet_cbam| | | |Y| | | | | | | | | | | | |

+| __xpu__sfa_head| | | |Y| | | | | | | | | | | | |

+| __xpu__softmax_topk| | | |Y| | | | | | | | | | | | |

+| __xpu__squeeze_excitation_block| | | |Y| | | | | | | | | | | | |
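Because the support table is wide, it can be easier to query a single row than to count columns by eye. The following is a minimal sketch, not part of Paddle Lite: `supported_backends` is a hypothetical helper that takes the header row and one operator row (both as pipe-delimited Markdown rows, with or without a leading diff marker) and prints the backends whose cell is `Y`.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Paddle Lite): list the backends marked
# "Y" for one operator row of the support table.
supported_backends() {
  # $1: header row, $2: operator row, both pipe-delimited Markdown table rows.
  # Field 1 is whatever precedes the first "|", field 2 is the operator name,
  # and the last field is the empty text after the trailing "|".
  printf '%s\n%s\n' "$1" "$2" | awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    NR == 1 { for (i = 3; i < NF; i++) name[i] = trim($i); next }
    { for (i = 3; i < NF; i++) if (trim($i) == "Y") print name[i] }'
}
```

For example, passing the table's header row together with the `conv2d_transpose` row prints one backend name per line for every `Y` cell in that row.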

diff --git a/docs/source_compile/include/multi_device_support/nnadapter_support_verisilicon_timvx.rst b/docs/source_compile/include/multi_device_support/nnadapter_support_verisilicon_timvx.rst

new file mode 100644

index 00000000000..fcb3c51d3bb

--- /dev/null

+++ b/docs/source_compile/include/multi_device_support/nnadapter_support_verisilicon_timvx.rst

@@ -0,0 +1,27 @@

+NNAdapter support for Verisilicon TIM-VX

+^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+.. list-table::

+

+ * - 参数

+ - 说明

+ - 可选范围

+ - 默认值

+ * - nnadapter_with_verisilicon_timvx

+ - 是否编译芯原 TIM-VX 的 NNAdapter HAL 库

+ - OFF / ON

+ - OFF

+ * - nnadapter_verisilicon_timvx_src_git_tag

+ - 设置芯原 TIM-VX 的代码分支

+ - TIM-VX repo 分支名

+ - main

+ * - nnadapter_verisilicon_timvx_viv_sdk_url

+ - 设置芯原 TIM-VX SDK 的下载链接

+ - 用户自定义

+ - Android系统:http://paddlelite-demo.bj.bcebos.com/devices/verisilicon/sdk/viv_sdk_android_9_armeabi_v7a_6_4_4_3_generic.tgz

+ Linux系统:http://paddlelite-demo.bj.bcebos.com/devices/verisilicon/sdk/viv_sdk_linux_arm64_6_4_4_3_generic.tgz

+ * - nnadapter_verisilicon_timvx_viv_sdk_root

+ - Local path of the VeriSilicon TIM-VX SDK

+ - User-defined

+ - Empty

+For details, see `VeriSilicon TIM-VX deployment example `_

diff --git a/docs/source_compile/linux_x86_compile_android.rst b/docs/source_compile/linux_x86_compile_android.rst

index e32c4ff45d1..c69e0e7e358 100644

--- a/docs/source_compile/linux_x86_compile_android.rst

+++ b/docs/source_compile/linux_x86_compile_android.rst

@@ -242,3 +242,5 @@ Paddle Lite 仓库中\ ``/lite/tools/build_android.sh``\ 脚本文件用于构

.. include:: include/multi_device_support/nnadapter_support_mediatek_apu.rst

.. include:: include/multi_device_support/nnadapter_support_amlogic_npu.rst

+

+.. include:: include/multi_device_support/nnadapter_support_verisilicon_timvx.rst

\ No newline at end of file

diff --git a/docs/source_compile/linux_x86_compile_arm_linux.rst b/docs/source_compile/linux_x86_compile_arm_linux.rst

index 136309891ad..4b0635827b1 100644

--- a/docs/source_compile/linux_x86_compile_arm_linux.rst

+++ b/docs/source_compile/linux_x86_compile_arm_linux.rst

@@ -186,3 +186,5 @@ Paddle Lite 仓库中 \ ``./lite/tools/build_linux.sh``\ 脚本文件用于构

.. include:: include/multi_device_support/nnadapter_support_rockchip_npu.rst

.. include:: include/multi_device_support/nnadapter_support_amlogic_npu.rst

+

+.. include:: include/multi_device_support/nnadapter_support_verisilicon_timvx.rst

\ No newline at end of file

+

+### Prepare the device environment

+

+- A311D

+

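+ - Requires driver version 6.4.4.3 (contact the board vendor to obtain the driver).

+

+ - The system must be 64-bit.

+

+ - The device can be reached over the network via SSH login; some systems also support adb connections.

+

+ - Check the driver version with `dmesg | grep Galcore`: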

+

+ ```shell

+ $ dmesg | grep Galcore

+ [ 24.140820] Galcore version 6.4.4.3.310723AAA

+ ```

+

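+- S905D3 (Android)

+

+ - Requires driver version 6.4.4.3 (contact the board vendor to obtain the driver).

+ - The system must be 32-bit.

+ - Run `adb root` followed by `adb remount` to gain permission to modify system libraries.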

+

+ ```shell

+ $ dmesg | grep Galcore

+ [ 9.020168] <6>[ 9.020168@0] Galcore version 6.4.4.3.310723a

+ ```

+

+ - The demo program and the Paddle Lite library must be cross-compiled; use `adb` or `ssh` to interact with the device and run the demo.
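The driver check above can be scripted. The following is a minimal sketch and not part of the Paddle Lite demo: the helper names `galcore_version` and `check_galcore` are hypothetical, and the sample line is copied from the A311D output shown earlier. On a real device, pass the output of `dmesg | grep Galcore` instead of the sample.

```shell
#!/bin/sh
# Driver version required by this guide.
REQUIRED="6.4.4.3"

# galcore_version LINE (hypothetical helper): print the first four
# dot-separated numeric fields that follow "Galcore version" in LINE.
galcore_version() {
  printf '%s\n' "$1" |
    sed -n 's/.*Galcore version \([0-9]*\.[0-9]*\.[0-9]*\.[0-9]*\).*/\1/p'
}

# check_galcore LINE (hypothetical helper): succeed only when the parsed
# version equals REQUIRED.
check_galcore() {
  [ "$(galcore_version "$1")" = "$REQUIRED" ]
}

# Sample line copied from the A311D output in this guide.
SAMPLE='[ 24.140820] Galcore version 6.4.4.3.310723AAA'
if check_galcore "$SAMPLE"; then
  echo "driver OK: $(galcore_version "$SAMPLE")"
else
  echo "driver mismatch: expected $REQUIRED"
fi
```

Only the first four version fields are compared, because the build suffix differs between the two boards in the outputs shown.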

+

+

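+### Prepare the cross-compilation environment

+

+- To keep the build environment consistent, set it up using the Docker development environment described in [Docker environment setup](../source_compile/docker_environment).

+- Some devices are reachable only over the network (depending on the development board), so the cross-compiled Paddle Lite library and demo program must be copied to the device and run there with `scp` and `ssh`. After entering the Docker container, also install the following packages: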

+

+ ```

+ # apt-get install openssh-client sshpass

+ ```
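To illustrate why `openssh-client` and `sshpass` are needed, here is a minimal sketch of a non-interactive copy-and-run over SSH. It is not the content of `run_with_ssh.sh`; the helper names, the `DRY_RUN` switch, and the remote directory are hypothetical, and the connection values are the sample ones used later in this guide. By default the commands are only printed.

```shell
#!/bin/sh
# Sample connection settings; replace them with the values for your board.
DEVICE_IP="192.168.100.30"
DEVICE_PORT="22"
DEVICE_USER="khadas"
DEVICE_PASS="khadas"
REMOTE_DIR="/tmp/paddlelite_demo"   # hypothetical working directory on the device

# With DRY_RUN=1 (the default) commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# push_dir LOCAL_DIR: copy a local directory to REMOTE_DIR on the device.
push_dir() {
  run sshpass -p "$DEVICE_PASS" scp -P "$DEVICE_PORT" -r "$1" \
    "$DEVICE_USER@$DEVICE_IP:$REMOTE_DIR"
}

# remote_exec COMMAND: run a command on the device over ssh.
remote_exec() {
  run sshpass -p "$DEVICE_PASS" ssh -p "$DEVICE_PORT" \
    "$DEVICE_USER@$DEVICE_IP" "$1"
}

push_dir ./build.linux.arm64
remote_exec "ls $REMOTE_DIR"
```

Passing a password with `sshpass -p` exposes it in the process list, so this form suits a lab board only; key-based login is the safer choice elsewhere.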

+

+### Run the image classification demo

+

+- Download the Paddle Lite generic demo [PaddleLite-generic-demo.tar.gz](https://paddlelite-demo.bj.bcebos.com/devices/generic/PaddleLite-generic-demo.tar.gz). After extraction, the main directory structure is as follows:

+

+ ```shell

+ - PaddleLite-generic-demo

+ - image_classification_demo

+ - assets

+ - images

+ - tabby_cat.jpg # Test image

+ - tabby_cat.raw # RGB raw image produced by convert_to_raw_image.py

+ - labels

+ - synset_words.txt # Label file for the 1000 classes

+ - models

+ - mobilenet_v1_int8_224_per_layer

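+ - __model__ # Paddle fluid model topology file; the network structure can be viewed with netron

+ - conv1_weights # Paddle fluid model parameter file

+ - batch_norm_0.tmp_2.quant_dequant.scale # Paddle fluid model quantization parameter file

+ - subgraph_partition_config_file.txt # Custom subgraph partition configuration file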

+ ...

+ - shell

+ - CMakeLists.txt # CMake script for the demo

+ - build.linux.arm64 # arm64 build directory

+ - image_classification_demo # Prebuilt demo binary for arm64

+ - build.linux.armhf # armhf build directory

+ - image_classification_demo # Prebuilt demo binary for armhf

+ - build.android.armeabi-v7a # Android armv7 build directory

+ - image_classification_demo # Prebuilt demo binary for Android armv7

+ ...

+ - image_classification_demo.cc # Demo source code

+ - build.sh # Demo build script

+ - run.sh # Script to run the demo locally

+ - run_with_ssh.sh # Script to run the demo over ssh

+ - run_with_adb.sh # Script to run the demo over adb

+ - libs

+ - PaddleLite

+ - linux

+ - arm64 # 64-bit Linux

+ - include # Paddle Lite header files

+ - lib # Paddle Lite library files

+ - verisilicon_timvx # VeriSilicon TIM-VX DDK, NNAdapter runtime library, and device HAL library

+ - libnnadapter.so # NNAdapter runtime library

+ - libGAL.so # VeriSilicon DDK

+ - libVSC.so # VeriSilicon DDK

+ - libOpenVX.so # VeriSilicon DDK

+ - libarchmodelSw.so # VeriSilicon DDK

+ - libNNArchPerf.so # VeriSilicon DDK

+ - libOvx12VXCBinary.so # VeriSilicon DDK

+ - libNNVXCBinary.so # VeriSilicon DDK

+ - libOpenVXU.so # VeriSilicon DDK

+ - libNNGPUBinary.so # VeriSilicon DDK

+ - libovxlib.so # VeriSilicon DDK

+ - libOpenCL.so # OpenCL

+ - libverisilicon_timvx.so # NNAdapter device HAL library

+ - libtim-vx.so # VeriSilicon TIM-VX

+ - libgomp.so.1 # GNU OpenMP runtime library

+ - libpaddle_full_api_shared.so # Prebuilt Paddle Lite full API library

+ - libpaddle_light_api_shared.so # Prebuilt Paddle Lite light API library

+ ...

+ - android

+ - armeabi-v7a # 32-bit Android

+ - include # Paddle Lite header files

+ - lib # Paddle Lite library files

+ - verisilicon_timvx # VeriSilicon TIM-VX DDK, NNAdapter runtime library, and device HAL library

+ - libnnadapter.so # NNAdapter runtime library

+ - libGAL.so # VeriSilicon DDK

+ - libVSC.so # VeriSilicon DDK

+ - libOpenVX.so # VeriSilicon DDK

+ - libarchmodelSw.so # VeriSilicon DDK

+ - libNNArchPerf.so # VeriSilicon DDK

+ - libOvx12VXCBinary.so # VeriSilicon DDK

+ - libNNVXCBinary.so # VeriSilicon DDK

+ - libOpenVXU.so # VeriSilicon DDK

+ - libNNGPUBinary.so # VeriSilicon DDK

+ - libovxlib.so # VeriSilicon DDK

+ - libOpenCL.so # OpenCL

+ - libverisilicon_timvx.so # NNAdapter device HAL library

+ - libtim-vx.so # VeriSilicon TIM-VX

+ - libgomp.so.1 # GNU OpenMP runtime library

+ - libc++_shared.so

+ - libpaddle_full_api_shared.so # Prebuilt Paddle Lite full API library

+ - libpaddle_light_api_shared.so # Prebuilt Paddle Lite light API library

+ - OpenCV # Prebuilt OpenCV library

+ - ssd_detection_demo # SSD-based object detection demo

+ ```

+

+- Run the converted ARM CPU model and the VeriSilicon TIM-VX model with the commands below, then compare their performance and results:

+

+ ```shell

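+ Notes:

+ 1) `run_with_adb.sh` must not be run inside the Docker environment (the device may not be found) and must not be run on the device itself.

+ 2) `run_with_ssh.sh` must not be run on the device; before running it, configure the target device's IP address, SSH account, and password.

+ 3) `build.sh` builds binaries for different operating systems and architectures according to its arguments; consult the comments in the script to set the correct values.

+ 4) `run_with_adb.sh` takes the model name, operating system, architecture, target device, device serial number, and so on; consult the comments in the script to set the correct values.

+ 5) `run_with_ssh.sh` takes the model name, operating system, architecture, target device, IP address, user name, password, and so on; consult the comments in the script to set the correct values.

+ 6) The IP addresses, SSH accounts and passwords, and device serial numbers in the commands below come from a sample environment; change them to match your own devices.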

+

+ Run the fully quantized mobilenet_v1_int8_224_per_layer model on the ARM CPU

+ $ cd PaddleLite-generic-demo/image_classification_demo/shell

+

+ For A311D

+ $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer linux arm64 cpu 192.168.100.30 22 khadas khadas

+ (A311D)

+ warmup: 1 repeat: 15, average: 81.678067 ms, max: 81.945999 ms, min: 81.591003 ms

+ results: 3

+ Top0 Egyptian cat - 0.512545

+ Top1 tabby, tabby cat - 0.402567

+ Top2 tiger cat - 0.067904

+ Preprocess time: 1.352000 ms

+ Prediction time: 81.678067 ms

+ Postprocess time: 0.407000 ms

+

+ For S905D3 (Android)

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a cpu c8631471d5cd

+ (S905D3 (Android))

+ warmup: 1 repeat: 5, average: 280.465997 ms, max: 358.815002 ms, min: 268.549812 ms

+ results: 3

+ Top0 Egyptian cat - 0.512545

+ Top1 tabby, tabby cat - 0.402567

+ Top2 tiger cat - 0.067904

+ Preprocess time: 3.199000 ms

+ Prediction time: 280.465997 ms

+ Postprocess time: 0.596000 ms

+

+ ------------------------------

+

+ Run the fully quantized mobilenet_v1_int8_224_per_layer model on the VeriSilicon NPU

+ $ cd PaddleLite-generic-demo/image_classification_demo/shell

+

+ For A311D

+ $ ./run_with_ssh.sh mobilenet_v1_int8_224_per_layer linux arm64 verisilicon_timvx 192.168.100.30 22 khadas khadas

+ (A311D)

+ warmup: 1 repeat: 15, average: 5.112500 ms, max: 5.223000 ms, min: 5.009130 ms

+ results: 3

+ Top0 Egyptian cat - 0.508929

+ Top1 tabby, tabby cat - 0.415333

+ Top2 tiger cat - 0.064347

+ Preprocess time: 1.356000 ms

+ Prediction time: 5.112500 ms

+ Postprocess time: 0.411000 ms

+

+ For S905D3 (Android)

+ $ ./run_with_adb.sh mobilenet_v1_int8_224_per_layer android armeabi-v7a verisilicon_timvx c8631471d5cd

+ (S905D3 (Android))

+ warmup: 1 repeat: 5, average: 13.4116 ms, max: 14.7615 ms, min: 12.80810 ms

+ results: 3

+ Top0 Egyptian cat - 0.508929

+ Top1 tabby, tabby cat - 0.415333

+ Top2 tiger cat - 0.064347

+ Preprocess time: 3.170000 ms

+ Prediction time: 13.4116 ms

+ Postprocess time: 0.634000 ms

+ ```
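To make the comparison concrete, the speedup can be computed from the `Prediction time` values reported above. This is a small sketch for illustration; the `speedup` helper is hypothetical and the numbers are copied from the sample logs, so results on other boards will differ.

```shell
#!/bin/sh
# speedup CPU_MS NPU_MS (hypothetical helper): print CPU time divided by
# NPU time, rounded to one decimal place.
speedup() {
  awk -v cpu="$1" -v npu="$2" 'BEGIN { printf "%.1f\n", cpu / npu }'
}

# Prediction times (ms) taken from the sample logs in this guide.
echo "A311D:  $(speedup 81.678067 5.112500)x"
echo "S905D3: $(speedup 280.465997 13.4116)x"
```

With these sample numbers the NPU is roughly 16 times faster than the ARM CPU on A311D and roughly 21 times faster on S905D3 (Android), while the Top-3 labels are the same and the scores differ only slightly.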

+