load 172 public time-series datasets with a single line of code ;-)

📣 TSDB now supports a total of 1️⃣7️⃣2️⃣ time-series datasets

‼️

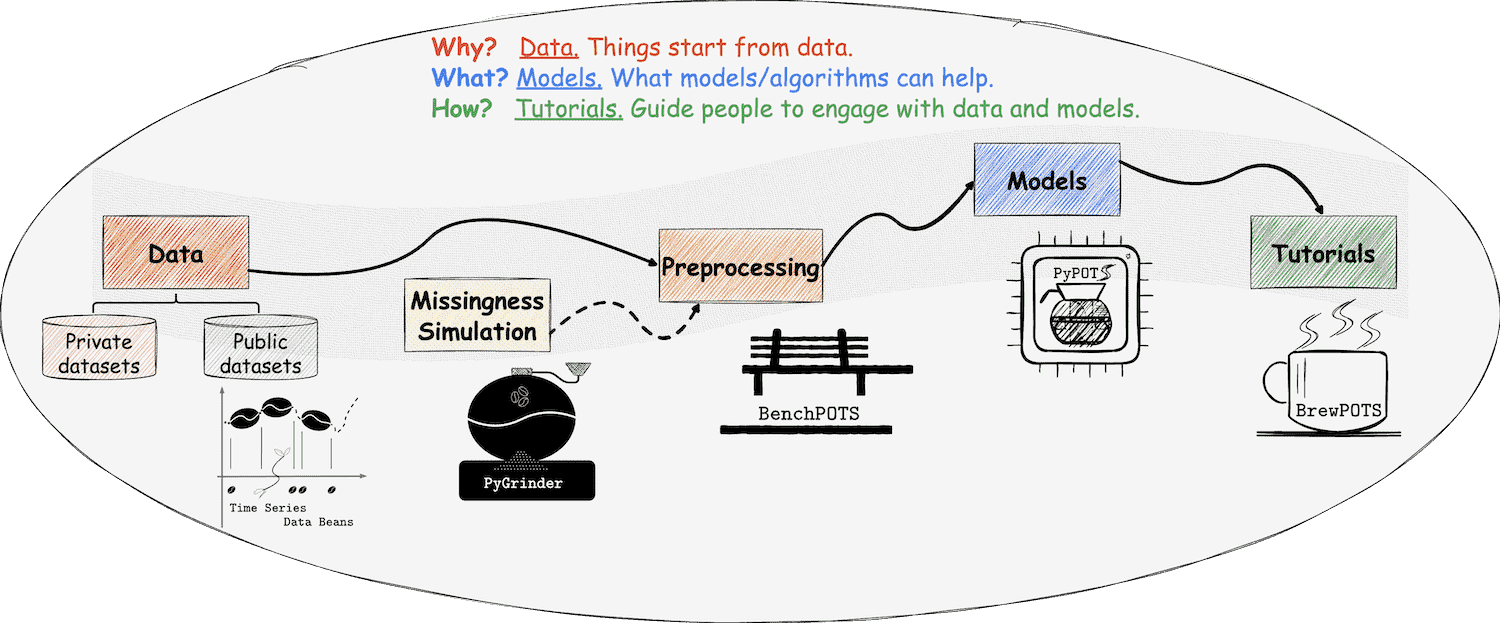

TSDB is a part of

PyPOTS

TSDB is created to help researchers and engineers get rid of data collecting and downloading, and focus back on data processing details. TSDB provides all-in-one-stop convenience for downloading and loading open-source time-series datasets (available datasets listed below).

❗️Please note that due to people have very different requirements for data processing, data-loading functions in TSDB only contain the most general steps (e.g. removing invalid samples) and won't process the data (not even normalize it). So, no worries, TSDB won't affect your data preprocessing. If you only want the raw datasets, TSDB can help you download and save raw datasets as well (take a look at Usage Examples below).

🤝 If you need TSDB to integrate an open-source dataset or want to add it into TSDB yourself, please feel free to request for it by creating an issue or make a PR to merge your code.

🤗 Please star this repo to help others notice TSDB if you think it is a useful toolkit. Please properly cite TSDB and PyPOTS in your publications if it helps with your research. This really means a lot to our open-source research. Thank you!

Important

TSDB is available on both

Install via pip:

pip install tsdb

or install from source code:

pip install

https://github.com/WenjieDu/TSDB/archive/main.zip

or install via conda:

conda install tsdb -c conda-forge

import tsdb

# list all available datasets in TSDB

tsdb.list()

# ['physionet_2012',

# 'physionet_2019',

# 'electricity_load_diagrams',

# 'beijing_multisite_air_quality',

# 'italy_air_quality',

# 'vessel_ais',

# 'electricity_transformer_temperature',

# 'pems_traffic',

# 'solar_alabama',

# 'ucr_uea_ACSF1',

# 'ucr_uea_Adiac',

# ...

# select the dataset you need and load it, TSDB will download, extract, and process it automatically

data = tsdb.load('physionet_2012')

# if you need the raw data, use download_and_extract()

tsdb.download_and_extract('physionet_2012', './save_it_here')

# datasets you once loaded are cached, and you can check them with list_cached_data()

tsdb.list_cache()

# you can delete only one specific dataset's pickled cache

tsdb.delete_cache(dataset_name='physionet_2012', only_pickle=True)

# you can delete only one specific dataset raw files and preserve others

tsdb.delete_cache(dataset_name='physionet_2012')

# or you can delete all cache with delete_cached_data() to free disk space

tsdb.delete_cache()

# The default cache directory is ~/.pypots/tsdb under the user's home directory.

# To avoid taking up too much space if downloading many datasets ,

# TSDB cache directory can be migrated to an external disk

tsdb.migrate_cache("/mnt/external_disk/TSDB_cache")That's all. Simple and efficient. Enjoy it! 😃

| Name | Main Tasks |

|---|---|

| PhysioNet Challenge 2012 | Forecasting, Imputation, Classification |

| PhysioNet Challenge 2019 | Forecasting, Imputation, Classification |

| Beijing Multi-Site Air-Quality | Forecasting, Imputation |

| Italy Air Quality | Forecasting, Imputation |

| Electricity Load Diagrams | Forecasting, Imputation |

| Electricity Transformer Temperature (ETT) | Forecasting, Imputation |

| Vessel AIS | Forecasting, Imputation, Classification |

| PeMS Traffic | Forecasting, Imputation |

| Solar Alabama | Forecasting, Imputation |

| UCR & UEA Datasets (all 163 datasets) | Classification |

The paper introducing PyPOTS is available on arXiv, A short version of it is accepted by the 9th SIGKDD international workshop on Mining and Learning from Time Series (MiLeTS'23)). Additionally, PyPOTS has been included as a PyTorch Ecosystem project. We are pursuing to publish it in prestigious academic venues, e.g. JMLR (track for Machine Learning Open Source Software). If you use PyPOTS in your work, please cite it as below and 🌟star this repository to make others notice this library. 🤗

There are scientific research projects using PyPOTS and referencing in their papers. Here is an incomplete list of them.

@article{du2023pypots,

title={{PyPOTS: a Python toolbox for data mining on Partially-Observed Time Series}},

author={Wenjie Du},

journal={arXiv preprint arXiv:2305.18811},

year={2023},

}or

Wenjie Du. PyPOTS: a Python toolbox for data mining on Partially-Observed Time Series. arXiv, abs/2305.18811, 2023.