Memory Leak in 1.1.0 #1260

Comments

|

I wasn't able to reproduce it locally, can you provide your |

|

@pakrym I'll look into it when I'm in the office. |

|

After upgrading all our app dependencies to 1.1.0 we also got a memory leak problem on Linux (the process keeps growing after start), but I was not able to reproduce it on Windows, and unfortunately there are no tools like dotMemory to profile memory on Linux, so we rolled back to 1.0.1. I hope it's related and will be fixed. |

|

@agoretsky is this from cert use? (Related bug and repo you raised in coreclr https://github.com/dotnet/coreclr/issues/8660); or an unrelated issue? |

|

@benaadams, yes it is due to raised issue |

|

I built the 1.1 server with the code changes from PR 1261 and the leak is gone. |

|

@meichtf thank you for testing. |

|

I hope to see this in the 1.1.1 release. |

|

Hey all, |

|

.net core 1.1.1, a self-contained package, kestrel, linux - no requests, just leaving the process running - memory grows slowly until it's all eaten by the dotnet core process. |

|

Hi @pakrym, Do you have any hints how to avoid this problem? We are running some ASP.NET Core APIs in docker images (based on Debian), and they all have a constantly increasing memory usage, even in our development and staging environments, where they are—except for health check calls—basically idle. We are seeing memory usage patterns like these (these are from 2 different APIs, and they are constantly being restarted because they run out of memory): Is this a tracked issue on Linux which will be solved eventually? Might the 2.0 release bring any remedy to this? Or this should not happen and we're doing something wrong? Thanks in advance! |

|

REOPEN! |

|

@avezenkov Feel free to open a new issue with more details. It should have information about what the project looks like. Is it a template project? If so, which one and did you make any modifications? If not, could you upload the project to a GitHub repo? What packages and runtime are you using? Are you running a debug or release configuration? Are you using After the memory has grown for a while, could you take a memory dump using gcore? If I'm lucky I might be able to use that to see what's on the managed heap. If you want to take a stab at it yourself, you can read guides here and here that go into detail on how to debug dotnet Linux processes using lldb and libsosplugin.so. I don't want to overwhelm you. If any of these details are too difficult to come by, you can leave them out for now and maybe I'll still be able to get a repro. But right now, I have almost nothing to go on. We investigated this issue, fixed it, and verified the fix prior to releasing 1.1.1. I haven't seen the memory leak issue on Linux since then, and I test on it a lot, but that's without running MVC or other higher-level services since I primarily work on lower levels of the stack. Just now, I reinstalled 1.1.1 on my Debian 8.9 VM and printed out the |

|

@halter73 I see. Thanks for your efforts. I'll try to isolate the problem and describe the details, upload a demo project etc. Net core is our hope for a minimal, modular, stable, multiplatform framework. |

|

@halter73 And thanks for the linux debugging guides. I'll create a new issue once I have more details. |

|

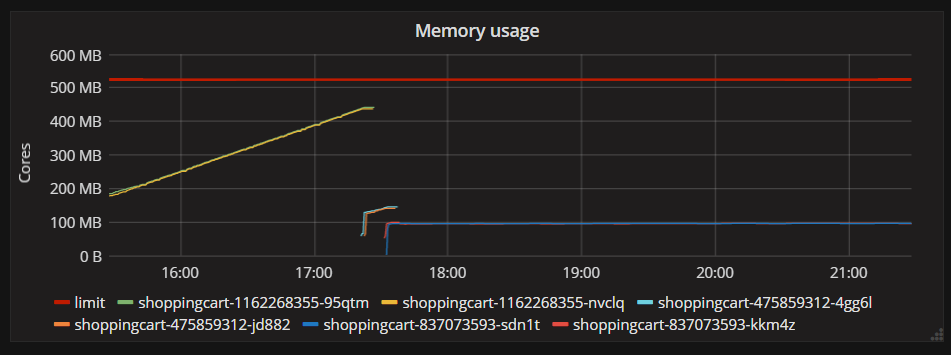

Btw. I have some new information about our experience, I'll share it for future reference, or to see if someone might have an idea about it. It seems that the APIs we have don't increase their memory usage infinitely, it's just that their usage is unexpectedly high. We are running these APIs in Kubernetes in Google Cloud. Previously we had their memory limit set to 500MB, and they were exceeding it and were being constantly restarted. This is an example of the recent memory usage of some of the instances. This usage still seems to be excessive, and I couldn't find an explanation for it. We can only reproduce it in Kubernetes in GCE, and nowhere else. I tried running and load testing the same API

But in none of these tests have I seen the same excessive memory usage, in all the tests the memory consumption peaked at around ~150MB. Did anyone else encounter something similar? (The last thing I can think of which is a difference between the tests and the production scenario is that Google Cloud is using a specialized OS, the Container Optimized OS to run the production docker images, but I haven't got around to investigate how I could test with that.) |

|

Sounds like the memory cap on the container is not being communicated to the GC? (So it goes over 500MB thinking it has more?) |

|

@benaadams I was thinking about that. In Kubernetes the specified memory limit is passed to Docker in the Do you know how we could verify that this is not the problem? |

|

I presume docker locally and on the cloud test are x64 so I will ignore that for now. What is the reported core count in both local and remote tests? It has a decent impact on memory held by the GC |
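One way to answer the core-count question is to log what the runtime itself sees at startup. This is a minimal sketch, assuming a plain console entry point; the class name `GcDiagnostics` is made up for illustration, while `Environment.ProcessorCount` and `GCSettings.IsServerGC` are standard .NET APIs:

```csharp
using System;
using System.Runtime;

public static class GcDiagnostics
{
    // Builds a one-line summary of the values that influence GC heap sizing.
    public static string Describe()
    {
        return $"ProcessorCount={Environment.ProcessorCount}, " +
               $"IsServerGC={GCSettings.IsServerGC}, " +
               $"LatencyMode={GCSettings.LatencyMode}";
    }

    public static void Main()
    {
        // Run this both in local Docker and in the cloud container, then compare.
        Console.WriteLine(Describe());
    }
}
```

Comparing the output from the local container and the cloud container should show whether the two environments differ in visible core count or GC mode.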

|

The change was here dotnet/coreclr#10064 |

|

Hi @Drawaes, I tried printing

Can this cause the issue? Is there any other diagnostics I should print? Btw. what I tried in the meantime is to use different base Docker images. Originally I was using a custom image on top of |

|

This makes a big difference. By default asp.net core runs on the server GC. This tends to allow memory use to grow a lot more, preferring throughput over memory use. However it doesn't matter what you set the garbage collector to (workstation or server) it will go to workstation mode if there is a single CPU. Try forcing your app to workstation and re-running on google cloud, or resource limiting your container to a single CPU. If you want to force your application you can do it in the csproj with this setting:

<PropertyGroup>
  <ServerGarbageCollection>true</ServerGarbageCollection>
</PropertyGroup>

That should give you the same behaviour as you see locally. (You need to make sure your launchsettings.json isn't overriding this value as well.) Try that and see if the results match what you see on docker locally. |

|

For your reference for the difference between the server and workstation GC just look at the size of the ephemeral segments it allocates. Your docker because of the single cpu will have the Workstation one running, and the google cloud one the Server one running with the category > 4 cpus (2gb). Using workstation GC on multi procs will use a bit more cpu but will keep your memory use lower (more collections etc). |

|

The container memory cap was merged 23 Mar 2017. Guess you might have to use NETCoreApp 2.0 to have the caps work? (Can still use same version of ASP.NET Core) |

|

Sure, but the behaviour was okay on containers locally, where it was a single cpu, but not on the google containers where there were 8 "cpus" visible to the container. So while you may still have that issue ("the cap is not seen correctly"), the workstation GC is saving your bacon locally and keeping the memory use low. |

|

Thanks a lot for the help guys, I didn't know about the difference the number of CPUs causes. Btw. @Drawaes if I understand correctly the problem might be that in Google Cloud, .NET is using the Server GC, thus allocating much more memory. And what I'd have to try is to force Workstation GC, right? Then shouldn't the flag in the csproj be false? |

|

Yes sorry... it should be false... typo :) good luck and tell us how you get on for sure |
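For reference, here is a sketch of the corrected csproj fragment. It assumes the same `ServerGarbageCollection` property suggested earlier in the thread, set to `false` to force Workstation GC:

```xml
<!-- Forces Workstation GC regardless of how many cores the container reports. -->
<PropertyGroup>
  <ServerGarbageCollection>false</ServerGarbageCollection>
</PropertyGroup>
```

If the GC mode is also set elsewhere, that value may take precedence. It is worth checking the generated `*.runtimeconfig.json` in the build output, where this property is expected to surface as `System.GC.Server`.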

|

The change to Workstation GC seems to have helped! This is what the memory usage looks like after the deployment. (This is still on the development deployment, so this API is not getting any real load. I'll test it on production soon.) Btw. before doing the csproj change, I printed Do I understand correctly that the problem was caused by the combination of these two things?

So I should be able to switch back Server GC once I upgraded to .NET 2.0, and it'll respect the memory limit coming with |

|

Users allocate, GC doesn't 😉 I'd phrase it more as

This might be a good primer to show the difference: https://docs.microsoft.com/en-us/dotnet/standard/garbage-collection/fundamentals Generally you want to be using Server GC (the defaults) for a server app. I have a request in for manual limits https://github.com/dotnet/coreclr/issues/11338 |

|

@benaadams thanks for the clarification! Just a quick update: I also deployed to production, where the API has some load (~2 requests per second), and the memory usage seems to be stable so far: |

|

I am not 100% sure that server GC with 8 cores but a 1gb limit is ever a good idea. The core to memory ratio is out of whack. You get heaps per core in server gc. |

|

I'm trying to figure out if the cpu limit in Kubernetes actually limits the amount of cores it runs on or is just a cap on how much time a process is allowed to spend on all cores of the host combined. The particular application @markvincze mentions has a Kubernetes resource limit of 250m, which is a quarter of a core. However the host is an 8-core machine, hence the |

|

It will be treated as 8 core. The issue is 8 core with 1gb of ram. You have 125mb per core and with the server gc that is never going to be a good situation. I would recommend the workstation GC always in that scenario. |

|

@markvincze .NET Core 2.0, which has the fix, has just been released https://blogs.msdn.microsoft.com/dotnet/2017/08/14/announcing-net-core-2-0/ (ASP.NET Core 2.0 has also just been released https://blogs.msdn.microsoft.com/webdev/2017/08/14/announcing-asp-net-core-2-0/) |

|

@benaadams thanks for the info! (I'm working on upgrading my API already 🙂) |

|

Hello @markvincze! Did Helped in 1.1.0 or you had to upgrade to 2.0? |

|

It helped according to his report back. |

|

@Drawaes thank you, will try it |

|

Yes, it helped. Since then I upgraded to 2.0, but I still had to keep |

|

Detecting docker memory limit is in 2.0 (dotnet/coreclr@b511095) |

|

The memory limit was potentially an issue, but I think google was actually reporting 8 cores to the container. If that is the case then the server GC is never going to be comfortable with 500mb memory limit. Would be interesting to hear back what the Environment reports for CPU count now. |

@markvincze does it hit the memory threshold with 2.0 and get restarted or just use all the memory with 2.0? Hopefully it doesn't hit it; forcing a restart (prob via Else that's probably a bug 😄 |

|

@benaadams After upgrading to 2.0, if I switch to Server GC, it still runs over the limit and gets restarted. For example with setting the limit to 300MB it looks like this (graph is a bit flaky): |

|

Probably should raise an issue in https://github.com/dotnet/coreclr about that |

|

The interesting thing is that I can't reproduce the same issue with local Docker on my machine. Even if I pass in a small value with I'm trying to find now a way to verify if Kubernetes is actually passing in the memory resource limit as |
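One possible way to check what limit the container actually received is to read the cgroup file from inside the process. This is a rough sketch that assumes a cgroup v1 layout, where the limit is exposed at `/sys/fs/cgroup/memory/memory.limit_in_bytes`. The class name `CgroupMemoryLimit` is hypothetical, and the path may differ on other hosts:

```csharp
using System;
using System.IO;

public static class CgroupMemoryLimit
{
    // Assumption: cgroup v1 mount point; other setups may expose the limit elsewhere.
    private const string DefaultPath = "/sys/fs/cgroup/memory/memory.limit_in_bytes";

    // Returns the limit in bytes, or null if the file is missing or unparsable.
    public static long? TryRead(string path = DefaultPath)
    {
        if (!File.Exists(path))
        {
            return null;
        }

        string text = File.ReadAllText(path).Trim();
        return long.TryParse(text, out long bytes) ? bytes : (long?)null;
    }

    public static void Main()
    {
        long? limit = TryRead();
        Console.WriteLine(limit.HasValue
            ? $"cgroup memory limit: {limit.Value / (1024 * 1024)} MiB"
            : "no cgroup v1 memory limit file found");
    }
}
```

A very large value here usually means no limit was applied. A value matching the Kubernetes resource limit would suggest the limit is reaching the container and the problem lies elsewhere.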

|

Looks like someone else might be having container issue dotnet/core#871 |

Since updating to 1.1.0 (on Win10 64-bit), my server's memory usage keeps growing at about ~0.5 MB per minute. Is there a new setting that must be set, or is there a memory leak in 1.1.0?

It's very easy to reproduce:
