- Use Packer to build an Azure image that functions as a Squid proxy.

- Use Terraform to create a VM or VMSS as the Squid proxy instance(s).

- Deploy the necessary networking infrastructure.

- Deploy an Azure Databricks workspace with all outbound traffic routed through the Squid proxy, which gives granular ACL control over outbound destinations.

Credits to andrew.weaver@databricks.com for creating the original instructions to set up the Squid proxy, and to shu.wu@databricks.com for testing and debugging the init scripts for the Databricks cluster proxy setup.

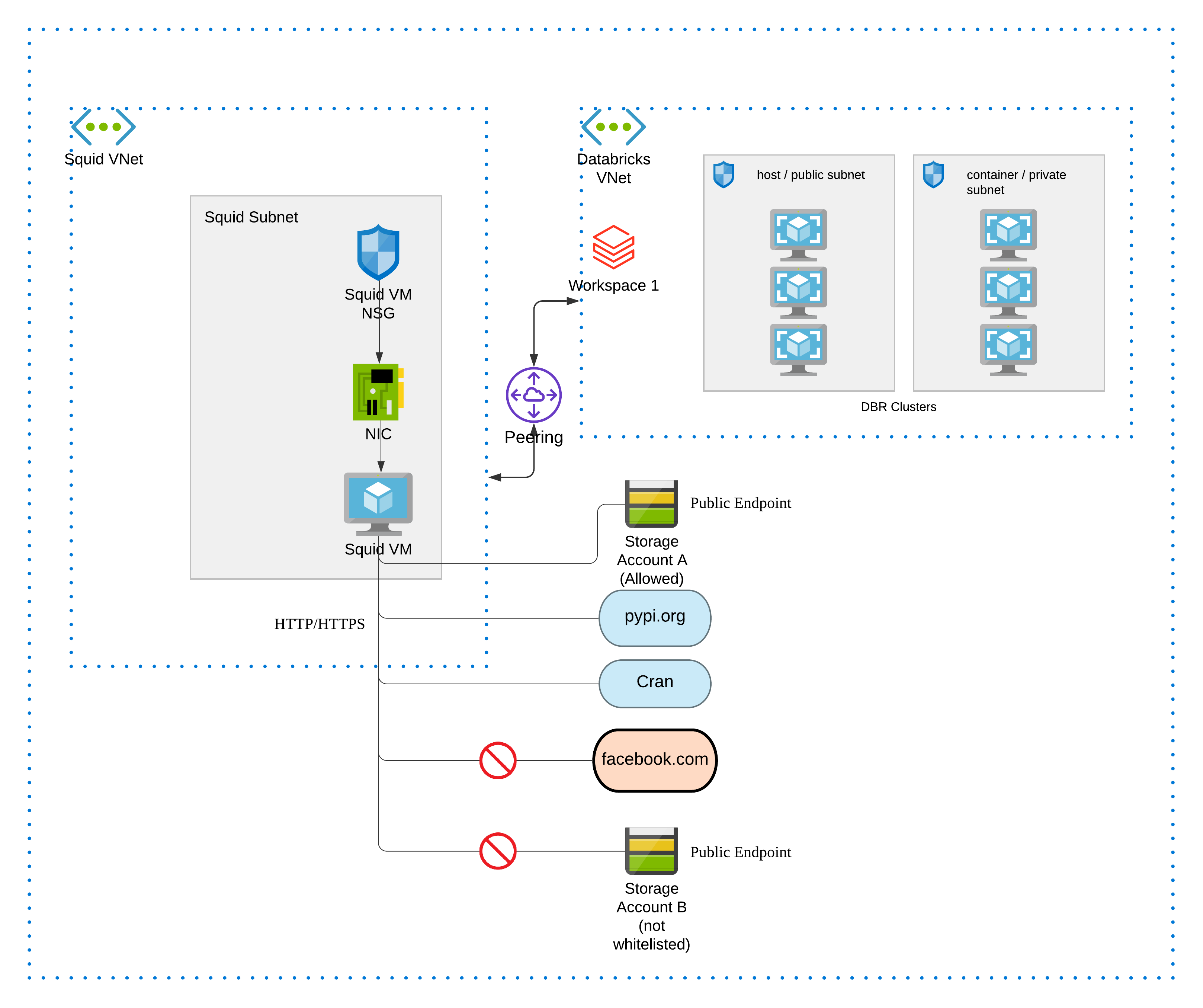

Narrative: Databricks workspace 1 is deployed into a VNet, which is peered to another VNet hosting a single Squid proxy server. Every Databricks Spark cluster is configured by an init script to direct its traffic to this Squid server. ACLs are controlled in squid.conf, so traffic to specific outbound destinations can be allowed or denied.

Does this also apply to Azure services whose traffic goes through the Azure backbone network?

This step creates an empty resource group to host the custom-built Squid image, and a local file of config variables in /packer/os.

Change to /packer/tf_coldstart and run:

terraform init

terraform apply

In this step you use Packer to build the Squid image. Packer reads the auto-generated *.auto.pkrvars.hcl file and builds the image.

Change to /packer/os and run:

packer build .

This step creates the rest of the infrastructure for this project, as specified in /main.

Change to /main and run:

terraform init

terraform apply
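The three stages above can be chained in a small wrapper script. The following is only a sketch: the helper names `run_in` and `deploy_all` and the `DRY_RUN` switch are illustrative assumptions, not part of this project. It uses just the commands already listed in the steps.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Run a command inside a directory; with DRY_RUN=1 only print what would run.
# (run_in, deploy_all and DRY_RUN are hypothetical helpers for this sketch.)
run_in() {
  local dir="$1"; shift
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "(cd $dir && $*)"
  else
    (cd "$dir" && "$@")
  fi
}

# Execute the stages in order, relative to the repository root ($1).
deploy_all() {
  local root="${1:-.}"
  run_in "$root/packer/tf_coldstart" terraform init
  run_in "$root/packer/tf_coldstart" terraform apply
  run_in "$root/packer/os" packer build .
  run_in "$root/main" terraform init
  run_in "$root/main" terraform apply
}

# Preview the sequence without touching any infrastructure.
DRY_RUN=1 deploy_all .
```

Calling `deploy_all .` without `DRY_RUN=1` would run the real commands; because of `set -e`, a failure in any stage stops the later stages from running.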

The /main folder now contains the auto-generated private key for SSH. To SSH into the provisioned VM, run:

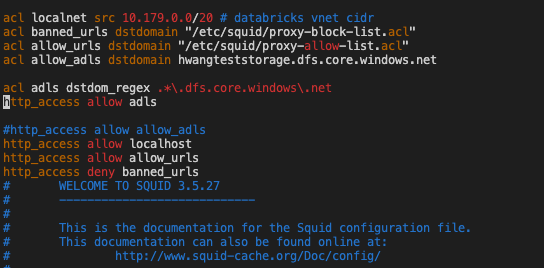

ssh -i ./ssh_private.pem azureuser@52.230.84.169

Replace the IP address with the public IP of your Squid VM. The NSG rules of the Squid VM include inbound rule 300, which allows SSH from any source. This is for testing purposes only! You do not need SSH on the Squid VM in a production setup. Once you are connected to the Squid VM, open /etc/squid/squid.conf (for example with vi) and you will find content similar to:

This content was inserted automatically by Packer in step 2.

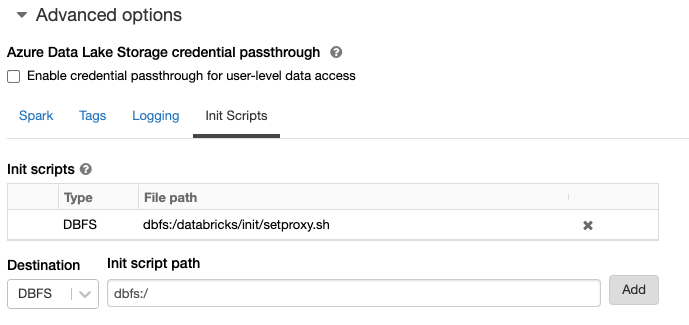

Open your Databricks workspace. A notebook has been created in the Shared/ folder; this notebook generates the cluster init script.

Create a small vanilla cluster to run this notebook.

Spin up another cluster using the init script generated in step 4; its Spark traffic will be routed to the Squid proxy.
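To give a rough idea of what a proxy init script does, here is a minimal sketch. It is not the script generated by the notebook. The proxy address `10.0.0.4`, the `no_proxy` list, and the function names are assumptions made for illustration; port 3128 is Squid's default listening port. The JVM flags are the standard Java networking system properties.

```shell
#!/usr/bin/env bash

# Hypothetical values: replace with the private IP and port of your Squid VM.
PROXY_HOST="${PROXY_HOST:-10.0.0.4}"
PROXY_PORT="${PROXY_PORT:-3128}"

# Print environment lines that point common command-line tools at the proxy.
proxy_env_lines() {
  local url="http://$1:$2"
  printf 'http_proxy=%s\n' "$url"
  printf 'https_proxy=%s\n' "$url"
  printf 'HTTP_PROXY=%s\n' "$url"
  printf 'HTTPS_PROXY=%s\n' "$url"
  printf 'no_proxy=%s\n' "localhost,127.0.0.1"
}

# Print JVM system properties that route Java HTTP(S) traffic via the proxy.
proxy_java_opts() {
  printf -- '-Dhttp.proxyHost=%s -Dhttp.proxyPort=%s -Dhttps.proxyHost=%s -Dhttps.proxyPort=%s\n' \
    "$1" "$2" "$1" "$2"
}

# A real init script would persist these (as root), for example:
#   proxy_env_lines "$PROXY_HOST" "$PROXY_PORT" >> /etc/environment
# Here the values are only printed for inspection.
proxy_env_lines "$PROXY_HOST" "$PROXY_PORT"
proxy_java_opts "$PROXY_HOST" "$PROXY_PORT"
```

The environment variables cover non-Spark tools such as curl or pip, while the JVM flags would typically be passed to Spark through options such as `spark.driver.extraJavaOptions`.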

Every cluster that spins up with this init script now routes Spark and non-Spark traffic to the Squid proxy, where the ACL rules in squid.conf are applied. Example effects are shown below:

Traffic to storage accounts is also allowed or blocked by the proxy. These rules are set in the /packer/scripts/setproxy.sh script.
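As an illustration of how a shell script can emit such rules, the sketch below writes a small allowlist fragment in Squid ACL syntax. It is not the content of setproxy.sh. The storage account name `examplestorageacct`, the ACL name `allowed_storage`, and the function name are hypothetical placeholders.

```shell
#!/usr/bin/env bash
set -eu

# Write an allowlist fragment in Squid ACL syntax to the file given as $1.
# The storage account name below is a hypothetical placeholder.
write_acl_fragment() {
  cat > "$1" <<'EOF'
# Destinations the clusters may reach (dstdomain matches the request host).
acl allowed_storage dstdomain examplestorageacct.blob.core.windows.net
acl allowed_storage dstdomain examplestorageacct.dfs.core.windows.net
http_access allow allowed_storage
# Anything not explicitly allowed above is rejected.
http_access deny all
EOF
}

# Write to a temporary file so the sketch never touches a live squid.conf.
fragment="$(mktemp)"
write_acl_fragment "$fragment"
cat "$fragment"
```

Squid evaluates `http_access` rules from top to bottom and applies the first match, so in a complete squid.conf the allow rules have to appear before any existing `http_access deny all` line.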

We used a single Squid proxy server instance to apply granular control to outbound traffic from Databricks clusters. You can use a cluster policy to enforce the init script, so that all clusters abide by the init script config and go through the proxy.

Future work: expand from the current single-VM setup to a VMSS behind a load balancer. For now, this project achieves granular outbound traffic control without using a firewall.