
XGBoost has trouble modeling multiplication/division #4069

Comments

|

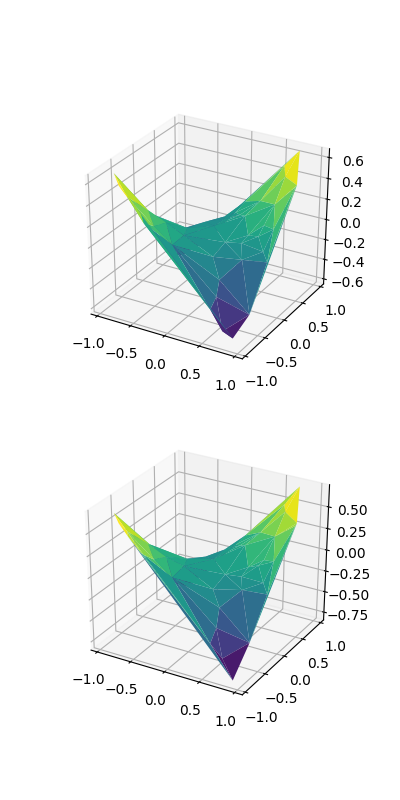

@ledmaster I tried to generate the following dataset, is it the right one? x = np.random.rand(64, 2)

x = x * 2 - 1.0

y_true = x[:, 0] * x[:, 1]Following above script: dtrain = xgb.DMatrix(x, label=y_true)

params = {

'tree_method': 'gpu_hist'

}

bst = xgb.train(params, dtrain, evals=[(dtrain, "train")], num_boost_round=10)

y_pred = bst.predict(dtrain)

# Z = pred_y

X = x[:, 0]

Y = x[:, 1]

fig = plt.figure(figsize=plt.figaspect(2.))

ax = fig.add_subplot(2, 1, 1, projection='3d')

ax.plot_trisurf(X, Y, y_pred, cmap='viridis')

ax = fig.add_subplot(2, 1, 2, projection='3d')

ax.plot_trisurf(X, Y, y_true, cmap='viridis')

plt.show()I got: Seems pretty reasonable. Did I generate the wrong dataset? |

|

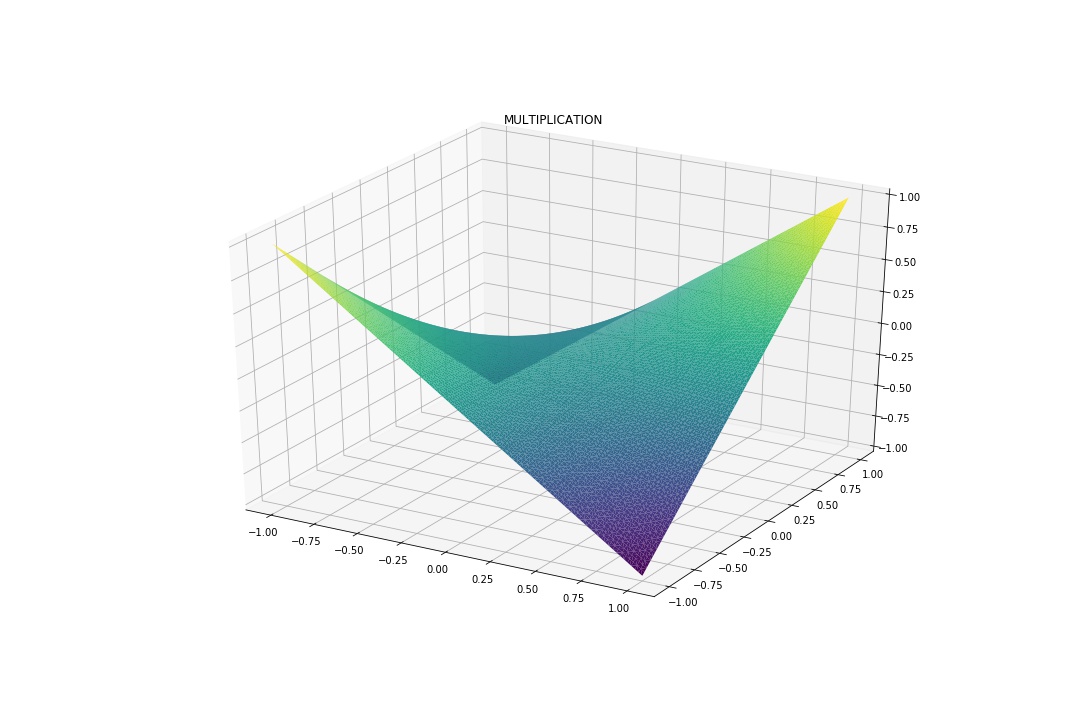

@trivialfis size = 10000

X = np.zeros((size, 2))

Z = np.meshgrid(np.linspace(-1,1, 100), np.linspace(-1,1, 100))

X[:, 0] = Z[0].flatten()

X[:, 1] = Z[1].flatten()

y_mul = X[:,0] * X[:, 1]

y_div = X[:,0] / X[:, 1]

ops = [('MULTIPLICATION', y_mul), ('DIVISION', y_div)]

for name, op in ops:

fig = plt.figure(figsize=(15,10))

ax = fig.gca(projection='3d')

ax.set_title(name)

ax.plot_trisurf(X[:, 0], X[:, 1], op, cmap=plt.cm.viridis, linewidth=0.2)

#plt.show()

plt.savefig("{}.jpg".format(name))

ops = [('MULTIPLICATION', y_mul), ('DIVISION', y_div)]

for name, op in ops:

mdl = xgb.XGBRegressor()

mdl.fit(X, op)

fig = plt.figure(figsize=(15,10))

ax = fig.gca(projection='3d')

ax.set_title("{} - NOISE = 0".format(name))

ax.plot_trisurf(X[:, 0], X[:, 1], mdl.predict(X), cmap=plt.cm.viridis, linewidth=0.2)

#plt.show()

plt.savefig("{}_noise0.jpg".format(name))Figure for the non predict plot: |

I lose. Adding small normal noise:

```python
noise = np.random.randn(size)
noise = noise / 1000
y = y + noise  # somehow this helps XGBoost leave the local minimum
dtrain = xgb.DMatrix(X, label=y)
```

I'm not sure if this is a bug. XGBoost relies on a greedy algorithm after all. Would love to hear some other opinions. @RAMitchell

I agree with @trivialfis - it's not a bug. But it's a nice example of data with "perfect symmetry" and an unstable balance. With such a perfect dataset, when the algorithm is looking for a split, say in variable X1, the sums of residuals at each X1 location with respect to X2 are always zero, so it cannot find any split and can only approximate the overall average. Any random disturbance, e.g., some noise or
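The zero-residual argument above can be checked with a small sketch. It is written in plain Python and does not call XGBoost. The helper `best_split_gain`, the constant starting prediction equal to the mean of the targets, and the simplified squared-error gain `G_L^2/(n_L + lambda) + G_R^2/(n_R + lambda) - G^2/(n + lambda)` with `lambda = 1` are all assumptions made for illustration; they resemble, but are not, XGBoost's actual split-finding code.

```python
import math
import random

# Symmetric 100 x 100 grid on [-1, 1], mirroring the dataset from the thread.
n = 100
grid = [(2 * i - (n - 1)) / (n - 1) for i in range(n)]
points = [(x1, x2) for x1 in grid for x2 in grid]
y_clean = [x1 * x2 for x1, x2 in points]

# Same targets with small Gaussian noise (standard deviation 1/1000).
random.seed(0)
y_noisy = [v + random.gauss(0.0, 1.0) / 1000 for v in y_clean]


def best_split_gain(y, reg_lambda=1.0):
    """Largest simplified split gain over all single-feature thresholds.

    Hypothetical helper for illustration only, not XGBoost's implementation.
    """
    mean_y = math.fsum(y) / len(y)
    residuals = [v - mean_y for v in y]  # residuals of a constant prediction

    def score(rs):
        return math.fsum(rs) ** 2 / (len(rs) + reg_lambda)

    parent = score(residuals)
    best = 0.0
    for feature in (0, 1):
        for t in grid[1:]:  # every threshold that leaves both sides non-empty
            left = [r for p, r in zip(points, residuals) if p[feature] < t]
            right = [r for p, r in zip(points, residuals) if p[feature] >= t]
            best = max(best, score(left) + score(right) - parent)
    return best


gain_clean = best_split_gain(y_clean)
gain_noisy = best_split_gain(y_noisy)
print("best gain without noise:", gain_clean)
print("best gain with noise:   ", gain_noisy)
```

Under these assumptions, the noise-free gain should be zero up to floating-point error for every threshold, while the noisy data should yield a small but strictly positive gain, which is enough for a greedy learner to make a first split.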

@khotilov That's a very interesting example. I wouldn't have come up with it myself. Maybe we can document it in a tutorial?

@ledmaster Thanks for the article: http://mariofilho.com/can-gradient-boosting-learn-simple-arithmetic/

Thanks @khotilov and @trivialfis for the answers and investigation. As @hcho3 mentioned above, I wrote an article about GBMs and arithmetic operations, which is how I ended up finding the issue. I added your answer there. Feel free to link to it.

Decision trees can only look at one feature at a time. In your example, if I take any 1D feature range such as 0.25 < f0 < 0.5 and average all the samples in that slice, I suspect you will always get exactly 0 due to the symmetry of your problem. So XGBoost can't find anything when it looks from the perspective of a single feature.
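A minimal sketch of the slice argument, again in plain Python on the same symmetric grid. The helper `slice_mean` and the extra conditioning on the sign of `f1` are illustrative assumptions, not something taken from the thread; they are meant to show that the signal only appears once both features are considered together.

```python
import math

# Symmetric 100 x 100 grid on [-1, 1] with target y = f0 * f1.
n = 100
grid = [(2 * i - (n - 1)) / (n - 1) for i in range(n)]
samples = [(f0, f1, f0 * f1) for f0 in grid for f1 in grid]


def slice_mean(rows):
    """Mean target over a list of (f0, f1, y) rows (hypothetical helper)."""
    return math.fsum(y for _, _, y in rows) / len(rows)


# The 1D slice from the comment: 0.25 < f0 < 0.5, any value of f1.
in_slice = [r for r in samples if 0.25 < r[0] < 0.5]

# The same slice, additionally restricted by the sign of the other feature.
upper_half = [r for r in in_slice if r[1] > 0]
lower_half = [r for r in in_slice if r[1] < 0]

mean_slice = slice_mean(in_slice)
mean_upper = slice_mean(upper_half)
mean_lower = slice_mean(lower_half)
print("mean over slice:        ", mean_slice)
print("mean over slice, f1 > 0:", mean_upper)
print("mean over slice, f1 < 0:", mean_lower)
```

The slice mean should vanish because every sample with `f1` is cancelled by its mirror image at `-f1`, while the two conditional means are clearly positive and negative. A tree would need a first split on one feature before the second becomes informative, but on perfectly symmetric data the greedy search sees no gain in making that first split.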

Hi,

I am using Python 3.6 and XGBoost version 0.81. When I try a simple experiment, creating a matrix X of numbers between -1 and 1 and then setting Y = X1 * X2 or Y = X1 / X2, XGBoost can't learn the function and predicts a constant number.

Now, if I add Gaussian noise, it can model the function:

I tried changing the range, tuning hyperparameters and base_score, and using the native xgb.train vs. XGBRegressor, but couldn't make it learn.

Is this a known issue? Do you know why it happens?

Thanks
