
Proposal: IL optimization step #15929

Comments

|

Does something like rewriting LINQ as loops fit into a process like this? |

|

Speaking of optimizations,

    int square(int num) {
        int a = 0;
        for (int x = 0; x < num; x += 2) {
            if (x % 2) {
                a += x;
            }
        }
        return a;
    }

gcc changes the above function to return zero. 😄 |
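One way to see why the compiler is entitled to do that: `x` starts at 0 and only ever grows by 2, so it is always even and `x % 2` is always 0. The sketch below restates the loop next to the constant function it collapses to. The name `square_folded` is hypothetical and only stands in for what the optimizer effectively emits.

```c
/* The loop from the comment above, unchanged in behavior. */
int square(int num) {
    int a = 0;
    for (int x = 0; x < num; x += 2) {
        if (x % 2) {          /* x is always even, so this branch never runs */
            a += x;
        }
    }
    return a;
}

/* Hypothetical name: the form the loop can be reduced to,
   since the accumulator is never modified. */
int square_folded(int num) {
    (void)num;                /* the argument no longer matters */
    return 0;
}
```

For any input where the loop counter does not overflow, both functions return 0.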

|

And when we're at it! maybe I don't know but maybe this is the right phase for handling |

|

I think IL rewrite and constexpr are two separate things. |

|

@fanoI They are two different things but what led me to write this is really this part:

Maybe during debug compilation the compiler won't evaluate functions as Just because they are two different things doesn't mean it's not the right phase to handle it. |

|

Part of the challenge in building an IL-IL optimizer is that in general the scope of optimizations is limited to a method or perhaps a single assembly. One can do some cool things at this scope but quite often the code one would like to optimize is scattered across many methods and many assemblies. To broaden the scope, you can gather all the relevant assemblies together sometime well before execution and optimize them all together. But by doing this you inevitably build in strong dependence on the exact versions of the assemblies involved, and so the resulting set of optimized IL assemblies must now be deployed and versioned and serviced together. For some app models this is a natural fit; for others it is not. Engineering challenges abound. @mikedn touched on some of them over in #15644, but there are many more. Just to touch on a few:

I'm not saying that building an IL-IL optimizer is a bad idea, mind you -- but building one that is broadly applicable is a major undertaking. |

In C++ we face the exact same problem, don't we? Static linking vs. dynamic linking? There are trade-offs, and yet many people choose static linking for their applications. All this can be behind a compiler flag; I guess it needs to be an opt-in feature. |

What problem? C++ has headers and many C++ libraries are header only, starting with the C++ standard library (with a few exceptions). Besides, many used static linking for convenience, not for performance reasons. And static linking is certainly not the norm, for example you can find many games where the code is spread across many dlls.

Such a feature is being worked on. It's called corert. |

I think you missed my point... My point is that people who do choose static linking face the following problems, which he mentions in his post:

It certainly isn't the norm for games, but for many other applications static linking is fairly common. I wasn't speaking about performance at all, and it wasn't my intention to imply that the analogy has anything to do with the discussed feature itself. I merely wanted to point out that in the C++ world some people accept these trade-offs. If people do that out of convenience, then they would surely do it if it can increase performance, especially in the .NET world: they might pay the price for bundling everything together, but they would get optimized code, which might be worth it for them.

As far as I understand CoreRT is mostly designed for AOT scenarios and the emitted code isn't really IL, this isn't part of Roslyn and there's no compiler flag so how is it related? or as you said being worked on? maybe I'm missing something. |

Could be. It's somewhat unavoidable when apples and oranges comparisons are used. The C++ world is rather different from the .NET world and attempts to make decisions in one world based on what happens in the other may very well result in failure.

Why do you think that bundling everything together would increase performance? Do you even know that you have all the code after bundling? No, there's always Can you transform a virtual call into a direct call because you can see that no class has overridden the called method? No, you can't because someone may create a class at runtime and override that method. Can you look at all the

Well, do you want better compiler optimizations? Are you willing to merge all the code into a single binary and give up runtime code loading? Are you willing to give up runtime code generation? Are you willing to give up full reflection functionality? Then it sounds to me that CoreRT is exactly what you're looking for. Do you want it to emit IL? Why would you want that? I thought you wanted performance. Do you want it to be part of Roslyn as a compiler flag? Again, I thought you wanted performance. The problem with this proposal is that it is extremely nebulous. Most people just want better performance and don't care how they get it. Some propose that we might get better performance by doing certain things but nobody knows exactly what and how much performance we can get by doing that. And then we have the Roslyn team, who had to cut a lot of stuff from C# 7 to deliver it. And the JIT team, who also have a lot of work to do. And the CoreRT team, which for whatever reason moves rather slowly. And the proposition is to embark on this new nebulous project that overlaps existing projects and delivers unknowns. Hmm... |

Okay, first of all I didn't just compare two worlds, I made an analogy between two worlds, and I merely pointed out that people choose one thing over the other regardless of the trade-offs in one world, so they might do the same in a different world where the impact might be greater...

I never said it will increase performance! I said that if it can increase performance then people will use it for this fact alone and will accept the trade-offs he pointed out in his post. I'm not an expert on the subject so I'm not going to pretend like my words are made of steel, but based on what @AndyAyersMS wrote it seems like bundling assemblies can eliminate some challenges. By everything I only refer to the assemblies you own, not 3rd-party ones.

No, I don't know.

ATM I don't know what the trade-offs would be; there's no proposal about it or design work that delves into limitations, so how did you decide what the limitations of such a feature would be?

Maybe... but I don't think so.

Just because I want to optimize existing IL for performance to reduce run-time overheads it means that now I want to compile it to native code?

I agree but how would you get to something without discussing it first? don't you think we need to have an open discussion about it? and what we really want to get out of it? This isn't really a proposal, this is the reason it was marked as

You're right but I don't think it's our job as a community to get into their schedule, we can still discuss things and make proposals without stepping into their shoes, when and if these things will see the light of day, that's a different story so to that I'd say patience is a virtue. |

|

@eyalsk we're always happy to take in feedback and consider new ideas. And yes, we have a lot of work to do, but we also have the responsibility to continually evaluate whether we're working on the right things and adapt and adjust. So please continue to raise issues and make proposals. We do read and think about these things. I personally find it easier to kickstart a healthy discussion by working from the specific towards the general. When someone brings concrete ideas for areas where we can change or improve, or points out examples from existing code, we are more readily able to brainstorm about how to best approach making things better. When we see something broad and general we can end up talking past one another as broad proposals often create more questions than answers. The discussion here has perhaps generalized a bit too quickly and might benefit from turning back to discussing specifics for a while. So I'm curious what you have in mind for a Likewise for some of the other things mentioned above: linq rewriting, things too expensive to do at jit time, etc.... |

I'm still thinking about Personally, I want |

Well, it was a rhetorical question.

Some but not all. As already mentioned, even if you have all the code there are problems with reflection and runtime code generation. And if you limit yourself only to your own code then the optimization possibilities will likely be even more limited.

The trouble is that I have no idea what we are discussing. As I already said, this whole thing is nebulous.

Dragging constexpr into this discussion only serves to muddy the waters. Constexpr, as defined in C++, has little to do with performance. C++ got It may be useful to be able to write |

|

The scenario I'm thinking of in particular is ASP.NET app publish

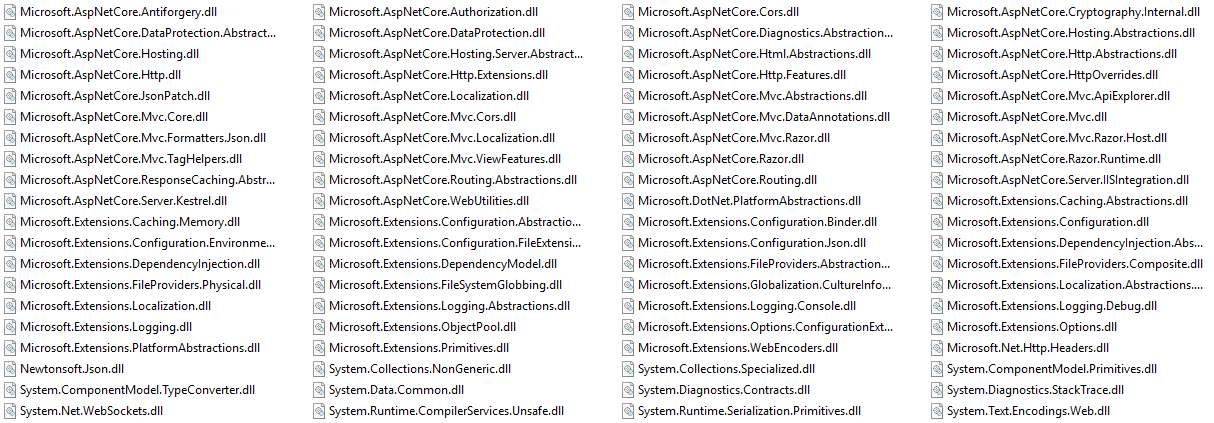

It would be a partial link step of everything above .NET Standard/Platform Runtime; e.g. app+dependencies, which is still quite a lot of things e.g. these are the ASP.NET dependencies brought into the publish directory: So it would still use platform/global versioned .NET Standard dlls ( Sort of the inverse of what Xamarin does for Android and iOS; where it focuses on mscorlib (discarding unused code), then optionally your own libraries. So you'd end up with a single dll + pdb for deployment of your app; that would remain platform independent and portable; and be jitted at runtime. With windows or linux, 32 bit or 64 bit remaining a deferred choice and operational decision rather than a pre-baked developer decision.

No, because I want the benefits the runtime JIT provides. I want Vector acceleration enabled for the specific CPU architecture it's deployed on, I want runtime readonly statics converted to consts and branch elimination because of it; etc... I want a portable cross platform executable etc... |

So far this sounds just like an ordinary IL merge tool.

Why at runtime? What's wrong with crossgen?

Why not add such missing features to CoreRT/Crossgen? |

And this is the point: I'm suggesting that optimizations can be made that Roslyn won't (or can't) do and the JIT can't do, either due to lack of a whole program view or lack of time.

Crossgen doesn't work for VM deployed apps which can be resized and moved between physical hardware yet remain the same fully deployed O/S; unless the first start up step is to recheck every assumption made and potentially re-crossgen. E.g. on Azure if I switch between an A series VM and an H series: if it's compiled for the H series it will fail on the A series; if it's compiled for the A series it won't take advantage of the H series' improved CPUs. |

This is not correct. crossgen will either not generate the native code at all, leaving a hole in the native image so that the method gets JITed at runtime from IL; or it will generate code that works for all supported CPUs. We are actually too conservative in some cases and leave too many holes in the native images, for example https://github.com/dotnet/coreclr/issues/7780 |

Good to know; I take that back 😁 Will the code it generates be more conservative the other way also, e.g. take less advantage of newer procs, or will it only generate the code that will be the same? Maybe a better example then would be |

The whole program view has been mentioned before and it is problematic. The lack of time is a bit of a red herring. Crossgen doesn't lack time. And one can imagine adding more JIT optimizations that are opt-in if they turn out to be slow. And the fact that you do some IL optimizations doesn't mean that the JIT won't benefit from having more time.

That sounds like a reasonable solution unless the crossgen time is problematic.

Yes but sometimes that isn't exactly useful. If I know that all the CPUs the program is going to run on have AVX2 then I see no reason why crossgen can't be convinced to generate AVX2 code. |

I wasn't trying to answer any question but to clarify what I wrote.

Sometimes, some optimization is better than nothing. Again, we're not speaking about details here so I don't know whether it makes sense or not. I know it's going to be limited, but this limit is undefined and maybe, just maybe, it would be enough to warrant this feature. I really don't know.

I guess I can respect that but I wasn't speaking about

That's your own interpretation and opinion not a fact because many people would tell you a different story. However, I did not imply that

Yeah? The moment you start asking questions like "why is it required?" you soon realize that it has a lot to do with performance, and not as little as you think. But really, performance is a vague word without context, so it's useless to speak about it.

Yeah, okay I won't derail the discussion any further about it. |

LOL, it is esoteric. You want some kind of IL optimizer but at the same time you want CPU dependent optimizations 😄 |

I was saying why I wanted an il optimizer and also runtime Jitted code. |

|

@AndyAyersMS for LINQ optimization I assume the kind of things done by LinqOptimizer or roslyn-linq-rewrite, which essentially convert LINQ to procedural code, dropping interfaces for concrete types etc. |
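As a rough analogy for what "converting LINQ to procedural code" means, here is a sketch in C, where function pointers stand in for delegates. This is not how LinqOptimizer or roslyn-linq-rewrite are implemented. All names (`sum_where_select`, `sum_even_times_ten`, `is_even`, `times_ten`) are hypothetical and chosen only for illustration.

```c
#include <stddef.h>

typedef int (*predicate_fn)(int);   /* plays the role of a Where delegate  */
typedef int (*selector_fn)(int);    /* plays the role of a Select delegate */

int is_even(int v)   { return v % 2 == 0; }
int times_ten(int v) { return v * 10; }

/* Callback style: two indirect calls per element, roughly what a
   Where(...).Select(...).Sum() pipeline pays for its delegates. */
long sum_where_select(const int *items, size_t n,
                      predicate_fn where, selector_fn select) {
    long total = 0;
    for (size_t i = 0; i < n; i++) {
        if (where(items[i])) {
            total += select(items[i]);
        }
    }
    return total;
}

/* Rewritten style: the same query with both stages inlined into one
   loop, so there are no indirect calls left for the JIT to deal with. */
long sum_even_times_ten(const int *items, size_t n) {
    long total = 0;
    for (size_t i = 0; i < n; i++) {
        if (items[i] % 2 == 0) {
            total += items[i] * 10;
        }
    }
    return total;
}
```

The rewritten form is only equivalent because the predicate and selector are known and free of side effects, which is the kind of assumption a rewriter has to make about the query.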

Yeah, I know. But it's funny because the IL optimizer is supposed to do some optimizations without knowing the context and then the JIT has to sort things out. |

|

CoreRT compiler or crossgen are focused on transparent non-breaking optimizations. They have limited opportunities to change the shape of the program because such changes are potentially breaking for the reasons mentioned here - anything can be potentially inspected and accessed via reflection, etc. I do like the idea of linking or optimizing a set of assemblies (not the entire app) together at the IL level. It may be interesting to look at certain optimizations discussed here as plugins for https://github.com/mono/linker. The linker is changing the shape of the program already, so it is a potentially breaking, non-transparent optimization. If your program depends on reflection, you have to give hints to the linker about what it can or cannot assume. If the IL optimizer can see a set of assemblies and it can be assured that nothing (or only a subset) is accessed externally or via reflection, it opens opportunities for devirtualization and more aggressive tree shaking at the IL level. The promised assumptions can be enforced at runtime - DisablePrivateReflectionAttribute is prior art in this space. Similarly, if you give the optimizer hints that your LINQ expressions are reasonable (functional and do not depend on the exact side-effects), it opens opportunities for optimizing LINQ expressions - like what is done by the LINQ optimizers mentioned by @benaadams . Or if you are interested in optimizations for size, you can instruct the linker that you do not care about error messages in ArgumentExceptions and it can strip all the error messages. This optimization is actually done in a custom way in the .NET Native for UWP toolchain. |

|

I think the benefit is that a general purpose tool applies to all IL based languages and can include all optimizations. Things like stripping the |

|

Other optimizations like replacing multiple comparisons with a single compare and such work as well: There are tens (if not hundreds) of these micro-optimizations that native compilers do, and things like the AOT could do, but which the JIT may not be able to do (due to time constraints). |
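The snippet that originally accompanied this comment is not preserved here. As a stand-in, the following sketch shows one well-known instance of folding two comparisons into one: a range check done with a single unsigned compare. The function names are hypothetical, and the single-compare form assumes `lo <= hi`.

```c
/* Straightforward form: two comparisons and a logical AND. */
int in_range_two_compares(int x, int lo, int hi) {
    return x >= lo && x <= hi;
}

/* Single-compare form (assumes lo <= hi). The subtraction is done in
   unsigned arithmetic, which wraps instead of overflowing: any x below
   lo wraps to a large value that exceeds hi - lo. */
int in_range_one_compare(int x, int lo, int hi) {
    return (unsigned)x - (unsigned)lo <= (unsigned)hi - (unsigned)lo;
}
```

This particular rewrite does not depend on the target hardware, which is why it is at least expressible at the IL level.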

That happens to work but another similar one -

And like the one above, many won't be possible in IL because they require knowledge about the target hw. Or the optimization result is simply not expressible in IL. You end up with a tool that can't do its job properly but needs a lot of effort to be written. And it's duplicated effort since you still need to optimize code.

Eh, the eternal story of time constraints. Except that nobody attempted to implement such optimizations in the JIT to see how much time they consume. For example, in some cases the cost of recognizing a pattern might be amortized by generating smaller IR, fewer basic blocks, less work in the register allocator and so on. Besides, some people might very well be willing to put up with a slower JIT if it produces faster code. Ultimately what people want is for code to run faster. Nobody really cares how that happens (unless it happens in such a cumbersome manner that it gives headaches). That MS never bothered too much with the JIT doesn't mean that there's no room for improvement or that IL optimizers need to be created. Not to say that IL rewriters are completely pointless. But they're not a panacea for CQ problems. |

|

Thanks, @tannergooding, I realize that roslyn-linq-rewrite's scope is narrower than "everything". What I'm trying to ask is, for the sort of transforms that are in its scope, is there a benefit/desire to have an IL rewrite step perform those same ones? If so, why, and if not, what similar transforms have people had in mind when pointing to it as an example? |

|

I would think that a general purpose IL rewriter should be scoped to whatever optimizations are valid and would likely duplicate some of the ones the JIT/AOT already cover. As @mikedn pointed out, there are plenty that can't be done (or can only be partially done) based on the target bitness/endianness/etc. However, I would think (at least from a primitive perspective) this could be made easier by building on top of what the JIT/AOT compilers already have. That is, today the JIT/AOT compilers support reading and parsing IL, as well as generating and transforming the various trees they create from the IL. If that code was generalized slightly, I would imagine it would be possible to perform machine-independent transformations and save them back to the assembly outside of the JIT itself. This would mean that the JIT can effectively skip most of the machine-independent transformations (or prioritize them lower) for code that has gone through this pass and it can instead focus on the machine-dependent transformations/code-gen. Not that it would be easy to do so, but I think it would be beneficial in the long run (and having the JIT/AOT code shared/extensible would also, theoretically, allow other better tools to be written as well). |

|

Yes, I also realize that an IL rewrite step needn't be limited to things that are in scope for roslyn-linq-rewrite. This thread has many specific suggestions of those. I am trying to ask a question specifically about the linq-related suggestions that have been made on this thread, please don't interpret it as a statement on anything beyond that. Since my question keeps getting buried by answers to a more general one that wasn't what I was trying to ask, I'll repeat it: for the sort of transforms that roslyn-linq-rewrite performs, is there a benefit/desire to have an IL rewrite step perform those same ones? If so, why, and if not, what similar transforms have people had in mind when pointing to it as an example? |

|

Sorry. I had misunderstood your question originally, my bad 😄 (if I still managed to derail your question below, just ping me and I'll remove).

I don't (personally) think there is any major benefit to having the transforms However, I do think there are some minor benefits:

I think LINQ is a big one just because it makes writing your code so much easier, but it can also slow your code down if not done carefully. I think auto-vectorization and auto-parallelization would be other similar transformations (just thinking of the more complex optimizations a native compiler might do). I think both of these are generally considered machine-independent (but of course, there are exceptions). |

You're so wrong here :) It has huge benefit.

It slows down your code whether you care about it or not, because it involves tons of delegate callbacks instead of pure imperative code. If you are working with a DB then you don't care because you are already on the slow path, but in-memory transformations via LINQ are so sweet and they're slow too, up to 100 times IIRC. For example, in my current project I use LINQ 1733 times in 8051 files. That seems to be a lot. |

Well, what is that benefit? That was the question. |

|

@mikedn see Steno project, I don't know. MS research: |

The question was not about the effect of the optimization. I don't think anybody questions the fact that LINQ is not exactly efficient and that replacing the zillions of calls and allocations it generates would speed things up. The question is about the various optimization approaches. Roslyn rewriter. IL rewriter. And, why not, even "JIT rewriter". |

|

|

So if I understand correctly, you prefer the Roslyn approach. Not because you actually need such optimizations to be implemented this way but because the other approaches seem more complicated. Makes sense, but at the same time it means that this implementation is tied to Roslyn and thus available to C# and VB only. Other languages will have to do their own thing. |

|

The way I see it is

.NET IL Linker is an example of linking/tree-shaking with whole program analysis. |

Yes, exactly. My team is looking at expanding the rewrites available in .NET IL Linker. I'm currently trying to assess what rewrites people are interested in having made available, for planning/prioritization purposes, which naturally brought me to this issue where there's been much discussion of that. LINQ rewriting seems to generate a lot of interest, and for the various reasons already mentioned above doesn't seem like it will have a home in the JIT or in Roslyn proper (where by "proper" I mean excluding opt-in extensions). So that would make it a good candidate, except that AFAIK anybody who would benefit from having it available to opt into in .NET IL Linker could just as well opt into it by adding roslyn-linq-rewrite to their build process. But I've been wondering if I'm somehow glossing over something with that line of reasoning, hence my questions about it. The takeaway I'm getting from the responses is that no, there isn't any benefit to LINQ rewriting in .NET IL Linker over what's already available via roslyn-linq-rewrite, and that LINQ rewriting has come up on this thread simply as an example of something useful that has been done that doesn't fit in the JIT or Roslyn proper. |

The difference is credibility. roslyn-linq-rewrite is a one-man project, last updated one year ago. It is a custom build of the Roslyn compiler. It is hard to use for any serious project in its current form. |

Come to think of it, the real reason such optimizations may not belong in the JIT hasn't been mentioned. These LINQ "optimizations" aren't optimizations in the true sense: those rewriters don't understand the System.Linq code and optimize it, they assume that LINQ's methods do certain things and generate code that supposedly behaves identically. That is, they treat those methods as intrinsics. That's something that the JIT could probably do too, except it requires generating significant amounts of IR and that may be cumbersome. But probably the main problem with doing this in the JIT is that, for better or worse, there's not a single JIT. There's RyuJIT, there's .NETNative, there's Mono... Sheesh, one way or another some duplicate work will happen.

So where's IL linker's repository? Let people create issues, discuss, vote on them etc. That's better than "hijacking" an existing thread like this. Granted, you may end up with a bunch of noise but that's life. Here's a fancy idea. What if the IL linker would CSE and hoist everything it can and then the JIT would do some kind of rematerialization to account for target architecture realities? Perhaps it would be cheaper for the JIT to do that instead of CSE. Granted... that's a bit beyond the idea of "linker" :) |
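To make the "CSE and hoist everything" idea concrete, here is a minimal sketch in C of what such a pass would do to a loop. It only illustrates the transformation and is not anything the IL linker does today. The function names are hypothetical.

```c
#include <stddef.h>

/* Before: the subexpression scale * scale + bias appears twice and is
   recomputed on every iteration, although it never changes. */
void fill_naive(int *out, size_t n, int scale, int bias) {
    for (size_t i = 0; i < n; i++) {
        out[i] = (int)i * (scale * scale + bias) + (scale * scale + bias);
    }
}

/* After: common subexpression elimination merges the two copies and
   loop-invariant hoisting moves the result out of the loop. */
void fill_hoisted(int *out, size_t n, int scale, int bias) {
    const int k = scale * scale + bias;   /* computed once */
    for (size_t i = 0; i < n; i++) {
        out[i] = (int)i * k + k;
    }
}
```

The hoisted value `k` stays live across the whole loop and so occupies a register or a stack slot. That is presumably where the suggested rematerialization in the JIT would come in: on a target with few registers it could recompute the value instead of keeping it around.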

I believe that it is hard for the more interesting LINQ optimizations to preserve all side-effects. It should not matter for well-written LINQ queries, but it is a problem for poorly written LINQ queries. It is ok for an opt-in build-time tool to change the behavior of poorly written LINQ queries. It is not ok for the JIT to do it at runtime. |

https://github.com/mono/linker

Yes, I completely agree it will be more productive to discuss potential new rewrites over there, with separate issues for each. I wasn't trying to extend general discussion on this thread, just ask a clarifying question about a few specific comments on it (to which I now have the answer, thanks @jkotas). |

|

I think the conclusion here is that such work would not likely be part of Roslyn, but more likely in https://github.com/mono/linker . However, we'll leave this open so people can find this. |

|

But in this case it's tied to mono, isn't it? What about core, full framework etc? |

|

It is not tied to mono. dotnet/announcements#30 |

The jit can only apply so many optimizations as it is time constrained at runtime.

AOT/NGen which has more time ends up with asm but loses some optimizations the jit can do at runtime as it needs to be conservative as to cpu architecture; static readonly to consts etc

The compile to il compilers (C#/VB.NET/etc); which aren't as time constrained but have a lot of optimizations considered out of scope.

Do we need a 3rd compiler between roslyn and jit that optimizes the il as part of the regular compile or a "publish" compile?

This could be a collaboration between the jit and roslyn teams?

I'm sure there are lots of low hanging fruit between the two; that the jit would like to do but are too expensive.

There is also the whole program optimization or linker + tree shaking which is also a component in this picture. (e.g. Mono/Xamarin linker). Likely also partial linking (e.g. nugets/non-platform runtime libs)

From #15644 (comment)

/cc @migueldeicaza @gafter @jkotas @AndyAyersMS
