-

Notifications

You must be signed in to change notification settings - Fork 1k

2 编写一个配置式爬虫

上一章介绍了基本爬虫的写法,这种写法呢完全由自己写解析,写数据存储,自由度很高但相对代码量也要多很多。DotnetSpider 默认实现了一种基于实体配置的爬虫编写方式。请看示例代码:

public class EntitySpider : Spider

{

public EntitySpider(IOptions<SpiderOptions> options, DependenceServices services,

ILogger<Spider> logger) : base(

options, services, logger)

{

}

protected override async Task InitializeAsync(CancellationToken stoppingToken = default)

{

AddDataFlow(new DataParser<CnblogsEntry>());

AddDataFlow(GetDefaultStorage());

await AddRequestsAsync(

new Request(

"https://news.cnblogs.com/n/page/1", new Dictionary<string, object> {{"网站", "博客园"}}));

}

protected override SpiderId GenerateSpiderId()

{

return new(ObjectId.CreateId().ToString(), "博客园");

}

[Schema("cnblogs", "news")]

[EntitySelector(Expression = ".//div[@class='news_block']", Type = SelectorType.XPath)]

[GlobalValueSelector(Expression = ".//a[@class='current']", Name = "类别", Type = SelectorType.XPath)]

[GlobalValueSelector(Expression = "//title", Name = "Title", Type = SelectorType.XPath)]

[FollowRequestSelector(Expressions = new[] {"//div[@class='pager']"})]

public class CnblogsEntry : EntityBase<CnblogsEntry>

{

protected override void Configure()

{

HasIndex(x => x.Title);

HasIndex(x => new {x.WebSite, x.Guid}, true);

}

public int Id { get; set; }

[Required]

[StringLength(200)]

[ValueSelector(Expression = "类别", Type = SelectorType.Environment)]

public string Category { get; set; }

[Required]

[StringLength(200)]

[ValueSelector(Expression = "网站", Type = SelectorType.Environment)]

public string WebSite { get; set; }

[StringLength(200)]

[ValueSelector(Expression = "Title", Type = SelectorType.Environment)]

[ReplaceFormatter(NewValue = "", OldValue = " - 博客园")]

public string Title { get; set; }

[StringLength(40)]

[ValueSelector(Expression = "GUID", Type = SelectorType.Environment)]

public string Guid { get; set; }

[ValueSelector(Expression = ".//h2[@class='news_entry']/a")]

public string News { get; set; }

[ValueSelector(Expression = ".//h2[@class='news_entry']/a/@href")]

public string Url { get; set; }

[ValueSelector(Expression = ".//div[@class='entry_summary']")]

[TrimFormatter]

public string PlainText { get; set; }

[ValueSelector(Expression = "DATETIME", Type = SelectorType.Environment)]

public DateTime CreationTime { get; set; }

}

}首先你需要定义一个数据实体,实体必须继承自 EntityBase<>,只有继承自 EntityBase<> 的数据实体才能被框架默认实现的解析器 DataParse 和 实体存储器

EntityStorage 存储

Schema 定义数据实体需要存到的哪个数据库、哪个表,可以支持表名后缀:周度、月度、当天

定义如何从文本中要抽出数据对象,若是没有配置此特性,表示这个数据对象为页面级别的,即一个页面只产生一个数据对象,也即一条数据。

如上示例代码中:

[EntitySelector(Expression = ".//div[@class='news_block']", Type = SelectorType.XPath)]

表示使用 XPath 查询器查询出符合 .//div[@class='news_block'] 的所有内容块,每个内容块为一个数据对象,也即对应一条数据。

定义从文本中查询出的数据暂存到环境数据中,可以供数据实体内部属性查询,可以配置多个。

如上示例代码中:

[GlobalValueSelector(Expression = ".//a[@class='current']", Name = "类别", Type = SelectorType.XPath)]

[GlobalValueSelector(Expression = "//title", Name = "Title", Type = SelectorType.XPath)]

表示使用 XPath 查询器 .//a[@class='current'] 结果若为 v 则保存为 { key: 类别, value: v },然后在数据实体中可以配置环境查询来设置值

[ValueSelector(Expression = "类别", Type = SelectorType.Environment)]

public string Category { get; set; }

定义如何从当前文本抽取合适的链接加入到 Scheduler 中,可以定义 xpath 查询元素以获取链接,也可以配置 pattern 来确定请求是否符合要求,若是不符合的链接则会完全忽略,即便在爬虫 InitializeAsync 中加入到 Scheduler 的链接,也要受到 pattern 的约束。

如上示例代码中:

[FollowRequestSelector(Expressions = new[] {"//div[@class='pager']"})]

默认表示使用 XPath 查询器 //div[@class='pager'] 查询到的页面元素里的所有链接都尝试加入到 Scheduler 中,也可以使用其它类型的查询器

定义如何从当前文本块中查询值设置到数据实体的属性中。需要注意的是,所有数据实体内的 ValueSelector 是基于 EntitySelector 查询到的元素为根元素。

支持的查询类型有:XPath、Regex、Css、JsonPath、Environment。其中 Environment 表示为环境值,其数据来源有:

-

构造 Request 时设置的 Properties

-

GlobalValueSelector 查询到的所有值

-

某些系统定义的值:

ENTITY_INDEX: 表示当前数据实体是当前文本查询到的所有数据实体的第几个 GUID:获取到一个随机的 GUID DATE:获取当天的时间,以 “yyyy-MM-dd” 格式化的字符串 TODAY:获取当天的时间,以 “yyyy-MM-dd” 格式化的字符串 DATETIME:获取当前时间,以 “yyyy-MM-dd HH:mm:ss” 格式化的字符串 NOW:获取当前时间,以 “yyyy-MM-dd HH:mm:ss” 格式化的字符串 MONTH:获取当月的第一天,以 “yyyy-MM-dd” 格式化的字符串 MONDAY:获取当前星期的星期一,以 “yyyy-MM-dd” 格式化的字符串 SPIDER_ID:获取当前爬虫的 ID REQUEST_HASH:获取当前数据实体所属请求的 HASH 值

只要是继承自 EntityBase 的数据实体都可以使用默认实现的数据解析器 DataParser,如上示例我们可以添加一个数据解析器

AddDataFlow(new DataParser<CnblogsEntry>());

我们可以使用默认的数据存储器

AddDataFlow(GetDefaultStorage());

若要使用默认的数据存储器,需要在 appsettings.json 中设置:

"StorageType": "DotnetSpider.MySql.MySqlEntityStorage, DotnetSpider.MySql",

"MySql": {

"ConnectionString": "Database='mysql';Data Source=localhost;password=1qazZAQ!;User ID=root;Port=3306;",

"Mode": "InsertIgnoreDuplicate"

},

其中 ConnectionString 是数据库连接字符串,StorageType 则是所要使用的存储器类型(需要包含程序集信息),Mode 表示数据存储器的模式:

Insert:直接插入,若遇到重复索引可能会有异常导致爬虫中止。所有数据库都支持

InsertIgnoreDuplicate:若数据没有违反重复约束则插入,若有重复则忽略,不是所有数据库都支持此种模式

InsertAndUpdate:若数据不存在则插入,重复则更新

Update:只做更新 class Program

{

static async Task Main(string[] args)

{

Log.Logger = new LoggerConfiguration()

.MinimumLevel.Information()

.MinimumLevel.Override("Microsoft.Hosting.Lifetime", LogEventLevel.Warning)

.MinimumLevel.Override("Microsoft", LogEventLevel.Warning)

.MinimumLevel.Override("System", LogEventLevel.Warning)

.MinimumLevel.Override("Microsoft.AspNetCore.Authentication", LogEventLevel.Warning)

.Enrich.FromLogContext()

.WriteTo.Console().WriteTo.RollingFile("logs/spiders.log")

.CreateLogger();

var builder = Builder.CreateDefaultBuilder<EntitySpider>(options =>

{

options.Speed = 1;

});

builder.UseDownloader<HttpClientDownloader>();

builder.UseSerilog();

builder.IgnoreServerCertificateError();

builder.UseQueueDistinctBfsScheduler<HashSetDuplicateRemover>();

await builder.Build().RunAsync();

Environment.Exit(0);

}

}

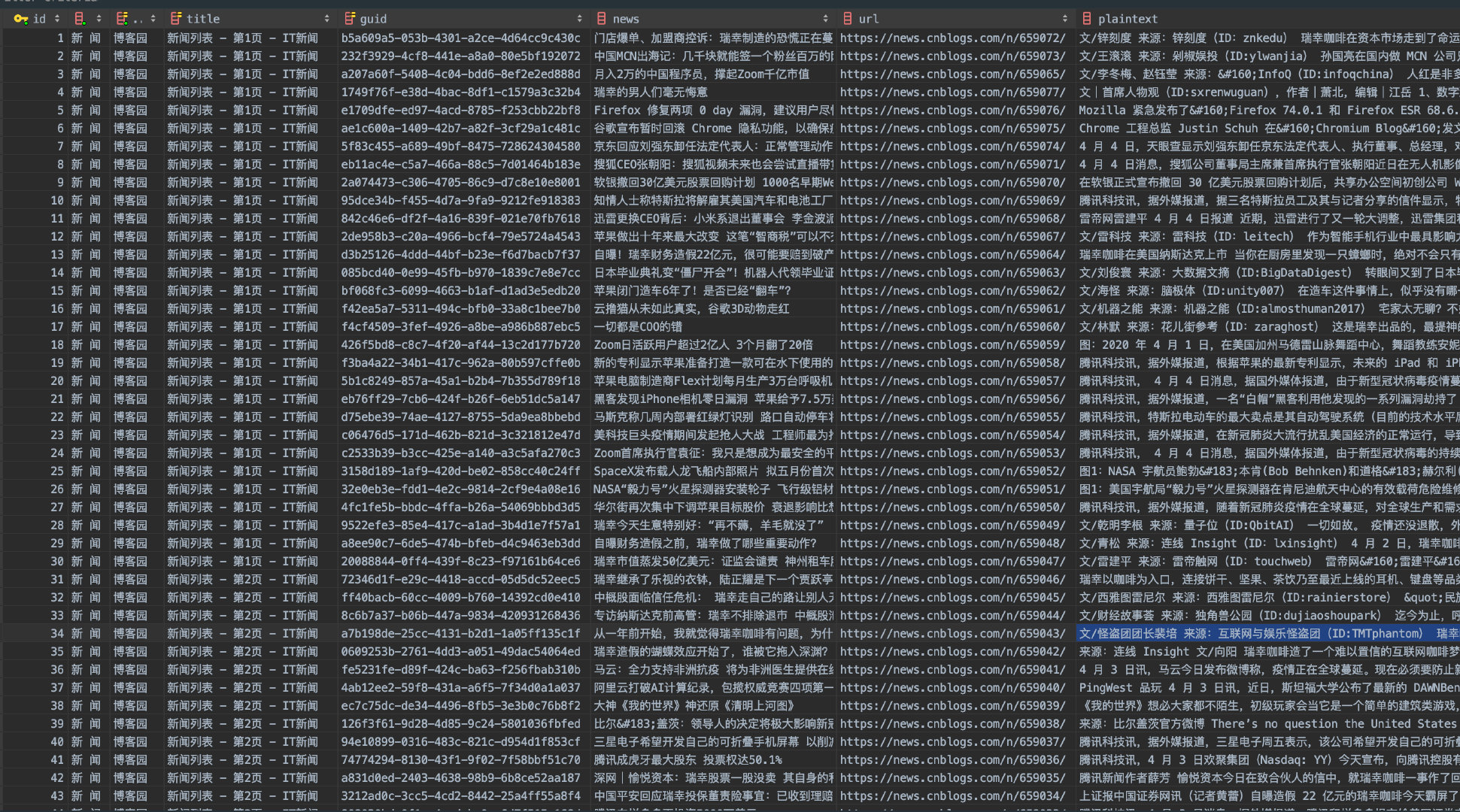

运行结果如下

[21:09:42 INF]

_____ _ _ _____ _ _

| __ \ | | | | / ____| (_) | |

| | | | ___ | |_ _ __ ___| |_| (___ _ __ _ __| | ___ _ __

| | | |/ _ \| __| '_ \ / _ \ __|\___ \| '_ \| |/ _` |/ _ \ '__|

| |__| | (_) | |_| | | | __/ |_ ____) | |_) | | (_| | __/ |

|_____/ \___/ \__|_| |_|\___|\__|_____/| .__/|_|\__,_|\___|_| version: 5.0.8.0

| |

|_|

[21:09:42 INF] RequestedQueueCount: 1000

[21:09:42 INF] Depth: 0

[21:09:42 INF] RetriedTimes: 3

[21:09:42 INF] EmptySleepTime: 60

[21:09:42 INF] Speed: 1

[21:09:42 INF] Batch: 4

[21:09:42 INF] RemoveOutboundLinks: False

[21:09:42 INF] StorageType: DotnetSpider.MySql.MySqlEntityStorage, DotnetSpider.MySql

[21:09:42 INF] RefreshProxy: 30

[21:09:42 INF] Agent is starting

[21:09:43 INF] Agent started

[21:09:43 INF] Initialize spider 602e6717de497c7ffc868c54, 博客园

[21:09:43 INF] 602e6717de497c7ffc868c54 DataFlows: DataParser`1 -> MySqlEntityStorage

[21:09:43 INF] 602e6717de497c7ffc868c54 register topic DotnetSpider_602e6717de497c7ffc868c54

[21:09:43 INF] Statistics service starting

[21:09:43 INF] Statistics service started

[21:09:44 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/1, NbBZNw== completed

[21:09:45 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/, NSx6sQ== completed

[21:09:46 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/2/, hJ4iug== completed

[21:09:47 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/3/, LROFdA== completed

[21:09:48 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/4/, UdblTQ== completed

[21:09:48 INF] 602e6717de497c7ffc868c54 total 11, speed: 0.82, success 4, failure 0, left 7

[21:09:49 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/5/, 0eqrGg== completed

[21:09:50 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/6/, bsaiBQ== completed

[21:09:51 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/7/, GIWSIQ== completed

[21:09:52 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/8/, WDsjmA== completed

[21:09:53 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/9/, 3KLWIA== completed

[21:09:53 INF] 602e6717de497c7ffc868c54 total 15, speed: 1.02, success 10, failure 0, left 5

[21:09:54 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/100/, dPpTZw== completed

[21:09:55 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/10/, rFzwJw== completed

[21:09:56 INF] 602e6717de497c7ffc868c54 download https://news.cnblogs.com/n/page/11/, fLGJ2g== completed