- | Type |

- Method |

- Description |

+ Type |

+ Method |

+ Description |

+

+ |

+

+ | Pre-processing |

+ Data Repairer |

+ Transforms the data distribution so that a given feature distribution is marginally independent of the sensitive attribute, s. |

+ |

- | Pre-processing |

- Data Repairer |

- Transforms the data distribution so that a given feature distribution is marginally independent of the sensitive attribute, s. |

+ Label Flipping |

+ Flips the labels of a fraction of the training data according to the Fair Ordering-Based Noise Correction method. |

+ |

- | Label Flipping |

- Flips the labels of a fraction of the training data according to the Fair Ordering-Based Noise Correction method. |

+ Prevalence Sampling |

+ Generates a training sample with controllable balanced prevalence for the groups in dataset, either by undersampling or oversampling. |

+ |

- | Prevalence Sampling |

- Generates a training sample with controllable balanced prevalence for the groups in dataset, either by undersampling or oversampling. |

+ Massaging |

+ Flips selected labels to reduce prevalence disparity between groups. |

+ |

- | Unawareness |

- Removes features that are highly correlated with the sensitive attribute. |

+ Correlation Suppression |

+ Removes features that are highly correlated with the sensitive attribute. |

+ |

- | Massaging |

- Flips selected labels to reduce prevalence disparity between groups. |

+ Feature Importance Suppression |

+ Iteratively removes the most important features with respect to the sensitive attribute. |

+ |

+

+ |

- | In-processing |

- FairGBM |

- Novel method where a boosting trees algorithm (LightGBM) is subject to pre-defined fairness constraints. |

+ In-processing |

+ FairGBM |

+ Novel method where a boosting trees algorithm (LightGBM) is subject to pre-defined fairness constraints. |

+ |

- | Fairlearn Classifier |

- Models from the Fairlearn reductions package. Possible parameterization for ExponentiatedGradient and GridSearch methods. |

+ Fairlearn Classifier |

+ Models from the Fairlearn reductions package. Possible parameterization for ExponentiatedGradient and GridSearch methods. |

+ |

- | Post-processing |

- Group Threshold |

- Adjusts the threshold per group to obtain a certain fairness criterion (e.g., all groups with 10% FPR) |

+ Post-processing |

+ Group Threshold |

+ Adjusts the threshold per group to obtain a certain fairness criterion (e.g., all groups with 10% FPR) |

+ |

- | Balanced Group Threshold |

- Adjusts the threshold per group to obtain a certain fairness criterion, while satisfying a global constraint (e.g., Demographic Parity with a global FPR of 10%) |

+ Balanced Group Threshold |

+ Adjusts the threshold per group to obtain a certain fairness criterion, while satisfying a global constraint (e.g., Demographic Parity with a global FPR of 10%) |

+ |

-
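To make the Group Threshold row in the table above concrete, here is a minimal standalone sketch of picking one threshold per group so that every group ends up with at most a 10% false positive rate. It does not use the `aequitas` implementation; the function names (`threshold_for_fpr`, `group_thresholds`, `false_positive_rate`) and the toy scores are hypothetical and exist only for illustration.

```python
from collections import defaultdict


def threshold_for_fpr(scores, labels, target_fpr):
    """Lowest threshold taken from the negatives' scores whose FPR <= target_fpr.

    An instance is predicted positive when score > threshold.
    """
    negatives = sorted((s for s, y in zip(scores, labels) if y == 0), reverse=True)
    # Number of negatives we can afford to misclassify as positive.
    allowed = int(target_fpr * len(negatives) + 1e-9)
    if allowed >= len(negatives):
        return float("-inf")  # every instance may be predicted positive
    # Only the `allowed` highest-scoring negatives (or fewer, with ties)
    # lie strictly above this value.
    return negatives[allowed]


def group_thresholds(scores, labels, groups, target_fpr):
    """Compute one threshold per value of the sensitive attribute."""
    by_group = defaultdict(lambda: ([], []))
    for s, y, g in zip(scores, labels, groups):
        by_group[g][0].append(s)
        by_group[g][1].append(y)
    return {g: threshold_for_fpr(sc, lb, target_fpr) for g, (sc, lb) in by_group.items()}


def false_positive_rate(scores, labels, threshold):
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not negatives:
        return 0.0
    return sum(s > threshold for s in negatives) / len(negatives)


# Toy data: group B receives systematically higher scores than group A.
neg_a = [round(0.05 * i, 2) for i in range(1, 11)]         # 0.05 .. 0.50
neg_b = [round(0.25 + 0.05 * i, 2) for i in range(1, 11)]  # 0.30 .. 0.75
scores = neg_a + [0.6, 0.7] + neg_b + [0.8, 0.9]
labels = [0] * 10 + [1, 1] + [0] * 10 + [1, 1]
groups = ["A"] * 12 + ["B"] * 12

thresholds = group_thresholds(scores, labels, groups, target_fpr=0.10)

# Check the resulting FPR inside each group.
fpr_by_group = {}
for g, t in thresholds.items():
    sc = [s for s, gg in zip(scores, groups) if gg == g]
    lb = [y for y, gg in zip(labels, groups) if gg == g]
    fpr_by_group[g] = false_positive_rate(sc, lb, t)
```

A single global threshold would give the two groups different false positive rates here, because group B's negatives score higher; the per-group thresholds absorb that shift.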

### Fairness Metrics

To calculate fairness metrics, `aequitas` provides the value of the confusion matrix metrics for each possible value of the sensitive attribute columns. The cells of the confusion matrix are:

@@ -214,13 +246,6 @@ From these, we calculate several metrics:

These are implemented in the [`Group`](https://github.com/dssg/aequitas/blob/master/src/aequitas/group.py) class. With the [`Bias`](https://github.com/dssg/aequitas/blob/master/src/aequitas/bias.py) class, several fairness metrics can be derived by different combinations of ratios of these metrics.
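As a rough illustration of the idea (not the `Group` or `Bias` API), the sketch below counts confusion matrix cells per group, derives two standard rates from them, and compares groups through a ratio. The helper names, the choice of FPR and FNR, and the use of group `A` as the reference group are assumptions made for this example.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, groups):
    """Count TP, FP, TN, FN separately for each group."""
    counts = {}
    for y, p, g in zip(y_true, y_pred, groups):
        cell = ("T" if y == p else "F") + ("P" if p == 1 else "N")
        counts.setdefault(g, Counter())[cell] += 1
    return counts


def _safe_div(num, den):
    return num / den if den else float("nan")


def group_rates(cells):
    """Derive rates from one group's confusion matrix cells."""
    return {
        "fpr": _safe_div(cells["FP"], cells["FP"] + cells["TN"]),
        "fnr": _safe_div(cells["FN"], cells["FN"] + cells["TP"]),
    }


# Toy predictions for two groups of six instances each.
y_true = [0, 0, 0, 0, 1, 1] + [0, 0, 0, 0, 1, 1]
y_pred = [1, 0, 0, 0, 1, 0] + [1, 1, 0, 0, 1, 1]
groups = ["A"] * 6 + ["B"] * 6

counts = confusion_counts(y_true, y_pred, groups)
metrics = {g: group_rates(c) for g, c in counts.items()}

# FPR: A = 1/4, B = 2/4. The ratio against the reference group A
# expresses how much more often B's negatives are flagged.
fpr_disparity = metrics["B"]["fpr"] / metrics["A"]["fpr"]
```

A ratio of 1 would mean both groups have the same false positive rate; values far from 1 in either direction point to a disparity worth investigating.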

-### 📔Example Notebooks

-

-| Notebook | Description |

-|-|-|

-| [Audit a Model's Predictions](https://colab.research.google.com/github/dssg/aequitas/blob/notebooks/compas_demo.ipynb) | Check how to do an in-depth bias audit with the COMPAS example notebook. |

-| [Correct a Model's Predictions](https://colab.research.google.com/github/dssg/aequitas/blob/notebooks/aequitas_flow_model_audit_and_correct.ipynb) | Create a dataframe to audit a specific model, and correct the predictions with group-specific thresholds in the Model correction notebook. |

-| [Train a Model with Fairness Considerations](https://colab.research.google.com/github/dssg/aequitas/blob/notebooks/aequitas_flow_experiment.ipynb) | Experiment with your own dataset or methods and check the results of a Fair ML experiment. |

## Further documentation

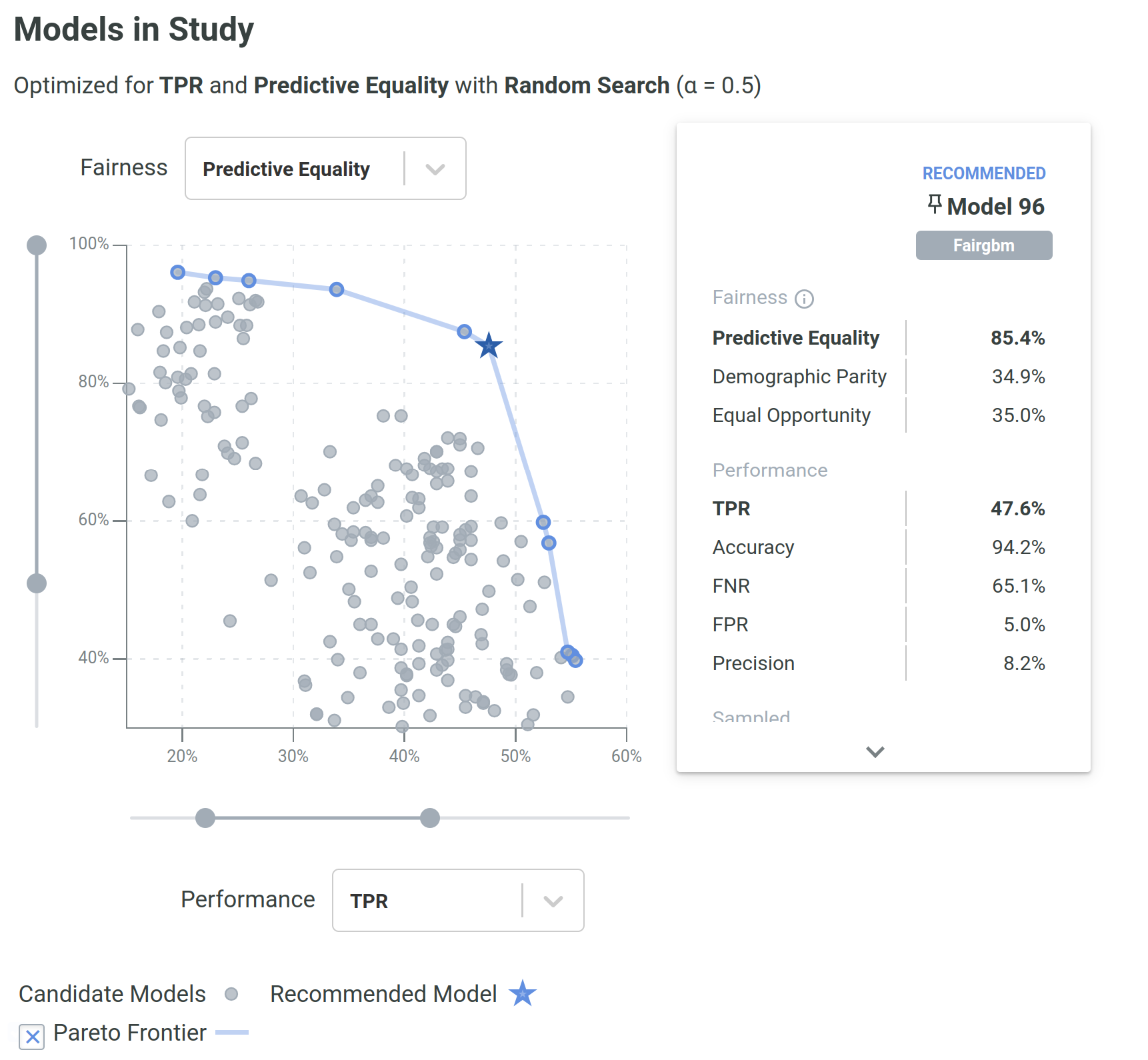

+The [`DefaultExperiment`](https://github.com/dssg/aequitas/blob/readme-feedback-changes/src/aequitas/flow/experiment/default.py#L9) class provides a simpler entry point to experiments in the package. It has two main parameters: `experiment_size` and `methods`. `experiment_size` sets the number of models trained per method: `test` (1), `small` (10), `medium` (50), or `large` (100). `methods` selects the methods to run: either `all` or a subset, namely `preprocessing` or `inprocessing`.

+

+Several aspects of an experiment (*e.g.*, algorithms, number of runs, dataset splitting) can be configured individually in more granular detail in the [`Experiment`](https://github.com/dssg/aequitas/blob/readme-feedback-changes/src/aequitas/flow/experiment/experiment.py#L23) class.
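To get a feel for what the `experiment_size` options imply, the following standalone sketch maps each option to its number of models per method and estimates the total number of models trained. It does not import `aequitas`; the `total_models` helper and the `n_methods` argument are hypothetical, and only the option names and per-method counts come from the description above.

```python
# Models trained per method for each `experiment_size` option.
MODELS_PER_METHOD = {"test": 1, "small": 10, "medium": 50, "large": 100}

# Accepted values for the `methods` parameter.
METHOD_SELECTIONS = ("all", "preprocessing", "inprocessing")


def total_models(experiment_size, n_methods):
    """Estimate how many models an experiment trains in total.

    `n_methods` is the number of methods included in the run
    (a hypothetical input; the package decides this from `methods`).
    """
    if experiment_size not in MODELS_PER_METHOD:
        raise ValueError(f"unknown experiment_size: {experiment_size!r}")
    if n_methods < 0:
        raise ValueError("n_methods must be non-negative")
    return MODELS_PER_METHOD[experiment_size] * n_methods


# Example: a `medium` experiment over 4 methods trains 50 * 4 models.
budget = total_models("medium", n_methods=4)
```

Starting with `test` is a cheap way to confirm that a dataset and configuration run end to end before committing to a larger size.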

+

+

[comment]: <> (Make default experiment this easy to run)

### 🧠 Quickstart on Method Training

@@ -137,51 +153,67 @@ We support a range of methods designed to address bias and discrimination in dif
