If you use this code, please cite our paper:

@inproceedings{kluger2020consac,
  title={CONSAC: Robust Multi-Model Fitting by Conditional Sample Consensus},
  author={Kluger, Florian and Brachmann, Eric and Ackermann, Hanno and Rother, Carsten and Yang, Michael Ying and Rosenhahn, Bodo},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2020}
}

Related repositories:

Get the code:

git clone --recurse-submodules https://github.com/fkluger/consac.git

cd consac

git submodule update --init --recursive

Set up the Python environment using Anaconda:

conda env create -f environment.yml

source activate consac

Build the LSD line segment detector module(s):

cd datasets/nyu_vp/lsd

python setup.py build_ext --inplace

cd ../../yud_plus/lsd

python setup.py build_ext --inplace

For the demo, OpenCV is required as well:

pip install opencv-contrib-python==3.4.0.12

The vanishing point labels and pre-extracted line segments for the NYU dataset are fetched automatically via the nyu_vp submodule. To use the original RGB images as well, you need to obtain the original dataset MAT-file and convert it to a version 7 MAT-file in MATLAB so that it can be loaded via SciPy:

load('nyu_depth_v2_labeled.mat')

save('nyu_depth_v2_labeled.v7.mat','-v7')
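SciPy's `scipy.io.loadmat` reads MAT-files up to version 7, but not the HDF5-based version 7.3 format, which is why the conversion above is needed. As a rough sanity check, the sketch below inspects the text header at the start of the file: v7.3 files begin with `MATLAB 7.3 MAT-file`, while v6/v7 files (which use the Level 5 format) begin with `MATLAB 5.0 MAT-file`. The helper names `mat_header` and `is_scipy_loadable` are hypothetical and not part of this repository.

```python
def mat_header(path):
    """Return the descriptive text at the start of a MAT-file header."""
    with open(path, "rb") as f:
        # The first 116 bytes of the 128-byte MAT-file header hold descriptive text.
        raw = f.read(116)
    return raw.decode("ascii", errors="replace").rstrip("\x00 ")


def is_scipy_loadable(path):
    """Heuristic check: True for Level 5 (v6/v7) MAT-files, False otherwise.

    v7.3 files are HDF5-based and scipy.io.loadmat cannot read them.
    """
    header = mat_header(path)
    if header.startswith("MATLAB 7.3"):
        return False
    return header.startswith("MATLAB 5.0")
```

After the conversion, `is_scipy_loadable("nyu_depth_v2_labeled.v7.mat")` would be expected to return `True`, whereas the original download (saved as v7.3) would return `False`.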

Pre-extracted line segments and VP labels are fetched automatically via the yud_plus submodule. RGB images and camera calibration parameters, however, are not included. Download the original York Urban Dataset from the Elder Laboratory's website and store it under the datasets/yud_plus/data subfolder.

The AdelaideRMF dataset can be obtained from Hoi Sim Wong's website.

For now, we provide a mirror here.

For self-supervised training on homography estimation, we use the same pre-computed SIFT features of structure-from-motion datasets that were also used for NG-RANSAC. You can obtain them via:

cd datasets

wget -O traindata.tar.gz "https://heidata.uni-heidelberg.de/api/access/datafile/:persistentId?persistentId=doi:10.11588/data/PCGYET/QZTF7P"

tar -xzvf traindata.tar.gz

rm traindata.tar.gz

Refer to the NG-RANSAC documentation for details about the dataset.
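If `wget` is not available (for example on Windows), the download, extraction and cleanup steps above can also be done with Python's standard library. This is a sketch, not part of the repository: `download`, `extract_and_remove` and `TRAINDATA_URL` are hypothetical names, and only the URL is taken from the `wget` command above.

```python
import os
import tarfile
import urllib.request

# URL copied from the wget command above.
TRAINDATA_URL = ("https://heidata.uni-heidelberg.de/api/access/datafile/"
                 ":persistentId?persistentId=doi:10.11588/data/PCGYET/QZTF7P")


def download(url, archive_path):
    """Download url to archive_path (equivalent of `wget -O`)."""
    urllib.request.urlretrieve(url, archive_path)


def extract_and_remove(archive_path, dest_dir):
    """Extract a .tar.gz archive into dest_dir, then delete the archive."""
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions support safer extraction filters.
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
    os.remove(archive_path)  # equivalent of `rm traindata.tar.gz`
```

Run from the `datasets` directory, `download(TRAINDATA_URL, "traindata.tar.gz")` followed by `extract_and_remove("traindata.tar.gz", ".")` should mirror the three shell commands.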

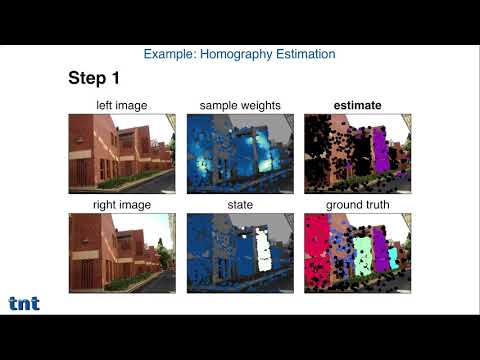

For a homography estimation demo, run:

python demo.py --problem homography --defaults

You should get a result similar to this:

For a vanishing point estimation demo, run:

python demo.py --problem vp --defaults

You should get a result similar to this:

You can also use your own images as input. Check python demo.py --help for a list of user-definable parameters.

In order to repeat the main experiments from our paper using pre-trained neural networks, you can simply run the following commands:

CONSAC on NYU-VP:

python evaluate_vp.py --dataset NYU --dataset_path ./datasets/nyu_vp/data --nyu_mat_path nyu_depth_v2_labeled.v7.mat --runcount 5

CONSAC on YUD+:

python evaluate_vp.py --dataset YUD+ --dataset_path ./datasets/yud_plus/data --runcount 5

CONSAC on YUD:

python evaluate_vp.py --dataset YUD --dataset_path ./datasets/yud_plus/data --runcount 5

For Sequential RANSAC instead of CONSAC, just add the --uniform option.

For CONSAC-S (trained in a self-supervised manner), add --ckpt models/consac-s_vp.net.

For CONSAC-S without IMR, add --ckpt models/consac-s_no-imr_vp.net.

For unconditional CONSAC, add --ckpt models/consac_unconditional_vp.net --unconditional.
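Since the vanishing point variants above differ only in the extra flags passed to `evaluate_vp.py`, a small table-driven helper can assemble every command line. This is a sketch under the assumption that the flags combine exactly as described above; the dictionary names and `vp_eval_command` are hypothetical, while the flags and checkpoint paths are copied from this README. It only builds argument lists, which could then be passed to `subprocess.run`.

```python
import shlex

# Dataset-specific arguments, copied from the commands above.
VP_DATASETS = {
    "NYU": ["--dataset", "NYU", "--dataset_path", "./datasets/nyu_vp/data",
            "--nyu_mat_path", "nyu_depth_v2_labeled.v7.mat"],
    "YUD+": ["--dataset", "YUD+", "--dataset_path", "./datasets/yud_plus/data"],
    "YUD": ["--dataset", "YUD", "--dataset_path", "./datasets/yud_plus/data"],
}

# Extra flags per method variant, copied from the list above.
VP_VARIANTS = {
    "CONSAC": [],
    "Sequential RANSAC": ["--uniform"],
    "CONSAC-S": ["--ckpt", "models/consac-s_vp.net"],
    "CONSAC-S without IMR": ["--ckpt", "models/consac-s_no-imr_vp.net"],
    "unconditional CONSAC": ["--ckpt", "models/consac_unconditional_vp.net",
                             "--unconditional"],
}


def vp_eval_command(dataset, variant, runcount=5):
    """Return the argv list for one evaluate_vp.py run."""
    return (["python", "evaluate_vp.py"] + VP_DATASETS[dataset]
            + ["--runcount", str(runcount)] + VP_VARIANTS[variant])


# Print every dataset/variant combination as a shell command.
for dataset in VP_DATASETS:
    for variant in VP_VARIANTS:
        print(shlex.join(vp_eval_command(dataset, variant)))
```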

CONSAC-S on AdelaideRMF:

python evaluate_homography.py --dataset_path PATH_TO_AdelaideRMF --runcount 5

For Sequential RANSAC instead of CONSAC, just add the --uniform option.

For CONSAC-S without IMR, add --ckpt models/consac-s_no-imr_homography.net.

For unconditional CONSAC-S, add --ckpt models/consac-s_unconditional_homography.net --unconditional.
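The AdelaideRMF variants can be run in sequence in the same way. In this sketch, `HOMOGRAPHY_VARIANTS`, `homography_eval_command` and `run_all` are hypothetical names, and the flags and checkpoint paths are copied from the list above. By default it only returns the commands (a dry run); with `dry_run=False` it executes them one after another via `subprocess.run`, stopping at the first failure.

```python
import subprocess

# Extra flags per variant, copied from the list above. The empty list is the
# plain CONSAC-S command shown for AdelaideRMF.
HOMOGRAPHY_VARIANTS = {
    "CONSAC-S": [],
    "Sequential RANSAC": ["--uniform"],
    "CONSAC-S without IMR": ["--ckpt", "models/consac-s_no-imr_homography.net"],
    "unconditional CONSAC-S": ["--ckpt", "models/consac-s_unconditional_homography.net",
                               "--unconditional"],
}


def homography_eval_command(dataset_path, variant, runcount=5):
    """Return the argv list for one evaluate_homography.py run."""
    return (["python", "evaluate_homography.py", "--dataset_path", dataset_path,
             "--runcount", str(runcount)] + HOMOGRAPHY_VARIANTS[variant])


def run_all(dataset_path, dry_run=True):
    """Return all variant commands; execute them too unless dry_run is True."""
    commands = [homography_eval_command(dataset_path, v) for v in HOMOGRAPHY_VARIANTS]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands
```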

Train CONSAC for vanishing point detection on NYU-VP:

python train_vp.py --calr --gpu GPU_ID

Train CONSAC-S for homography estimation on the SfM data:

python train_homography.py --calr --fair_sampling --gpu GPU_ID

The vanishing point example image shows a landmark in Hannover, Germany:

The Python implementation of the LSD line segment detector was originally taken from here: github.com/xanxys/lsd-python