diff --git a/.github/workflows/sphinx_docs.yml b/.github/workflows/sphinx_docs.yml

index 117c22efd..90a519c53 100644

--- a/.github/workflows/sphinx_docs.yml

+++ b/.github/workflows/sphinx_docs.yml

@@ -1,8 +1,13 @@

name: Deploy Sphinx documentation to Pages

on:

- release:

- types: [published]

+ pull_request:

+ types: [opened, synchronize]

+ paths:

+ - 'docs/**/*'

+ push:

+ branches:

+ - main

jobs:

pages:

@@ -12,19 +17,27 @@ jobs:

OS: ${{ matrix.os }}

PYTHON: '3.9'

steps:

- - uses: actions/checkout@master

+ - name: Checkout repository

+ uses: actions/checkout@master

- name: Setup Python ${{ matrix.python-version }}

uses: actions/setup-python@master

with:

python_version: ${{ matrix.python-version }}

- id: deployment

+ name: Build Documentation

uses: sphinx-notes/pages@v3

with:

documentation_path: ./docs/sphinx_doc/source

python_version: ${{ matrix.python-version }}

publish: false

requirements_path: ./docs/sphinx_doc/requirements.txt

+ - name: Upload Documentation

+ uses: actions/upload-artifact@v3

+ with:

+ name: SphinxDoc

+ path: ${{ steps.deployment.outputs.artifact }}

- uses: peaceiris/actions-gh-pages@v3

+ if: github.event_name == 'push' && github.ref == 'refs/heads/main'

with:

github_token: ${{ secrets.GITHUB_TOKEN }}

publish_dir: ${{ steps.deployment.outputs.artifact }}

\ No newline at end of file

diff --git a/docs/sphinx_doc/source/conf.py b/docs/sphinx_doc/source/conf.py

index 221d8ff67..003a56492 100644

--- a/docs/sphinx_doc/source/conf.py

+++ b/docs/sphinx_doc/source/conf.py

@@ -35,8 +35,13 @@

"sphinx.ext.napoleon",

"sphinxcontrib.mermaid",

"myst_parser",

+ "sphinx.ext.autosectionlabel",

]

+# Prefix document path to section labels, otherwise autogenerated labels would

+# look like 'heading' rather than 'path/to/file:heading'

+autosectionlabel_prefix_document = True

+

# Add any paths that contain templates here, relative to this directory.

templates_path = ["_templates"]

diff --git a/docs/sphinx_doc/source/index.rst b/docs/sphinx_doc/source/index.rst

index 1b404003c..feb525bfd 100644

--- a/docs/sphinx_doc/source/index.rst

+++ b/docs/sphinx_doc/source/index.rst

@@ -8,8 +8,23 @@

AgentScope Documentation

======================================

+

+.. include:: tutorial/main.md

+ :parser: myst_parser.sphinx_

+

+.. toctree::

+ :maxdepth: 1

+ :glob:

+ :hidden:

+ :caption: AgentScope Tutorial

+

+ tutorial/quick_start.rst

+ tutorial/advance.rst

+ tutorial/contribute.rst

+

+

.. toctree::

- :maxdepth: 2

+ :maxdepth: 1

:glob:

:caption: AgentScope API Reference

@@ -24,15 +39,6 @@ AgentScope Documentation

agentscope.web_ui

agentscope

-.. include:: tutorial/main.md

- :parser: myst_parser.sphinx_

-

-.. toctree::

- :maxdepth: 1

- :glob:

- :hidden:

- :caption: AgentScope Tutorial

-

Indices and tables

==================

diff --git a/docs/sphinx_doc/source/tutorial/101-installation.md b/docs/sphinx_doc/source/tutorial/101-installation.md

new file mode 100644

index 000000000..fdde47b4f

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/101-installation.md

@@ -0,0 +1,68 @@

+(101-installation)=

+

+# Installation

+

+To install AgentScope, you need to have Python 3.9 or higher installed. We recommend setting up a new virtual environment specifically for AgentScope:

+

+### Create a Virtual Environment

+

+#### Using Conda

+

+If you're using Conda as your package and environment management tool, you can create a new virtual environment with Python 3.9 using the following commands:

+

+```bash

+# Create a new virtual environment named 'agentscope' with Python 3.9

+conda create -n agentscope python=3.9

+

+# Activate the virtual environment

+conda activate agentscope

+```

+

+#### Using Virtualenv

+

+Alternatively, if you prefer `virtualenv`, you can install it first (if it's not already installed) and then create a new virtual environment as shown:

+

+```bash

+# Install virtualenv if it is not already installed

+pip install virtualenv

+

+# Create a new virtual environment named 'agentscope' with Python 3.9

+virtualenv agentscope --python=python3.9

+

+# Activate the virtual environment

+source agentscope/bin/activate # On Windows use `agentscope\Scripts\activate`

+```

+

+### Installing AgentScope

+

+#### Install with Pip

+

+To install AgentScope from PyPI, use `pip`:

+

+```bash

+# For centralized multi-agent applications

+pip install agentscope

+# For distributed multi-agent applications

+pip install agentscope[distribute] # In zsh (the default macOS shell), use `pip install agentscope\[distribute\]`

+```

+

+#### Install from Source

+

+For users who prefer to install AgentScope directly from the source code, follow these steps to clone the repository and install the platform in editable mode:

+

+**_Note: This project is under active development, so we recommend installing AgentScope from source._**

+

+```bash

+# Pull the source code from GitHub

+git clone https://github.com/alibaba/AgentScope.git

+cd AgentScope

+

+# For centralized multi-agent applications

+pip install -e .

+# For distributed multi-agent applications

+pip install -e .[distribute] # In zsh (the default macOS shell), use `pip install -e .\[distribute\]`

+```

+

+**Note**: The `[distribute]` option installs additional dependencies required for distributed applications. Remember to activate your virtual environment before running these commands.

+

+[[Return to the top]](#installation)

diff --git a/docs/sphinx_doc/source/tutorial/102-concepts.md b/docs/sphinx_doc/source/tutorial/102-concepts.md

new file mode 100644

index 000000000..a83da431e

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/102-concepts.md

@@ -0,0 +1,46 @@

+(102-concepts)=

+

+# Fundamental Concepts

+

+In this tutorial, you'll gain an initial understanding of the **fundamental concepts** of AgentScope. We will focus on how a multi-agent application runs on our platform and familiarize you with the essential terms. Let's get started!

+

+

+

+## Essential Terms and Concepts

+

+* **Agent** refers to an autonomous entity capable of performing actions to achieve specific objectives (possibly powered by LLMs). In AgentScope, an agent takes a message as input and generates a corresponding response message. Agents can interact with each other to simulate human-like behaviors (e.g., discussion or debate) and cooperate to finish complicated tasks (e.g., generate runnable and reliable code).

+* **Message** is a carrier of communication information among agents. It encapsulates information that needs to be conveyed, such as instructions, multi-modal data, or status updates. In AgentScope, a message is a subclass of Python's dict with additional features for inter-agent communication, including fields such as `name` and `content` for identification and payload delivery.

+* **Memory** refers to the structures (e.g., list-like memory, database-based memory) used to store and manage the `Msg` (Message) objects that agents need to remember. This can include chat history, knowledge, or other data that informs the agent's future actions.

+* **Service** is a collection of functionality tools (e.g., web search, code interpreter, file processing) that provide specific capabilities or processes independent of an agent's memory state. Services can be invoked by agents or other components and are designed to be reusable across different scenarios.

+* **Pipeline** refers to the interaction order or pattern of agents in a task. AgentScope provides built-in `pipelines` to streamline the process of collaboration across multiple agents, such as `SequentialPipeline` and `ForLoopPipeline`. When a `Pipeline` is executed, the *message* passes from predecessors to successors with intermediate results for the task.
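The description of **Message** above can be made concrete with a small sketch. This is not AgentScope's actual `Msg` class: `SketchMsg` and its constructor are hypothetical names written for this illustration, and only the `name` and `content` fields come from the text.

```python
class SketchMsg(dict):
    """Hypothetical stand-in for a dict-based message (not AgentScope's Msg)."""

    def __init__(self, name: str, content: str) -> None:
        # Store every field as a regular dict entry.
        super().__init__(name=name, content=content)

    def __getattr__(self, key: str):
        # Allow attribute-style access (msg.content) on top of msg["content"].
        try:
            return self[key]
        except KeyError as exc:
            raise AttributeError(key) from exc


msg = SketchMsg("Alice", "Hi!")
print(msg["name"], msg.content)
```

Because the sketch is still a plain `dict`, it can be serialized or stored like any other dictionary, which is one plausible reason for choosing a dict subclass as the message carrier.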

+

+## Code Structure

+

+```bash

+AgentScope

+├── src

+│ ├── agentscope

+│ | ├── agents # Core components and implementations pertaining to agents.

+│ | ├── configs # Configurations that can be customized for the application's needs.

+│ | ├── memory # Structures for agent memory.

+│ | ├── models # Interfaces for integrating diverse model APIs.

+│ | ├── pipeline # Fundamental components and implementations for running pipelines.

+│ | ├── rpc # RPC module for distributed agent deployment.

+│ | ├── service # Services offering functions independent of memory and state.

+│ | ├── utils # Auxiliary utilities and helper functions.

+│ | ├── message.py # Definitions and implementations of messaging between agents.

+│ | ├── web_ui # WebUI used to show dialogs.

+│ | ├── prompt.py # Prompt engineering module for model input.

+│ | ├── ... ..

+│ | ├── ... ..

+├── scripts # Scripts for launching local Model API

+├── examples # Pre-built examples of different applications.

+├── docs # Documentation tool for API reference.

+├── tests # Unittest modules for continuous integration.

+├── LICENSE # The official licensing agreement for AgentScope usage.

+├── setup.py # Setup script for installing.

+├── ... ..

+└── ... ..

+```

+

+[[Return to the top]](#fundamental-concepts)

diff --git a/docs/sphinx_doc/source/tutorial/103-example.md b/docs/sphinx_doc/source/tutorial/103-example.md

new file mode 100644

index 000000000..e81fa29c2

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/103-example.md

@@ -0,0 +1,102 @@

+(103-example)=

+

+# Getting Started with a Simple Example

+

+AgentScope is a versatile platform for building and running multi-agent applications. We provide various pre-built examples that will help you quickly understand how to create and use multi-agent systems for various applications. In this tutorial, you will learn how to set up a **simple agent-based interaction**.

+

+## Step 1: Prepare Model Configs

+

+The agent is the basic composition and communication unit in AgentScope. To initialize a model-based agent, you need to prepare configs for the available models. AgentScope supports a variety of APIs for pre-trained models. Here is a table outlining the supported APIs and the type argument required for each:

+

+| Model Usage | Type Argument in AgentScope | Supported APIs |

+| -------------------- | ------------------ |-----------------------------------------------------------------------------|

+| Text generation | `openai` | Standard *OpenAI* chat API, FastChat and vllm |

+| Image generation | `openai_dall_e` | *DALL-E* API for generating images |

+| Embedding | `openai_embedding` | API for text embeddings |

+| General usages in POST | `post_api` | *Huggingface* and *ModelScope* Inference API, and other customized post API |

+

+Each API has its specific configuration requirements. For example, to configure an OpenAI API, you would need to fill out the following fields in the model config in a dict, a yaml file or a json file:

+

+```python

+model_config = {

+ "type": "openai", # Choose from "openai", "openai_dall_e", or "openai_embedding"

+ "name": "{your_config_name}", # A unique identifier for your config

+ "model_name": "{model_name}", # The model identifier used in the OpenAI API, such as "gpt-3.5-turbo", "gpt-4", or "text-embedding-ada-002"

+ "api_key": "xxx", # Your OpenAI API key. If unset, the environment variable OPENAI_API_KEY is used.

+ "organization": "xxx", # Your OpenAI organization ID. If unset, the environment variable OPENAI_ORGANIZATION is used.

+}

+```
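Because the same fields can live in a dict or in a JSON file, one way to prepare a config file is to write the dict out with the standard `json` module. The sketch below only demonstrates that round trip; the file name `model_configs.json` and the placeholder values are assumptions, and no AgentScope call is made.

```python
import json
import tempfile
from pathlib import Path

# Placeholder values; replace them with your own settings.
model_config = {
    "type": "openai",
    "name": "my_openai_config",
    "model_name": "gpt-3.5-turbo",
}

# Write a list of configs to a JSON file (hypothetical file name).
config_path = Path(tempfile.mkdtemp()) / "model_configs.json"
config_path.write_text(json.dumps([model_config], indent=4), encoding="utf-8")

# Reading the file back yields the same list of dicts.
loaded = json.loads(config_path.read_text(encoding="utf-8"))
print(loaded[0]["name"])
```

A path to a file like this is what the `agentscope.init(model_configs=...)` call in the next step receives.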

+

+For open-source models, we support integration with various model interfaces such as HuggingFace, ModelScope, FastChat, and vllm. You can find scripts on deploying these services in the `scripts` directory, and we defer the detailed instructions to [[Using Different Model Sources with Model API]](203-model).

+

+You can register your configuration by calling AgentScope's initialization method as follows. You can also load more than one config by calling `init` multiple times.

+

+```python

+import agentscope

+

+# init once by passing a list of config dict

+openai_cfg_dict = {...dict_filling...}

+modelscope_cfg_dict = {...dict_filling...}

+agentscope.init(model_configs=[openai_cfg_dict, modelscope_cfg_dict])

+```

+

+## Step 2: Create Agents

+

+Creating agents is straightforward in AgentScope. After initializing AgentScope with your model configurations (Step 1 above), you can then define each agent with its corresponding role and specific model.

+

+```python

+import agentscope

+from agentscope.agents import DialogAgent, UserAgent

+

+# read model configs

+agentscope.init(model_configs="./openai_model_configs.json")

+

+# Create a dialog agent and a user agent

+dialogAgent = DialogAgent(name="assistant", model="gpt-4")

+userAgent = UserAgent()

+```

+

+**NOTE**: Please refer to [[Customizing Your Custom Agent with Agent Pool]](201-agent) for all available agents.

+

+## Step 3: Agent Conversation

+

+Messages are the primary means of communication between agents in AgentScope. They are Python dictionaries comprising essential fields such as the actual `content` of the message and the sender's `name`. Optionally, a message can include a `url` pointing to either a local file (image, video, or audio) or a website.

+

+```python

+from agentscope.message import Msg

+

+# Example of a simple text message from Alice

+message_from_alice = Msg("Alice", "Hi!")

+

+# Example of a message from Bob with an attached image

+message_from_bob = Msg("Bob", "What about this picture I took?", url="/path/to/picture.jpg")

+```

+

+To start a conversation between two agents, such as the `dialogAgent` and `userAgent` created in the previous step, you can use the following loop. The conversation continues until the user inputs `"exit"`, which terminates the interaction.

+

+```python

+x = None
+while True:
+    x = dialogAgent(x)
+    x = userAgent(x)
+
+    # Terminate the conversation if the user types "exit"
+    if x.content == "exit":
+        print("Exiting the conversation.")
+        break

+```

+

+For a more advanced approach, AgentScope offers the option of using pipelines to manage the flow of messages between agents. The `sequentialpipeline` stands for sequential speech, where each agent receives the message from the previous agent and generates its response accordingly.

+

+```python

+from agentscope.pipelines.functional import sequentialpipeline

+

+# Execute the conversation loop within a pipeline structure

+x = None

+while x is None or x.content != "exit":

+    x = sequentialpipeline([dialogAgent, userAgent], x)

+```

+

+For more details about how to utilize pipelines for complex agent interactions, please refer to [[Agent Interactions: Dive deeper into Pipelines and Message Hub]](202-pipeline).

+

+[[Return to the top]](#getting-started-with-a-simple-example)

diff --git a/docs/sphinx_doc/source/tutorial/104-usecase.md b/docs/sphinx_doc/source/tutorial/104-usecase.md

new file mode 100644

index 000000000..a034d8bcc

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/104-usecase.md

@@ -0,0 +1,329 @@

+(104-usecase)=

+

+# Crafting Your First Application

+

+ +

+Before diving into the advanced topics of AgentScope, this tutorial gives you a first look at building a Werewolf application with AgentScope's out-of-the-box components.

+

+**Werewolf** is a well-known social-deduction game set in an imaginary village where a few villagers are secretly werewolves; the objective is to identify them before they eliminate all other players. It's a good use case to demonstrate the interaction between multiple autonomous agents, each with its own objectives and the need for communication.

+

+Let the adventure begin to unlock the potential of multi-agent applications with AgentScope!

+

+## Getting Started

+

+First, ensure that you have installed and configured AgentScope properly. This tutorial also relies on the basic concepts of `Model API`, `Agent`, `Msg`, and `Pipeline`, as described in [Tutorial-Concept](102-concepts). The overview of this tutorial is shown below:

+

+- [Crafting Your First Application](#crafting-your-first-application)

+ - [Getting Started](#getting-started)

+ - [Step 1: Prepare Model API and Set Model Configs](#step-1-prepare-model-api-and-set-model-configs)

+ - [Step 2: Define the Roles of Each Agent](#step-2-define-the-roles-of-each-agent)

+ - [Step 3: Initialize AgentScope and the Agents](#step-3-initialize-agentscope-and-the-agents)

+ - [Step 4: Set Up the Game Logic](#step-4-set-up-the-game-logic)

+ - [Leverage Pipeline and MsgHub](#leverage-pipeline-and-msghub)

+ - [Implement Werewolf Pipeline](#implement-werewolf-pipeline)

+ - [Step 5: Run the Application](#step-5-run-the-application)

+ - [Next step](#next-step)

+ - [Other Example Applications](#other-example-applications)

+

+**Note**: all the configurations and code for this tutorial can be found in `examples/werewolf`.

+

+### Step 1: Prepare Model API and Set Model Configs

+

+As we discussed in the last tutorial, you need to prepare your model configurations into a JSON file for standard OpenAI chat API, FastChat, and vllm. More details and advanced usages such as configuring local models with POST API are presented in [Tutorial-Model-API](203-model).

+

+```json

+[
+    {
+        "type": "openai",
+        "name": "gpt-4",
+        "parameters": {
+            "api_key": "xxx",
+            "organization_id": "xxx",
+            "temperature": 0.0
+        }
+    }
+]

+```

+

+### Step 2: Define the Roles of Each Agent

+

+In the Werewolf game, agents assume a variety of roles, each endowed with distinctive abilities and objectives. Below, we will outline the agent classes corresponding to each role:

+

+- Villager: Ordinary townsfolk trying to survive.

+- Werewolf: Predators in disguise, aiming to outlast the villagers.

+- Seer: A villager with the power to see the true nature of one player each night.

+- Witch: A villager who can save or poison a player each night.

+

+To implement your own agent, you need to inherit `AgentBase` and implement the `reply` function, which is executed when an agent instance is called via `agent1(x)`.

+

+```python

+from agentscope.agents import AgentBase

+

+class MyAgent(AgentBase):
+    def reply(self, x):
+        # Do something here
+        ...
+        return x

+```
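To see why calling `agent1(x)` ends up in `reply`, here is a minimal sketch of the calling convention. `SketchAgentBase` and `EchoAgent` are hypothetical stand-ins written for this illustration; the real `AgentBase` may do more than forward the call.

```python
class SketchAgentBase:
    """Hypothetical stand-in for AgentBase, reduced to the call-to-reply hook."""

    def __init__(self, name: str) -> None:
        self.name = name

    def __call__(self, x=None):
        # Calling the instance delegates to reply().
        return self.reply(x)

    def reply(self, x=None):
        raise NotImplementedError


class EchoAgent(SketchAgentBase):
    def reply(self, x=None):
        # Return a message dict that repeats the incoming content.
        content = "" if x is None else x["content"]
        return {"name": self.name, "content": content}


agent1 = EchoAgent("Player1")
response = agent1({"name": "Host", "content": "Hello"})
print(response)
```

Subclasses only override `reply`, so every agent can be invoked the same way regardless of what it does internally.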

+

+AgentScope provides several out-of-the-box agent implementations and organizes them as an *Agent Pool*. In this application, we use a built-in agent, `DictDialogAgent`. Here is an example configuration of `DictDialogAgent` for a player assigned the role of a werewolf:

+

+```json

+{

+ "class": "DictDialogAgent",

+ "args": {

+ "name": "Player1",

+ "sys_prompt": "Act as a player in a werewolf game. You are Player1 and\nthere are totally 6 players, named Player1, Player2, Player3, Player4, Player5 and Player6.\n\nPLAYER ROLES:\nIn werewolf game, players are divided into two werewolves, two villagers, one seer, and one witch. Note only werewolves know who are their teammates.\nWerewolves: They know their teammates' identities and attempt to eliminate a villager each night while trying to remain undetected.\nVillagers: They do not know who the werewolves are and must work together during the day to deduce who the werewolves might be and vote to eliminate them.\nSeer: A villager with the ability to learn the true identity of one player each night. This role is crucial for the villagers to gain information.\nWitch: A character who has a one-time ability to save a player from being eliminated at night (sometimes this is a potion of life) and a one-time ability to eliminate a player at night (a potion of death).\n\nGAME RULE:\nThe game consists of two phases: night phase and day phase. The two phases are repeated until werewolf or villager wins the game.\n1. Night Phase: During the night, the werewolves discuss and vote for a player to eliminate. Special roles also perform their actions at this time (e.g., the Seer chooses a player to learn their role, the witch chooses a decide if save the player).\n2. Day Phase: During the day, all surviving players discuss who they suspect might be a werewolf. No one reveals their role unless it serves a strategic purpose. After the discussion, a vote is taken, and the player with the most votes is \"lynched\" or eliminated from the game.\n\nVICTORY CONDITION:\nFor werewolves, they win the game if the number of werewolves is equal to or greater than the number of remaining villagers.\nFor villagers, they win if they identify and eliminate all of the werewolves in the group.\n\nCONSTRAINTS:\n1. Your response should be in the first person.\n2. This is a conversational game. You should respond only based on the conversation history and your strategy.\n\nYou are playing werewolf in this game.\n",

+ "model": "gpt-3.5-turbo",

+ "use_memory": true

+ }

+}

+```

+

+In this configuration, `Player1` is designated as a `DictDialogAgent`. The parameters include a system prompt (`sys_prompt`) that can guide the agent's behavior, the model (`model`) that determines the type of language model of the agent, and a flag (`use_memory`) indicating whether the agent should remember past interactions.

+

+For other players, configurations can be customized based on their roles. Each role may have different prompts, models, or memory settings. You can refer to the JSON file located at `examples/werewolf/configs/agent_configs.json` within the AgentScope examples directory.

+

+### Step 3: Initialize AgentScope and the Agents

+

+Now that we have defined the roles in the application, we can initialize the AgentScope environment and all agents. AgentScope reduces this process to a few lines, based on the configuration files we've prepared (assuming there are **2** werewolves, **2** villagers, **1** witch, and **1** seer):

+

+```python

+import agentscope

+

+# read model and agent configs, and initialize agents automatically

+survivors = agentscope.init(
+    model_configs="./configs/model_configs.json",
+    agent_configs="./configs/agent_configs.json",
+    logger_level="DEBUG",
+)

+

+# Define the roles within the game. This list should match the order and number

+# of agents specified in the 'agent_configs.json' file.

+roles = ["werewolf", "werewolf", "villager", "villager", "seer", "witch"]

+

+# Based on their roles, assign the initialized agents to variables.

+# This helps us reference them easily in the game logic.

+wolves, villagers, witch, seer = survivors[:2], survivors[2:-2], survivors[-1], survivors[-2]

+```
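The slicing in the last line depends on the order of `roles`. A quick check with plain strings in place of agent objects (the player names here are placeholders) shows which entries each variable receives:

```python
# Strings stand in for the agent objects returned by agentscope.init.
survivors = ["Player1", "Player2", "Player3", "Player4", "Player5", "Player6"]
roles = ["werewolf", "werewolf", "villager", "villager", "seer", "witch"]

wolves, villagers, witch, seer = survivors[:2], survivors[2:-2], survivors[-1], survivors[-2]

print(wolves)       # ['Player1', 'Player2']
print(villagers)    # ['Player3', 'Player4']
print(seer, witch)  # Player5 Player6
```

If the order of agents in `agent_configs.json` changes, the slices have to change with it, since nothing in this snippet ties an agent to its role other than its position.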

+

+Through this snippet of code, we've allocated roles to our agents and associated them with the configurations that dictate their behavior in the application.

+

+### Step 4: Set Up the Game Logic

+

+In this step, you will set up the game logic and orchestrate the flow of the Werewolf game using AgentScope's helper utilities.

+

+#### Leverage Pipeline and MsgHub

+

+To simplify the construction of agent communication, AgentScope provides two helpful concepts: **Pipeline** and **MsgHub**.

+

+- **Pipeline**: It allows users to program communication among agents easily.

+

+ ```python

+ from agentscope.pipelines import SequentialPipeline

+

+ pipe = SequentialPipeline(agent1, agent2, agent3)

+ x = pipe(x) # the message x will be passed and replied by agent 1,2,3 in order

+ ```

+

+- **MsgHub**: You may have noticed that all the above examples are one-to-one communication. To achieve a group chat, we provide another communication helper utility, `msghub`. With it, the messages from participants are broadcast to all other participants automatically. In such cases, participating agents don't even need input and output messages. All we need to do is decide the order of speaking. In addition, `msghub` supports dynamic control of participants.

+

+ ```python

+ from agentscope.message import Msg
+ from agentscope.msghub import msghub
+
+ with msghub(participants=[agent1, agent2, agent3]) as hub:
+     agent1()
+     agent2()
+
+     # Broadcast a message to all participants
+     hub.broadcast(Msg("Host", "Welcome to join the group chat!"))
+
+     # Add or delete participants dynamically
+     hub.delete(agent1)
+     hub.add(agent4)

+ ```
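The broadcast and participant-management behavior described for **MsgHub** can be sketched in plain Python. `ToyAgent`, `ToyHub`, and `toy_msghub` are hypothetical names invented for this illustration; they mimic only the `broadcast`, `add`, and `delete` calls shown above and do not reproduce the automatic forwarding of agent replies that the real `msghub` provides.

```python
from contextlib import contextmanager


class ToyAgent:
    """Hypothetical agent that records every message it observes."""

    def __init__(self, name):
        self.name = name
        self.observed = []

    def observe(self, msg):
        self.observed.append(msg)


class ToyHub:
    """Hypothetical hub that forwards messages to its participants."""

    def __init__(self, participants):
        self.participants = list(participants)

    def broadcast(self, msg, sender=None):
        # Deliver the message to every participant except the sender.
        for agent in self.participants:
            if agent is not sender:
                agent.observe(msg)

    def add(self, agent):
        self.participants.append(agent)

    def delete(self, agent):
        self.participants.remove(agent)


@contextmanager
def toy_msghub(participants):
    yield ToyHub(participants)


a, b, c = ToyAgent("a"), ToyAgent("b"), ToyAgent("c")
with toy_msghub([a, b]) as hub:
    hub.broadcast({"name": "Host", "content": "Welcome!"})
    hub.delete(a)
    hub.add(c)
    hub.broadcast({"name": "b", "content": "Hi"}, sender=b)
```

After the block runs, `a` and `b` have each observed only the welcome message, while `c`, which joined later, has observed only the message from `b`.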

+

+#### Implement Werewolf Pipeline

+

+The game logic is divided into two major phases: (1) night when werewolves act, and (2) daytime when all players discuss and vote. Each phase will be handled by a section of code using pipelines to manage multi-agent communications.

+

+- **1.1 Night Phase: Werewolves Discuss and Vote**

+

+During the night phase, werewolves must discuss among themselves to decide on a target. The `msghub` function creates a message hub for the werewolves to communicate in, where every message sent by an agent is observable by all other agents within the `msghub`.

+

+```python

+# start the game

+for i in range(1, MAX_GAME_ROUND + 1):
+    # Night phase: werewolves discuss
+    hint = HostMsg(content=Prompts.to_wolves.format(n2s(wolves)))
+    with msghub(wolves, announcement=hint) as hub:
+        for _ in range(MAX_WEREWOLF_DISCUSSION_ROUND):
+            x = sequentialpipeline(wolves)
+            if x.agreement:
+                break

+```

+

+After the discussion, werewolves proceed to vote for their target, and the majority's choice is determined. The result of the vote is then broadcast to all werewolves.

+

+**Note**: the detailed prompts and utility functions can be found in `examples/werewolf`.

+

+```python

+        # werewolves vote
+        hint = HostMsg(content=Prompts.to_wolves_vote)
+        votes = [extract_name_and_id(wolf(hint).content)[0] for wolf in wolves]
+        # broadcast the result to werewolves
+        dead_player = [majority_vote(votes)]
+        hub.broadcast(
+            HostMsg(content=Prompts.to_wolves_res.format(dead_player[0])),
+        )

+```
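The tutorial's own `majority_vote` helper lives in `examples/werewolf` and is not shown here. As a rough idea of what such a helper might do, the sketch below uses `collections.Counter`; the name `majority_vote_sketch` and its tie-breaking rule are assumptions, not the example's actual implementation.

```python
from collections import Counter


def majority_vote_sketch(votes):
    """Return the most common vote; ties go to the name that was seen first."""
    return Counter(votes).most_common(1)[0][0]


print(majority_vote_sketch(["Player4", "Player4", "Player2"]))
```

Wrapping the result in a list, as `dead_player = [majority_vote(votes)]` does above, lets later steps remove the victim with `dead_player.pop()` if the witch saves them.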

+

+- **1.2 Witch's Turn**

+

+If the witch is still alive, she gets the opportunity to use her powers to either save the player chosen by the werewolves or use her poison.

+

+```python

+    # Witch's turn
+    healing_used_tonight = False
+    if witch in survivors:
+        if healing:
+            # Ask the witch whether to use the healing potion
+            hint = HostMsg(
+                content=Prompts.to_witch_resurrect.format_map(
+                    {"witch_name": witch.name, "dead_name": dead_player[0]},
+                ),
+            )
+            # If the witch resurrects the victim, the healing potion is used up
+            if witch(hint).resurrect:
+                healing_used_tonight = True
+                dead_player.pop()
+                healing = False

+```

+

+- **1.3 Seer's Turn**

+

+The seer has a chance to reveal the true identity of a player. This information can be crucial for the villagers. The `observe()` function allows each agent to take note of a message without immediately replying to it.

+

+```python

+    # Seer's turn
+    if seer in survivors:
+        # Seer chooses a player to reveal their identity
+        hint = HostMsg(
+            content=Prompts.to_seer.format(seer.name, n2s(survivors)),
+        )
+        x = seer(hint)
+
+        player, idx = extract_name_and_id(x.content)
+        role = "werewolf" if roles[idx] == "werewolf" else "villager"
+        hint = HostMsg(content=Prompts.to_seer_result.format(player, role))
+        seer.observe(hint)

+```

+

+- **1.4 Update Alive Players**

+

+Based on the actions taken during the night, the list of surviving players needs to be updated.

+

+```python

+    # Update the list of survivors and werewolves after the night's events
+    survivors, wolves = update_alive_players(survivors, wolves, dead_player)

+```

+

+- **2.1 Daytime Phase: Discussion and Voting**

+

+During the day, all players will discuss and then vote to eliminate a suspected werewolf.

+

+```python

+    # Daytime discussion
+    with msghub(survivors, announcement=hints) as hub:
+        # Discuss
+        x = sequentialpipeline(survivors)
+        # Vote
+        hint = HostMsg(content=Prompts.to_all_vote.format(n2s(survivors)))
+        votes = [extract_name_and_id(_(hint).content)[0] for _ in survivors]
+        vote_res = majority_vote(votes)
+        # Broadcast the voting result to all players
+        result = HostMsg(content=Prompts.to_all_res.format(vote_res))
+        hub.broadcast(result)
+        # Update the list of survivors and werewolves after the vote
+        survivors, wolves = update_alive_players(survivors, wolves, vote_res)

+```

+

+- **2.2 Check for Winning Conditions**

+

+After each phase, the game checks if the werewolves or villagers have won.

+

+```python

+        # Check if either side has won
+        if check_winning(survivors, wolves, "Moderator"):
+            break

+```

+

+- **2.3 Continue to the Next Round**

+

+If neither werewolves nor villagers win, the game continues to the next round.

+

+```python

+        # If the game hasn't ended, prepare for the next round
+        hub.broadcast(HostMsg(content=Prompts.to_all_continue))

+```

+

+These code blocks outline the core game loop for Werewolf using AgentScope's `msghub` and `pipeline`, which help to easily manage the operational logic of an application.

+

+### Step 5: Run the Application

+

+With the game logic and agents set up, you're ready to run the Werewolf game. By executing the `pipeline`, the game will proceed through the predefined phases, with agents interacting based on their roles and the strategies coded above:

+

+```bash

+cd examples/werewolf

+python main.py # Assuming the pipeline is implemented in main.py

+```

+

+As the game starts, you'll see output in the terminal, similar to the logs below, which illustrate how the game unfolds:

+

+```bash

+Moderator: Player1 and Player2, you are werewolves. If you are alone, eliminate a player, else discuss with your teammates and reach an agreement. Response in the following format which can be loaded by json.loads(){

+ "thought": "thought",

+ "speak": "thoughts summary to say to others",

+ "agreement": "whether the discussion reached an agreement or not(true/false)"

+}

+Player1: Player2, let's discuss who we should eliminate tonight.

+Player2: I think we should eliminate Player4 tonight. They seem suspicious to me.

+Player1: I think we should eliminate Player4 tonight. They seem suspicious to me.

+Player2: I agree with Player2, let's eliminate Player4 tonight. They seem suspicious.

+Moderator: Which player do you vote to kill? Response in the following format which can be loaded by python json.loads()

+{{

+ "thought": "thought" ,

+ "speak": "player_name"

+}}

+Player1: Player4

+Player2: Player4

+Moderator: The player with the most votes is Player4.

+Moderator: Player6, you're witch. Tonight Player4 is eliminated. Would you like to resurrect Player4? Response in the following format which can be loaded by python json.loads()

+{

+ "thought": "thought",

+ "speak": "thoughts summary to say",

+ "resurrect": "true/false"

+}

+Player6: I have considered the options, and I choose to resurrect Player4.

+Moderator: Player5, you're seer. Which player in Player1, Player2, Player3, Player4, Player5 and Player6 would you like to check tonight? Response in the following json format which can be loaded by python json.loads()

+{

+ "thought": "thought" ,

+ "speak": "player_name"

+}

+Player5: Player3

+Moderator: Okay, the role of Player3 is villager.

+Moderator: The day is coming, all the players open your eyes. Last night is peaceful, no player is eliminated.

+...

+```

+

+## Next step

+

+Now you've grasped how to conveniently set up a multi-agent application with AgentScope. Feel free to tailor the game to include additional roles and introduce more sophisticated strategies. For more advanced tutorials that delve deeper into more capabilities of AgentScope, such as *memory management* and *service functions* utilized by agents, please refer to the tutorials in the **Advanced Exploration** section and look up the API references.

+

+## Other Example Applications

+

+- Example of Simple Group Conversation: [examples/Simple Conversation](https://github.com/alibaba/AgentScope/tree/main/examples/simple_chat/README.md)

+- Example of Werewolves: [examples/Werewolves](https://github.com/alibaba/AgentScope/tree/main/examples/werewolves/README.md)

+- Example of Distributed Agents: [examples/Distributed Agents](https://github.com/alibaba/AgentScope/tree/main/examples/distributed_agents/README.md)

+- ...

+

+[[Return to the top]](#crafting-your-first-application)

diff --git a/docs/sphinx_doc/source/tutorial/105-logging.md b/docs/sphinx_doc/source/tutorial/105-logging.md

new file mode 100644

index 000000000..42e05b5ff

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/105-logging.md

@@ -0,0 +1,108 @@

+(105-logging)=

+

+# Logging and WebUI

+

+Welcome to the tutorial on logging in multi-agent applications with AgentScope. We'll also touch on how you can visualize these logs using a simple web interface. This guide will help you track the agent's interactions and system information in a clearer and more organized way.

+

+## Logging

+

+The logging utilities consist of a custom setup for the `loguru.logger`, which is an enhancement over Python's built-in `logging` module. We provide custom features:

+

+- **Colored Output**: Assigns different colors to different speakers in a chat to enhance readability.

+- **Redirecting Standard Error (stderr)**: Captures error messages and logs them with the `ERROR` level.

+- **Custom Log Levels**: Adds a custom level called `CHAT` that is specifically designed for logging dialogue interactions.

+- **Special Formatting**: Format logs with timestamps, levels, function names, and line numbers. Chat messages are formatted differently to stand out.

+

+### Setting Up the Logger

+

+We recommend setting up the logger via `agentscope.init`, and you can set the log level:

+

+```python

+from typing import Literal

+

+import agentscope

+

+LOG_LEVEL = Literal[

+ "CHAT",

+ "TRACE",

+ "DEBUG",

+ "INFO",

+ "SUCCESS",

+ "WARNING",

+ "ERROR",

+ "CRITICAL",

+]

+

+agentscope.init(..., logger_level="INFO")

+```

+

+### Logging a Chat Message

+

+Logging chat messages helps keep a record of the conversation between agents. Here's how you can do it:

+

+```python

+# Log a simple string message.

+logger.chat("Hello World!")

+

+# Log a `msg` representing dialogue with a speaker and content.

+logger.chat({"name": "User", "content": "Hello, how are you?"})

+logger.chat({"name": "Agent", "content": "I'm fine, thank you!"})

+```

+
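To make the idea of a custom `CHAT` level more concrete, here is a minimal sketch that uses only Python's standard `logging` module. It is not AgentScope's loguru-based setup. The numeric level `25`, the helper `chat`, and the logger name `chat_demo` are assumptions chosen for illustration.

```python
import io
import logging

# Hypothetical numeric value, placed between INFO (20) and WARNING (30).
CHAT = 25
logging.addLevelName(CHAT, "CHAT")


def chat(logger: logging.Logger, msg) -> None:
    """Log a plain string, or a dict with 'name' and 'content', at CHAT level."""
    if isinstance(msg, dict):
        msg = f"{msg['name']}: {msg['content']}"
    logger.log(CHAT, msg)


# Write into an in-memory stream so the result is easy to inspect.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s | %(message)s"))

demo_logger = logging.getLogger("chat_demo")
demo_logger.setLevel(CHAT)
demo_logger.addHandler(handler)
demo_logger.propagate = False

chat(demo_logger, "Hello World!")
chat(demo_logger, {"name": "User", "content": "Hello, how are you?"})
demo_logger.info("filtered out")  # INFO is below CHAT, so it is dropped

print(stream.getvalue())
```

Because the logger threshold is set to the custom level, only the two chat records reach the stream. A dedicated level like this is what lets dialogue be filtered and formatted separately from system logs.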

+### Logging System Information

+

+System logs are crucial for tracking the application's state and identifying issues. Here's how to log different levels of system information:

+

+```python

+# Log general information useful for understanding the flow of the application.

+logger.info("The dialogue agent has started successfully.")

+

+# Log a warning message indicating a potential issue that isn't immediately problematic.

+logger.warning("The agent is running slower than expected.")

+

+# Log an error message when something has gone wrong.

+logger.error("The agent encountered an unexpected error while processing a request.")

+```

+

+## Integrating logging with WebUI

+

+To visualize these logs, we provide a customized gradio component in `src/agentscope/web_ui`.

+

+### Quick Running

+

+For convenience, we provide a pre-built app as a wheel file. You can run the WebUI with the following commands:

+

+```shell

+pip install gradio_groupchat-0.0.1-py3-none-any.whl

+python app.py

+```

+

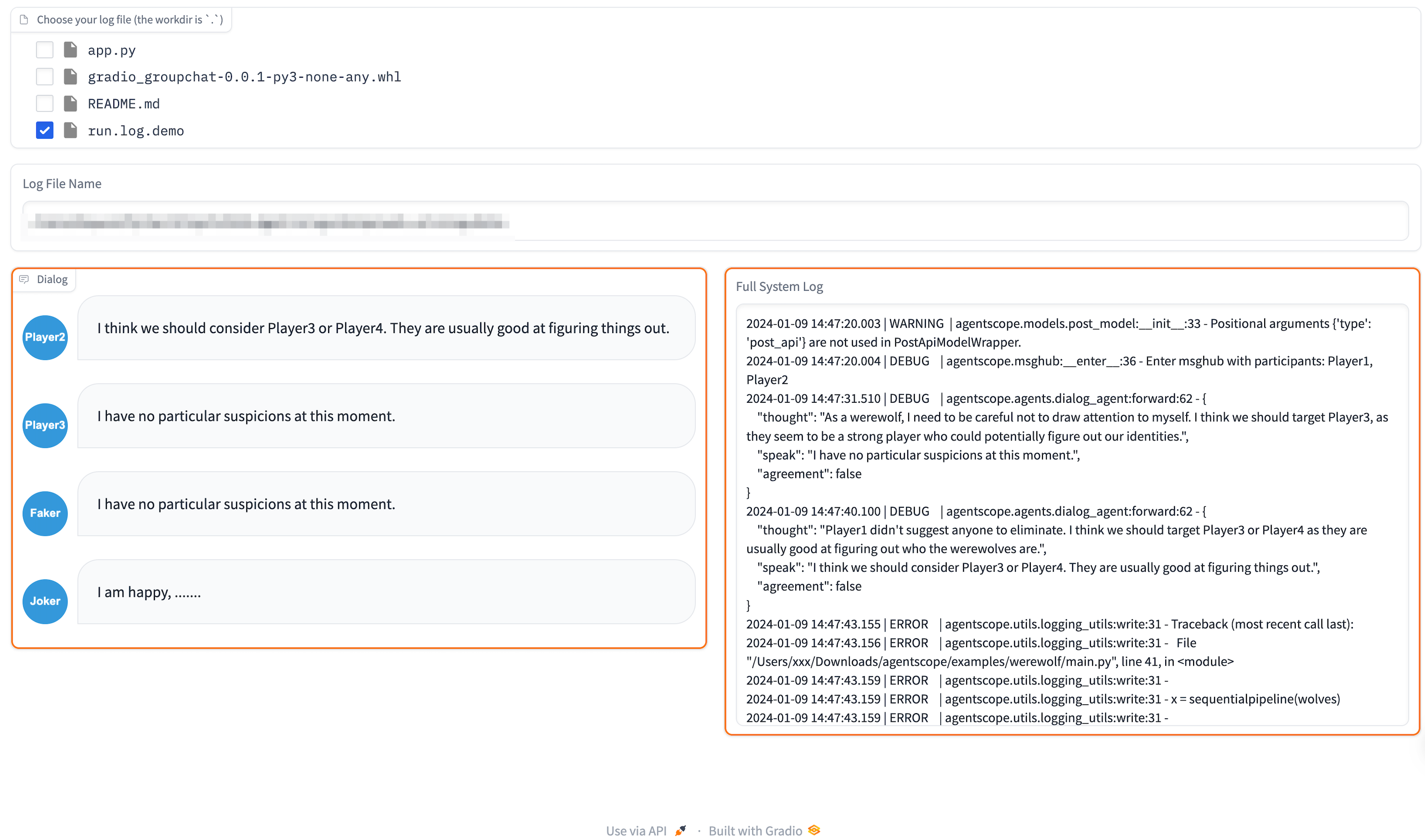

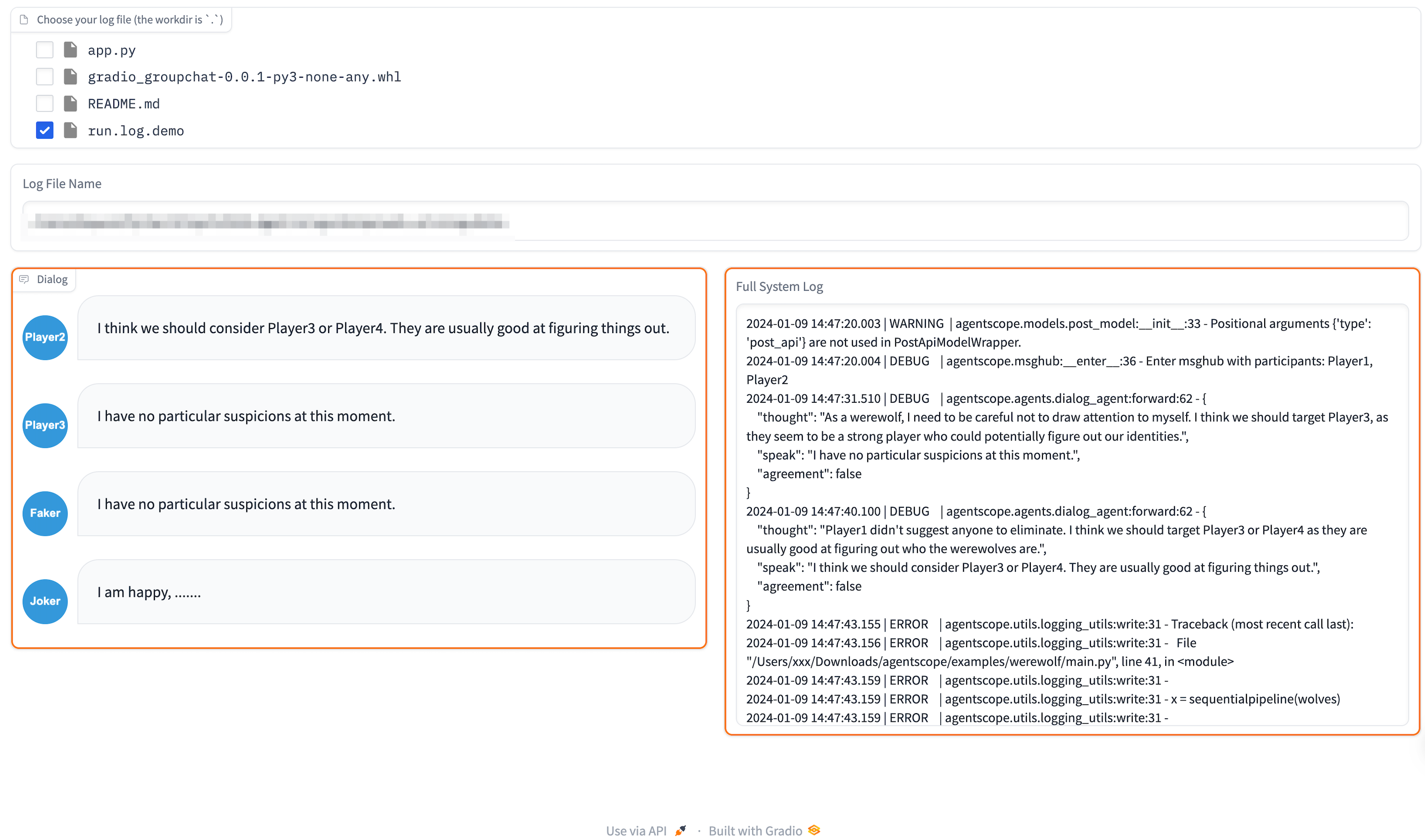

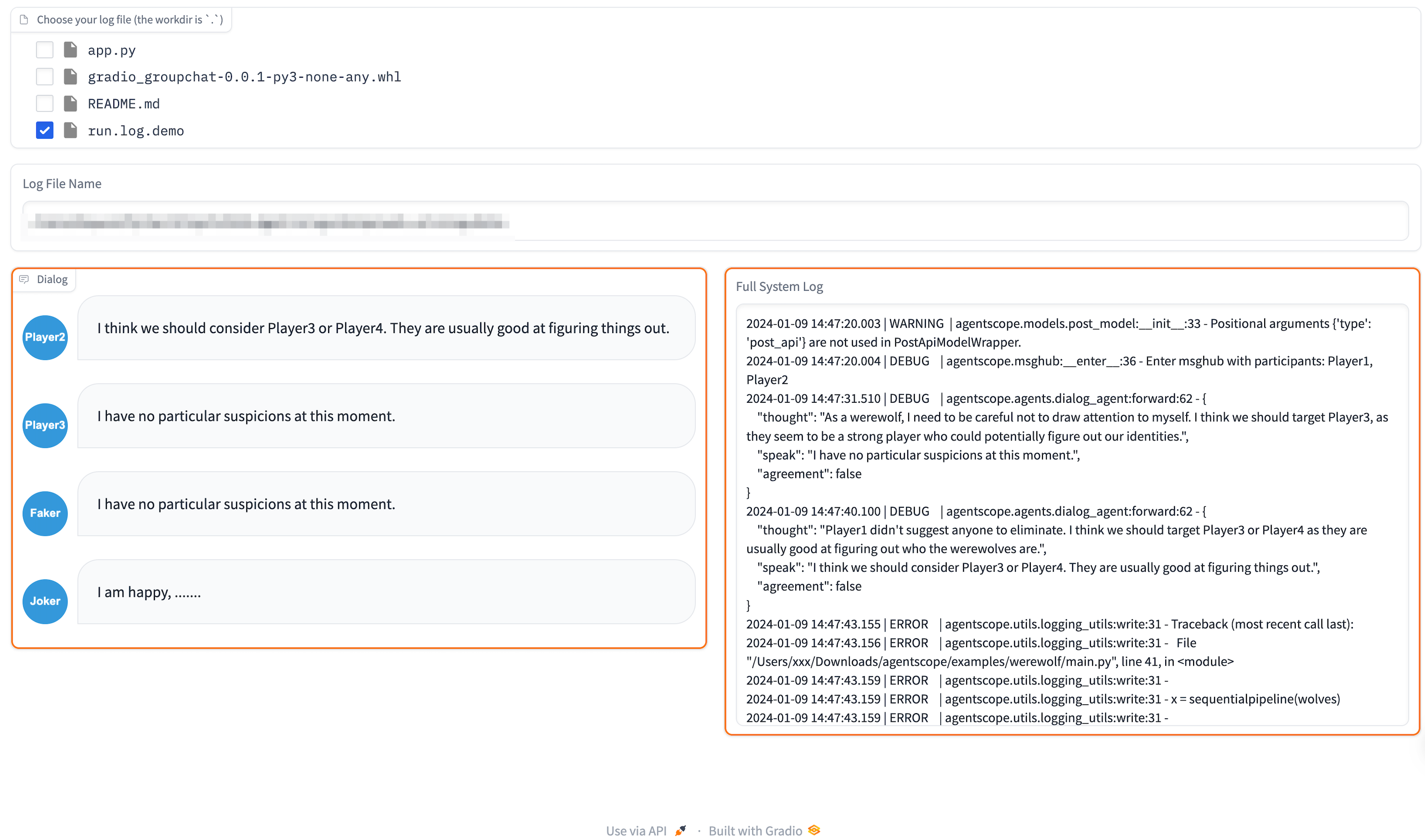

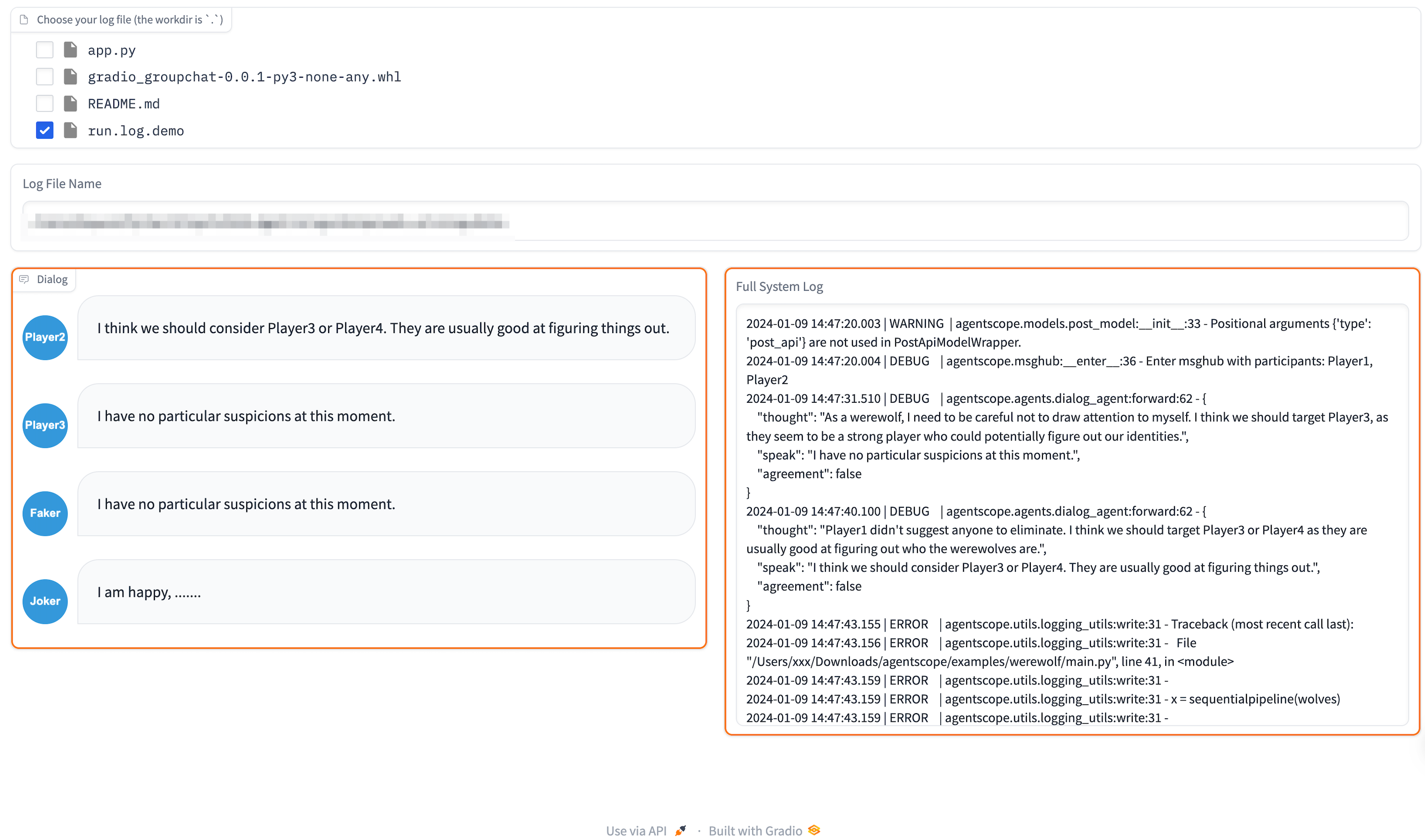

+After the app starts, open the URL printed by `app.py` (e.g., `http://127.0.0.1:7860/`) in your browser and choose `run.log.demo` in the top-middle `FileSelector` window (a demo log file that we provide). The dialog and system logs should then be displayed in the bottom windows.

+

+

+

+### For Other Customization

+

+To customize the backend, or the frontend of the provided WebUI, you can

+

+```shell

+# generate the template codes

+# for network connectivity problem, try to run

+# `npm config rm proxy && npm config rm https-proxy` first

+gradio cc create GroupChat --template Chatbot

+# replace the generated app.py with our built-in app.py

+cp -f app.py groupchat/demo

+# debug and develop your web_ui

+cd groupchat

+# edit the app.py, or other parts you want, reference link:

+# https://www.gradio.app/guides/custom-components-in-five-minutes

+gradio cc dev

+```

+

+If you want to release the modification, you can do

+

+```shell

+gradio cc build

+pip install

+python app.py

+```

+

+[[Return to the top]](#logging-and-webui)

diff --git a/docs/sphinx_doc/source/tutorial/201-agent.md b/docs/sphinx_doc/source/tutorial/201-agent.md

new file mode 100644

index 000000000..e498f18d1

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/201-agent.md

@@ -0,0 +1,175 @@

+(201-agent)=

+

+# Customizing Your Own Agent

+

+This tutorial helps you understand the `Agent` in more depth and walks you through crafting your own custom agent with AgentScope. We start by introducing the fundamental abstraction called `AgentBase`, which serves as the base class that maintains the general behaviors of all agents. Then, we go through the *AgentPool*, an ensemble of pre-built, specialized agents, each designed with a specific purpose in mind. Finally, we demonstrate how to customize your own agent so that it fits the needs of your project.

+

+## Understanding `AgentBase`

+

+The `AgentBase` class is the architectural cornerstone for all agent constructs within AgentScope. As the superclass of all custom agents, it provides a comprehensive template consisting of essential attributes and methods that underpin the core functionalities of any conversational agent.

+

+Each AgentBase derivative is composed of several key characteristics:

+

+* `memory`: This attribute enables agents to retain and recall past interactions, allowing them to maintain context in ongoing conversations. For more details about `memory`, we defer to [Memory and Message Management](205-memory).

+

+* `model`: The model is the computational engine of the agent, responsible for making a response given existing memory and input. For more details about `model`, we defer to [Using Different Model Sources with Model API](203-model).

+

+* `sys_prompt` & `engine`: The system prompt acts as predefined instructions that guide the agent in its interactions; and the `engine` is used to dynamically generate a suitable prompt. For more details about them, we defer to [Prompt Engine](206-prompt).

+

+In addition to these attributes, `AgentBase` endows agents with pivotal methods such as `observe` and `reply`:

+

+* `observe()`: Through this method, an agent can take note of *message* without immediately replying, allowing it to update its memory based on the observed *message*.

+* `reply()`: This is the primary method that developers must implement. It defines the agent's behavior in response to an incoming *message*, encapsulating the logic that results in the agent's output.

+

+In addition, to unify interfaces and type hints, we introduce another base class, `Operator`, which represents performing some operation on input data via its `__call__` method. `AgentBase` is a subclass of `Operator`.

+

+```python

+class AgentBase(Operator):

+    # ... [code omitted for brevity]

+

+    def __init__(

+        self,

+        name: str,

+        config: Optional[dict] = None,

+        sys_prompt: Optional[str] = None,

+        model: Optional[Union[Callable[..., Any], str]] = None,

+        use_memory: bool = True,

+        memory_config: Optional[dict] = None,

+    ) -> None:

+

+    # ... [code omitted for brevity]

+    def observe(self, x: Union[dict, Sequence[dict]]) -> None:

+        # An optional method for updating the agent's internal state based on

+        # messages it has observed. This method can be used to enrich the

+        # agent's understanding and memory without producing an immediate

+        # response.

+        self.memory.add(x)

+

+    def reply(self, x: dict = None) -> dict:

+        # The core method to be implemented by custom agents. It defines the

+        # logic for processing an input message and generating a suitable

+        # response.

+        raise NotImplementedError(

+            f"Agent [{type(self).__name__}] is missing the required "

+            f'"reply" function.',

+        )

+

+    # ... [code omitted for brevity]

+```

+
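As a rough illustration of the `observe`/`reply` contract described above, the following self-contained sketch defines a tiny stand-in base class and one custom agent. `MiniAgentBase` and `EchoAgent` are hypothetical names used only here; the stand-in keeps memory in a plain list and is not the real `AgentBase`.

```python
from typing import List, Optional, Sequence, Union


class MiniAgentBase:
    """Hypothetical stand-in for AgentBase: list memory plus observe/reply."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.memory: List[dict] = []

    def observe(self, x: Union[dict, Sequence[dict]]) -> None:
        # Record one message or a sequence of messages without replying.
        if isinstance(x, dict):
            self.memory.append(x)
        else:
            self.memory.extend(x)

    def reply(self, x: Optional[dict] = None) -> dict:
        raise NotImplementedError(
            f"Agent [{type(self).__name__}] is missing the required "
            f'"reply" function.',
        )

    def __call__(self, x: Optional[dict] = None) -> dict:
        # Calling the agent delegates to reply, mirroring the Operator idea.
        return self.reply(x)


class EchoAgent(MiniAgentBase):
    """A custom agent only has to implement reply."""

    def reply(self, x: Optional[dict] = None) -> dict:
        if x is not None:
            self.memory.append(x)
        content = x["content"] if x is not None else ""
        msg = {"name": self.name, "content": f"echo: {content}"}
        self.memory.append(msg)
        return msg


agent = EchoAgent("Echo")
agent.observe({"name": "Moderator", "content": "Game starts."})
out = agent({"name": "User", "content": "hi"})
print(out)
```

The observed message, the input, and the reply all end up in memory, which is the context a model-backed agent would draw on when building its next prompt.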

+## Exploring the AgentPool

+

+The *AgentPool* within AgentScope is a curated ensemble of ready-to-use, specialized agents. Each of these agents is tailored for a distinct role and comes equipped with default behaviors that address specific tasks. The *AgentPool* is designed to expedite the development process by providing various templates of `Agent`.

+

+Below is a table summarizing the functionality of some of the key agents available in the Agent Pool:

+

+| Agent Type | Description | Typical Use Cases |

+| -------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |

+| `AgentBase` | Serves as the superclass for all agents, providing essential attributes and methods. | The foundation for building any custom agent. |

+| `RpcAgentBase` | Executes remote procedure calls in distributed mode. | The foundation for building any custom agent in distributed mode. |

+| `DialogAgent` | Manages dialogues by understanding context and generating coherent responses. | Customer service bots, virtual assistants. |

+| `UserAgent` | Interacts with the user to collect input, generating messages that may include URLs or additional specifics based on required keys. | Collecting user input for agents |

+| *More to Come* | AgentScope is continuously expanding its pool with more specialized agents for diverse applications. | |

+

+## Customizing Agents from the AgentPool

+

+Customizing an agent from AgentPool enables you to tailor its functionality to meet the unique demands of your multi-agent application. You have the flexibility to modify existing agents with minimal effort by **adjusting configurations** and prompts or, for more extensive customization, you can engage in secondary development.

+

+Below, we show how to configure various agents from the AgentPool:

+

+### `DialogAgent`

+

+* **Reply Method**: The `reply` method contains the main logic for processing the input *message* and generating a response.

+

+```python

+def reply(self, x: dict = None) -> dict:

+    # Additional processing steps can occur here

+

+    if x is not None:

+        self.memory.add(x)  # Update the memory with the input

+

+    # Generate a prompt for the language model using the system prompt and memory

+    prompt = self.engine.join(self.sys_prompt, self.memory.get_memory())

+

+    # Invoke the language model with the prepared prompt

+    response = self.model(prompt, parse_func=json.loads, fault_handler=lambda x: {"speak": x})

+

+    # Format the response and create a message object

+    msg = Msg(self.name, response.get("speak", None) or response, **response)

+

+    # Record the message to memory and return it

+    self.memory.add(msg)

+    return msg

+```

+

+* **Usages:** To tailor a `DialogAgent` for a customer service bot:

+

+```python

+from agentscope.agents import DialogAgent

+

+# Configuration for the DialogAgent

+dialog_agent_config = {

+    "name": "ServiceBot",

+    "model": "gpt-3.5",  # Specify the model used for dialogue generation

+    "sys_prompt": "Act as AI assistant to interact with the others. Try to "

+                  "respond on one line.\n",  # Custom prompt for the agent

+    # Other configurations specific to the DialogAgent

+}

+

+# Create and configure the DialogAgent

+service_bot = DialogAgent(**dialog_agent_config)

+```

+

+### `UserAgent`

+

+* **Reply Method**: This method processes user input by prompting for content and, if needed, for additional keys and a URL. The gathered data is wrapped in a *message* object, which is stored in the agent's memory for logging or later use and returned as the response.

+

+```python

+def reply(

+    self,

+    x: dict = None,

+    required_keys: Optional[Union[list[str], str]] = None,

+) -> dict:

+    # Check if there is initial data to be added to memory

+    if x is not None:

+        self.memory.add(x)

+

+    content = input(f"{self.name}: ")  # Prompt the user for input

+    kwargs = {}

+

+    # Prompt for additional information based on the required keys

+    if required_keys is not None:

+        if isinstance(required_keys, str):

+            required_keys = [required_keys]

+        for key in required_keys:

+            kwargs[key] = input(f"{key}: ")

+

+    # Optionally prompt for a URL if required

+    url = None

+    if self.require_url:

+        url = input("URL: ")

+

+    # Create a message object with the collected input and additional details

+    msg = Msg(self.name, content=content, url=url, **kwargs)

+

+    # Add the message object to memory

+    self.memory.add(msg)

+    return msg

+```

+

+* **Usages:** To configure a `UserAgent` for collecting user input and URLs (of a file, image, video, audio, or website):

+

+```python

+from agentscope.agents import UserAgent

+

+# Configuration for UserAgent

+user_agent_config = {

+ "name": "User",

+ "require_url": True, # If true, the agent will require a URL

+}

+

+# Create and configure the UserAgent

+user_proxy_agent = UserAgent(**user_agent_config)

+```

+

+[[Return to the top]](#customizing-your-own-agent)

diff --git a/docs/sphinx_doc/source/tutorial/202-pipeline.md b/docs/sphinx_doc/source/tutorial/202-pipeline.md

new file mode 100644

index 000000000..d61313fe5

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/202-pipeline.md

@@ -0,0 +1,301 @@

+(202-pipeline)=

+

+# Agent Interactions: Dive deeper into Pipelines and Message Hub

+

+**Pipeline** and **MsgHub** (message hub) describe, as a single step or a sequence of steps, how structured `Msg` objects pass between agents, which streamlines collaboration across agents.

+

+`Pipeline` allows users to program communication among agents easily, and `MsgHub` enables message sharing among agents like a group chat.

+

+## Pipelines

+

+Pipelines in AgentScope serve as conduits through which messages pass among agents. In AgentScope, an `Agent` is a subclass of `Operator` that performs some operation on input data. Pipelines extend this concept by encapsulating multiple agents, and they also act as an `Operator`.

+

+Here is the base class for all pipeline types:

+

+```python

+class PipelineBase(Operator):

+    """Base interface of all pipelines."""

+    # ... [code omitted for brevity]

+    @abstractmethod

+    def __call__(self, x: Optional[dict] = None) -> dict:

+        """Define the actions taken by this pipeline.

+

+        Args:

+            x (Optional[`dict`], optional):

+                Dialog history and some environmental information

+

+        Returns:

+            `dict`: The pipeline's response to the input.

+        """

+```

+

+### Category

+

+AgentScope provides two main types of pipelines based on their implementation strategy:

+

+* **Operator-Type Pipelines**

+

+ * These pipelines are object-oriented and inherit from the `PipelineBase`. They are operators themselves and can be combined with other operators to create complex interaction patterns.

+

+ ```python

+ # Instantiate and invoke

+ pipeline = ClsPipeline(agent1, agent2, agent3)

+ x = pipeline(x)

+ ```

+

+* **Functional Pipelines**

+

+ * Functional pipelines provide control flow mechanisms similar to those of the class-based pipelines but are implemented as standalone functions. They are useful in scenarios where a class-based setup is not necessary or preferred.

+

+ ```python

+ # Just invoke

+ x = funcpipeline(agent1, agent2, agent3, x)

+ ```

+

+Pipelines are categorized based on their functionality, much like programming language constructs. The table below outlines the different pipelines available in AgentScope:

+

+| Operator-Type Pipeline | Functional Pipeline | Description |

+| -------------------- | -------------------- | ------------------------------------------------------------ |

+| `SequentialPipeline` | `sequentialpipeline` | Executes a sequence of operators in order, passing the output of one as the input to the next. |

+| `IfElsePipeline` | `ifelsepipeline` | Implements conditional logic, executing one operator if a condition is true and another if it is false. |

+| `SwitchPipeline` | `switchpipeline` | Facilitates multi-branch selection, executing an operator from a mapped set based on the evaluation of a condition. |

+| `ForLoopPipeline` | `forlooppipeline` | Repeatedly executes an operator for a set number of iterations or until a specified break condition is met. |

+| `WhileLoopPipeline` | `whilelooppipeline` | Continuously executes an operator as long as a given condition remains true. |

+| - | `placeholder` | Acts as a placeholder in branches that do not require any operations in flow control like if-else/switch |

+

+### Usage

+

+This section illustrates how pipelines can simplify the implementation of logic in multi-agent applications by comparing the usage of pipelines versus approaches without pipelines.

+

+**Note:** In the examples below, we use the term `agent` to represent any instance that can act as an `Operator`. This is for ease of understanding and to illustrate how pipelines orchestrate interactions between different operations. You can replace `agent` with any `Operator`, which allows a mix of `agent` and `pipeline` in practice.

+

+#### `SequentialPipeline`

+

+* Without pipeline:

+

+ ```python

+ x = agent1(x)

+ x = agent2(x)

+ x = agent3(x)

+ ```

+

+* Using pipeline:

+

+ ```python

+ from agentscope.pipelines import SequentialPipeline

+

+ pipe = SequentialPipeline([agent1, agent2, agent3])

+ x = pipe(x)

+ ```

+

+* Using functional pipeline:

+

+ ```python

+ from agentscope.pipelines import sequentialpipeline

+

+ x = sequentialpipeline([agent1, agent2, agent3], x)

+ ```

+

+#### `IfElsePipeline`

+

+* Without pipeline:

+

+ ```python

+ if condition(x):

+     x = agent1(x)

+ else:

+     x = agent2(x)

+ ```

+

+* Using pipeline:

+

+ ```python

+ from agentscope.pipelines import IfElsePipeline

+

+ pipe = IfElsePipeline(condition, agent1, agent2)

+ x = pipe(x)

+ ```

+

+* Using functional pipeline:

+

+ ```python

+ from agentscope.pipelines import ifelsepipeline

+

+ x = ifelsepipeline(condition, agent1, agent2, x)

+ ```

+

+#### `SwitchPipeline`

+

+* Without pipeline:

+

+ ```python

+ switch_result = condition(x)

+ if switch_result == case1:

+     x = agent1(x)

+ elif switch_result == case2:

+     x = agent2(x)

+ else:

+     x = default_agent(x)

+ ```

+

+* Using pipeline:

+

+ ```python

+ from agentscope.pipelines import SwitchPipeline

+

+ case_operators = {case1: agent1, case2: agent2}

+ pipe = SwitchPipeline(condition, case_operators, default_agent)

+ x = pipe(x)

+ ```

+

+* Using functional pipeline:

+

+ ```python

+ from agentscope.pipelines import switchpipeline

+

+ case_operators = {case1: agent1, case2: agent2}

+ x = switchpipeline(condition, case_operators, default_agent, x)

+ ```

+

+#### `ForLoopPipeline`

+

+* Without pipeline:

+

+ ```python

+ for i in range(max_iterations):

+     x = agent(x)

+     if break_condition(x):

+         break

+ ```

+

+* Using pipeline:

+

+ ```python

+ from agentscope.pipelines import ForLoopPipeline

+

+ pipe = ForLoopPipeline(agent, max_iterations, break_condition)

+ x = pipe(x)

+ ```

+

+* Using functional pipeline:

+

+ ```python

+ from agentscope.pipelines import forlooppipeline

+

+ x = forlooppipeline(agent, max_iterations, break_condition, x)

+ ```

+

+#### `WhileLoopPipeline`

+

+* Without pipeline:

+

+ ```python

+ while condition(x):

+     x = agent(x)

+ ```

+

+* Using pipeline:

+

+ ```python

+ from agentscope.pipelines import WhileLoopPipeline

+

+ pipe = WhileLoopPipeline(agent, condition)

+ x = pipe(x)

+ ```

+

+* Using functional pipeline:

+

+ ```python

+ from agentscope.pipelines import whilelooppipeline

+

+ x = whilelooppipeline(agent, condition, x)

+ ```

+

+### Pipeline Combination

+

+It's worth noting that AgentScope supports combining pipelines to create complex interactions. For example, we can create a pipeline that executes a sequence of agents in order and then executes another pipeline that chooses between two agents based on a condition.

+

+```python

+from agentscope.pipelines import SequentialPipeline, IfElsePipeline

+# Create a pipeline that executes agents in order

+pipe1 = SequentialPipeline([agent1, agent2, agent3])

+# Create a pipeline that executes agent4 if the condition holds, otherwise agent5

+pipe2 = IfElsePipeline(condition, agent4, agent5)

+# Create a pipeline that executes pipe1 and pipe2 in order

+pipe3 = SequentialPipeline([pipe1, pipe2])

+# Invoke the pipeline

+x = pipe3(x)

+```

+
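Combination works because a pipeline is itself callable in the same way as an agent. The sketch below illustrates this with plain Python callables. `MiniSequential`, `MiniIfElse`, and `make_agent` are hypothetical stand-ins written for this example, not AgentScope classes.

```python
from typing import Callable, Sequence

# Any callable that maps a message dict to a message dict acts as an operator.
Operator = Callable[[dict], dict]


class MiniSequential:
    """Run operators in order, feeding each output into the next."""

    def __init__(self, operators: Sequence[Operator]) -> None:
        self.operators = list(operators)

    def __call__(self, x: dict) -> dict:
        for op in self.operators:
            x = op(x)
        return x


class MiniIfElse:
    """Run if_op when condition(x) is true, otherwise else_op."""

    def __init__(
        self,
        condition: Callable[[dict], bool],
        if_op: Operator,
        else_op: Operator,
    ) -> None:
        self.condition = condition
        self.if_op = if_op
        self.else_op = else_op

    def __call__(self, x: dict) -> dict:
        return self.if_op(x) if self.condition(x) else self.else_op(x)


def make_agent(tag: str) -> Operator:
    """Build a toy agent that appends its tag to the message content."""

    def agent(x: dict) -> dict:
        return {"content": x["content"] + [tag]}

    return agent


pipe1 = MiniSequential([make_agent("a1"), make_agent("a2")])
pipe2 = MiniIfElse(
    lambda x: len(x["content"]) > 1,
    make_agent("a4"),
    make_agent("a5"),
)
# Pipelines nest because they expose the same call interface as agents.
pipe3 = MiniSequential([pipe1, pipe2])

result = pipe3({"content": []})
print(result)
```

Since `pipe1` adds two tags before `pipe2` evaluates its condition, the if-branch is taken in this run.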

+## MsgHub

+

+`MsgHub` is designed to manage dialogue among a group of agents, allowing for the sharing of messages. Through `MsgHub`, agents can broadcast messages to all other agents in the group with `broadcast`.

+

+Here is the core class for a `MsgHub`:

+

+```python

+class MsgHubManager:

+    """MsgHub manager class for sharing dialog among a group of agents."""

+    # ... [code omitted for brevity]

+

+    def broadcast(self, msg: Union[dict, list[dict]]) -> None:

+        """Broadcast the message to all participants."""

+        for agent in self.participants:

+            agent.observe(msg)

+

+    def add(self, new_participant: Union[Sequence[AgentBase], AgentBase]) -> None:

+        """Add new participant into this hub"""

+        # ... [code omitted for brevity]

+

+    def delete(self, participant: Union[Sequence[AgentBase], AgentBase]) -> None:

+        """Delete agents from participant."""

+        # ... [code omitted for brevity]

+```

+

+### Usage

+

+#### Creating a MsgHub

+

+To create a `MsgHub`, instantiate a `MsgHubManager` by calling the `msghub` helper function with a list of participating agents. Additionally, you can supply an optional initial announcement that, if provided, will be broadcast to all participants upon initialization.

+

+```python

+from agentscope.msg_hub import msghub

+

+# Initialize MsgHub with participating agents

+hub_manager = msghub(

+ participants=[agent1, agent2, agent3], announcement=initial_announcement

+)

+```

+

+#### Broadcast message in MsgHub

+

+The `MsgHubManager` can be used with a context manager to handle the setup and teardown of the message hub environment:

+

+```python

+with msghub(

+    participants=[agent1, agent2, agent3], announcement=initial_announcement

+) as hub:

+    # Agents can now broadcast and receive messages within this block

+    agent1()

+    agent2()

+

+    # Or manually broadcast a message

+    hub.broadcast(some_message)

+

+```

+

+Upon exiting the context block, the `MsgHubManager` ensures that each agent's audience is cleared, preventing any unintended message sharing outside of the hub context.

+
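The setup-and-teardown behavior described above can be pictured with a small context manager. This is a simplified sketch under stated assumptions: `MiniAgent` and `MiniHub` are hypothetical classes, the `audience` attribute is modeled as a plain list, and the toy agent takes its content as an argument, unlike the agents in the example above.

```python
from typing import List, Optional


class MiniAgent:
    """Hypothetical agent that records what it observes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.audience: List["MiniAgent"] = []
        self.memory: List[dict] = []

    def observe(self, msg: dict) -> None:
        self.memory.append(msg)

    def __call__(self, content: str) -> dict:
        # Produce a message and share it with the current audience, if any.
        msg = {"name": self.name, "content": content}
        for listener in self.audience:
            listener.observe(msg)
        return msg


class MiniHub:
    """Hypothetical hub: wires audiences on enter, clears them on exit."""

    def __init__(
        self,
        participants: List[MiniAgent],
        announcement: Optional[dict] = None,
    ) -> None:
        self.participants = list(participants)
        self.announcement = announcement

    def __enter__(self) -> "MiniHub":
        for agent in self.participants:
            agent.audience = [a for a in self.participants if a is not agent]
        if self.announcement is not None:
            self.broadcast(self.announcement)
        return self

    def __exit__(self, *exc_info) -> None:
        for agent in self.participants:
            agent.audience = []

    def broadcast(self, msg: dict) -> None:
        for agent in self.participants:
            agent.observe(msg)


alice, bob = MiniAgent("Alice"), MiniAgent("Bob")
welcome = {"name": "Host", "content": "Welcome"}
with MiniHub([alice, bob], announcement=welcome):
    alice("Hi Bob")  # inside the hub: Bob observes this message
alice("Anyone there?")  # outside the hub: nobody observes it

print(len(bob.memory))
```

Clearing the audience in `__exit__` is what keeps later messages from leaking to former participants.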

+#### Adding and Deleting Participants

+

+You can dynamically add or remove agents from the `MsgHub`:

+

+```python

+# Add a new participant

+hub.add(new_agent)

+

+# Remove an existing participant

+hub.delete(existing_agent)

+```

+

+[[Return to the top]](#agent-interactions-dive-deeper-into-pipelines-and-message-hub)

diff --git a/docs/sphinx_doc/source/tutorial/203-model.md b/docs/sphinx_doc/source/tutorial/203-model.md

new file mode 100644

index 000000000..92c8fde7c

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/203-model.md

@@ -0,0 +1,254 @@

+(203-model)=

+

+# Using Different Model Sources with Model API

+

+AgentScope allows for the integration of multi-modal models from various sources. The core step is initialization: once AgentScope is initialized with a given config, all agent instances select the appropriate model API based on the specified model name (e.g., `model='gpt-4'`):

+

+```python

+import agentscope

+

+agentscope.init(model_configs=PATH_TO_MODEL_CONFIG)

+```

+

+where the model configs can be a list of dicts:

+

+```json

+[

+ {

+ "type": "openai",

+ "name": "gpt-4",

+ "parameters": {

+ "api_key": "xxx",

+ "organization_id": "xxx",

+ "temperature": 0.0

+ }

+ },

+ {

+ "type": "openai_dall_e",

+ "name": "dall-e-3",

+ "parameters": {

+ "api_key": "xxx",

+ "organization_id": "xxx",

+ "size": "1024x1024"

+ }

+ }

+ // Additional models can be configured here

+]

+```

+

+This allows users to configure the model once, enabling shared use across all agents within the multi-agent application. Here is a table outlining the supported APIs and the type of arguments required for each:

+

+| Model Usage | Type Argument in AgentScope | Supported APIs |

+| -------------------- | ------------------ | ------------------------------------------------------------ |

+| Text generation | `openai` | Standard OpenAI chat API, FastChat and vllm |

+| Image generation | `openai_dall_e` | DALL-E API for generating images |

+| Embedding | `openai_embedding` | API for text embeddings |

+| General usages in POST | `post_api` | Huggingface/ModelScope Inference API, and customized post API |

+

+## Standard OpenAI API
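The name-based selection described above can be thought of as a lookup table keyed by the `name` field of each config. The sketch below is only an illustration of that idea and does not reflect AgentScope's internals. `build_registry` is a hypothetical helper, and the config text is a trimmed copy of the example above with the API keys omitted.

```python
import json

CONFIG_TEXT = """
[
  {"type": "openai", "name": "gpt-4", "parameters": {"temperature": 0.0}},
  {"type": "openai_dall_e", "name": "dall-e-3", "parameters": {"size": "1024x1024"}}
]
"""


def build_registry(config_text: str) -> dict:
    """Index model configs by name and reject duplicate names."""
    registry = {}
    for cfg in json.loads(config_text):
        name = cfg["name"]
        if name in registry:
            raise ValueError(f"Duplicate model name: {name}")
        registry[name] = cfg
    return registry


registry = build_registry(CONFIG_TEXT)

# An agent created with model="gpt-4" would resolve to this entry.
chosen = registry["gpt-4"]
print(chosen["type"])
```

Keeping names unique matters here because the name is the only handle an agent uses to pick its model.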

+

+Our configuration is fully compatible with the Standard OpenAI API. For specific parameter configuration and usage guides, we recommend visiting their official website: [https://platform.openai.com/docs/api-reference/introduction](https://platform.openai.com/docs/api-reference/introduction).

+

+## Self-host Model API

+

+In addition to the OpenAI API, AgentScope also supports open-source models served through a POST request API. This section introduces how to quickly set up local model API serving with different inference engines.

+

+### Flask-based Model API Serving

+

+[Flask](https://github.com/pallets/flask) is a lightweight web application framework. It is easy to build a local model API serving with Flask.

+

+Here we provide two Flask examples with Transformers and ModelScope libraries, respectively. You can build your own model API serving with a few modifications.

+

+#### With Transformers Library

+

+##### Install Libraries and Set up Serving

+

+Install Flask and Transformers with the following command.

+

+```bash

+pip install Flask transformers

+```

+

+Taking model `meta-llama/Llama-2-7b-chat-hf` and port `8000` as an example, set up the model API serving by running the following command.

+

+```bash

+# use --device "cpu" to run without a GPU

+python flask_transformers/setup_hf_service.py \

+    --model_name_or_path meta-llama/Llama-2-7b-chat-hf \

+    --device "cuda:0" \

+    --port 8000

+```

+

+You can replace `meta-llama/Llama-2-7b-chat-hf` with any model card in the huggingface model hub.

+

+##### How to use in AgentScope

+

+In AgentScope, you can load the model with the following model configs: `./flask_transformers/model_config.json`.

+

+```json

+{

+ "type": "post_api",

+ "name": "flask_llama2-7b-chat",

+ "api_url": "http://127.0.0.1:8000/llm/",

+ "json_args": {

+ "max_length": 4096,

+ "temperature": 0.5

+ }

+}

+```

+

+##### Note

+

+In this model serving, the messages from post requests should be in **STRING** format. You can use [templates for chat model](https://huggingface.co/docs/transformers/main/chat_templating) from *transformers* with a little modification based on `./flask_transformers/setup_hf_service.py`.

+

+#### With ModelScope Library

+

+##### Install Libraries and Set up Serving

+

+Install Flask and modelscope with the following command.

+

+```bash

+pip install Flask modelscope

+```

+

+Taking model `modelscope/Llama-2-7b-ms` and port `8000` as an example, to set up the model API serving, run the following command.

+

+```bash

+# use --device "cpu" to run without a GPU

+python flask_modelscope/setup_ms_service.py \

+    --model_name_or_path modelscope/Llama-2-7b-ms \

+    --device "cuda:0" \

+    --port 8000

+```

+

+You can replace `modelscope/Llama-2-7b-ms` with any model card in modelscope model hub.

+

+##### How to use in AgentScope

+

+In AgentScope, you can load the model with the following model configs: `flask_modelscope/model_config.json`.

+

+```json

+{

+ "type": "post_api",

+ "name": "flask_llama2-7b-ms",

+ "api_url": "http://127.0.0.1:8000/llm/",

+ "json_args": {

+ "max_length": 4096,

+ "temperature": 0.5

+ }

+}

+```

+

+##### Note

+

+Similar to the Transformers example, the messages from post requests should be in **STRING** format.

+

+### FastChat

+

+[FastChat](https://github.com/lm-sys/FastChat) is an open platform that provides a quick setup for model serving with OpenAI-compatible RESTful APIs.

+

+#### Install Libraries and Set up Serving

+

+To install FastChat, run

+

+```bash

+pip install "fschat[model_worker,webui]"

+```

+

+Taking model `meta-llama/Llama-2-7b-chat-hf` and port `8000` as an example, run the following command to set up model API serving.

+

+```bash

+bash fastchat_script/fastchat_setup.sh -m meta-llama/Llama-2-7b-chat-hf -p 8000

+```

+

+#### Supported Models

+

+Refer to [supported model list](https://github.com/lm-sys/FastChat/blob/main/docs/model_support.md#supported-models) of FastChat.

+

+#### How to use in AgentScope

+

+Now you can load the model in AgentScope by the following model config: `fastchat_script/model_config.json`.

+

+```json

+{

+ "type": "openai",

+ "name": "meta-llama/Llama-2-7b-chat-hf",

+ "api_key": "EMPTY",

+ "client_args": {

+ "base_url": "http://127.0.0.1:8000/v1/"

+ },

+ "generate_args": {

+ "temperature": 0.5

+ }

+}

+```

+

+### vllm

+

+[vllm](https://github.com/vllm-project/vllm) is a high-throughput inference and serving engine for LLMs.

+

+#### Install Libraries and Set up Serving

+

+To install vllm, run

+

+```bash

+pip install vllm

+```

+

+Taking model `meta-llama/Llama-2-7b-chat-hf` and port `8000` as an example, to set up model API serving, run

+

+```bash

+bash vllm_script/vllm_setup.sh -m meta-llama/Llama-2-7b-chat-hf -p 8000

+```

+

+#### Supported models

+

+Please refer to the [supported models list](https://docs.vllm.ai/en/latest/models/supported_models.html) of vllm.

+

+#### How to use in AgentScope

+

+Now you can load the model in AgentScope by the following model config: `vllm_script/model_config.json`.

+

+```json

+{

+ "type": "openai",

+ "name": "meta-llama/Llama-2-7b-chat-hf",

+ "api_key": "EMPTY",

+ "client_args": {

+ "base_url": "http://127.0.0.1:8000/v1/"

+ },

+ "generate_args": {

+ "temperature": 0.5

+ }

+}

+```

+

+## Model Inference API

+

+Both [Huggingface](https://huggingface.co/docs/api-inference/index) and [ModelScope](https://www.modelscope.cn) provide model inference APIs, which can be used with the AgentScope post API model wrapper.

+Taking `gpt2` in HuggingFace inference API as an example, you can use the following model config in AgentScope.

+

+```json

+{

+ "type": "post_api",

+ "name": "gpt2",

+ "headers": {

+ "Authorization": "Bearer {YOUR_API_TOKEN}"

+ },

+ "api_url": "https://api-inference.huggingface.co/models/gpt2"

+}

+```

+

+## In-memory Models without API

+

+It is entirely possible to use models without setting up an API service. Here's an example of how to initialize an agent with a local model instance:

+

+```python

+from transformers import AutoModelForCausalLM, AutoTokenizer

+

+model = AutoModelForCausalLM.from_pretrained(MODEL_NAME)

+tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

+model.eval()

+# Do remember to re-implement the `reply` method to tokenize *message*!

+agent = YourAgent(name='agent', model=model, tokenizer=tokenizer)

+```

+

+[[Return to the top]](#using-different-model-sources-with-model-api)

diff --git a/docs/sphinx_doc/source/tutorial/204-service.md b/docs/sphinx_doc/source/tutorial/204-service.md

new file mode 100644

index 000000000..448627a28

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/204-service.md

@@ -0,0 +1,135 @@

+(204-service)=

+

+# Enhancing Agent Capabilities with Service Functions

+

+**Service functions**, often referred to simply as **Service**, constitute a versatile suite of utility tools that can be used to enhance the functionality of agents. A service is designed to perform a specific task like web search, code interpretation, or file processing. Services can be invoked by agents and other components for reuse across different scenarios.

+

+## ServiceResponse

+

+The design behind `Service` distinguishes service functions from typical Python functions. When execution fails, service functions do not raise exceptions within the program. Instead, they return a `ServiceResponse` (a subclass of `dict`).

+

+```python

+def demo_service() -> ServiceResponse:
+    # do some specific actions
+    # ......
+    res = ServiceResponse(status=status, content=content)
+    return res
+
+
+class ServiceResponse(dict):
+    """Used to wrap the execution results of the services"""
+    # ... [code omitted for brevity]
+
+    def __init__(
+        self,
+        status: ServiceExecStatus,
+        content: Any,
+    ):
+        self.status = status
+        self.content = content

+```

+

+This object encapsulates the `status` of the execution (`SUCCESS` or `ERROR`) and the `content`, which is either the output of a successful execution or the error stack from a failure.

+

+Here's why this design is beneficial:

+

+- **Error Handling**: `Service` and `ServiceResponse` allow agents to handle errors flexibly. An agent can check the status of the response and decide on the next step: retry the operation, use fallback logic, or analyze the error stack and choose an appropriate strategy to make improvements.

+- **Consistency**: Service functions provide a consistent interface for both successful outcomes and errors. This consistency simplifies the interaction model for agents that use these services.
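
The two points above can be sketched with a small, self-contained example. The `ServiceExecStatus` and `ServiceResponse` classes here are simplified stand-ins written for illustration (the enum values are assumptions), and `safe_divide` and `divide_with_fallback` are hypothetical names rather than AgentScope functions.

```python
from enum import IntEnum
from typing import Any


class ServiceExecStatus(IntEnum):
    """Stand-in for the execution status enum (values are assumptions)."""

    SUCCESS = 1
    ERROR = -1


class ServiceResponse(dict):
    """Stand-in dict subclass carrying `status` and `content`."""

    def __init__(self, status: ServiceExecStatus, content: Any) -> None:
        super().__init__(status=status, content=content)
        self.status = status
        self.content = content


def safe_divide(a: float, b: float) -> ServiceResponse:
    """Hypothetical service: report a failure instead of raising."""
    try:
        return ServiceResponse(ServiceExecStatus.SUCCESS, a / b)
    except ZeroDivisionError as e:
        return ServiceResponse(
            ServiceExecStatus.ERROR, f"{type(e).__name__}: {e}"
        )


def divide_with_fallback(a: float, b: float, fallback: float = 0.0) -> float:
    """Caller side: branch on the status rather than catching exceptions."""
    response = safe_divide(a, b)
    if response.status == ServiceExecStatus.SUCCESS:
        return response.content
    # Fallback logic; an agent could also retry or inspect response.content
    return fallback
```

Because both outcomes come back through the same return type, the caller needs only one code path to inspect the result.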

+

+## Overview of Service Functions

+

+Below is a table outlining various service functions categorized by their primary domain. These services offer a range of capabilities to agents.

+

+| Service Scenario | Service Function Name | Description |

+| --------------- | --------------------- | ------------------------------------------------------------ |

+| Code | `execute_python_code` | Execute a string of Python code, optionally inside a Docker container. |

+| Retrieval | `retrieve_from_list` | Retrieve specific items from a list based on given criteria. |

+| SQL Query | `query_mysql` | Execute a SQL query against a MySQL database and return results. |

+| | `query_sqlite` | Execute a SQL query against a SQLite database and return results. |

+| | `query_mongodb` | Perform a query or operation against a MongoDB collection. |

+| Text Processing | `summarization` | Summarize a block of text to highlight the main points with LLM. |

+| Web Search | `web_search` | Perform a web search using a specified search engine (currently supports Google and Bing). |

+| File | `create_file` | Create a new file at a specified path with optional initial content. |

+| | `delete_file` | Delete a file specified by the file path. |

+| | `move_file` | Move or rename a file from one path to another. |

+| | `create_directory` | Create a new directory at a specified path. |

+| | `delete_directory` | Delete a directory and all of its contents. |

+| | `move_directory` | Move or rename a directory from one path to another. |

+| | `read_text_file` | Read and return the contents of a text file. |

+| | `write_text_file` | Write text content to a file at a specified path. |

+| | `read_json_file` | Read and parse the contents of a JSON file. |

+| | `write_json_file` | Serialize a Python object to JSON and write it to a file. |

+| *More to Come* | | Additional service functions are being developed and will be added to enhance the capabilities of AgentScope further. |

+

+For details about each Service Function, please consult the API references, where the docstrings provide comprehensive information about the parameters, expected input formats, return types, and any additional options that can modify the behavior of the Service Function.

+

+## Usage

+

+In AgentScope, each Service Function comes with a detailed docstring and demonstrative test functions that show how to use it. To enhance the capabilities of your agents with these services, you can craft prompts that ask an LLM to generate the parameters for a Service.

+

+By composing appropriate prompts that align with the information detailed in the Service Functions' docstrings, you can guide an LLM to generate responses that match the required parameters of a `Service`.

+

+```python

+import json
+import inspect
+from agentscope.service import ServiceResponse
+from agentscope.agents import AgentBase
+
+
+def create_file(file_path: str, content: str = "") -> ServiceResponse:
+    """
+    Create a file and write content to it.
+
+    Args:
+        file_path (str): The path where the file will be created.
+        content (str): Content to write into the file.
+
+    Returns:
+        ServiceResponse: whose status indicates success or failure, and
+        whose content holds an error message if any, including the error
+        type.
+    """
+    # ... [code omitted for brevity]
+
+
+class YourAgent(AgentBase):
+    # ... [code omitted for brevity]
+
+    def reply(self, x: dict = None) -> dict:
+        # ... [code omitted for brevity]
+
+        # Construct the prompt for the agent to provide parameters in JSON
+        # format
+        prompt = (
+            f"To complete the user request\n```{x['content']}```\n"
+            "Please provide the necessary parameters in JSON format for the "
+            "function:\n"
+            f"Function: {create_file.__name__}\n"
+            "Description: Create a file and write content to it.\n"
+        )
+
+        # Add details about the function parameters
+        sig = inspect.signature(create_file)
+        parameters = sig.parameters.items()
+        params_prompt = "\n".join(
+            f"- {name} ({param.annotation.__name__}): "
+            f"{'(default: ' + json.dumps(param.default) + ')' if param.default is not inspect.Parameter.empty else ''}"
+            for name, param in parameters
+        )
+        prompt += params_prompt
+
+        # Get the model response
+        model_response = self.model(prompt)
+
+        # Parse the model response and call the create_file function
+        # Additional extraction functions might be necessary
+        try:
+            kwargs = json.loads(model_response.content)
+            create_file(**kwargs)
+        except (json.JSONDecodeError, TypeError):
+            # Error handling: malformed JSON or unexpected parameters
+            pass
+
+        # ... [code omitted for brevity]

+```
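
The comment above notes that additional extraction functions might be necessary, since an LLM often wraps its JSON in surrounding text. One possible sketch of such a helper, using only the standard library, is shown below. The name `extract_json_object` is hypothetical and not part of AgentScope.

```python
import json
from typing import Optional


def extract_json_object(text: str) -> Optional[dict]:
    """Return the first JSON object found in `text`, or None if there is none."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start` and
            # ignores any trailing text after it.
            obj, _ = decoder.raw_decode(text, start)
        except json.JSONDecodeError:
            obj = None
        if isinstance(obj, dict):
            return obj
        # Not valid JSON at this brace; try the next one.
        start = text.find("{", start + 1)
    return None
```

In the `reply` method above, `json.loads(model_response.content)` could then be replaced by a call to this helper, with a `None` result treated as a parsing failure.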

+

+[[Return to the top]](#enhancing-agent-capabilities-with-service-functions)

diff --git a/docs/sphinx_doc/source/tutorial/205-memory.md b/docs/sphinx_doc/source/tutorial/205-memory.md

new file mode 100644

index 000000000..728bc1f29

--- /dev/null

+++ b/docs/sphinx_doc/source/tutorial/205-memory.md

@@ -0,0 +1,146 @@

+(205-memory)=

+

+# Memory and Message Management

+

+A **Message** represents an individual piece of information or interaction flowing between or within agents. **Memory** stores and retrieves historical information, serving as the management system for messages. This allows the agent to remember past interactions, maintain context, and provide more coherent and relevant responses.

+

+## Understanding `MessageBase` and its subclasses

+

+### `MessageBase`

+

+`MessageBase` is designed to organize attributes of a message, like the agent's name, the content, and associated media URLs. It provides a structure that can be extended to create specific types of messages.

+

+```python