-[](https://arxiv.org/abs/2309.02033)

-[](docs/DeveloperGuide_ZH.md)

-

[](https://pypi.org/project/py-data-juicer)

[](https://hub.docker.com/r/datajuicer/data-juicer)

-[](README.md#documentation)

-[](README_ZH.md#documentation)

-[](https://alibaba.github.io/data-juicer/)

-[](https://modelscope.cn/studios?name=Data-Jiucer&page=1&sort=latest&type=1)

-[](https://modelscope.cn/datasets?organization=Data-Juicer&page=1)

-[](https://modelscope.cn/models?organization=Data-Juicer&page=1)

+[](docs/DeveloperGuide_ZH.md)

+[](docs/DeveloperGuide_ZH.md)

+[](https://modelscope.cn/studios?name=Data-Jiucer&page=1&sort=latest&type=1)

+[](https://huggingface.co/spaces?&search=datajuicer)

+

+[](README.md#documentation-index--文档索引-a-namedocumentationindex)

+[](README_ZH.md#documentation-index--文档索引-a-namedocumentationindex)

+[](https://alibaba.github.io/data-juicer/)

+[](https://arxiv.org/abs/2309.02033)

-[](https://huggingface.co/spaces?&search=datajuicer)

-[](https://huggingface.co/datasets?&search=datajuicer)

-[](https://huggingface.co/models?&search=datajuicer)

-[](tools/quality_classifier/README_ZH.md)

-[](tools/evaluator/README_ZH.md)

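+Data-Juicer is a one-stop **multimodal** data processing system that aims to provide higher-quality, richer, and more "digestible" data for large language models (LLMs).

-Data-Juicer is a one-stop data processing system that aims to provide higher-quality, richer, and more "digestible" data for large language models (LLMs).

-This project is under active development and maintenance. We will regularly enhance and add features and data recipes. You are welcome to join us in advancing LLM data development and research!

+Data-Juicer (including [DJ-SORA](docs/DJ_SORA_ZH.md)) is being actively updated and maintained. We will regularly enhance and add features and data recipes. You are warmly welcome to join us in advancing LLM data development and research!

If Data-Juicer helps your research and development, please cite our [work](#参考文献).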

@@ -39,7 +34,8 @@ Data-Juicer 是一个一站式数据处理系统,旨在为大语言模型 (LLM

----

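## News

--  [2024-02-20] We are actively maintaining a curated list of LLM-Data resources. You are welcome to [visit](docs/awesome_llm_data.md) and contribute!

+-  [2024-03-07] We have released **Data-Juicer [v0.2.0](https://github.com/alibaba/data-juicer/releases/tag/v0.2.0)**! This release adds more features for **multimodal data (including video)**. We have also launched **[DJ-SORA](docs/DJ_SORA_ZH.md)** to build open, large-scale, high-quality datasets for SORA-like large models!

+-  [2024-02-20] We are actively maintaining a *curated list* of LLM-Data resources. You are welcome to [visit](docs/awesome_llm_data.md) and contribute!

-  [2024-02-05] Our paper has been accepted by the SIGMOD'24 industrial track!

- [2024-01-10] A new horizon for "data mixture": the 2nd Data-Juicer LLM Data Challenge is officially open! Visit the [competition website](https://tianchi.aliyun.com/competition/entrance/532174) now for details.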

@@ -54,10 +50,11 @@ Data-Juicer 是一个一站式数据处理系统,旨在为大语言模型 (LLM

Table of Contents

===

-

* [Data-Juicer: Higher-Quality, Richer, More "Digestible" Data for LLMs](#data-juicer-为大语言模型提供更高质量更丰富更易消化的数据)

* [Table of Contents](#目录)

* [Features](#特点)

+ * [Documentation Index | 文档索引](#documentation-index--文档索引-a-namedocumentationindex)

+ * [Demos](#演示样例)

* [Prerequisites](#前置条件)

* [Installation](#安装)

* [Install from Source](#从源码安装)

@@ -66,28 +63,28 @@ Data-Juicer 是一个一站式数据处理系统,旨在为大语言模型 (LLM

* [Installation Verification](#安装校验)

* [Quick Start](#快速上手)

* [Data Processing](#数据处理)

+ * [Distributed Data Processing](#分布式数据处理)

* [Data Analysis](#数据分析)

* [Data Visualization](#数据可视化)

* [Build Config Files](#构建配置文件)

* [Preprocess Raw Data (Optional)](#预处理原始数据可选)

* [For Docker Users](#对于-docker-用户)

- * [Documentation | 文档](#documentation)

* [Data Recipes](#数据处理菜谱)

- * [Demos](#演示样例)

* [License](#开源协议)

* [Contributing](#贡献)

* [Acknowledgements](#致谢)

* [References](#参考文献)

+

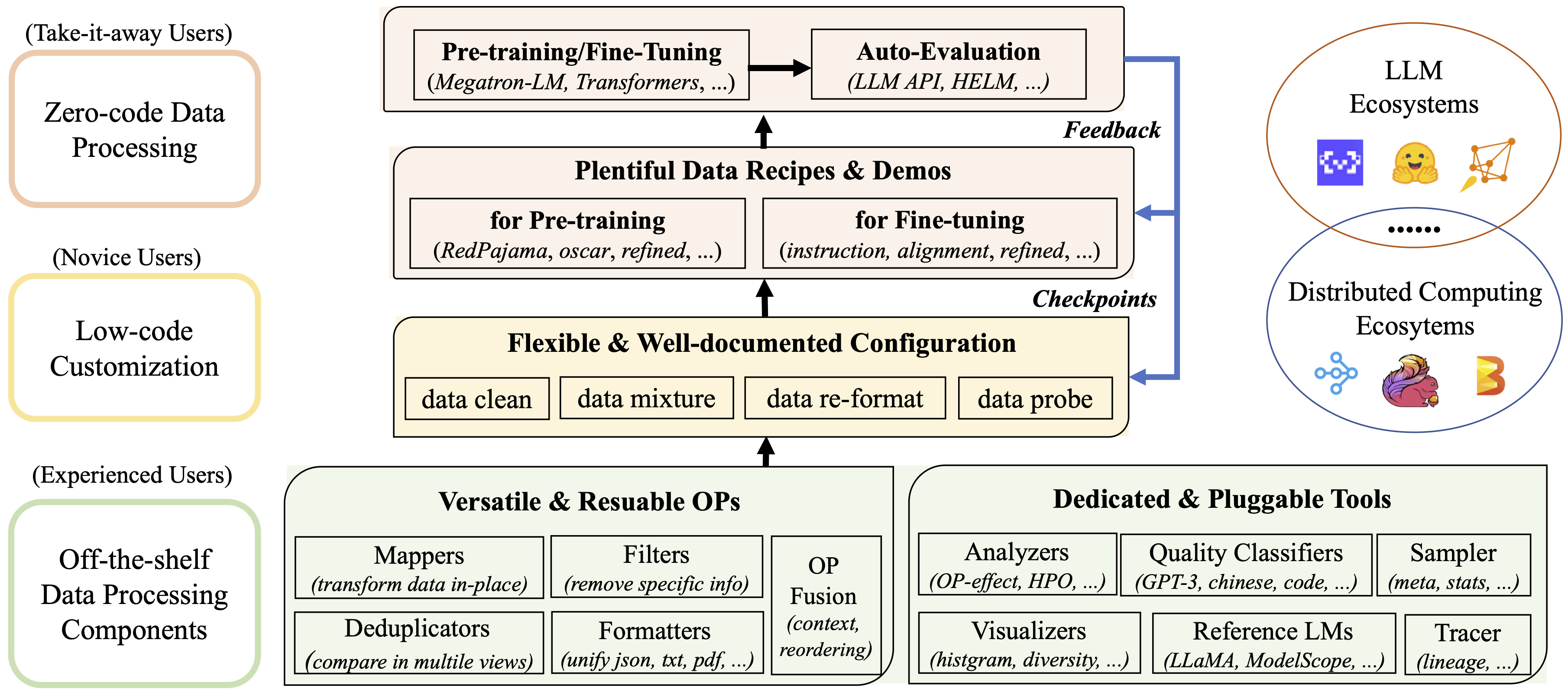

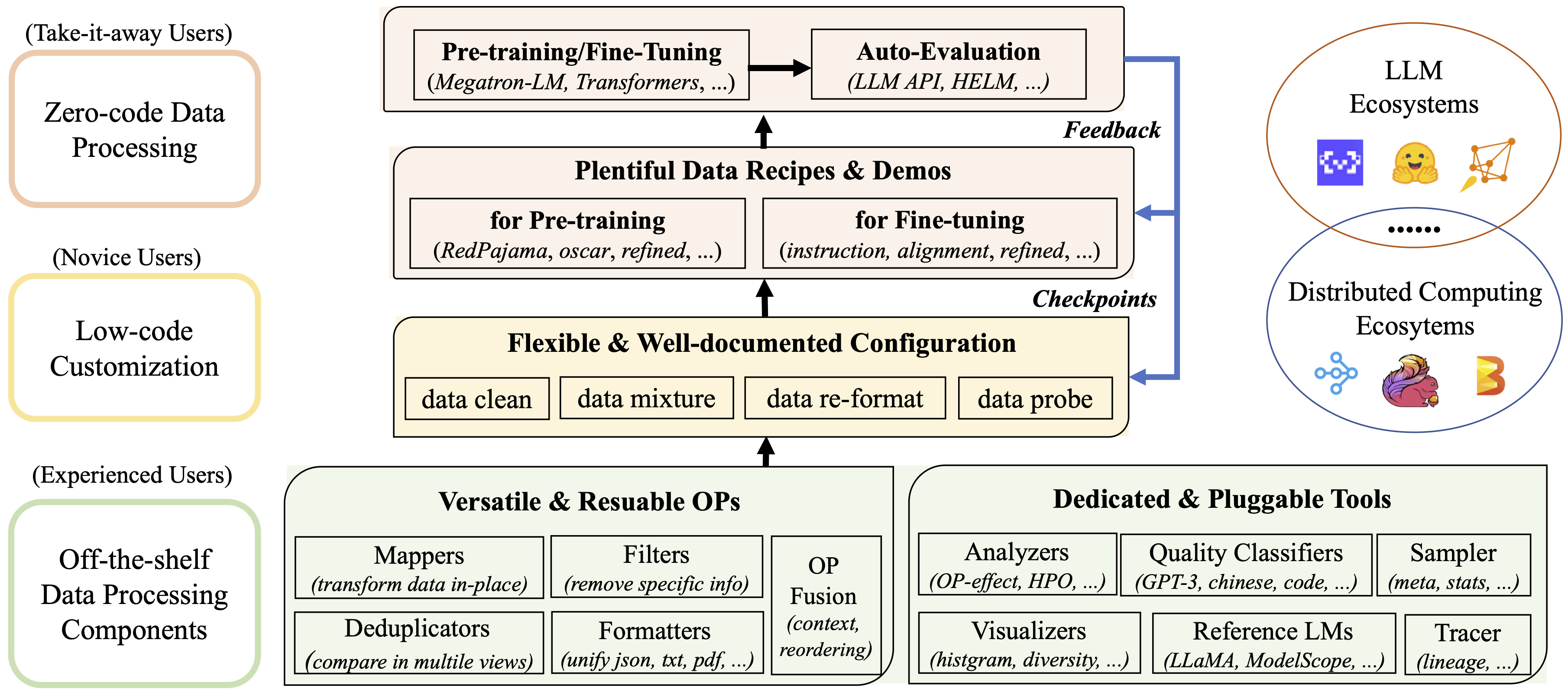

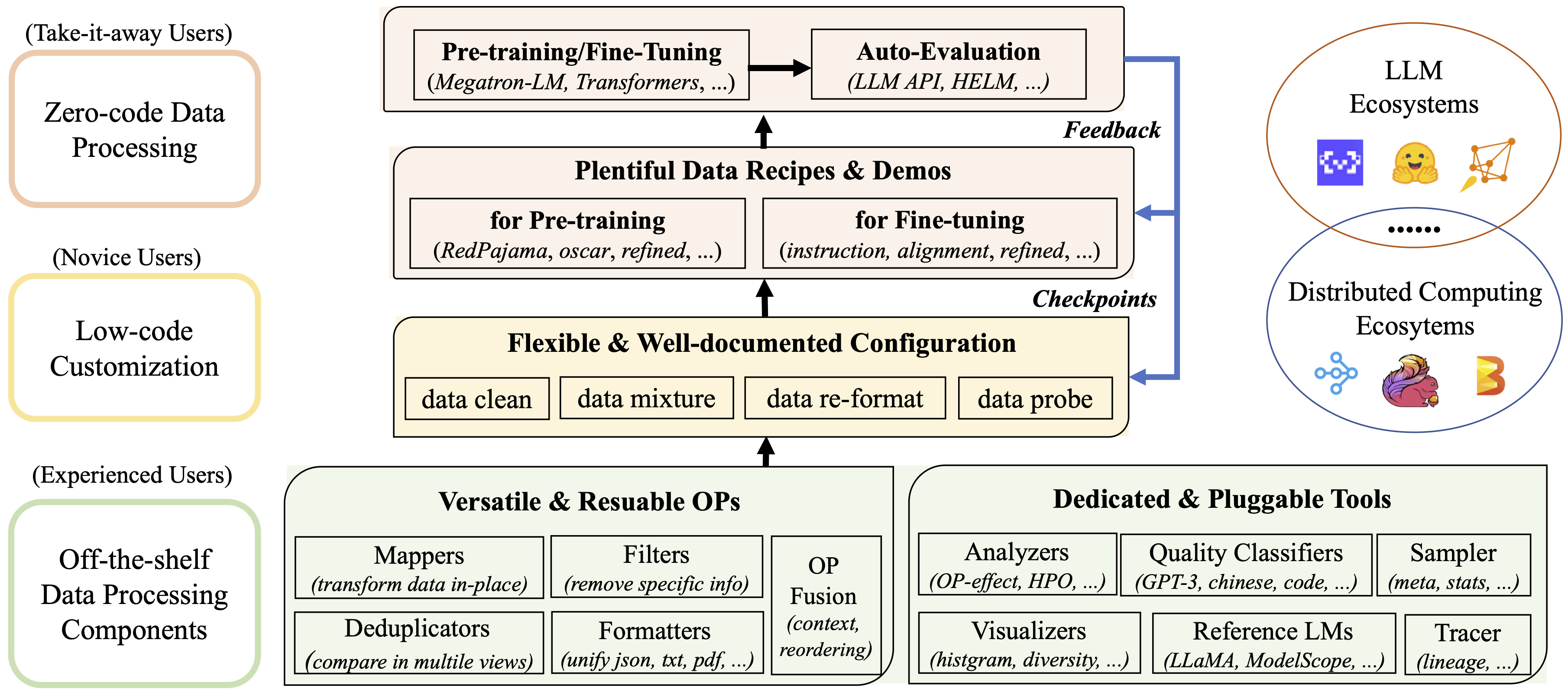

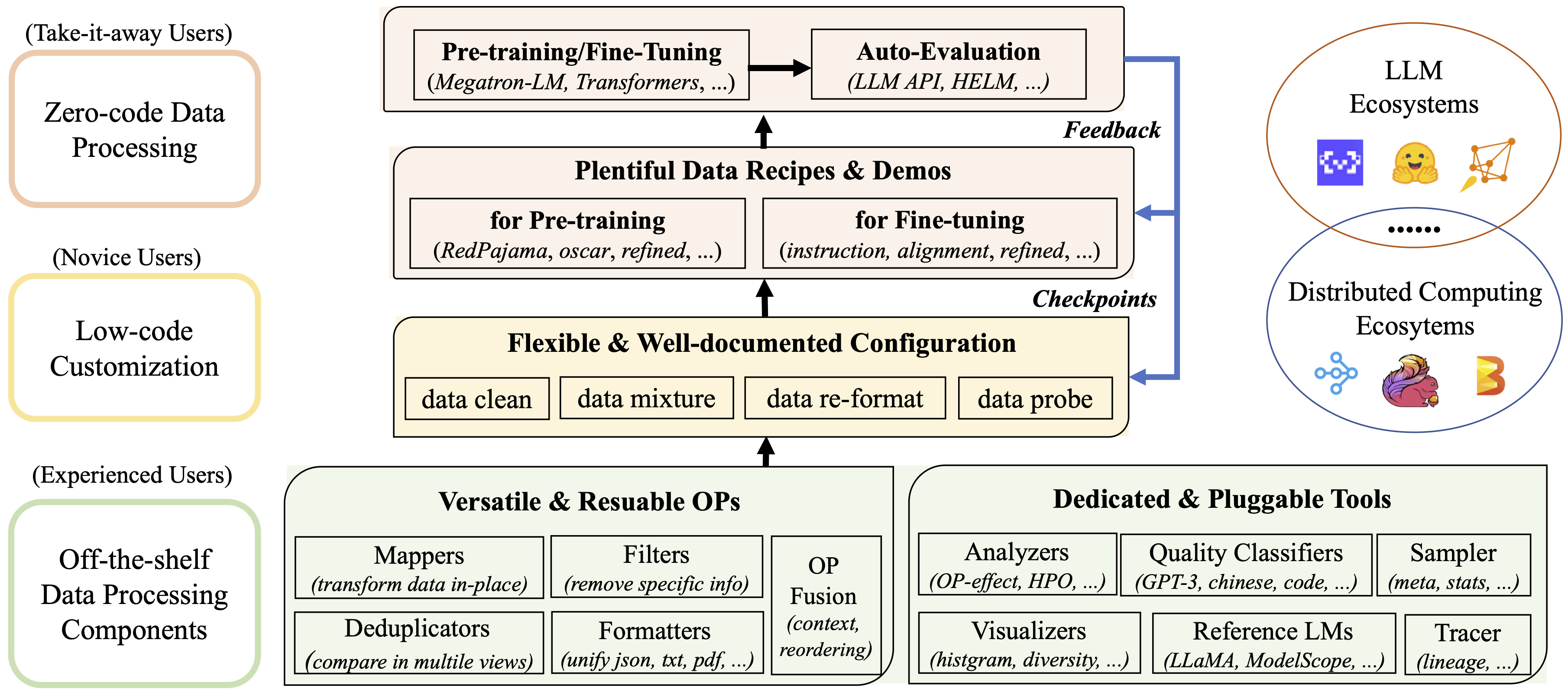

## Features

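-* **Systematic & Reusable**: Offers 20+ systematic, reusable [config recipes](configs/README_ZH.md), 50+ core [operators](docs/Operators_ZH.md), and a dedicated [tool pool](#documentation), aiming to decouple data processing from specific LLM datasets and processing pipelines.

+* **Systematic & Reusable**: Offers 80+ systematic, reusable core [operators](docs/Operators_ZH.md), 20+ [config recipes](configs/README_ZH.md), and 20+ dedicated [tools](#documentation), aiming to decouple data processing from specific LLM datasets and processing pipelines.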

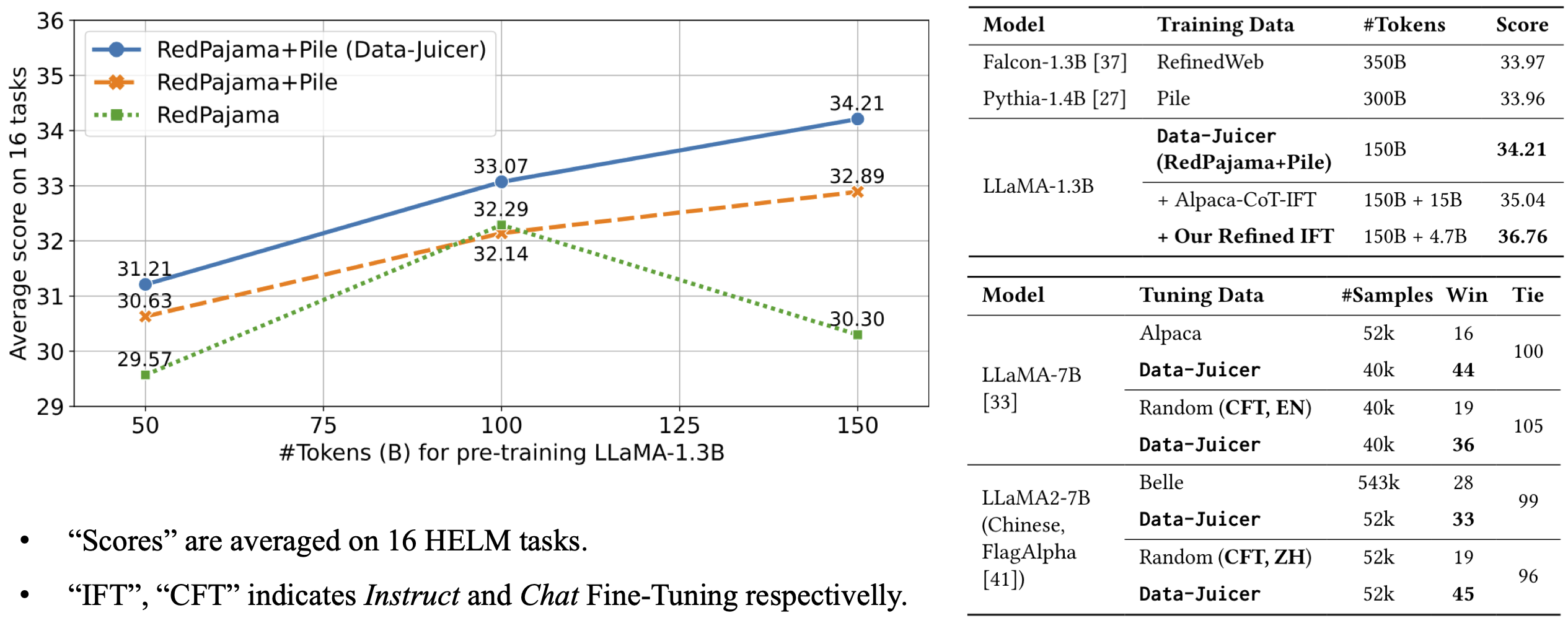

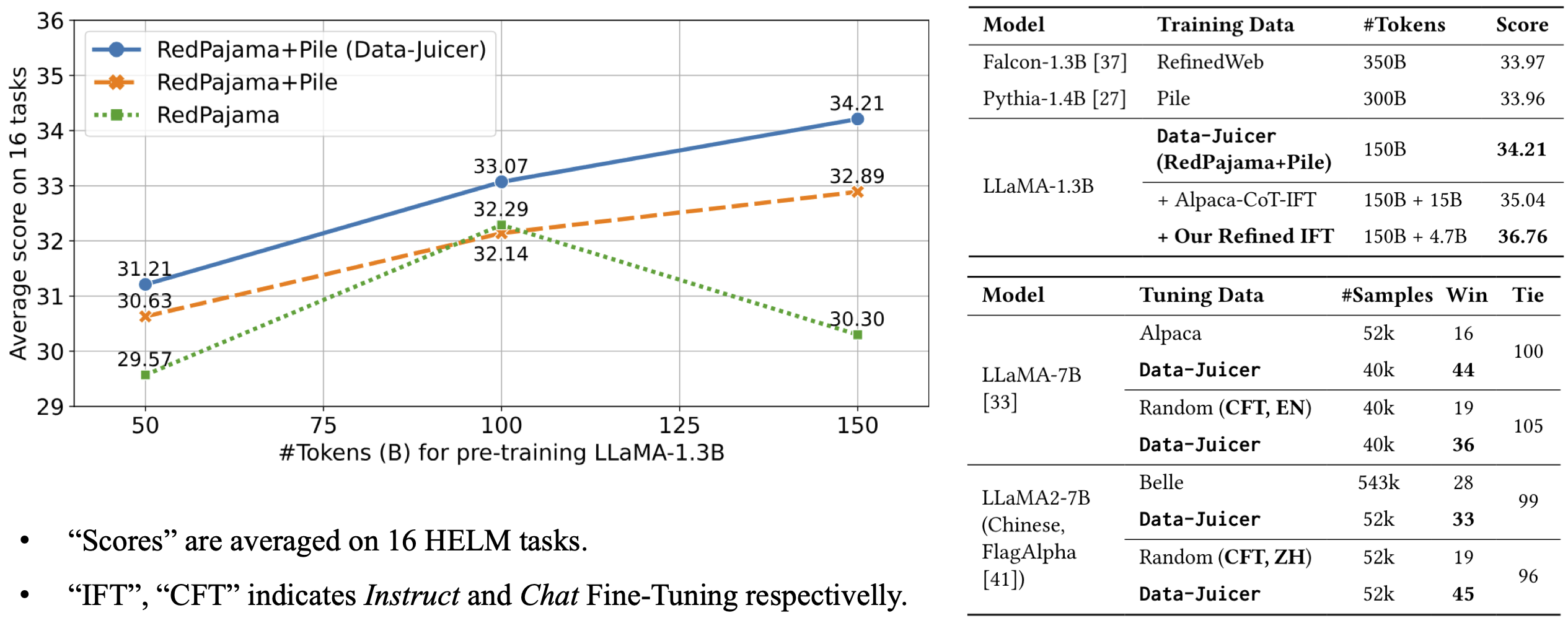

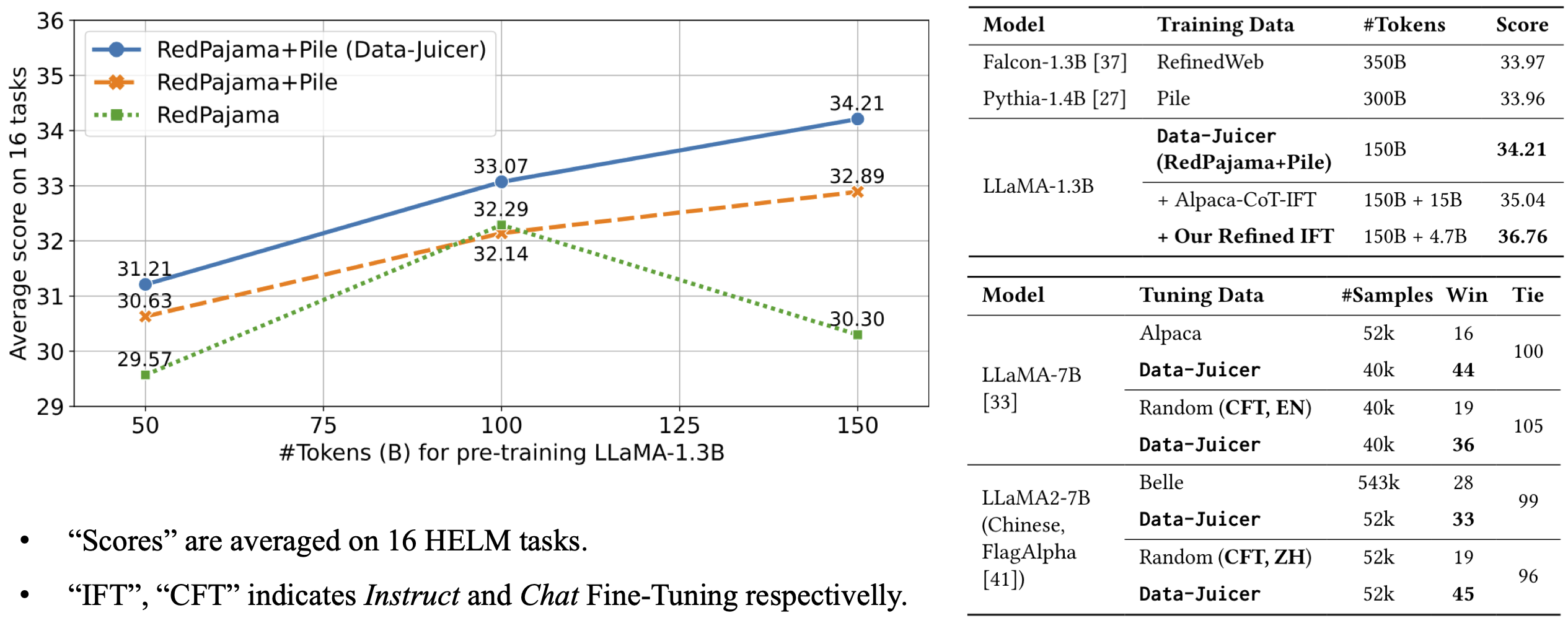

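* **Data Feedback Loop**: Supports detailed data analysis with automatic report generation, giving you in-depth insight into your dataset. Combined with multi-dimensional automatic evaluation, it enables timely feedback loops at multiple stages of LLM development.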

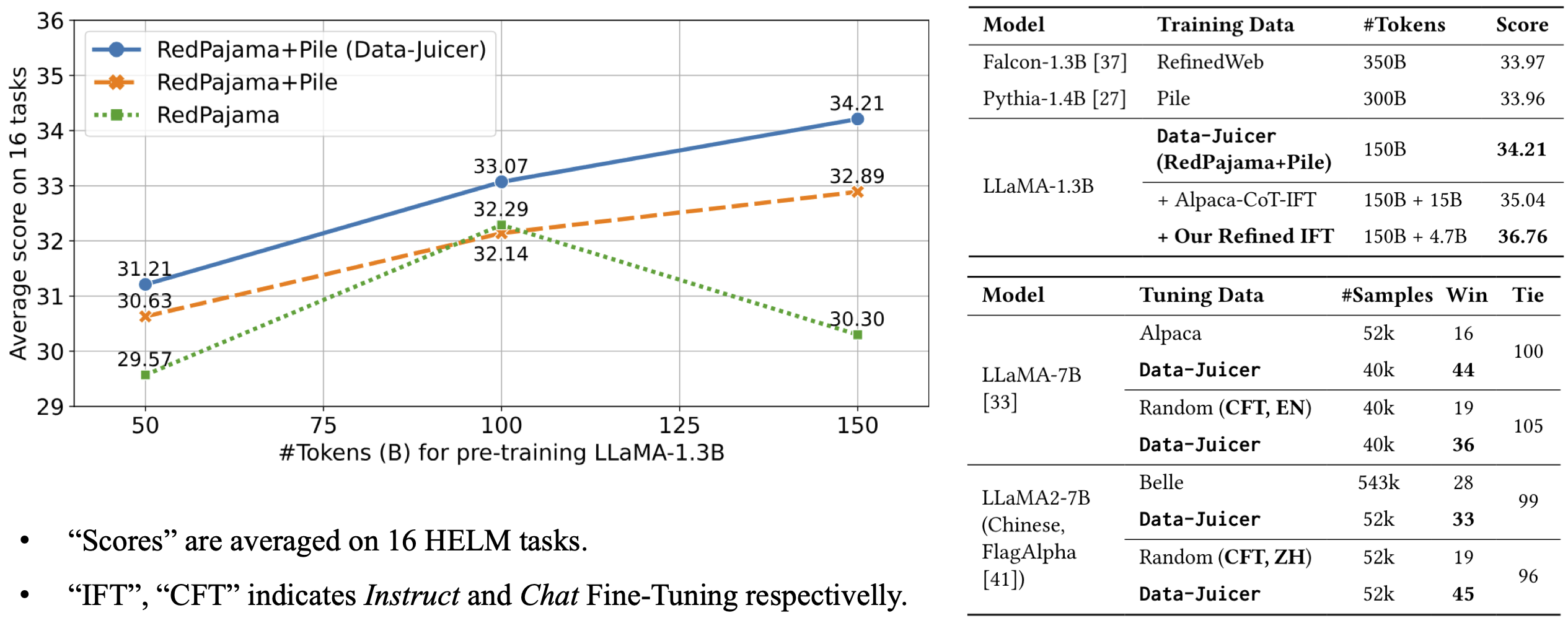

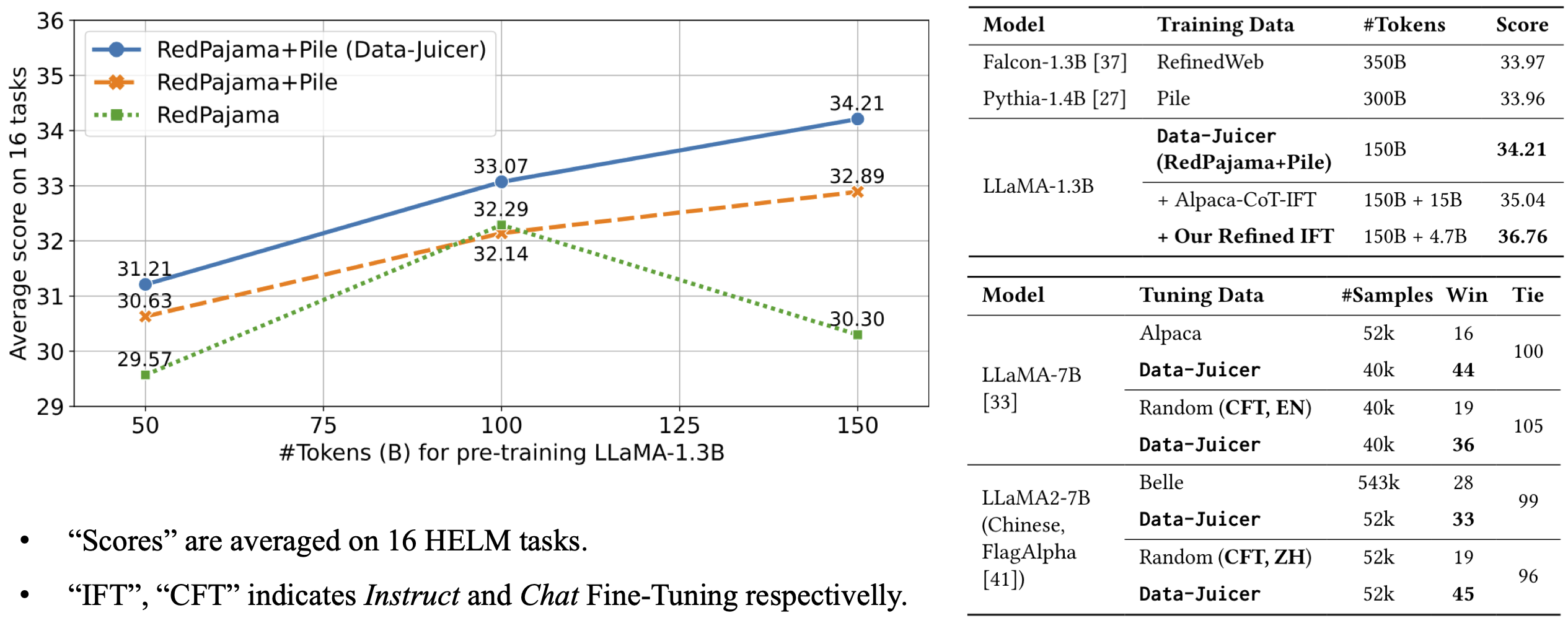

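-* **Comprehensive Data Processing Recipes**: Provides dozens of [pre-built data processing recipes](configs/data_juicer_recipes/README_ZH.md) for pre-training, fine-tuning, English, Chinese, and other scenarios.

+* **Comprehensive Data Processing Recipes**: Provides dozens of [pre-built data processing recipes](configs/data_juicer_recipes/README_ZH.md) for pre-training, fine-tuning, English, Chinese, and other scenarios, validated on models such as LLaMA and LLaVA.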

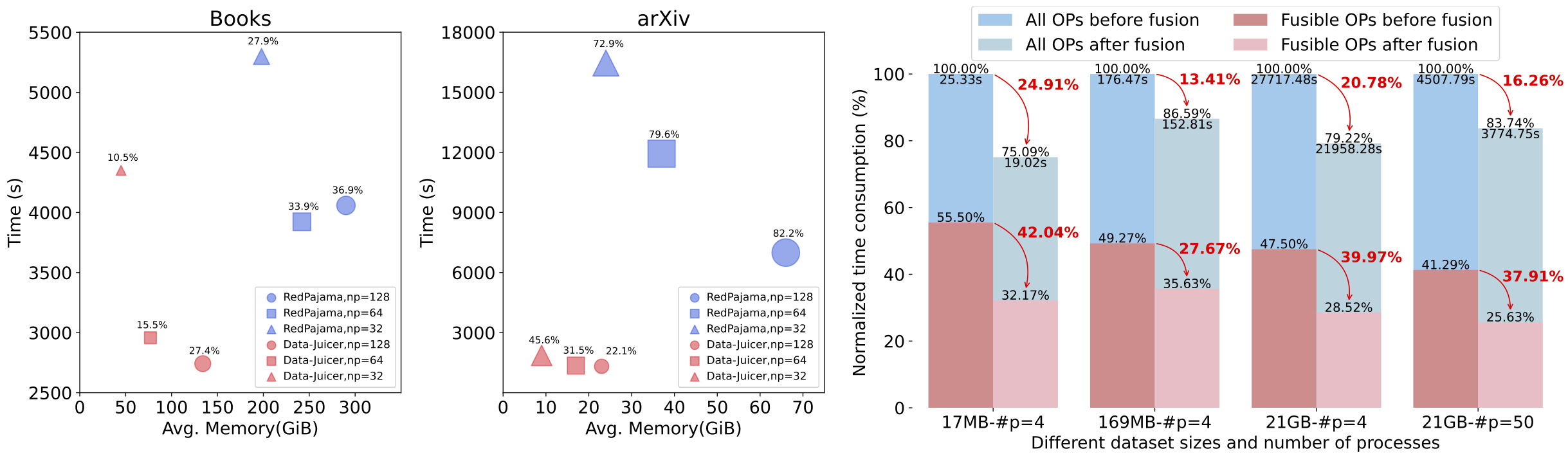

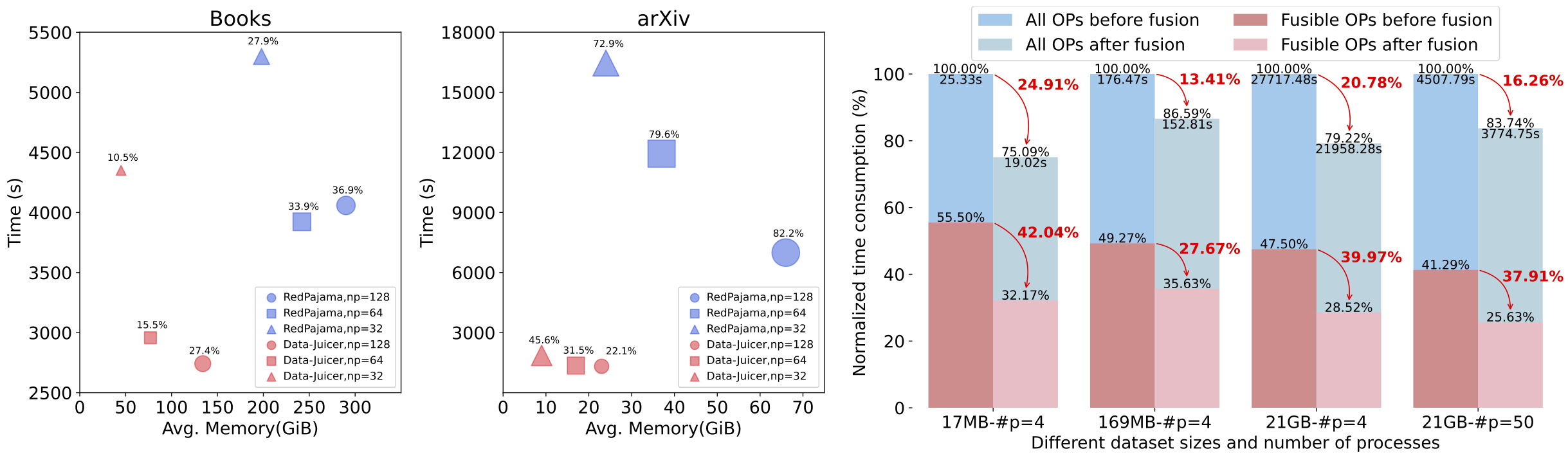

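* **Enhanced Efficiency**: Provides an efficient data processing pipeline that reduces memory footprint and CPU overhead, improving productivity.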

@@ -96,9 +93,47 @@ Data-Juicer 是一个一站式数据处理系统,旨在为大语言模型 (LLM

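* **Flexible & Extensible**: Supports most data formats (e.g., jsonl, parquet, csv) and allows operators to be combined flexibly. [Custom operators](docs/DeveloperGuide_ZH.md#构建自己的算子) are supported for customized data processing.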

+## Documentation Index | 文档索引

+

+* [Overview](README.md) | [概览](README_ZH.md)

+* [Operator Zoo](docs/Operators.md) | [算子库](docs/Operators_ZH.md)

+* [Configs](configs/README.md) | [配置系统](configs/README_ZH.md)

+* [Developer Guide](docs/DeveloperGuide.md) | [开发者指南](docs/DeveloperGuide_ZH.md)

+* ["Bad" Data Exhibition](docs/BadDataExhibition.md) | [“坏”数据展览](docs/BadDataExhibition_ZH.md)

+* Dedicated Toolkits | 专用工具箱

+ * [Quality Classifier](tools/quality_classifier/README.md) | [质量分类器](tools/quality_classifier/README_ZH.md)

+ * [Auto Evaluation](tools/evaluator/README.md) | [自动评测](tools/evaluator/README_ZH.md)

+ * [Preprocess](tools/preprocess/README.md) | [前处理](tools/preprocess/README_ZH.md)

+ * [Postprocess](tools/postprocess/README.md) | [后处理](tools/postprocess/README_ZH.md)

+* [Third-parties (LLM Ecosystems)](thirdparty/README.md) | [第三方库(大语言模型生态)](thirdparty/README_ZH.md)

+* [API references](https://alibaba.github.io/data-juicer/)

+* [Awesome LLM-Data](docs/awesome_llm_data.md)

+* [DJ-SORA](docs/DJ_SORA_ZH.md)

+

+

+## Demos

+

+* Introduction to Data-Juicer [[ModelScope](https://modelscope.cn/studios/Data-Juicer/overview_scan/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/overview_scan)]

+* Data visualization:

+ * Basic statistics [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_visulization_statistics/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_visualization_statistics)]

+ * Lexical diversity [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_visulization_diversity/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_visualization_diversity)]

+ * Operator insight (single OP) [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_visualization_op_insight/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_visualization_op_insight)]

+ * Operator effect (multiple OPs) [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_visulization_op_effect/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_visualization_op_effect)]

+* Data processing:

+ * Scientific literature (e.g., [arXiv](https://info.arxiv.org/help/bulk_data_s3.html)) [[ModelScope](https://modelscope.cn/studios/Data-Juicer/process_sci_data/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/process_sci_data)]

+ * Programming code (e.g., [TheStack](https://huggingface.co/datasets/bigcode/the-stack)) [[ModelScope](https://modelscope.cn/studios/Data-Juicer/process_code_data/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/process_code_data)]

+ * Chinese instruction data (e.g., [Alpaca-CoT](https://huggingface.co/datasets/QingyiSi/Alpaca-CoT)) [[ModelScope](https://modelscope.cn/studios/Data-Juicer/process_sft_zh_data/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/process_cft_zh_data)]

+* Tool pool:

+ * Split datasets by language [[ModelScope](https://modelscope.cn/studios/Data-Juicer/tool_dataset_splitting_by_language/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/tool_dataset_splitting_by_language)]

+ * CommonCrawl quality classifier [[ModelScope](https://modelscope.cn/studios/Data-Juicer/tool_quality_classifier/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/tool_quality_classifier)]

+ * Auto-evaluation based on [HELM](https://github.com/stanford-crfm/helm) [[ModelScope](https://modelscope.cn/studios/Data-Juicer/auto_evaluation_helm/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/auto_evaluation_helm)]

+ * Data sampling and mixture [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_mixture/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_mixture)]

+* Data processing loop [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_process_loop/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_process_loop)]

+

+

## Prerequisites

-* Python>=3.7,<=3.10 is recommended

+* Python>=3.8,<=3.10 is recommended

* gcc >= 5 (at least C++14 support)

## Installation

@@ -189,6 +224,25 @@ export DATA_JUICER_MODELS_CACHE="/path/to/another/directory/models"

export DATA_JUICER_ASSETS_CACHE="/path/to/another/directory/assets"

```

+### Distributed Data Processing

+

+Multi-machine distributed data processing is now implemented on top of Ray.

+The corresponding demos can be run with the following commands:

+

+```shell

+

+# Run text data processing

+python tools/process_data.py --config ./demos/process_on_ray/configs/demo.yaml

+

+# Run video data processing

+python tools/process_data.py --config ./demos/process_video_on_ray/configs/demo.yaml

+

+```

+

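+ - To run multimodal data processing with Ray on multiple machines, make sure every distributed node can access the corresponding data paths, e.g., by mounting them on a shared file system such as NAS.

+

+ - Alternatively, you can skip Ray: split the dataset and run the pieces on a cluster with Slurm/DLC.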

+
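Here is a minimal sketch of the dataset-splitting step from the last bullet, assuming a JSONL input. `split_jsonl` is a hypothetical helper written for illustration and is not part of Data-Juicer. It only produces the shards; how each shard is then submitted to Slurm or DLC depends on your cluster setup.

```python
import json
from contextlib import ExitStack
from pathlib import Path


def split_jsonl(input_path, output_dir, num_shards):
    """Split a JSONL file into num_shards files, assigning samples round-robin.

    Hypothetical helper for illustration; not a Data-Juicer API.
    Returns the list of shard paths that were written.
    """
    if num_shards < 1:
        raise ValueError("num_shards must be >= 1")
    input_path = Path(input_path)
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    shard_paths = [
        output_dir / f"{input_path.stem}-shard{i:03d}.jsonl" for i in range(num_shards)
    ]
    # ExitStack closes every shard file even if an error occurs mid-way.
    with ExitStack() as stack:
        handles = [stack.enter_context(p.open("w", encoding="utf-8")) for p in shard_paths]
        with input_path.open("r", encoding="utf-8") as src:
            index = 0
            for line in src:
                if not line.strip():
                    continue  # skip blank lines
                json.loads(line)  # fail early on a malformed record
                handles[index % num_shards].write(line.rstrip("\n") + "\n")
                index += 1
    return shard_paths
```

Each shard can then be processed by its own `python tools/process_data.py --config ...` run, with `dataset_path` set to that shard. Round-robin assignment keeps shard sizes within one sample of each other. If samples reference videos or images by relative path, write the shards next to the original file or switch to absolute paths, because relative media paths are resolved from the dataset file's location.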

### Data Analysis

- Run `analyze_data.py` or the `dj-analyze` command-line tool with the config file path as an argument to analyze the dataset.

@@ -273,48 +327,14 @@ docker run -dit \ # 在后台启动容器


docker exec -it
[ModelScope](https://modelscope.cn/datasets/Data-Juicer/alpaca-cot-en-refined-by-data-juicer/summary)<br>[HuggingFace](https://huggingface.co/datasets/datajuicer/alpaca-cot-en-refined-by-data-juicer) | [39 Subsets of Alpaca-CoT](alpaca_cot/README.md#refined-alpaca-cot-dataset-meta-info) |
| Alpaca-Cot ZH | 21,197,246 | 9,873,214 | 46.58% | [alpaca-cot-zh-refine.yaml](alpaca_cot/alpaca-cot-zh-refine.yaml) | [Aliyun](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/LLM_data/our_refined_datasets/CFT/alpaca-cot-zh-refine_result.jsonl)<br>[ModelScope](https://modelscope.cn/datasets/Data-Juicer/alpaca-cot-zh-refined-by-data-juicer/summary)<br>[HuggingFace](https://huggingface.co/datasets/datajuicer/alpaca-cot-zh-refined-by-data-juicer) | [28 Subsets of Alpaca-CoT](alpaca_cot/README.md#refined-alpaca-cot-dataset-meta-info) |
+
+## Before and after refining for Multimodal Dataset
+
+| subset | #samples before | #samples after | keep ratio | config link | data link | source |
+|---|:---:|:---:|:---:|---|---|---|
+| LLaVA pretrain (LCS-558k) | 558,128 | 500,380 | 89.65% | [llava-pretrain-refine.yaml](llava-pretrain-refine.yaml) | [Aliyun](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/MM_data/our_refined_data/LLaVA-1.5/public/llava-pretrain-refine-result.json)<br>[ModelScope](https://modelscope.cn/datasets/Data-Juicer/llava-pretrain-refined-by-data-juicer/summary)<br>[HuggingFace](https://huggingface.co/datasets/datajuicer/llava-pretrain-refined-by-data-juicer) | [LLaVA-1.5](https://github.com/haotian-liu/LLaVA) |
+
+### Evaluation Results
+- LLaVA pretrain (LCS-558k): models **pretrained with the refined dataset** and fine-tuned with the original instruction dataset outperform the baseline (LLaVA-1.5-13B) on 10 out of 12 benchmarks.
+
+| model | VQAv2 | GQA | VizWiz | SQA | TextVQA | POPE | MME | MM-Bench | MM-Bench-CN | SEED | LLaVA-Bench-Wild | MM-Vet |
+|---|---|---|---|---|---|---|---|---|---|---|---|---|
+| LLaVA-1.5-13B<br>(baseline) | **80.0** | 63.3 | 53.6 | 71.6 | **61.3** | 85.9 | 1531.3 | 67.7 | 63.6 | 61.6 | 72.5 | 36.1 |
+| LLaVA-1.5-13B<br>(refined pretrain dataset) | 79.94 | **63.5** | **54.09** | **74.20** | 60.82 | **86.67** | **1565.53** | **68.2** | **63.9** | **61.8** | **75.9** | **37.4** |
diff --git a/configs/data_juicer_recipes/README_ZH.md b/configs/data_juicer_recipes/README_ZH.md
index af8d1d697..d7dd848d7 100644
--- a/configs/data_juicer_recipes/README_ZH.md
+++ b/configs/data_juicer_recipes/README_ZH.md
@@ -35,3 +35,17 @@
|---|:---:|:---:|:---:|---|---|---|
| Alpaca-Cot EN | 136,219,879 | 72,855,345 | 54.48% | [alpaca-cot-en-refine.yaml](alpaca_cot/alpaca-cot-en-refine.yaml) | [Aliyun](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/LLM_data/our_refined_datasets/CFT/alpaca-cot-en-refine_result.jsonl)<br>[ModelScope](https://modelscope.cn/datasets/Data-Juicer/alpaca-cot-en-refined-by-data-juicer/summary)<br>[HuggingFace](https://huggingface.co/datasets/datajuicer/alpaca-cot-en-refined-by-data-juicer) | [39 subsets from Alpaca-CoT](alpaca_cot/README_ZH.md#完善的-alpaca-cot-数据集元信息) |
| Alpaca-Cot ZH | 21,197,246 | 9,873,214 | 46.58% | [alpaca-cot-zh-refine.yaml](alpaca_cot/alpaca-cot-zh-refine.yaml) | [Aliyun](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/LLM_data/our_refined_datasets/CFT/alpaca-cot-zh-refine_result.jsonl)<br>[ModelScope](https://modelscope.cn/datasets/Data-Juicer/alpaca-cot-zh-refined-by-data-juicer/summary)<br>[HuggingFace](https://huggingface.co/datasets/datajuicer/alpaca-cot-zh-refined-by-data-juicer) | [28 subsets from Alpaca-CoT](alpaca_cot/README_ZH.md#完善的-alpaca-cot-数据集元信息) |
+
+## Multimodal datasets before and after refining
+
+| subset | #samples before refining | #samples after refining | keep ratio | config link | data link | source |
+|---|:---:|:---:|:---:|---|---|---|
+| LLaVA pretrain (LCS-558k) | 558,128 | 500,380 | 89.65% | [llava-pretrain-refine.yaml](llava-pretrain-refine.yaml) | [Aliyun](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/MM_data/our_refined_data/LLaVA-1.5/public/llava-pretrain-refine-result.json)<br>[ModelScope](https://modelscope.cn/datasets/Data-Juicer/llava-pretrain-refined-by-data-juicer/summary)<br>[HuggingFace](https://huggingface.co/datasets/datajuicer/llava-pretrain-refined-by-data-juicer) | [LLaVA-1.5](https://github.com/haotian-liu/LLaVA) |
+
+### Evaluation Results
+- LLaVA pretrain (LCS-558k): the model pretrained on the **refined pretraining dataset** and fine-tuned on the original instruction dataset outperforms the baseline LLaVA-1.5-13B on 10 of the 12 benchmarks.
+
+| model | VQAv2 | GQA | VizWiz | SQA | TextVQA | POPE | MME | MM-Bench | MM-Bench-CN | SEED | LLaVA-Bench-Wild | MM-Vet |
+|---|---|---|---|---|---|---|---|---|---|---|---|---|
+| LLaVA-1.5-13B<br>(baseline) | **80.0** | 63.3 | 53.6 | 71.6 | **61.3** | 85.9 | 1531.3 | 67.7 | 63.6 | 61.6 | 72.5 | 36.1 |
+| LLaVA-1.5-13B<br>(refined pretraining dataset) | 79.94 | **63.5** | **54.09** | **74.20** | 60.82 | **86.67** | **1565.53** | **68.2** | **63.9** | **61.8** | **75.9** | **37.4** |
diff --git a/configs/data_juicer_recipes/llava-pretrain-refine.yaml b/configs/data_juicer_recipes/llava-pretrain-refine.yaml
new file mode 100644
index 000000000..03a1bf23c
--- /dev/null
+++ b/configs/data_juicer_recipes/llava-pretrain-refine.yaml
@@ -0,0 +1,60 @@
+project_name: 'llava-1.5-pretrain-dataset-refine-recipe'
+dataset_path: 'blip_laion_cc_sbu_558k_dj_fmt_only_caption.jsonl' # LLaVA pretrain dataset converted to Data-Juicer format with only_keep_caption set to True. See tools/multimodal/source_format_to_data_juicer_format/llava_to_dj.py
+export_path: 'blip_laion_cc_sbu_558k_dj_fmt_only_caption_refined.jsonl'
+
+np: 42 # number of subprocesses used to process your dataset
+text_keys: 'text' # name of the field that holds the sample texts to be processed, e.g., `text`, `instruction`, `output`, ...
+
+# for multimodal data processing
+image_key: 'images' # name of the field that stores the list of sample image paths
+image_special_token: '

" + "We welcome you to join us in promoting LLM data development and research!

", + 'demo':"You can experience the effect of the operators of Data-Juicer" + } + # image_src = covert_image_to_base64(project_img_path) + #

+ return f"""

+ :

export_path: './outputs/demo/demo-processed'

diff --git a/demos/process_on_ray/data/demo-dataset.jsonl b/demos/process_on_ray/data/demo-dataset.jsonl

new file mode 100644

index 000000000..a212d42f4

--- /dev/null

+++ b/demos/process_on_ray/data/demo-dataset.jsonl

@@ -0,0 +1,11 @@

+{"text":"What’s one thing you wish everyone knew about the brain?\nibble\nWhat’s one thing you wish everyone knew about the brain?\nThe place to have real conversations and understand each other better. Join a community or build and grow your own with groups, threads, and conversations.\nSee this content immediately after install\nGet The App\n"}

+{"text":"JavaScript must be enabled to use the system\n"}

+{"text":"中国企业又建成一座海外三峡工程!-科技-高清完整正版视频在线观看-优酷\n"}

+{"text":"Skip to content\nPOLIDEPORTES\nPeriodismo especialzado en deportes\nPrimary Menu\nPOLIDEPORTES\nPolideportes\n¿Quiénes somos?\nNoticia\nEntrevistas\nReportaje\nEquipos de Época\nOpinión\nEspeciales\nCopa Poli\nBuscar:\nSteven Villegas Ceballos patinador\nShare this...\nFacebook\nTwitter\nLinkedin\nWhatsapp\nEmail\nSeguir leyendo\nAnterior El imparable campeón Steven Villegas\nTe pueden interesar\nDeportes\nNoticia\nPiezas filatélicas llegan al Museo Olímpico Colombiano\nmarzo 17, 2023"}

+{"text":"Redirect Notice\nRedirect Notice\nThe previous page is sending you to http:\/\/sieuthikhoavantay.vn\/chi-tiet\/khoa-van-tay-dessmann-s710fp-duc.\nIf you do not want to visit that page, you can return to the previous page.\n"}

+{"text": "Do you need a cup of coffee?"}

+{"text": ".cv域名是因特网域名管理机构ICANN为佛得角共和国(The Republic of Cape Verde República de Cabo Verde)国家及地区分配的顶级域(ccTLD),作为其国家及地区因特网顶级域名。- 奇典网络\n专业的互联网服务提供商 登录 注册 控制中心 新闻中心 客户支持 交费方式 联系我们\n首页\n手机AI建站\n建站\n推广\n域名\n主机\n安全\n企业服务\n加盟\nICANN与CNNIC双认证顶级注册商 在中国,奇典网络是域名服务提供商\n.cv\n.cv域名是ICANN为佛得角共和国国家及地区分配的顶级域名,注册期限1年到10年不等。\n价格: 845 元\/1年\n注册要求: 无要求\n.cv\/.com.cv注册要求\n更多国别域名\n更多NewG域名\n相关资质\n1.什么是 .cv\/.com.cv域名?有什么优势?\n.cv域名是因特网域名管理机构ICANN为佛得角共和国(The Republic of Cape Verde República de Cabo Verde)国家及地区分配的顶级域(ccTLD),作为其国家及地区因特网顶级域名。\n2.cv\/.com.cv域名长度为多少?有什么注册规则?"}

+{"text": "Sur la plateforme MT4, plusieurs manières d'accéder à ces fonctionnalités sont conçues simultanément."}

+{"text": "欢迎来到阿里巴巴!"}

+{"text": "This paper proposed a novel method on LLM pretraining."}

+{"text":"世界十大网投平台_2022年卡塔尔世界杯官网\n177-8228-4819\n网站首页\n关于我们\n产品展示\n广告牌制作 广告灯箱制作 标识牌制作 楼宇亮化工程 门头店招制作 不锈钢金属字制作 LED发光字制作 形象墙Logo墙背景墙制作 LED显示屏制作 装饰装潢工程 铜字铜牌制作 户外广告 亚克力制品 各类广告设计 建筑工地广告制作 楼顶大字制作|楼顶发光字制作 霓虹灯制作 三维扣板|3D扣板|广告扣板 房地产广告制作设计 精神堡垒|立牌|指示牌制作 大型商业喷绘写真 展览展示 印刷服务\n合作伙伴\n新闻资讯\n公司新闻 行业新闻 制作知识 设计知识\n成功案例\n技术园地\n联系方式\n"}

diff --git a/demos/process_video_on_ray/configs/demo.yaml b/demos/process_video_on_ray/configs/demo.yaml

new file mode 100644

index 000000000..27236c08a

--- /dev/null

+++ b/demos/process_video_on_ray/configs/demo.yaml

@@ -0,0 +1,39 @@

+# Process config example for dataset

+

+# global parameters

+project_name: 'ray-demo'

+executor_type: 'ray'

+dataset_path: './demos/process_video_on_ray/data/demo-dataset.jsonl' # path to your dataset directory or file

+ray_address: 'auto' # change to your ray cluster address, e.g., ray://:

+export_path: './outputs/demo/demo-processed-ray-videos'

+

+# process schedule

+# a list of several process operators with their arguments

+

+# tested on a single node; multi-node support is still under development

+process:

+ # Filter ops

+ - video_duration_filter:

+ min_duration: 20

+ max_duration: 60

+ # Mapper ops

+ - video_split_by_duration_mapper: # Mapper to split video by duration.

+ split_duration: 10 # duration of each video split in seconds.

+ min_last_split_duration: 0 # the minimum allowable duration in seconds for the last video split. If the duration of the last split is less than this value, it will be discarded.

+ keep_original_sample: true

+ - video_resize_aspect_ratio_mapper:

+ min_ratio: 1

+ max_ratio: 1.1

+ strategy: increase

+ - video_split_by_key_frame_mapper: # Mapper to split video by key frame.

+ keep_original_sample: true # whether to keep the original sample. If set to False, only the split samples remain in the final dataset and the original sample is removed. Defaults to True.

+ - video_split_by_duration_mapper: # Mapper to split video by duration.

+ split_duration: 10 # duration of each video split in seconds.

+ min_last_split_duration: 0 # the minimum allowable duration in seconds for the last video split. If the duration of the last split is less than this value, it will be discarded.

+ keep_original_sample: true

+ - video_resolution_filter: # filter samples according to the resolution of videos in them

+ min_width: 1280 # minimum width (horizontal resolution) of the filter range, in pixels

+ max_width: 4096 # maximum width (horizontal resolution) of the filter range, in pixels

+ min_height: 480 # minimum height (vertical resolution) of the filter range, in pixels

+ max_height: 1080 # maximum height (vertical resolution) of the filter range, in pixels

+ any_or_all: any
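The demo config above chains a duration filter, duration and key-frame splitting, aspect-ratio resizing, and a resolution filter. The plain-Python sketch below makes the numeric parameters of three of those steps concrete. The helper names are hypothetical and this is not Data-Juicer's implementation. Inclusive range bounds, dropping a too-short final split, and the any/all rule are assumptions drawn from the parameter names and comments in the config.

```python
from typing import List, Sequence, Tuple

# Hypothetical helpers that mimic what the demo config asks the video
# operators to do. NOT Data-Juicer code; boundary handling is assumed.


def duration_in_range(duration: float, min_duration: float, max_duration: float) -> bool:
    """Like video_duration_filter: keep a video whose duration lies in [min, max]."""
    return min_duration <= duration <= max_duration


def split_by_duration(duration: float, split_duration: float,
                      min_last_split_duration: float = 0.0) -> List[Tuple[float, float]]:
    """Like video_split_by_duration_mapper: cut [0, duration] into fixed-length pieces.

    The last piece is discarded if it is shorter than min_last_split_duration.
    """
    if split_duration <= 0:
        raise ValueError("split_duration must be positive")
    segments = []
    start = 0.0
    while start < duration:
        end = min(start + split_duration, duration)
        segments.append((start, end))
        start = end
    if segments and segments[-1][1] - segments[-1][0] < min_last_split_duration:
        segments.pop()
    return segments


def keep_by_resolution(resolutions: Sequence[Tuple[int, int]],
                       min_width: int, max_width: int,
                       min_height: int, max_height: int,
                       any_or_all: str = "any") -> bool:
    """Like video_resolution_filter: test each (width, height) of a sample's videos."""
    checks = [min_width <= w <= max_width and min_height <= h <= max_height
              for w, h in resolutions]
    if any_or_all == "any":
        return any(checks)
    if any_or_all == "all":
        return all(checks)
    raise ValueError("any_or_all must be 'any' or 'all'")


if __name__ == "__main__":
    # Durations taken from the text labels of the demo samples (10s, 23s, 46s).
    print([d for d in (10, 23, 46) if duration_in_range(d, 20, 60)])
    print(split_by_duration(46, 10, 0))
    print(keep_by_resolution([(1920, 1080), (640, 360)], 1280, 4096, 480, 1080, "any"))
```

Under these assumptions, only the 23s and 46s demo videos pass `min_duration: 20` / `max_duration: 60`. A 46s video split with `split_duration: 10` yields four 10s pieces plus a 6s remainder, and the remainder is kept because `min_last_split_duration` is 0.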

diff --git a/demos/process_video_on_ray/data/Note.md b/demos/process_video_on_ray/data/Note.md

new file mode 100644

index 000000000..bf3dfece3

--- /dev/null

+++ b/demos/process_video_on_ray/data/Note.md

@@ -0,0 +1,7 @@

+# Note for dataset path

+

+Video/image paths in the dataset can be either absolute or relative.

+Please use paths that are accessible from all nodes (e.g., paths on a NAS shared file system).

+Relative paths are resolved against the location given by the `dataset_path` parameter in the config:

+ - if `dataset_path` is a directory, paths are relative to that directory;

+ - if `dataset_path` is a file, paths are relative to the directory containing that file.
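The path-resolution rule in this note can be written as a small function. This is an illustrative sketch only. `resolve_media_path` is a hypothetical name, not Data-Juicer's loader, and the sketch assumes that a `dataset_path` which does not exist as a directory is treated as a file.

```python
import os


def resolve_media_path(dataset_path: str, media_path: str) -> str:
    """Resolve a sample's video/image path following the note's rules (sketch).

    Hypothetical helper; not Data-Juicer's actual loader.
    """
    if os.path.isabs(media_path):
        return media_path  # absolute paths are used unchanged
    if os.path.isdir(dataset_path):
        base_dir = dataset_path  # dataset_path is a directory
    else:
        base_dir = os.path.dirname(dataset_path)  # dataset_path is a file
    return os.path.normpath(os.path.join(base_dir, media_path))


if __name__ == "__main__":
    print(resolve_media_path(
        "./demos/process_video_on_ray/data/demo-dataset.jsonl",
        "./videos/video1.mp4"))
```

For the demo dataset, `./videos/video1.mp4` therefore resolves under `demos/process_video_on_ray/data/videos/`, which must be reachable from every Ray node.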

diff --git a/demos/process_video_on_ray/data/demo-dataset.jsonl b/demos/process_video_on_ray/data/demo-dataset.jsonl

new file mode 100644

index 000000000..1c9c006b0

--- /dev/null

+++ b/demos/process_video_on_ray/data/demo-dataset.jsonl

@@ -0,0 +1,3 @@

+{"videos": ["./videos/video1.mp4"], "text": "<__dj__video> 10s videos <|__dj__eoc|>"}

+{"videos": ["./videos/video2.mp4"], "text": "<__dj__video> 23s videos <|__dj__eoc|>"}

+{"videos": ["./videos/video3.mp4"], "text": "<__dj__video> 46s videos <|__dj__eoc|>"}

\ No newline at end of file
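Each demo sample pairs a `videos` list with a `text` field that contains a `<__dj__video>` token. The sketch below checks that the two agree before a dataset is sent to a cluster. It assumes, for illustration, a one-to-one correspondence between entries in `videos` and `<__dj__video>` tokens. `count_mismatches` is a hypothetical helper, not a Data-Juicer API.

```python
import json

VIDEO_TOKEN = "<__dj__video>"  # special token used in the demo samples


def count_mismatches(jsonl_lines):
    """Return (line_number, n_tokens, n_videos) for samples whose counts differ.

    Assumes (for illustration) that each entry in "videos" should be matched
    by exactly one VIDEO_TOKEN in "text".
    """
    mismatches = []
    for lineno, line in enumerate(jsonl_lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        sample = json.loads(line)
        n_videos = len(sample.get("videos", []))
        n_tokens = sample.get("text", "").count(VIDEO_TOKEN)
        if n_tokens != n_videos:
            mismatches.append((lineno, n_tokens, n_videos))
    return mismatches


if __name__ == "__main__":
    demo = [
        '{"videos": ["./videos/video1.mp4"], "text": "<__dj__video> 10s videos <|__dj__eoc|>"}',
        '{"videos": ["./videos/a.mp4", "./videos/b.mp4"], "text": "<__dj__video> two clips <|__dj__eoc|>"}',
    ]
    print(count_mismatches(demo))
```

A file object opened on a JSONL dataset can be passed directly, because iterating over it yields one line per sample.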

diff --git a/demos/process_video_on_ray/data/videos/video1.mp4 b/demos/process_video_on_ray/data/videos/video1.mp4

new file mode 100644

index 000000000..5b0cad49f

Binary files /dev/null and b/demos/process_video_on_ray/data/videos/video1.mp4 differ

diff --git a/demos/process_video_on_ray/data/videos/video2.mp4 b/demos/process_video_on_ray/data/videos/video2.mp4

new file mode 100644

index 000000000..28acb927f

Binary files /dev/null and b/demos/process_video_on_ray/data/videos/video2.mp4 differ

diff --git a/demos/process_video_on_ray/data/videos/video3.mp4 b/demos/process_video_on_ray/data/videos/video3.mp4

new file mode 100644

index 000000000..45db64a51

Binary files /dev/null and b/demos/process_video_on_ray/data/videos/video3.mp4 differ

diff --git a/docs/DJ_SORA.md b/docs/DJ_SORA.md

new file mode 100644

index 000000000..1dce43860

--- /dev/null

+++ b/docs/DJ_SORA.md

@@ -0,0 +1,106 @@

+English | [中文页面](DJ_SORA_ZH.md)

+

+---

+

+Data is the key to the unprecedented progress of large multimodal models such as SORA, and obtaining and processing such data efficiently and scientifically poses new challenges. DJ-SORA aims to build a series of large-scale, high-quality, open-source multimodal datasets to help the open-source community with data understanding and model training.

+

+Built on Data-Juicer, which includes hundreds of dedicated [operators](Operators.md) and tools for video, image, audio, text, and other multimodal data, DJ-SORA forms a series of systematic, reusable multimodal "data recipes" for analyzing, cleaning, and generating large-scale, high-quality multimodal data.

+

+This project is actively updated and maintained. We warmly invite you to join us in building a more open, higher-quality multimodal data ecosystem and unleashing the potential of large models!

+

+# Motivation

+- SORA's report only briefly mentions using DALLE-3 to generate high-quality captions, and that the model's input data vary in duration, resolution, and aspect ratio.

+- High-quality, large-scale, fine-grained data helps densify data points, so that models can better learn the conditional mapping "text -> spacetime token". This addresses a series of existing challenges in text-to-video models:

+ - Smoothness and consistency of the visual flow: some generated videos drop frames or freeze in a static state.

+ - Text comprehension and fine-grained detail: generated results often match the given prompts poorly.

+ - Physical plausibility: generated content shows distortions and violations of physical laws, especially when entities are in motion.

+ - Video length: generated videos are short, mostly around 10 seconds, with little or no significant change in scenes or backdrops.

+

+# Roadmap

+## Overview

+* [Support high-performance loading and processing of video data](#support-high-performance-loading-and-processing-of-video-data)

+* [Basic Operators (video spatio-temporal dimension)](#basic-operators-video-spatio-temporal-dimension)

+* [Advanced Operators (fine-grained modal matching and data generation)](#advanced-operators-fine-grained-modal-matching-and-data-generation)

+* [Advanced Operators (Video Content)](#advanced-operators-video-content)

+* [DJ-SORA Data Recipes and Datasets](#dj-sora-data-recipes-and-datasets)

+* [DJ-SORA Data Validation and Model Training](#dj-sora-data-validation-and-model-training)

+

+

+## Support high-performance loading and processing of video data

+- [✅] Parallelize data loading and storing:

+ - [✅] Lazy loading with PyAV and FFmpeg

+ - [✅] Multi-modal data path signature

+- [✅] Parallelize operator processing:

+ - [✅] Single-machine multi-core execution

+ - [✅] GPU utilization

+ - [✅] Ray-based multi-machine distributed execution

+- [ ] [WIP] Distributed scheduling optimization (OP-aware, automated load balancing) --> Aliyun PAI-DLC

+- [ ] [WIP] Distributed storage optimization

+

+## Basic Operators (video spatio-temporal dimension)

+- Towards Data Quality

+ - [✅] video_resolution_filter (targeted resolution)

+ - [✅] video_aspect_ratio_filter (targeted aspect ratio)

+ - [✅] video_duration_filter (targeted duration)

+ - [✅] video_motion_score_filter (video continuity dimension: computes optical flow and removes static or extremely dynamic videos)

+ - [✅] video_ocr_area_ratio_filter (remove samples with text areas that are too large)

+- Towards Data Diversity & Quantity

+ - [✅] video_resize_resolution_mapper (enhancement in resolution dimension)

+ - [✅] video_resize_aspect_ratio_mapper (enhancement in aspect ratio dimension)

+ - [✅] video_split_by_duration_mapper (enhancement in time dimension)

+ - [✅] video_split_by_key_frame_mapper (enhancement in time dimension with key information focus)

+ - [✅] video_split_by_scene_mapper (enhancement in time dimension with scene continuity focus)

+
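Most of the quality filters above follow one pattern. They compute a per-video statistic and keep the sample only if that statistic falls within a configured range. `video_split_by_duration_mapper` works differently: it cuts a video into fixed-length clips. The following is a minimal pure-Python sketch of both ideas. It assumes duration, width, and height are already known. The function names, default ranges, and the rule that drops a short trailing clip are illustrative assumptions, not the operators' actual parameters.

```python
from typing import List, Tuple


def in_range(value: float, min_val: float, max_val: float) -> bool:
    """Inclusive range check shared by the filter-style sketch."""
    return min_val <= value <= max_val


def keep_video(duration: float, width: int, height: int,
               duration_range: Tuple[float, float] = (0.0, 60.0),
               aspect_range: Tuple[float, float] = (9 / 21, 21 / 9),
               min_side: int = 256) -> bool:
    """Hypothetical combined check in the spirit of the duration,
    aspect-ratio and resolution filters."""
    return (in_range(duration, *duration_range)
            and in_range(width / height, *aspect_range)
            and min(width, height) >= min_side)


def split_by_duration(duration: float, split_duration: float,
                      min_last: float = 0.0) -> List[Tuple[float, float]]:
    """Cut [0, duration) into consecutive clips of split_duration seconds.

    Only the last clip can be shorter than split_duration; it is dropped when
    shorter than min_last seconds (an assumed rule for this sketch).
    """
    clips = []
    start = 0.0
    while start < duration:
        end = min(start + split_duration, duration)
        if end - start >= min_last:
            clips.append((start, end))
        start = end
    return clips


print(keep_video(23.0, 1280, 720))          # True
print(split_by_duration(23.0, 10.0, 5.0))   # [(0.0, 10.0), (10.0, 20.0)]
```

The sketch returns clip boundaries in seconds rather than writing files. An actual mapper would also have to cut the video and register the new paths in the sample.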

+## Advanced Operators (fine-grained modal matching and data generation)

+- Towards Data Quality

+ - [✅] video_frames_text_similarity_filter (enhancement in the spatiotemporal consistency dimension, calculating the matching score of key/specified frames and text)

+- Towards Data Diversity & Quantity

+ - [✅] video_tagging_from_frames_mapper (lightweight image-to-text models generate spatial summary information from dense frames)

+ - [ ] [WIP] video_captioning_from_frames_mapper (heavier image-to-text models generate more detailed spatial information from fewer frames)

+ - [✅] video_tagging_from_audio_mapper (introducing audio classification/category and other meta information)

+ - [✅] video_captioning_from_audio_mapper (incorporating voice/dialogue information; AudioCaption for environmental and global context)

+ - [✅] video_captioning_from_video_mapper (video-to-text model, generating spacetime information from continuous frames)

+ - [ ] [WIP] video_captioning_from_summarizer_mapper (combining the above sub-abilities, using pure text large models for denoising and summarizing different types of caption information)

+ - [ ] [WIP] video_interleaved_mapper (enhancement in ICL, temporal, and cross-modal dimensions), `interleaved_modes` include:

+ - text_image_interleaved (placing captions and frames of the same video in temporal order)

+ - text_audio_interleaved (placing ASR text and frames of the same video in temporal order)

+ - text_image_audio_interleaved (alternating stitching of the above two types)
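`text_image_interleaved` places captions and frames of the same video in temporal order. The following is a minimal sketch of that ordering step. It assumes every caption and frame already carries a timestamp in seconds and that both input lists are sorted by time. The tuple layout, the `<frame:...>` placeholder, and the tie-breaking rule are hypothetical, since this operator is still WIP.

```python
import heapq
from typing import List, Tuple

# (timestamp_in_seconds, payload); each input list is sorted by timestamp.
Item = Tuple[float, str]


def interleave(captions: List[Item], frames: List[Item]) -> List[str]:
    """Merge captions and frame placeholders into one time-ordered list.

    On equal timestamps the frame comes first (priority 0) and is followed by
    the caption describing it (priority 1).
    """
    tagged_frames = ((t, 0, f"<frame:{name}>") for t, name in frames)
    tagged_captions = ((t, 1, text) for t, text in captions)
    # heapq.merge lazily merges already-sorted iterables.
    merged = heapq.merge(tagged_frames, tagged_captions)
    return [payload for _, _, payload in merged]


captions = [(0.0, "a dog runs"), (5.0, "the dog jumps")]
frames = [(0.0, "f0.jpg"), (2.5, "f1.jpg"), (5.0, "f2.jpg")]
print(interleave(captions, frames))
# ['<frame:f0.jpg>', 'a dog runs', '<frame:f1.jpg>', '<frame:f2.jpg>', 'the dog jumps']
```

The same merge would cover `text_audio_interleaved` if the caption list is replaced by time-stamped ASR segments.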

+## Advanced Operators (Video Content)

+- [✅] video_deduplicator (compares MD5 hashes of video files to deduplicate at the sample level)

+- [✅] video_aesthetics_filter (scores aesthetics after decomposing videos into frames and filters on those scores)

+- [✅] Compatibility with existing FFmpeg video commands

+ - audio_ffmpeg_wrapped_mapper

+ - video_ffmpeg_wrapped_mapper

+- [WIP] Video content compliance and privacy protection operators (image, text, audio):

+ - [✅] Mosaic

+ - [ ] Copyright watermark

+ - [ ] Face blurring

+ - [ ] Violence and Adult Content

+- [ ] [TODO] (Beyond Interpolation) Enhancing data authenticity and density

+ - Collisions, lighting, gravity, 3D, scene and phase transitions, depth of field, etc.

+ - [ ] Filter-type operators: whether a caption describes physically realistic content, and relevance/correctness scores for that description

+ - [ ] Mapper-type operators: enhance textual descriptions of physical phenomena in video data

+ - [ ] ...
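`video_deduplicator` compares MD5 hashes of whole video files, so only byte-identical files count as duplicates. The following is a minimal sketch of that exact-match idea using `hashlib`. It assumes each sample is a dict with a `videos` list of local paths. It also assumes a sample's key is the ordered tuple of its videos' digests. The function names are hypothetical, and this is not the operator's actual code.

```python
import hashlib
import os
import tempfile
from typing import Dict, List


def file_md5(path: str, chunk_size: int = 1 << 20) -> str:
    """MD5 digest of a file, read in chunks so large videos never sit fully in memory."""
    md5 = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            md5.update(chunk)
    return md5.hexdigest()


def dedup_by_video_hash(samples: List[Dict]) -> List[Dict]:
    """Keep the first sample for each distinct tuple of video hashes.

    Assumption: a sample's key is the ordered tuple of its videos' MD5 digests.
    """
    seen = set()
    kept = []
    for sample in samples:
        key = tuple(file_md5(path) for path in sample["videos"])
        if key not in seen:
            seen.add(key)
            kept.append(sample)
    return kept


# Usage: two byte-identical "videos" and one different file.
with tempfile.TemporaryDirectory() as tmp_dir:
    samples = []
    for name, data in [("a.mp4", b"same"), ("b.mp4", b"same"), ("c.mp4", b"other")]:
        path = os.path.join(tmp_dir, name)
        with open(path, "wb") as f:
            f.write(data)
        samples.append({"videos": [path]})
    kept = dedup_by_video_hash(samples)
    print([os.path.basename(s["videos"][0]) for s in kept])  # ['a.mp4', 'c.mp4']
```

Exact hashing is cheap and never produces false positives. However, it misses re-encoded or resized copies of the same clip, which would require perceptual hashing instead.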

+## DJ-SORA Data Recipes and Datasets

+- Support for unified loading and conversion of representative datasets (other-data <-> dj-data), facilitating DJ operator processing and dataset expansion.

+ - [✅] **Video-ChatGPT**: 100k video-instruction data: `{}`

+ - [✅] **Youku-mPLUG-CN**: 36TB video-caption data: `{}`

+ - [✅] **InternVid**: 234M data samples: `{}`

+ - [ ] VideoInstruct-100K, Panda70M, MSR-VTT, ......

+ - [ ] ModelScope's datasets integration

+- [ ] Large-scale high-quality DJ-SORA dataset

+ - [ ] [WIP] Continuous expansion of data sources: open-datasets, Youku, web, ...

+ - [ ] [WIP] (Data sandbox) Building and optimizing multimodal data recipes with DJ-video operators (which are also being continuously extended and improved).

+ - [ ] [WIP] Large-scale analysis and cleaning of high-quality multimodal datasets based on DJ recipes

+ - [ ] [WIP] Large-scale generation of high-quality multimodal datasets based on DJ recipes.

+ - ...
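Converting an external dataset into Data-Juicer's format mainly means moving media paths into a `videos` list and prefixing the text with one `<__dj__video>` token per video, closed by `<|__dj__eoc|>`, as in `demo-dataset.jsonl`. The following is a minimal sketch of both directions. It assumes a hypothetical source record with `video_id`, `question`, and `answer` fields, which is not the exact schema of Video-ChatGPT or any other dataset listed here. It also assumes a hypothetical `./videos/{video_id}.mp4` layout.

```python
import json
from typing import Dict

VIDEO_TOKEN = "<__dj__video>"
EOC_TOKEN = "<|__dj__eoc|>"


def to_dj(record: Dict, video_dir: str = "./videos") -> Dict:
    """Convert a hypothetical QA-style video record into a DJ-style sample."""
    video_path = f"{video_dir}/{record['video_id']}.mp4"
    text = f"{VIDEO_TOKEN} {record['question']} {record['answer']} {EOC_TOKEN}"
    return {"videos": [video_path], "text": text}


def from_dj(sample: Dict) -> Dict:
    """Reverse direction: recover the video id and strip the special tokens."""
    video_id = sample["videos"][0].rsplit("/", 1)[-1].rsplit(".", 1)[0]
    text = sample["text"].replace(VIDEO_TOKEN, "").replace(EOC_TOKEN, "").strip()
    return {"video_id": video_id, "text": text}


rec = {"video_id": "v001", "question": "What happens?", "answer": "A dog runs."}
print(json.dumps(to_dj(rec)))  # one JSONL line ready for DJ operators
```

The reverse conversion is lossy in this sketch because the question and answer are joined into one text field. A real converter would keep them in separate fields if the target format needs them.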

+

+## DJ-SORA Data Validation and Model Training

+ - [ ] [WIP] Exploring and refining multimodal data evaluation metrics and techniques, establishing benchmarks and insights.

+ - [ ] [WIP] Integration of SORA-like model training pipelines

+ - VideoDIT

+ - VQVAE

+ - ...

+ - [ ] [WIP] (Model-Data sandbox) With relatively small models and the DJ-SORA dataset, exploring low-cost, transferable, and instructive data-model co-design, configurations and checkpoints.

+ - [ ] Training SORA-like models with DJ-SORA data on larger scales and in more scenarios to improve model performance.

+ - ...

diff --git a/docs/DJ_SORA_ZH.md b/docs/DJ_SORA_ZH.md

new file mode 100644

index 000000000..4ccdd8866

--- /dev/null

+++ b/docs/DJ_SORA_ZH.md

@@ -0,0 +1,111 @@

+中文 | [English Page](DJ_SORA.md)

+

+---

+

+数据是SORA等前沿大模型的关键,如何高效科学地获取和处理数据面临新的挑战!DJ-SORA旨在创建一系列大规模高质量开源多模态数据集,助力开源社区数据理解和模型训练。

+

+DJ-SORA将基于Data-Juicer(包含上百个专用的视频、图像、音频、文本等多模态数据处理[算子](Operators_ZH.md)及工具),形成一系列系统化可复用的多模态“数据菜谱”,用于分析、清洗及生成大规模高质量多模态数据。

+

+本项目正在积极更新和维护中,我们热切地邀请您参与,共同打造一个更开放、更高质的多模态数据生态系统,激发大模型无限潜能!

+

+# 动机

+- SORA仅简略提及使用了DALLE-3来生成高质量caption,且模型输入数据有变化的时长、分辨率和宽高比。

+- 高质量大规模细粒度数据有助于稠密化数据点,帮助模型学好“文本 -> spacetime token”的条件映射,解决text-2-video模型的一系列现有挑战:

+ - 画面流畅性和一致性,部分生成的视频有丢帧及静止状态

+ - 文本理解能力和细粒度,生成出的结果和prompt匹配度较低

+ - 视频内容较短,大多只有~10s,且场景画面不会有大的改变

+ - 生成内容存在变形扭曲和物理规则违背情况,特别是在实体做出动作时

+

+# 路线图

+## 概览

+* [支持视频数据的高性能加载和处理](#支持视频数据的高性能加载和处理)

+* [基础算子(视频时空维度)](#基础算子视频时空维度)

+* [进阶算子(细粒度模态间匹配及生成)](#进阶算子细粒度模态间匹配及生成)

+* [进阶算子(视频内容)](#进阶算子视频内容)

+* [DJ-SORA数据菜谱及数据集](#dj-sora数据菜谱及数据集)

+* [DJ-SORA数据验证及模型训练](#dj-sora数据验证及模型训练)

+

+## 支持视频数据的高性能加载和处理

+- [✅] 并行化数据加载存储:

+ - [✅] lazy load with pyAV and ffmpeg

+ - [✅] 多模态数据路径签名

+- [✅] 并行化算子处理:

+ - [✅] 支持单机多核

+ - [✅] GPU调用

+ - [✅] Ray多机分布式

+- [ ] [WIP] 分布式调度优化(OP-aware、自动化负载均衡)--> Aliyun PAI-DLC

+- [ ] [WIP] 分布式存储优化

+

+## 基础算子(视频时空维度)

+- 面向数据质量

+ - [✅] video_resolution_filter (在分辨率维度进行过滤)

+ - [✅] video_aspect_ratio_filter (在宽高比维度进行过滤)

+ - [✅] video_duration_filter (在时间维度进行过滤)

+ - [✅] video_motion_score_filter(在视频连续性维度过滤,计算光流,去除静态和极端动态)

+ - [✅] video_ocr_area_ratio_filter (移除文本区域过大的样本)

+- 面向数据多样性及数量

+ - [✅] video_resize_resolution_mapper(在分辨率维度进行增强)

+ - [✅] video_resize_aspect_ratio_mapper(在宽高比维度进行增强)

+ - [✅] video_split_by_key_frame_mapper(基于关键帧进行切割)

+ - [✅] video_split_by_duration_mapper(在时间维度进行切割)

+ - [✅] video_split_by_scene_mapper (基于场景连续性进行切割)

+

+## 进阶算子(细粒度模态间匹配及生成)

+- 面向数据质量

+ - [✅] video_frames_text_similarity_filter(在时空一致性维度过滤,计算关键/指定帧和文本的匹配分)

+- 面向数据多样性及数量

+ - [✅] video_tagging_from_frames_mapper (轻量图生文模型,密集帧生成空间概要信息)

+ - [ ] [WIP] video_captioning_from_frames_mapper(更重的图生文模型,少量帧生成更详细空间信息)

+ - [✅] video_tagging_from_audio_mapper (引入audio classification/category等meta信息)

+ - [✅] video_captioning_from_audio_mapper(引入人声/对话等信息; AudioCaption环境、场景等全局信息)

+ - [✅] video_captioning_from_video_mapper(视频生文模型,连续帧生成时序信息)

+ - [ ] [WIP] video_captioning_from_summarizer_mapper(基于上述子能力的组合,使用纯文本大模型对不同种caption信息去噪、摘要)

+ - [ ] [WIP] video_interleaved_mapper(在ICL、时间和跨模态维度增强),`interleaved_modes`包括:

+ - text_image_interleaved(按时序交叉放置同一视频的caption和frames)

+ - text_audio_interleaved(按时序交叉放置同一视频的ASR文本和frames)

+ - text_image_audio_interleaved(交替拼接上述两种)

+

+## 进阶算子(视频内容)

+- [✅] video_deduplicator (比较MD5哈希值在文件样本级别去重)

+- [✅] video_aesthetics_filter(拆帧后,进行美学度打分过滤)

+- [✅] 兼容FFmpeg已有的video commands

+ - audio_ffmpeg_wrapped_mapper

+ - video_ffmpeg_wrapped_mapper

+- [WIP] 视频内容合规和隐私保护算子(图像、文字、音频):

+ - [✅] 马赛克

+ - [ ] 版权水印

+ - [ ] 人脸模糊

+ - [ ] 黄暴恐

+- [ ] [TODO] (Beyond Interpolation) 增强数据真实性和稠密性

+ - 碰撞、光影、重力、3D、场景切换(phase transition)、景深等

+ - [ ] Filter类算子: caption是否描述真实性,该描述的相关性得分/正确性得分

+ - [ ] Mapper类算子:增强video数据中对物理现象的文本描述

+ - [ ] ...

+

+

+

+## DJ-SORA数据菜谱及数据集

+- 支持代表性数据的统一加载和转换(other-data <-> dj-data),方便DJ算子处理及扩展数据集

+ - [✅] **Video-ChatGPT**: 100k video-instruction data:`{}`

+ - [✅] **Youku-mPLUG-CN**: 36TB video-caption data:`{}`

+ - [✅] **InternVid**: 234M data sample:`{}`

+ - [ ] VideoInstruct-100K, Panda70M, MSR-VTT, ......

+ - [ ] ModelScope数据集集成

+- [ ] 大规模高质量DJ-SORA数据集

+ - [ ] [WIP] 数据源持续扩充:open-datasets, youku, web, ...

+ - [ ] [WIP] (Data sandbox) 基于DJ-video算子构建和优化多模态数据菜谱 (算子同期持续完善)

+ - [ ] [WIP] 基于DJ菜谱规模化分析、清洗高质量多模态数据集

+ - [ ] [WIP] 基于DJ菜谱规模化生成高质量多模态数据集

+ - ...

+

+## DJ-SORA数据验证及模型训练

+ - [ ] [WIP] 探索及完善多模态数据的评估指标和评估技术,形成benchmark和insights

+ - [ ] [WIP] 类SORA模型训练pipeline集成

+ - VideoDIT

+ - VQVAE

+ - ...

+ - [ ] [WIP] (Model-Data sandbox) 在相对小的模型和DJ-SORA数据集上,探索形成低开销、可迁移、有指导性的data-model co-design、配置及检查点

+ - [ ] 更大规模、更多场景使用DJ-SORA数据训练类SORA模型,提高模型性能

+ - ...

+

+

diff --git a/docs/DeveloperGuide.md b/docs/DeveloperGuide.md

index a658b5e7c..bf248aa82 100644

--- a/docs/DeveloperGuide.md

+++ b/docs/DeveloperGuide.md

@@ -147,6 +147,52 @@ class StatsKeys(object):

# ... (same as above)

```

+ - In a mapper operator, we offer an interface that generates a saving path for the extra data the operator produces, which avoids process conflicts and data overwriting. The saving path has the format `{ORIGINAL_DATAPATH}/{OP_NAME}/{ORIGINAL_FILENAME}__dj_hash_#{HASH_VALUE}#.{EXT}`. Here, `HASH_VALUE` is computed from four inputs: the operator's init parameters, the related parameters of each sample, the process ID, and the timestamp. For convenience, call `self.remove_extra_parameters(locals())` in `__init__`, right after `super().__init__()`, to get the init parameters. Then call `self.add_parameters` to add the per-sample parameters related to the produced extra data. Take the operator that enhances images with diffusion models as an example:

+ ```python
+ # ... (import some library)
+ OP_NAME = 'image_diffusion_mapper'
+ @OPERATORS.register_module(OP_NAME)
+ @LOADED_IMAGES.register_module(OP_NAME)
+ class ImageDiffusionMapper(Mapper):
+     def __init__(self,
+                  # ... (OP parameters)
+                  *args,
+                  **kwargs):
+         super().__init__(*args, **kwargs)
+         self._init_parameters = self.remove_extra_parameters(locals())
+
+     def process(self, sample, rank=None):
+         # ... (some codes)
+         # captions[index] is the prompt for the diffusion model
+         related_parameters = self.add_parameters(
+             self._init_parameters, caption=captions[index])
+         new_image_path = transfer_filename(
+             origin_image_path, OP_NAME, **related_parameters)
+         # ... (some codes)
+ ```

+ When a mapper produces multiple extra data files from a single original file, a suffix can be appended to the saving path. Take the operator that splits videos according to their key frames as an example:

+ ```python
+ # ... (import some library)
+ OP_NAME = 'video_split_by_key_frame_mapper'
+ @OPERATORS.register_module(OP_NAME)
+ @LOADED_VIDEOS.register_module(OP_NAME)
+ class VideoSplitByKeyFrameMapper(Mapper):
+     def __init__(self,
+                  # ... (OP parameters)
+                  *args,
+                  **kwargs):
+         super().__init__(*args, **kwargs)
+         self._init_parameters = self.remove_extra_parameters(locals())
+
+     def process(self, sample, rank=None):
+         # ... (some codes)
+         split_video_path = transfer_filename(
+             original_video_path, OP_NAME, **self._init_parameters)
+         suffix = '_split-by-key-frame-' + str(count)
+         split_video_path = add_suffix_to_filename(split_video_path, suffix)
+         # ... (some codes)
+ ```

+
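The saving-path format described above can be illustrated with the standard library alone. The following is a minimal sketch. It assumes the hash is an MD5 over a JSON dump of the parameters plus the process ID and a nanosecond timestamp. `make_extra_path` and `add_suffix` are hypothetical stand-ins for `transfer_filename` and `add_suffix_to_filename`, whose exact hashing and signatures may differ.

```python
import hashlib
import json
import os
import time
from typing import Any


def make_extra_path(original_path: str, op_name: str, **params: Any) -> str:
    """Return {dir}/{op_name}/{stem}__dj_hash_#{hash}#{ext} for a produced file.

    Hypothetical stand-in for transfer_filename; the real hashing may differ.
    """
    # Mix the OP/sample parameters with the process ID and a nanosecond
    # timestamp so that parallel workers do not overwrite each other's outputs.
    payload = json.dumps(params, sort_keys=True, default=str)
    payload += f"|{os.getpid()}|{time.time_ns()}"
    hash_value = hashlib.md5(payload.encode("utf-8")).hexdigest()
    directory, filename = os.path.split(original_path)
    stem, ext = os.path.splitext(filename)  # ext keeps its dot, e.g. ".mp4"
    return os.path.join(directory, op_name, f"{stem}__dj_hash_#{hash_value}#{ext}")


def add_suffix(path: str, suffix: str) -> str:
    """Insert a suffix before the extension (stand-in for add_suffix_to_filename)."""
    stem, ext = os.path.splitext(path)
    return f"{stem}{suffix}{ext}"


image_path = make_extra_path("data/images/img1.jpg", "image_diffusion_mapper",
                             caption="a cat on a sofa")
clip_path = add_suffix(
    make_extra_path("data/videos/video1.mp4", "video_split_by_key_frame_mapper"),
    "_split-by-key-frame-0")
print(image_path)
print(clip_path)
```

Because the process ID and timestamp are part of the hash, two runs with identical parameters still produce different names. This trades reproducible paths for collision safety. The `{OP_NAME}` directory also has to be created before the file is written.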

3. After implementation, add it to the OP dictionary in the `__init__.py` file in the `data_juicer/ops/filter/` directory.

```python

@@ -172,8 +218,9 @@ process:

```python

import unittest

from data_juicer.ops.filter.text_length_filter import TextLengthFilter

+from data_juicer.utils.unittest_utils import DataJuicerTestCaseBase

-class TextLengthFilterTest(unittest.TestCase):

+class TextLengthFilterTest(DataJuicerTestCaseBase):

    def test_func1(self):
        pass

@@ -183,6 +230,9 @@ class TextLengthFilterTest(unittest.TestCase):

    def test_func3(self):
        pass

+

+if __name__ == '__main__':

+    unittest.main()

```

6. (Strongly Recommended) To make the new OP easy for other users to adopt, we also need to update this new OP information to

diff --git a/docs/DeveloperGuide_ZH.md b/docs/DeveloperGuide_ZH.md

index f188aecbc..3c6bb2411 100644

--- a/docs/DeveloperGuide_ZH.md

+++ b/docs/DeveloperGuide_ZH.md

@@ -142,6 +142,52 @@ class StatsKeys(object):

# ... (same as above)

```

+ - 在mapper算子中,我们提供了产生额外数据的存储路径生成接口,避免出现进程冲突和数据覆盖的情况。生成的存储路径格式为`{ORIGINAL_DATAPATH}/{OP_NAME}/{ORIGINAL_FILENAME}__dj_hash_#{HASH_VALUE}#.{EXT}`,其中`HASH_VALUE`是算子初始化参数、每个样本中相关参数、进程ID和时间戳的哈希值。为了方便,可以在OP类初始化开头调用`self.remove_extra_parameters(locals())`获取算子初始化参数,同时可以调用`self.add_parameters`添加每个样本与生成额外数据相关的参数。例如,利用diffusion模型对图像进行增强的算子:

+ ```python
+ # ... (import some library)
+ OP_NAME = 'image_diffusion_mapper'
+ @OPERATORS.register_module(OP_NAME)
+ @LOADED_IMAGES.register_module(OP_NAME)
+ class ImageDiffusionMapper(Mapper):
+     def __init__(self,
+                  # ... (OP parameters)
+                  *args,
+                  **kwargs):
+         super().__init__(*args, **kwargs)
+         self._init_parameters = self.remove_extra_parameters(locals())
+
+     def process(self, sample, rank=None):
+         # ... (some codes)
+         # captions[index] is the prompt for the diffusion model
+         related_parameters = self.add_parameters(
+             self._init_parameters, caption=captions[index])
+         new_image_path = transfer_filename(
+             origin_image_path, OP_NAME, **related_parameters)
+         # ... (some codes)
+ ```

+ 针对一个数据源衍生出多个额外数据的情况,我们允许在生成的存储路径后面再加后缀。比如,根据关键帧将视频拆分成多个视频:

+ ```python
+ # ... (import some library)
+ OP_NAME = 'video_split_by_key_frame_mapper'
+ @OPERATORS.register_module(OP_NAME)
+ @LOADED_VIDEOS.register_module(OP_NAME)
+ class VideoSplitByKeyFrameMapper(Mapper):
+     def __init__(self,
+                  # ... (OP parameters)
+                  *args,
+                  **kwargs):
+         super().__init__(*args, **kwargs)
+         self._init_parameters = self.remove_extra_parameters(locals())
+
+     def process(self, sample, rank=None):
+         # ... (some codes)
+         split_video_path = transfer_filename(
+             original_video_path, OP_NAME, **self._init_parameters)
+         suffix = '_split-by-key-frame-' + str(count)
+         split_video_path = add_suffix_to_filename(split_video_path, suffix)
+         # ... (some codes)
+ ```

+

3. 实现后,将其添加到 `data_juicer/ops/filter` 目录下 `__init__.py` 文件中的算子字典中:

```python

@@ -168,8 +214,10 @@ process:

```python

import unittest

from data_juicer.ops.filter.text_length_filter import TextLengthFilter

+from data_juicer.utils.unittest_utils import DataJuicerTestCaseBase

+

-class TextLengthFilterTest(unittest.TestCase):

+class TextLengthFilterTest(DataJuicerTestCaseBase):

    def test_func1(self):
        pass

@@ -179,6 +227,9 @@ class TextLengthFilterTest(unittest.TestCase):

    def test_func3(self):
        pass

+

+if __name__ == '__main__':

+    unittest.main()

```

6. (强烈推荐)为了方便其他用户使用,我们还需要将新增的算子信息更新到相应的文档中,具体包括如下文档:

diff --git a/docs/Operators.md b/docs/Operators.md

index 28fdb7306..9409449b3 100644

--- a/docs/Operators.md

+++ b/docs/Operators.md

@@ -2,6 +2,7 @@

Operators are a collection of basic processes that assist in data modification, cleaning, filtering, deduplication, etc. We support a wide range of data sources and file formats, and allow for flexible extension to custom datasets.

+This page offers a basic description of the operators (OPs) in Data-Juicer. For the specific parameters of each operator, refer to the [API documentation](https://alibaba.github.io/data-juicer/). You can also read and run the unit tests for [examples of operator-wise usage](../tests/ops), which show the effect of each operator on built-in test data samples.

## Overview

@@ -10,9 +11,9 @@ The operators in Data-Juicer are categorized into 5 types.

| Type | Number | Description |

|-----------------------------------|:------:|-------------------------------------------------|

| [ Formatter ]( #formatter ) | 7 | Discovers, loads, and canonicalizes source data |

-| [ Mapper ]( #mapper ) | 26 | Edits and transforms samples |

-| [ Filter ]( #filter ) | 28 | Filters out low-quality samples |

-| [ Deduplicator ]( #deduplicator ) | 4 | Detects and removes duplicate samples |

+| [ Mapper ]( #mapper ) | 38 | Edits and transforms samples |

+| [ Filter ]( #filter ) | 36 | Filters out low-quality samples |

+| [ Deduplicator ]( #deduplicator ) | 5 | Detects and removes duplicate samples |

| [ Selector ]( #selector ) | 2 | Selects top samples based on ranking |

@@ -25,6 +26,7 @@ All the specific operators are listed below, each featured with several capabili

- Financial: closely related to financial sector

- Image: specific to images or multimodal

- Audio: specific to audios or multimodal

+ - Video: specific to videos or multimodal

- Multimodal: specific to multimodal

* Language Tags

- en: English

@@ -46,69 +48,88 @@ All the specific operators are listed below, each featured with several capabili

## Mapper

-| Operator | Domain | Lang | Description |

-|-----------------------------------------------------|--------------------|--------|----------------------------------------------------------------------------------------------------------------|

-| chinese_convert_mapper | General | zh | Converts Chinese between Traditional Chinese, Simplified Chinese and Japanese Kanji (by [opencc](https://github.com/BYVoid/OpenCC)) |

-| clean_copyright_mapper | Code | en, zh | Removes copyright notice at the beginning of code files (:warning: must contain the word *copyright*) |

-| clean_email_mapper | General | en, zh | Removes email information |

-| clean_html_mapper | General | en, zh | Removes HTML tags and returns plain text of all the nodes |

-| clean_ip_mapper | General | en, zh | Removes IP addresses |

-| clean_links_mapper | General, Code | en, zh | Removes links, such as those starting with http or ftp |

-| expand_macro_mapper | LaTeX | en, zh | Expands macros usually defined at the top of TeX documents |

-| fix_unicode_mapper | General | en, zh | Fixes broken Unicodes (by [ftfy](https://ftfy.readthedocs.io/)) |

-| generate_caption_mapper | Multimodal | - | generate samples whose captions are generated based on another model (such as blip2) and the figure within the original sample |

-| gpt4v_generate_mapper | Multimodal | - | generate samples whose texts are generated based on gpt-4-visison and the image |

+| Operator | Domain | Lang | Description |

+|-----------------------------------------------------|--------------------|--------|---------------------------------------------------------------------------------------------------------------|

+| audio_ffmpeg_wrapped_mapper | Audio | - | Simple wrapper to run an FFmpeg audio filter |

+| chinese_convert_mapper | General | zh | Converts Chinese between Traditional Chinese, Simplified Chinese and Japanese Kanji (by [opencc](https://github.com/BYVoid/OpenCC)) |

+| clean_copyright_mapper | Code | en, zh | Removes copyright notice at the beginning of code files (:warning: must contain the word *copyright*) |

+| clean_email_mapper | General | en, zh | Removes email information |

+| clean_html_mapper | General | en, zh | Removes HTML tags and returns plain text of all the nodes |

+| clean_ip_mapper | General | en, zh | Removes IP addresses |

+| clean_links_mapper | General, Code | en, zh | Removes links, such as those starting with http or ftp |

+| expand_macro_mapper | LaTeX | en, zh | Expands macros usually defined at the top of TeX documents |

+| fix_unicode_mapper | General | en, zh | Fixes broken Unicodes (by [ftfy](https://ftfy.readthedocs.io/)) |

| image_blur_mapper | Multimodal | - | Blur images |

+| image_captioning_from_gpt4v_mapper | Multimodal | - | Generates samples whose texts are generated based on gpt-4-vision and the image |

+| image_captioning_mapper | Multimodal | - | Generates samples whose captions are generated based on another model (such as blip2) and the figure within the original sample |

| image_diffusion_mapper | Multimodal | - | Generate and augment images by stable diffusion model |

-| nlpaug_en_mapper | General | en | Simply augments texts in English based on the `nlpaug` library |

-| nlpcda_zh_mapper | General | zh | Simply augments texts in Chinese based on the `nlpcda` library |

-| punctuation_normalization_mapper | General | en, zh | Normalizes various Unicode punctuations to their ASCII equivalents |

-| remove_bibliography_mapper | LaTeX | en, zh | Removes the bibliography of TeX documents |

-| remove_comments_mapper | LaTeX | en, zh | Removes the comments of TeX documents |

-| remove_header_mapper | LaTeX | en, zh | Removes the running headers of TeX documents, e.g., titles, chapter or section numbers/names |

-| remove_long_words_mapper | General | en, zh | Removes words with length outside the specified range |

+| nlpaug_en_mapper | General | en | Simply augments texts in English based on the `nlpaug` library |

+| nlpcda_zh_mapper | General | zh | Simply augments texts in Chinese based on the `nlpcda` library |

+| punctuation_normalization_mapper | General | en, zh | Normalizes various Unicode punctuations to their ASCII equivalents |

+| remove_bibliography_mapper | LaTeX | en, zh | Removes the bibliography of TeX documents |

+| remove_comments_mapper | LaTeX | en, zh | Removes the comments of TeX documents |

+| remove_header_mapper | LaTeX | en, zh | Removes the running headers of TeX documents, e.g., titles, chapter or section numbers/names |

+| remove_long_words_mapper | General | en, zh | Removes words with length outside the specified range |

| remove_non_chinese_character_mapper | General | en, zh | Removes non-Chinese characters from text samples |

| remove_repeat_sentences_mapper | General | en, zh | Removes repeated sentences from text samples |

-| remove_specific_chars_mapper | General | en, zh | Removes any user-specified characters or substrings |

+| remove_specific_chars_mapper | General | en, zh | Removes any user-specified characters or substrings |

| remove_table_text_mapper | General, Financial | en | Detects and removes possible table contents (:warning: relies on regular expression matching and thus fragile) |

-| remove_words_with_incorrect_substrings_mapper | General | en, zh | Removes words containing specified substrings |
-| replace_content_mapper | General | en, zh | Replace all content in the text that matches a specific regular expression pattern with a designated replacement string. |
-| sentence_split_mapper | General | en | Splits and reorganizes sentences according to semantics |
-| whitespace_normalization_mapper | General | en, zh | Normalizes various Unicode whitespaces to the normal ASCII space (U+0020) |
+| remove_words_with_incorrect_substrings_mapper | General | en, zh | Removes words containing specified substrings |
+| replace_content_mapper | General | en, zh | Replace all content in the text that matches a specific regular expression pattern with a designated replacement string |
+| sentence_split_mapper | General | en | Splits and reorganizes sentences according to semantics |
+| video_captioning_from_audio_mapper | Multimodal | - | Captions a video according to its audio streams using the Qwen-Audio model |
+| video_captioning_from_video_mapper | Multimodal | - | Generates samples whose captions are generated by another model (video-blip) from video frames sampled from the original sample |
+| video_ffmpeg_wrapped_mapper | Video | - | Simple wrapper to run an FFmpeg video filter |
+| video_resize_aspect_ratio_mapper | Video | - | Resize video aspect ratio to a specified range |
+| video_resize_resolution_mapper | Video | - | Maps videos to ones within the given resolution range |
+| video_split_by_duration_mapper | Multimodal | - | Mapper to split video by duration. |
+| video_split_by_key_frame_mapper | Multimodal | - | Mapper to split video by key frame. |
+| video_split_by_scene_mapper | Multimodal | - | Split videos into scene clips |
+| video_tagging_from_audio_mapper | Multimodal | - | Mapper to generate video tags from audio streams extracted from the video. |
+| video_tagging_from_frames_mapper | Multimodal | - | Mapper to generate video tags from frames extracted from the video. |
+| whitespace_normalization_mapper | General | en, zh | Normalizes various Unicode whitespaces to the normal ASCII space (U+0020) |

## Filter

-| Operator | Domain | Lang | Description |
-|--------------------------------|------------|--------|-------------------------------------------------------------------------------------------------------------------------------------------------------|
-| alphanumeric_filter | General | en, zh | Keeps samples with alphanumeric ratio within the specified range |
-| audio_duration_filter | Audio | - | Keep data samples whose audios' durations are within a specified range |
+| Operator | Domain | Lang | Description |
+|--------------------------------|------------|--------|-----------------------------------------------------------------------------------------------------------------------------------------------------|
+| alphanumeric_filter | General | en, zh | Keeps samples with alphanumeric ratio within the specified range |
+| audio_duration_filter | Audio | - | Keep data samples whose audios' durations are within a specified range |
| audio_nmf_snr_filter | Audio | - | Keep data samples whose audios' Signal-to-Noise Ratios (SNRs, computed based on Non-Negative Matrix Factorization, NMF) are within a specified range. |
-| audio_size_filter | Audio | - | Keep data samples whose audios' sizes are within a specified range |
-| average_line_length_filter | Code | en, zh | Keeps samples with average line length within the specified range |
-| character_repetition_filter | General | en, zh | Keeps samples with char-level n-gram repetition ratio within the specified range |
-| face_area_filter | Image | - | Keeps samples containing images with face area ratios within the specified range |
-| flagged_words_filter | General | en, zh | Keeps samples with flagged-word ratio below the specified threshold |
-| image_aspect_ratio_filter | Image | - | Keeps samples containing images with aspect ratios within the specified range |
-| image_shape_filter | Image | - | Keeps samples containing images with widths and heights within the specified range |
-| image_size_filter | Image | - | Keeps samples containing images whose size in bytes are within the specified range |
-| image_text_matching_filter | Multimodal | - | Keeps samples with image-text classification matching score within the specified range based on a BLIP model |
-| image_text_similarity_filter | Multimodal | - | Keeps samples with image-text feature cosine similarity within the specified range based on a CLIP model |
-| language_id_score_filter | General | en, zh | Keeps samples of the specified language, judged by a predicted confidence score |
-| maximum_line_length_filter | Code | en, zh | Keeps samples with maximum line length within the specified range |
-| perplexity_filter | General | en, zh | Keeps samples with perplexity score below the specified threshold |
-| phrase_grounding_recall_filter | Multimodal | - | Keeps samples whose locating recalls of phrases extracted from text in the images are within a specified range |
-| special_characters_filter | General | en, zh | Keeps samples with special-char ratio within the specified range |
-| specified_field_filter | General | en, zh | Filters samples based on field, with value lies in the specified targets |
-| specified_numeric_field_filter | General | en, zh | Filters samples based on field, with value lies in the specified range (for numeric types) |
-| stopwords_filter | General | en, zh | Keeps samples with stopword ratio above the specified threshold |
-| suffix_filter | General | en, zh | Keeps samples with specified suffixes |
-| text_action_filter | General | en, zh | Keeps samples containing action verbs in their texts |
-| text_entity_dependency_filter | General | en, zh | Keeps samples containing entity nouns related to other tokens in the dependency tree of the texts |
-| text_length_filter | General | en, zh | Keeps samples with total text length within the specified range |
-| token_num_filter | General | en, zh | Keeps samples with token count within the specified range |
-| word_num_filter | General | en, zh | Keeps samples with word count within the specified range |
-| word_repetition_filter | General | en, zh | Keeps samples with word-level n-gram repetition ratio within the specified range |
+| audio_size_filter | Audio | - | Keep data samples whose audios' sizes are within a specified range |
+| average_line_length_filter | Code | en, zh | Keeps samples with average line length within the specified range |
+| character_repetition_filter | General | en, zh | Keeps samples with char-level n-gram repetition ratio within the specified range |
+| face_area_filter | Image | - | Keeps samples containing images with face area ratios within the specified range |
+| flagged_words_filter | General | en, zh | Keeps samples with flagged-word ratio below the specified threshold |
+| image_aspect_ratio_filter | Image | - | Keeps samples containing images with aspect ratios within the specified range |
+| image_shape_filter | Image | - | Keeps samples containing images with widths and heights within the specified range |
+| image_size_filter | Image | - | Keeps samples containing images whose sizes in bytes are within the specified range |
+| image_aesthetics_filter | Image | - | Keeps samples containing images whose aesthetics scores are within the specified range |
+| image_text_matching_filter | Multimodal | - | Keeps samples with image-text classification matching score within the specified range based on a BLIP model |
+| image_text_similarity_filter | Multimodal | - | Keeps samples with image-text feature cosine similarity within the specified range based on a CLIP model |
+| language_id_score_filter | General | en, zh | Keeps samples of the specified language, judged by a predicted confidence score |
+| maximum_line_length_filter | Code | en, zh | Keeps samples with maximum line length within the specified range |
+| perplexity_filter | General | en, zh | Keeps samples with perplexity score below the specified threshold |
+| phrase_grounding_recall_filter | Multimodal | - | Keeps samples whose locating recalls of phrases extracted from text in the images are within a specified range |
+| special_characters_filter | General | en, zh | Keeps samples with special-char ratio within the specified range |
+| specified_field_filter | General | en, zh | Filters samples based on a field whose value lies in the specified targets |
+| specified_numeric_field_filter | General | en, zh | Filters samples based on a field whose value lies in the specified range (for numeric types) |
+| stopwords_filter | General | en, zh | Keeps samples with stopword ratio above the specified threshold |
+| suffix_filter | General | en, zh | Keeps samples with specified suffixes |
+| text_action_filter | General | en, zh | Keeps samples containing action verbs in their texts |
+| text_entity_dependency_filter | General | en, zh | Keeps samples containing entity nouns related to other tokens in the dependency tree of the texts |
+| text_length_filter | General | en, zh | Keeps samples with total text length within the specified range |
+| token_num_filter | General | en, zh | Keeps samples with token count within the specified range |
+| video_aspect_ratio_filter | Video | - | Keeps samples containing videos with aspect ratios within the specified range |
+| video_duration_filter | Video | - | Keep data samples whose videos' durations are within a specified range |
+| video_aesthetics_filter | Video | - | Keeps samples whose specified frames have aesthetics scores within the specified range |
+| video_frames_text_similarity_filter | Multimodal | - | Keep data samples whose similarities between sampled video frame images and text are within a specific range |
+| video_motion_score_filter | Video | - | Keep samples with video motion scores within a specific range |
+| video_ocr_area_ratio_filter | Video | - | Keep data samples whose detected text area ratios for specified frames in the video are within a specified range |
+| video_resolution_filter | Video | - | Keeps samples containing videos with horizontal and vertical resolutions within the specified range |
+| word_num_filter | General | en, zh | Keeps samples with word count within the specified range |
+| word_repetition_filter | General | en, zh | Keeps samples with word-level n-gram repetition ratio within the specified range |

## Deduplicator

@@ -119,6 +140,7 @@ All the specific operators are listed below, each featured with several capabili
| document_minhash_deduplicator | General | en, zh | Deduplicates samples at document-level using MinHashLSH |
| document_simhash_deduplicator | General | en, zh | Deduplicates samples at document-level using SimHash |
| image_deduplicator | Image | - | Deduplicates samples at document-level using exact matching of images between documents |
+| video_deduplicator | Video | - | Deduplicates samples at document-level using exact matching of videos between documents |

## Selector

diff --git a/docs/Operators_ZH.md b/docs/Operators_ZH.md
index f3df33d89..4517c614c 100644
--- a/docs/Operators_ZH.md
+++ b/docs/Operators_ZH.md
@@ -2,6 +2,8 @@

算子 (Operator) 是协助数据修改、清理、过滤、去重等基本流程的集合。我们支持广泛的数据来源和文件格式,并支持对自定义数据集的灵活扩展。

+这个页面提供了OP的基本描述,用户可以参考[API文档](https://alibaba.github.io/data-juicer/)更细致了解每个OP的具体参数,并且可以查看、运行单元测试,来体验[各OP的用法示例](../tests/ops)以及每个OP作用于内置测试数据样本时的效果。
+
## 概览

Data-Juicer 中的算子分为以下 5 种类型。
@@ -9,9 +11,9 @@ Data-Juicer 中的算子分为以下 5 种类型。
| 类型 | 数量 | 描述 |
|------------------------------------|:--:|---------------|
| [ Formatter ]( #formatter ) | 7 | 发现、加载、规范化原始数据 |
-| [ Mapper ]( #mapper ) | 26 | 对数据样本进行编辑和转换 |
-| [ Filter ]( #filter ) | 28 | 过滤低质量样本 |
-| [ Deduplicator ]( #deduplicator ) | 4 | 识别、删除重复样本 |
+| [ Mapper ]( #mapper ) | 38 | 对数据样本进行编辑和转换 |
+| [ Filter ]( #filter ) | 36 | 过滤低质量样本 |
+| [ Deduplicator ]( #deduplicator ) | 5 | 识别、删除重复样本 |
| [ Selector ]( #selector ) | 2 | 基于排序选取高质量样本 |

下面列出所有具体算子,每种算子都通过多个标签来注明其主要功能。
@@ -23,6 +25,7 @@ Data-Juicer 中的算子分为以下 5 种类型。
- Financial: 与金融领域相关
- Image: 专用于图像或多模态
- Audio: 专用于音频或多模态
+ - Video: 专用于视频或多模态
- Multimodal: 专用于多模态
* Language 标签
@@ -46,6 +49,7 @@ Data-Juicer 中的算子分为以下 5 种类型。
| 算子 | 场景 | 语言 | 描述 |
|-----------------------------------------------------|-----------------------|-----------|--------------------------------------------------------|
+| audio_ffmpeg_wrapped_mapper | Audio | - | 运行 FFmpeg 音频过滤器的简单封装 |
| chinese_convert_mapper | General | zh | 用于在繁体中文、简体中文和日文汉字之间进行转换(借助 [opencc](https://github.com/BYVoid/OpenCC)) |
| clean_copyright_mapper | Code | en, zh | 删除代码文件开头的版权声明 (:warning: 必须包含单词 *copyright*) |
| clean_email_mapper | General | en, zh | 删除邮箱信息 |
@@ -54,9 +58,9 @@ Data-Juicer 中的算子分为以下 5 种类型。
| clean_links_mapper | General, Code | en, zh | 删除链接,例如以 http 或 ftp 开头的 |
| expand_macro_mapper | LaTeX | en, zh | 扩展通常在 TeX 文档顶部定义的宏 |
| fix_unicode_mapper | General | en, zh | 修复损坏的 Unicode(借助 [ftfy](https://ftfy.readthedocs.io/)) |
-| generate_caption_mapper | Multimodal | - | 生成样本,其标题是根据另一个辅助模型(例如 blip2)和原始样本中的图形生成的。 |
-| gpt4v_generate_mapper | Multimodal | - | 基于gpt-4-vision和图像生成文本 |
-| image_blur_mapper | Multimodal | - | 对图像进行模糊处理 |
+| image_blur_mapper
| Multimodal | - | 对图像进行模糊处理 | +| image_captioning_from_gpt4v_mapper | Multimodal | - | 基于gpt-4-vision和图像生成文本 | +| image_captioning_mapper | Multimodal | - | 生成样本,其标题是根据另一个辅助模型(例如 blip2)和原始样本中的图形生成的。 | | image_diffusion_mapper | Multimodal | - | 用stable diffusion生成图像,对图像进行增强 | | nlpaug_en_mapper | General | en | 使用`nlpaug`库对英语文本进行简单增强 | | nlpcda_zh_mapper | General | zh | 使用`nlpcda`库对中文文本进行简单增强 | @@ -70,8 +74,18 @@ Data-Juicer 中的算子分为以下 5 种类型。 | remove_specific_chars_mapper | General | en, zh | 删除任何用户指定的字符或子字符串 | | remove_table_text_mapper | General, Financial | en | 检测并删除可能的表格内容(:warning: 依赖正则表达式匹配,因此很脆弱) | | remove_words_with_incorrect_