You’re in the last hour of your on-call shift when your phone rings. Customers are complaining that they can’t send direct messages, a major feature of your platform. You pull up the app and immediately see the problem: The send button isn’t showing. With a bit of digging, you trace the problem back to a malfunctioning caching server. The app couldn’t pull in the “send message” image file, and nothing appeared in its place. You get the caching server working again, and during an incident response meeting the next day, the front-end team adds a text-based fallback for all user-interface elements in case something like this happens again.

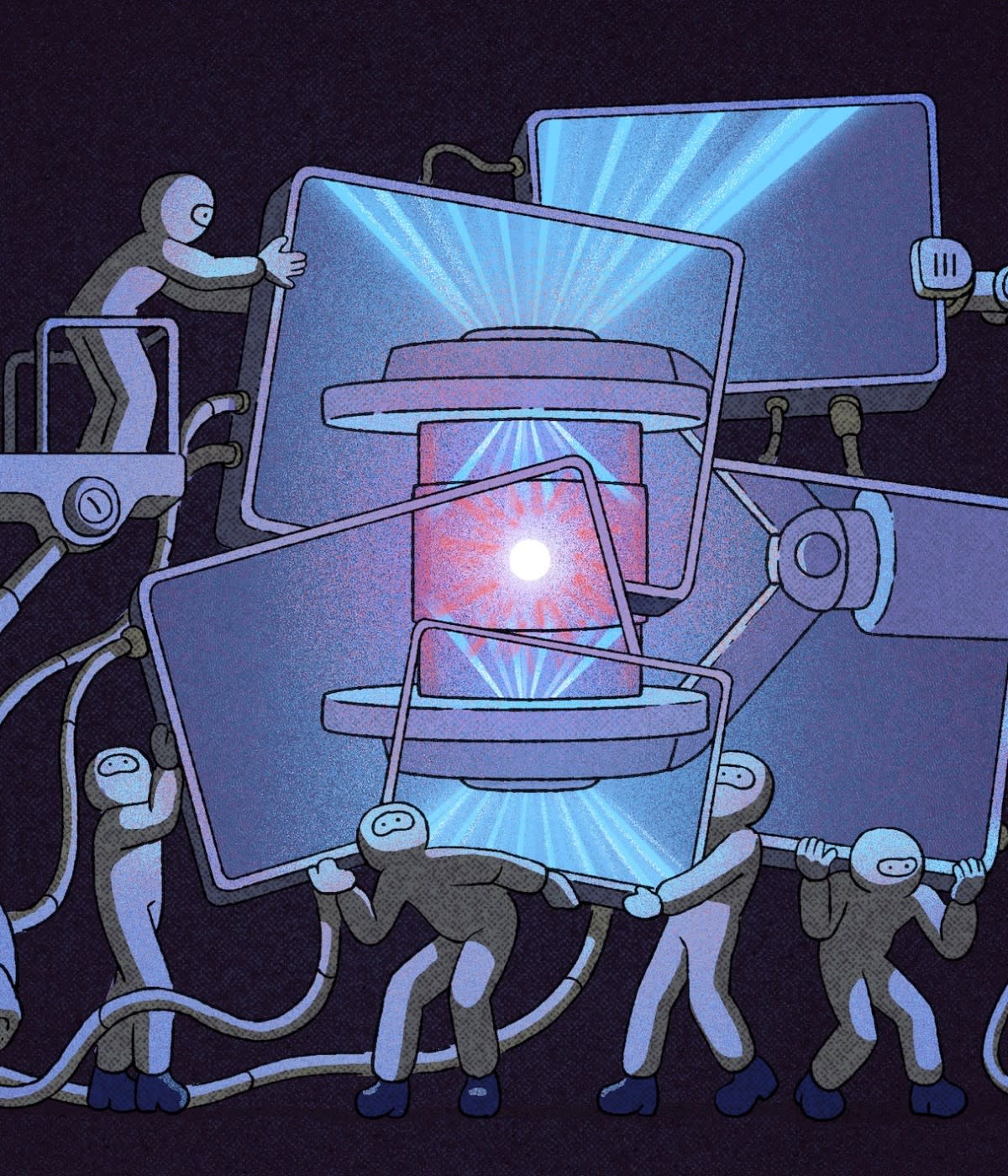

These sorts of problems are becoming more common as applications grow more complex. Apps that may look like monoliths to users are often constellations of different database servers, APIs, microservices, and libraries, any of which could fail at any moment. To build more resilient applications and solve problems before they cause incidents like the one above, DevOps teams are increasingly turning to a discipline called “chaos engineering.”

At its simplest, chaos engineering might involve randomly switching off different services to see how the system reacts as a whole. "It helps you find faults in the system you didn't know existed," says Nora Jones, founder and CEO of incident analysis startup Jeli. She’s also the co-author of the book Chaos Engineering: System Resiliency in Practice, and a former Netflix resilience engineer.

For example, in 2018, before Netflix launched the interactive movie Black Mirror: Bandersnatch, the team discovered that a small key-value store that they believed to be non-critical was actually pretty important. "It was sort of a hidden dependency," says Netflix resilience engineer Ales Plsek. "Discovering that enabled us to invest more in resilience there. Chaos engineering really helps us ensure that we're investing in the right things."

Interest in chaos engineering has exploded since Netflix first released the code for its chaos engineering tool Chaos Monkey in 2012, and more recently with the arrival of open source projects like Litmus, Chaos Toolkit, and PowerfulSeal, which offer robust, customizable ways to run chaos experiments.

“I don’t know any organization that doesn’t do some sort of fault-driven experiments in production at this point,” says John Allspaw, the principal/founder of Adaptive Capacity Labs and former CTO of Etsy. “It’s one of several important techniques that engineering teams use to build confidence as they build software and, more importantly, change that software.”

It's easy to see the appeal. It has a cool name and it's always fun to break stuff. But chaos engineering is about more than just wreaking havoc. "You're trying to learn about how your system behaves," says Sylvain Hellegouarch, maintainer of Chaos Toolkit and co-founder of the startup Reliably. "It's the scientific method. You state a hypothesis, usually something along the lines of 'the system should run smoothly even if it can't contact this particular database,' and then you test the hypothesis and explore the results."
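To make that hypothesis-driven loop concrete, here is a minimal Python sketch under stated assumptions. It does not use Chaos Toolkit's API or any real tool's: `FakeDatabase`, `Service`, `steady_state`, and `run_experiment` are hypothetical names, the "database" is an in-memory dict, and the fault is simulated by flipping a flag. The experiment checks the steady state, injects the fault, checks again, and always rolls the fault back.

```python
"""A toy chaos experiment: state a hypothesis, inject a fault, verify.

All names here are hypothetical; the database and the fault are simulated
in memory rather than touching any real infrastructure.
"""
from dataclasses import dataclass, field


class DatabaseUnavailable(Exception):
    """Raised by the fake database while the injected fault is active."""


@dataclass
class FakeDatabase:
    rows: dict = field(default_factory=lambda: {"greeting": "hello"})
    reachable: bool = True  # flipping this to False simulates an outage

    def get(self, key: str) -> str:
        if not self.reachable:
            raise DatabaseUnavailable(key)
        return self.rows[key]


@dataclass
class Service:
    db: FakeDatabase
    cache: dict = field(default_factory=dict)

    def handle(self, key: str) -> tuple[int, str]:
        """Return (status_code, body), falling back to the last cached value."""
        try:
            value = self.db.get(key)
            self.cache[key] = value  # remember the last good answer
            return 200, value
        except DatabaseUnavailable:
            if key in self.cache:
                return 200, self.cache[key]  # degrade gracefully: serve stale data
            return 503, "temporarily unavailable"


def steady_state(service: Service) -> bool:
    """Hypothesis: requests for a known key keep succeeding."""
    status, _ = service.handle("greeting")
    return status == 200


def run_experiment(service: Service) -> dict:
    """Check the hypothesis, inject the fault, re-check, then roll back."""
    before = steady_state(service)
    service.db.reachable = False  # fault injection: database unreachable
    try:
        during = steady_state(service)
    finally:
        service.db.reachable = True  # always undo the fault, even on error
    return {"before": before, "during": during, "hypothesis_held": before and during}
```

Running `run_experiment(Service(FakeDatabase()))` should report that the hypothesis held, because the first successful request populates the cache the service falls back on during the simulated outage. A service that never reached the database before the fault, such as `Service(FakeDatabase(reachable=False))`, answers with a 503 instead, which is the kind of hidden weakness an experiment like this is meant to surface.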

Most importantly, chaos engineering is a mindset that helps DevOps teams grapple with unpredictability. Thanks to open source tooling, chaos engineering is finding its way across the software development lifecycle and helping developers rethink how they build, test, and maintain software.

“Successful organizations don’t get any simpler, they only grow more complex,” Allspaw says. “More engineering teams are recognizing that they can’t predict or even understand the entirety of their infrastructure. Chaos engineering helps update their mental models for this new world.”

The discipline of chaos

Netflix began its work with chaos engineering in 2010, alongside its migration from physical data centers to the cloud, explains Plsek. "They worried about what might happen if particular AWS instances went down, or if certain services were unavailable," he says. "The whole ecosystem was, and is, evolving day by day, and no single person knows how the entire thing works."

So they created Chaos Monkey. The idea was simple enough: It shut off different systems at random. "It really made people more aware of their dependencies," Plsek says.
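The core idea of random shutdowns can be illustrated with a toy Python sketch. This is not Chaos Monkey's code or configuration: the fleet layout, the group names, and the `pick_victims` and `terminate` helpers are hypothetical, and "terminating" an instance here only removes it from an in-memory dict. A seeded `random.Random` keeps a run reproducible.

```python
"""A toy, Chaos Monkey-style picker: shut off one random instance per group."""
import random


def pick_victims(groups: dict[str, list[str]], rng: random.Random) -> dict[str, str]:
    """Choose one instance to terminate from every non-empty group."""
    return {name: rng.choice(instances) for name, instances in groups.items() if instances}


def terminate(groups: dict[str, list[str]], victims: dict[str, str]) -> dict[str, list[str]]:
    """Return a new fleet with the chosen instances removed (simulated only)."""
    return {
        name: [i for i in instances if i != victims.get(name)]
        for name, instances in groups.items()
    }


if __name__ == "__main__":
    # Hypothetical fleet: group name -> running instance IDs.
    fleet = {"api": ["api-1", "api-2", "api-3"], "db": ["db-1", "db-2"]}
    victims = pick_victims(fleet, random.Random(42))
    survivors = terminate(fleet, victims)
    # The interesting question is what the *system* does next, e.g. whether
    # every group still has capacity to serve traffic.
    print(victims, survivors)
```

Randomness is the point of the design: because nobody knows in advance which instance disappears, teams are pushed to make every group tolerate the loss of any member rather than protecting a few favored machines.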

Netflix first discussed Chaos Monkey in a blog post that captured the public imagination at a time when many other companies were also migrating to the cloud, or at least relying more heavily on both cloud APIs and internal microservices. "I remember reading this post years before I joined Netflix and it completely changed how I thought about service development," Plsek says.

Netflix followed the blog post by open sourcing Chaos Monkey in 2012. That made it easier for people at other companies to start using it. "Chaos Monkey really was the start of everything else," Hellegouarch says.

As the idea of chaos engineering spread, the open source community developed more tools to adapt the practice to a wider variety of organizations. "We were trying to sell our data management product to a large financial services company," explains Umasankar Mukkara, co-founder of MayaData and, more recently, Chaos Native. "Our customers were confident in the many benefits of migrating to the cloud, such as the ability to deliver features more quickly. But they wanted us to prove the product was reliable."

Mukkara and his team realized that they could use chaos engineering to test their system's resilience and began exploring options. They didn't want to use a proprietary solution, and Chaos Monkey didn't meet their needs. "We wanted something designed to work with Kubernetes," he explains. "Almost all new software will be written in a cloud native way. We needed tools that fit well into that type of environment."

So they built their own chaos engineering platform, which they later open sourced as Litmus. "Open source is the norm now," Mukkara says. "The Kubernetes ecosystem is driven by open collaboration, so it was natural for us to share what we were working on with the larger DevOps community. The response was super positive." The project now has more than 100 contributors. "We get lots of feedback from both companies and individuals about things like how we can build the tool more effectively and better manage the system's lifecycle."

Hellegouarch and company took a slightly different approach with Chaos Toolkit. Rather than try to build a tool specific to their own environment, they opted to create a minimal foundation that others could build upon.

“We started using Chaos Toolkit because of the customizability,” says Roei Kuperman, a full-stack developer at hosting company Wix. “It was easy to create plugins that fit our needs, or to extend the core project,” he says. For example, Wix contributed dynamic configuration features to the core Chaos Toolkit project.

That’s what makes open source so important for chaos engineering: “Anyone can write extensions that meet their own particular requirements,” Hellegouarch says. “There's not really any way for a single company or open source project to solve everyone's needs. I've never used Azure, so I can't create a tool that addresses the needs of an Azure environment. But together, the community can build tools for any conceivable environment.”

Chaos everywhere

There's a clear sense in the community that chaos engineering is part of something larger, and that chaos engineering tools won't necessarily always stand alone. Historically, chaos engineering has been part of Ops, so it makes sense to couple it tightly with Ops functions like observability.

Jeli focuses on "incident analysis," a practice complementary to chaos engineering that centers on learning in the aftermath of outages or other IT incidents. "I think incident analysis is necessary for chaos engineering to be successful," Jones says. "You need to learn from your chaos experiments, and you need to create chaos experiments based on what you learn from other incidents. Incident analysis can be a way to learn what's important to your customers and what you need to test. I see chaos engineering and incident analysis going hand in hand."

Meanwhile, much like security, chaos engineering is “shifting left” to the “Dev” half of DevOps. "The big thing we see is building chaos culture into the whole company," Hellegouarch says. "I expect we'll see chaos engineering tools that support multiple personas, including observability and incident analysis as well as developers and QA. The shift is already happening."

At Wix, chaos engineering has historically been part of Ops and tightly coupled with observability and alerting tools, but is spreading to the application layer as well. “I think chaos engineering will always be principally part of operations, but in the near future, it will be a part of the CI/CD process of the infrastructure development teams as well,” Kuperman says. The trend is also exemplified by the recent acquisition of Chaos Native by CI/CD platform Harness.

Open source will continue to drive the expansion of chaos engineering, and more importantly, chaos thinking throughout organizations. All of these projects offer not just tools for running chaos experiments but also guidance on how to practice the discipline. "The IT industry needs to learn collectively to solve the big problems we all face, like security and reliability," Hellegouarch says. "Open source is the key way we do that."