Developers around the world, regardless of background or subject matter, have something in common: we have code tasks that need to be performed at different points of the development lifecycle.

This can include a variety of things like analysis of the code, where you might be checking for syntax errors or security issues. It can also include figuring out a way to get that code somewhere that people can use it.

These tasks help to ensure the quality of the products we intend to ship, but they also have another thing in common: they require something to trigger them.

If automation isn’t set up, that means a human must complete these tasks. As much as we pride ourselves on our ability to always run them, things happen and human error gets in the way. That could be simply forgetting to run something or maybe a coworker is handling deploying the project when you’re on PTO and they’re not aware of all the scripts that must be run.

What can we do to remove as much of that human error out of the equation as possible? How can we make robots do the grunt work so we can get back to solving the real problems?

What are some common code tasks?

Each code task plays a different but equally important role in the software development lifecycle. Some tasks might feel more important depending on the team who it impacts the most, but they all provide different means to the end goal of shipping high quality software.

Linting & Formatting

Linting and formatting are two different but somewhat related tasks.

Linting is a process where input code is analyzed and checked for bugs or stylistic issues, based on a set of rules. Depending on the team, this might include things like whether or not each JavaScript statement includes a semicolon or a way to flag unused dependency import statements. It also provides a way for teams to programmatically find syntax errors that might not have popped up in your local environment.

Formatting extends this. Many of the cases that a linter might flag can be programmatically fixed by a formatter.

Both of these tools end up saving us time. It prevents us from needing to have code style discussions in an unrelated Pull Request and helps to prevent bugs that are easy for a computer to catch yet easy for a human to miss. But it’s not the only solution for maintaining quality.

Running tests

Going further on the idea of programmatically trying to catch bugs is the process of building and running tests.

Tests come in a lot of different forms. The common buckets are unit, integration, end-to-end, and visual tests. They each play their own role in a larger suite of tests that help teams build quality software.

That quality software is built for another entity to use, whether it’s a human or another robot. As developers, we need to make sure we’re maintaining that functionality for our users, and tests are a way to do that.

Deployments

When we’re confident that a particular version or release will perform and meet our expectations, we ultimately deploy it.

The code needs to go somewhere in order for it to be used. That might include compiling that code or spinning up external resources that it depends on.

Orchestrating all of the steps needed to reach production, everything involved from building to automation and testing, is both a beautiful and nerve-racking thing. When it works, it’s a pretty fluid process, but hitting a snag or missing a step can easily lead to stress and anxiety.

Security checks & Code analysis

Finally we need to make sure that the product we deploy is secure and safe to use.

Security checks are a commonly glossed over code task where the lessons learned unfortunately tend to come after an incident occurs.

There really isn’t an excuse for this. We’re liable to our customers for the code we create. Security is just as critical to the development cycle as any other part and the tools we have available help make it easy to integrate into existing workflows.

But that liability doesn’t only come in the form of security checks, it also comes along with code analysis.

While we touched a little bit on the linting side of code analysis, it extends beyond your code and into other people's code.

A beautiful part of the developer ecosystem is the abundance of 3rd party libraries that we can use in our application. But while that library might be free to view on GitHub, that doesn’t mean you have the license to use it in your commercial application.

Code analysis can also help look for licensing issues and even run additional security checks on code you might not have been aware of.

All of these pieces work together to keep your customers safe and help you maintain a high level of quality for your product.

Where code tasks fail

Most if not all of these tasks are typically easy to argue for. Telling a computer to do something that will help prevent bugs or security issues is something anyone can get on board with. While the code task itself might be as easy as pushing a button or running a few commands, in reality, sometimes that button doesn’t get pushed and the task doesn’t work out in the end.

Human error

One thing all of these code tasks have in common is they require something to initiate them. More often than not, at some point of the development lifecycle, that's a human, whether it's clicking deploy or running a script.

Nobody’s perfect and this isn’t meant to shame anyone who forgot to run a test before deploying to production (my hand is raised), but we need to be realistic when setting expectations for ourselves and figure out ways to reduce that margin of error as much as we can.

Time

The fact of the matter is this stuff also takes time. That’s valuable time that can be extremely limited depending on the team.

When requiring humans to kick off these processes, we have to depend on them to all be available at the times we need. This becomes especially challenging as our world continues to move to a global workforce with asynchronous schedules.

And that’s time we can’t spend solving other challenges. That’s time we’re sitting waiting for a deployment to finish so that we can make sure that nothing failed.

Other human error

If you’re part of a small team (or even large teams), you might have experienced times when there is only one person who knows how to do something. While there are other fundamental issues with this, it means that a single person is needed to perform those processes. What if that person is on PTO for a day? What if they’re sick without notice? If you’re lucky, that person might have written down some instructions or given someone a quick walkthrough. But are you sure they covered all the steps?

That step that was left out could be a critical point of failure that brings down your infrastructure when someone tries to access it on the web. Or it could be as simple as forgetting to properly version the package, which comes with its own set of problems

How automation can help

Fortunately, we’re living in a time where we have an abundance of high quality tools that can help us automate these tasks, removing as much of that margin of error as we can.

While it might not be able to smooth out some of the more fundamental issues in a team’s workflow, we can take advantage of different types of automation and use them to have the most impact within our projects.

Scripts

Scripts are probably the most fundamental type of automation in our toolbelt.

Your project probably already has some level of scripting, even if that means calling the build command from your framework of choice. But we can take that a step further and script our scripts!

Consider the process of automating application deployment. As we talked about earlier, that can include a variety of different steps including deploying different services or even making sure you increment your build version.

We can take all of these commands that we would typically run manually and put them in a shell script (or the language of your choice).

#!/bin/bash

# Setup Project

git clone https://github.com/colbyfayock/my-cool-project

cd my-cool-project

# Install Dependencies

npm ci

# Run all of the tests

npm run test:ci

# Version and tag

PACKAGE_VERSION=$(npm version patch)

git tag $PACKAGE_VERSION

git push --tags

# Deploy CF stacks

aws cloudformation deploy --stack-name my-cool-stack --template-file ./deploy/my-cool-stack.yaml

# Run end to end tests

npm run e2e:ci

This can give you confidence that whether you or your coworker is the one deploying, you’ll hit all of the steps of your deployment process.

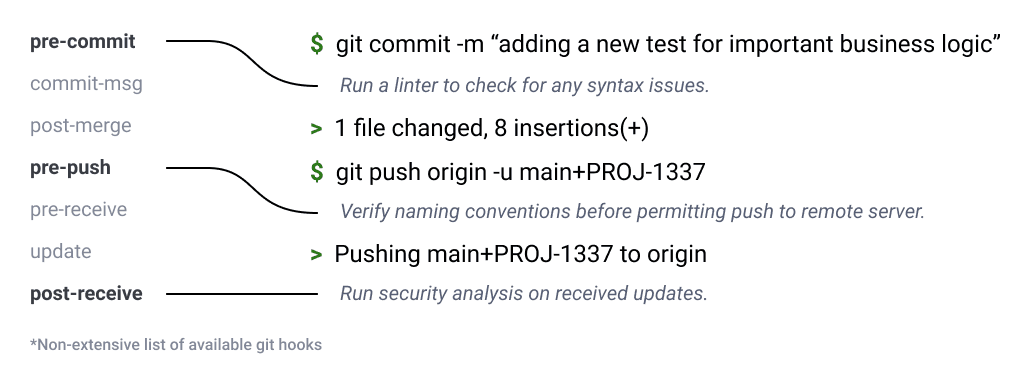

Git hooks

Taking this a step further, we begin to automate triggering these scripts with tools like Git hooks. One of the cool things about our favorite versioning tool is that Git makes hooks available through the different parts of the workflow.

For instance, a pretty common Git hook is pre-commit, which, just as it sounds, triggers right before the actual commit occurs.

While that probably isn’t the right place to run tests—especially if you have a large test suite—it’s a perfect opportunity to run tools like linters and formatters. Linters can quickly double check for syntax errors while your formatter is making style changes, without ever impacting your workflow.

Using tools like husky, we can manage a pre-commit hook or any other git hook right in our code. With husky installed, we can run:

npx husky add .husky/pre-commit "npm run lint && npm run format"

Which creates a new script that husky will tell Git to run any time that hook is triggered.

If you end up with any syntax errors that the formatter can’t fix, the pre-commit hook will break, and you’ll have an opportunity to fix it. Otherwise, you’ll be off with a fresh new commit that’s automatically cleaned up and ready to push to remote!

CI/CD

As you can imagine, Git hooks can be limited. We’re not going to want to put all of those infrastructure scripts in a Git hook, where often those pipelines are very complex and take a good amount of time.

That’s where Continuous Integration and Continuous Deployment (CI/CD) step in, where you’re able to have complete control of your entire environment and automate any part of the process.

A common CI/CD approach is to still take advantage of the Git lifecycle. Instead of configuring a script to trigger during a particular stage, you would configure your CI/CD process to trigger any time a commit is pushed to a branch.

As soon as that commit is pushed up to the remote server, the CI/CD environment would pick up that change, check the code out on that environment, and perform any functions you choose. This typically includes installing dependencies, building the project, running tests, and ultimately deploying it.

Infrastructure usually isn’t as easy as running a single build and deploy command. Since you have complete control over the environment, you can also include deploying your cloud infrastructure, whether that’s an AWS CloudFormation template or manually putting commands together with the CLI.

pipeline {

stages {

stage('Prepare') {

checkout scm

sh 'node -v'

sh 'npm ci'

}

stage('Build') {

sh 'npm run build'

}

stage('Test - Pre Deploy') {

sh 'npm test:ci'

}

stage('Deploy') {

echo "Deploying environment ${PROJECT_ENVIRONMENT}"

sh '''

aws cloudformation deploy \

--stack-name my-cool-stack \

--template-file ./deploy/my-cool-stack.yaml \

--parameters ProjectEnv="${PROJECT_ENVIRONMENT}"

'''

}

stage('Test - Post Deploy') {

sh 'npm run e2e:ci';

}

}

}

The above Jenkins example is arguably still “simple” - it’s pretty much the script we saw earlier split out into stages - but even this small step starts to provide benefits.

Breaking down our workflow into these stages allows us to gain better insight into each sequence of events while allowing this process to scale in a meaningful way. We can start gauging time and resource metrics to see how much a deployment costs. As we see a little in the above, we can also have environment specific configurations depending on if it’s the production branch or if it’s going to a staging environment.

Ultimately the goal is to give you and your team the ability to take control of your development workflow and automate it in a meaningful way, helping to get process out of the way of humans, while maintaining a high level of quality for your customers.

GitHub Actions

Actions are a feature from GitHub that allow you to automate your code tasks and workflows right inside of your existing repositories. While Actions are technically CI/CD, they’re a bit more interesting in how they're integrated into the GitHub ecosystem including Octokit.

Because they’re kind of already “part” of GitHub repositories, integrating with Actions tends to have a bit of a lower barrier of entry. Creating a new Action workflow is as simple as creating a new .yml file in your repository or using GitHub's set of examples to easily get started.

The best part is you still gain a lot of the same flexibility with Actions as you do with traditional CI/CD services.

jobs:

build:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: Use Node.js 14.x

uses: actions/setup-node@v2

with:

node-version: 14.x

- run: npm ci

- run: npm run build

- name: Test - Pre Deploy

run: npm test:ci

- name: Deploy

run: |

aws cloudformation deploy \

--stack-name my-cool-stack \

--template-file ./deploy/my-cool-stack.yaml \

- name: Test - Post Deploy

run: npm e2e:ci

In this example of a build job, we’re still providing a similar workflow to the examples we saw earlier, where we’re installing dependencies, building the project, testing the project, and deploying it. We’re even splitting it up into different steps, which like traditional CI/CD, gives us insights like timing into each step.

What makes this interesting is that you’re defining your environment and configuration straight from that YAML file. You no longer need to maintain servers or environments ready to spin up a new build. GitHub takes care of all of that for you with a simple line stating what base environment you’d like to use.

One of my other favorite parts of this is you can even run scheduled jobs. Using a similar YAML-based configuration, we can specify our schedule with cron syntax:

on:

schedule:

- cron: "0 14 * * *"

jobs:

reporting:

runs-on: ubuntu-latest

In this example, we’re setting up a daily job that could be something important to the business like building reports. As another example, you could be like me and set up some simple daily reminders in a GitHub repo.

Regardless, Actions give us the flexibility to take control of our automated workflows without much hassle, making it a great place for both beginners to automation and seasoned developers alike.

Taking back quality with automation

Automation can be one of the most powerful tools at our disposal, but often it’s pushed aside due to feature prioritization or feared complexity. Luckily the tools that help us automate in the first place have matured to a point where the barrier of entry and required time commitment is lower than ever before. With a little up front investment, we can make better use of the time we spend working on code tasks, freeing up more time to spend on the unique challenges of the world, automating away the repetitive tasks that are holding us back from a better product.