
Latest News 🔥

- [2024.11] rLLM has been recognized by Snowflake (AI Data Cloud leader, NYSE-listed with a $57.46B market cap) as a useful tool for RTL-type tasks. See the paper: arXiv:2411.11829.

- [2024.10] We have added the state-of-the-art GNN method OGC [TNNLS 2024] and the TNN methods ExcelFormer [KDD 2024] and Trompt [ICML 2023].

- [2024.08] rLLM is supported by the CCF-Huawei Populus Grove Fund, Database Track (CCF-华为胡杨林基金数据库专项). The project focuses on tabular data governance for AI tasks. Watch a short introduction video on Huawei's official account on 📺 Bilibili.

- [2024.07] We have released rLLM (v0.1), and the detailed documentation is now available: rLLM Documentation.

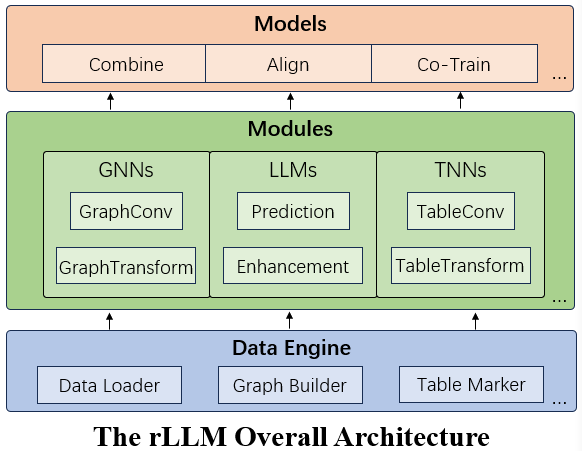

rLLM (relationLLM) is an easy-to-use PyTorch library for Relational Table Learning (RTL) with LLMs. It serves two key functions:

- Breaks down state-of-the-art GNNs, LLMs, and TNNs into standardized modules.

- Facilitates building novel models in a "combine, align, and co-train" manner.
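To illustrate the "combine" and "align" ideas, here is a minimal pure-Python sketch under stated assumptions. It is not rLLM's API. Every module is assumed to expose a single `forward` method. The hypothetical `RowEncoder` stands in for a TNN, the hypothetical `MeanNeighborAggregator` stands in for a one-layer GNN, and the hypothetical `Combine` chains them. "Align" is read here in one simple sense: table row *i* becomes graph node *i*. The co-training step needs an autograd framework such as PyTorch, so it is left out.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


class RowEncoder:
    """Hypothetical TNN stand-in: turns each table row into a numeric feature vector."""

    def __init__(self, columns: Sequence[str]) -> None:
        self.columns = list(columns)

    def forward(self, rows: List[Dict[str, float]]) -> List[Vector]:
        # Align: output vector i comes from table row i, so row i can act as graph node i.
        return [[float(row[c]) for c in self.columns] for row in rows]


class MeanNeighborAggregator:
    """Hypothetical one-layer GNN stand-in: averages each node with its neighbours."""

    def __init__(self, edges: List[Tuple[int, int]]) -> None:
        self.edges = edges

    def forward(self, x: List[Vector]) -> List[Vector]:
        # Start every neighbourhood with a self-loop, then add each edge in both directions.
        neighbours: Dict[int, List[int]] = {i: [i] for i in range(len(x))}
        for src, dst in self.edges:
            neighbours[src].append(dst)
            neighbours[dst].append(src)
        out: List[Vector] = []
        for i in range(len(x)):
            group = [x[j] for j in neighbours[i]]
            # Column-wise mean over the node and its neighbours.
            out.append([sum(col) / len(group) for col in zip(*group)])
        return out


class Combine:
    """Combine: chain modules that all expose the same forward() interface."""

    def __init__(self, *modules) -> None:
        self.modules = modules

    def forward(self, data):
        for module in self.modules:
            data = module.forward(data)
        return data


# Usage: three table rows become three graph nodes; rows 0 and 1 are linked.
rows = [
    {"age": 20.0, "score": 1.0},
    {"age": 40.0, "score": 3.0},
    {"age": 60.0, "score": 5.0},
]
model = Combine(RowEncoder(["age", "score"]), MeanNeighborAggregator([(0, 1)]))
print(model.forward(rows))  # [[30.0, 2.0], [30.0, 2.0], [60.0, 5.0]]
```

In a real model, each stage would be a trainable `torch.nn.Module`. Co-training would then mean optimizing the parameters of both stages against a single loss.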

As an example, let's run BRIDGE, an RTL-type method:

```bash
cd ./examples  # run from the repository root
# set parameters if necessary
python bridge/bridge_tml1m.py    # TML1M dataset
python bridge/bridge_tlf2k.py    # TLF2K dataset
python bridge/bridge_tacm12k.py  # TACM12K dataset
```

Highlight Features

- LLM-friendly: Modular interface designed for LLM-oriented applications, integrating smoothly with LangChain and Hugging Face Transformers.

- One-Fit-All Potential: Processes various graphs (e.g., social, citation, and e-commerce networks) by treating them as multiple tables linked by foreign keys.

- Novel Datasets: Introduces three new relational table datasets for RTL model design. They include standard classification tasks, with examples.

- Community Support: Maintained by students and teachers from Shanghai Jiao Tong University and Tsinghua University. Supports the SJTU undergraduate course "Content Understanding (NIS4301)" and the graduate course "Social Network Analysis (NIS8023)".
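The "graphs as tables linked by foreign keys" idea can be made concrete with a small pure-Python sketch. It is not rLLM's API, and the table layouts and the helper `tables_to_edge_index` are hypothetical. It turns a papers table (primary key `paper_id`) and a citations table (two foreign keys into `papers`) into the source/target edge lists a GNN would consume.

```python
from typing import Dict, List, Tuple

# Hypothetical "papers" table: primary key paper_id plus a feature column.
papers: List[Dict[str, object]] = [
    {"paper_id": "p1", "title": "GNNs"},
    {"paper_id": "p2", "title": "Transformers"},
    {"paper_id": "p3", "title": "Tabular DL"},
]

# Hypothetical "cites" table: each row holds two foreign keys into `papers`.
cites: List[Dict[str, str]] = [
    {"citing": "p2", "cited": "p1"},
    {"citing": "p3", "cited": "p1"},
    {"citing": "p3", "cited": "p2"},
]


def tables_to_edge_index(
    nodes: List[Dict[str, object]],
    links: List[Dict[str, str]],
    key: str,
    src_col: str,
    dst_col: str,
) -> Tuple[List[int], List[int]]:
    """Map primary keys to row positions, then resolve each foreign-key pair to an edge."""
    index = {row[key]: i for i, row in enumerate(nodes)}
    src: List[int] = []
    dst: List[int] = []
    for row in links:
        # A KeyError here signals a dangling foreign key (a referential-integrity violation).
        src.append(index[row[src_col]])
        dst.append(index[row[dst_col]])
    return src, dst


edge_index = tables_to_edge_index(papers, cites, "paper_id", "citing", "cited")
print(edge_index)  # ([1, 2, 2], [0, 0, 1])
```

The result uses a `[sources, targets]` layout, which is the same convention PyTorch Geometric uses for `edge_index`. Passing it to `torch.tensor(...)` would give a 2 × E tensor. Rows of `papers` stay aligned with node indices, so their encoded features can serve as node features.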

rLLM includes over 15 state-of-the-art GNN and TNN models, ideal for both standalone use and building RTL-type methods. Highlighted models include:

- OGC: From Cluster Assumption to Graph Convolution: Graph-based Semi-Supervised Learning Revisited [TNNLS 2024] [Example]

- ExcelFormer: ExcelFormer: A Neural Network Surpassing GBDTs on Tabular Data [KDD 2024] [Example]

- TAPE: Harnessing Explanations: LLM-to-LM Interpreter for Enhanced Text-Attributed Graph Representation Learning [ICLR 2024] [Example]

- Label-Free-GNN: Label-free Node Classification on Graphs with Large Language Models [ICLR 2024] [Example]

- Trompt: Towards a Better Deep Neural Network for Tabular Data [ICML 2023] [Example]

- ...

Roadmap

- Code structure optimization

- Support for more TNNs

- Large-scale RTL training

- LLM prompt optimization

Citation

```bibtex
@article{rllm2024,
  title={rLLM: Relational Table Learning with LLMs},
  author={Weichen Li and Xiaotong Huang and Jianwu Zheng and Zheng Wang and Chaokun Wang and Li Pan and Jianhua Li},
  year={2024},
  eprint={2407.20157},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2407.20157},
}
```