
Feature Request: Add Mish activation #1060

Comments

Thank you for the feature request. We will implement the Mish activation function.

Thank you for considering the request.

@Laicheng0830 Hi, just checking on the update for this Feature Request. Is it still on the roadmap?

We will update this feature. @digantamisra98


Mish is a novel activation function proposed in this paper.

It has shown promising results so far and has been adopted in several packages including:

All benchmarks, analysis and links to official package implementations can be found in this repository

Mish was also recently used in a submission to the Stanford DAWN CIFAR-10 Training Time Benchmark, where it reached 94% accuracy in just 10.7 seconds, which is currently the best score on 4 GPUs and the second fastest overall. Additionally, Mish has been shown to improve the convergence rate, requiring fewer epochs. Reference -

Mish has also shown consistently improved ImageNet scores and is more robust. Reference -

Additional ImageNet benchmarks, along with network architectures and weights, are available in my repository.

Summary of vision-related results:

It would be nice to have Mish as an option within the activation function group.
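For reference, Mish is defined as `x * tanh(softplus(x))`, where `softplus(x) = ln(1 + e^x)`. Below is a minimal scalar sketch of that formula in plain Python. The function names `softplus` and `mish` are only illustrative and are not an existing API of this library; an actual implementation would presumably operate on tensors using the framework's own ops.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable softplus: ln(1 + e^x)."""
    # max(x, 0) + log1p(exp(-|x|)) is algebraically equal to ln(1 + e^x)
    # but avoids overflow when x is a large positive number.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    # Print a few sample points to show the shape of the function.
    for value in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"mish({value:+.1f}) = {mish(value):+.6f}")
```

The function is smooth, passes through zero at the origin, approaches the identity for large positive inputs, and approaches zero for large negative inputs.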

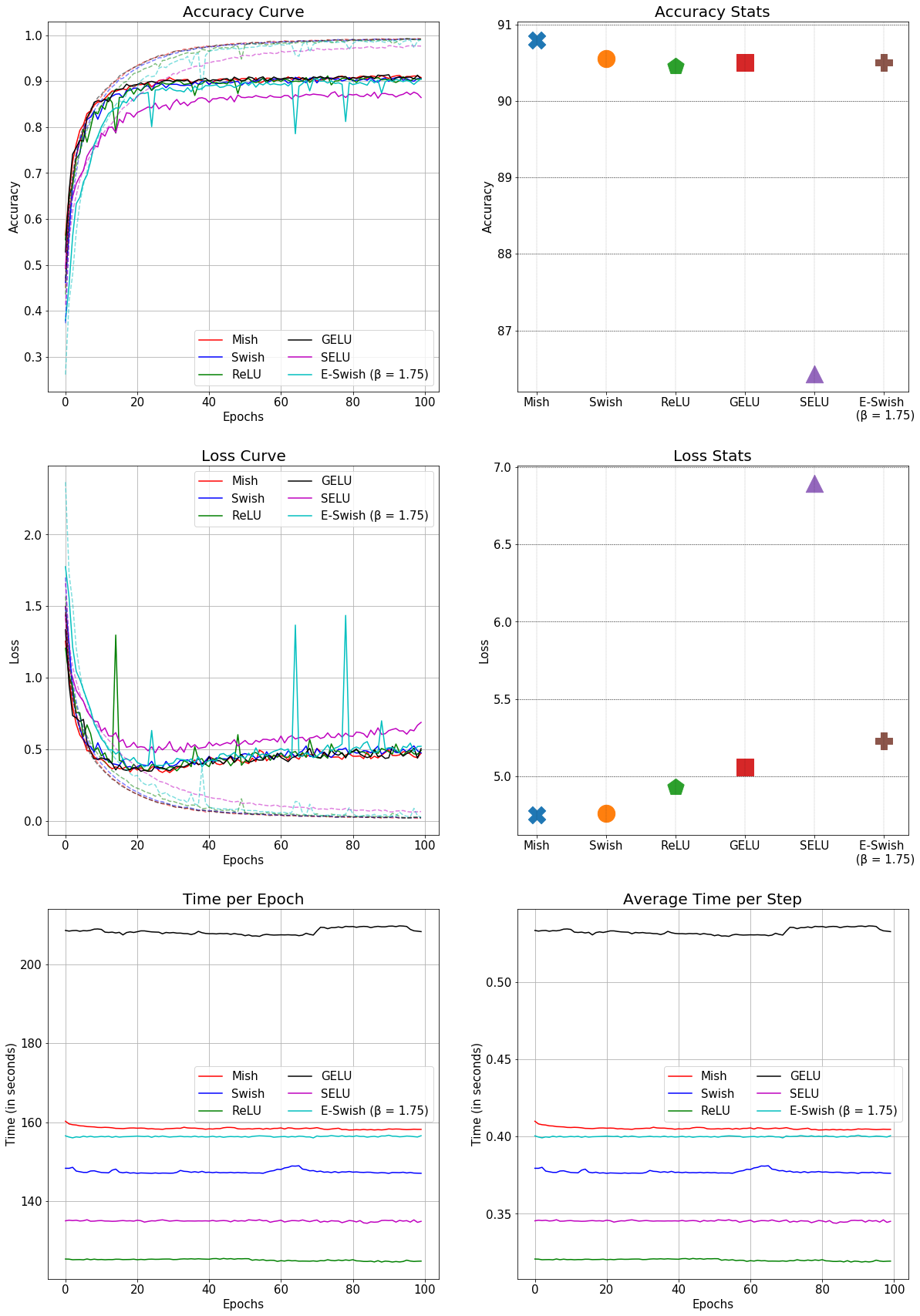

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10:
