
Batch Detection #7683


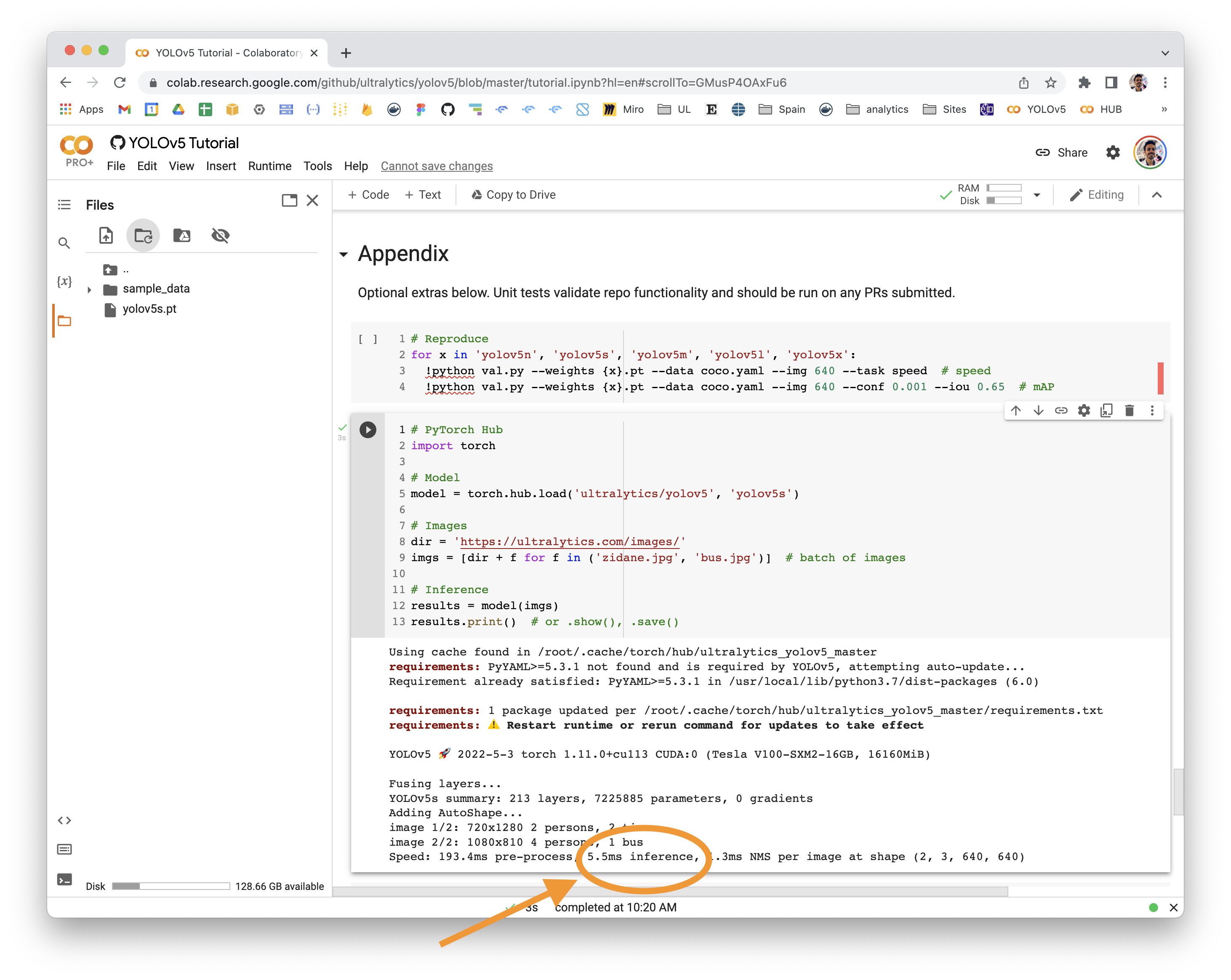

@rafcy 👋 Hello! Thanks for asking about inference speed issues. PyTorch Hub speeds will vary by hardware, software, model, inference settings, etc. Our default example in Colab with a V100 looks like this:

YOLOv5 🚀 can be run on CPU (i.e.

detect.py inference

```bash
python detect.py --weights yolov5s.pt --img 640 --conf 0.25 --source data/images/
```

YOLOv5 PyTorch Hub inference

```python
import torch

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Images
dir = 'https://ultralytics.com/images/'
imgs = [dir + f for f in ('zidane.jpg', 'bus.jpg')]  # batch of images

# Inference
results = model(imgs)
results.print()  # or .show(), .save()
# Speed: 631.5ms pre-process, 19.2ms inference, 1.6ms NMS per image at shape (2, 3, 640, 640)
```

Increase Speeds

If you would like to increase your inference speed some options are:

Good luck 🍀 and let us know if you have any other questions!

Hello @glenn-jocher, and thank you for the response. So, from the tests I ran in Colab, batch detection does not help speed up the detection process at all, right?

I have seen that; I also ran my own test in Colab to check the results. The inference time per image does decrease a bit as the batch size increases, but the overall delay gets much larger. I know my questions may sound repetitive, but I am just trying to figure out whether YOLOv5 batch detection works for my application.

(Table: Batch Size vs. YOLOv5s)

Thank you in advance.
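One way to separate the per-image figure from the end-to-end delay described above is to time the whole batched call with a wall clock. This is a minimal sketch, assuming a hypothetical `run_batch` callable that performs pre-processing, inference and NMS for one batch (for example a wrapper around the hub model call); `time_batch` is also a hypothetical helper, not part of YOLOv5. If the callable returns before asynchronous GPU work has finished, it should synchronize first (in PyTorch, `torch.cuda.synchronize()`), otherwise that work is not counted.

```python
import time
from typing import Any, Callable, Dict, Sequence


def time_batch(run_batch: Callable[[Sequence[Any]], Any],
               batch: Sequence[Any],
               repeats: int = 3) -> Dict[str, Any]:
    """Measure the end-to-end wall-clock time of one batched call.

    run_batch is a hypothetical callable covering pre-processing, inference
    and NMS for the whole batch. If it launches asynchronous GPU work it must
    wait for it (e.g. torch.cuda.synchronize()) before returning.
    """
    if not batch or repeats < 1:
        raise ValueError("batch must not be empty and repeats must be >= 1")
    run_batch(batch)  # warm-up call, excluded from the measurement
    best = float("inf")
    for _ in range(repeats):
        t0 = time.perf_counter()
        run_batch(batch)
        best = min(best, time.perf_counter() - t0)
    n = len(batch)
    return {
        "batch_size": n,
        "latency_s": best,        # delay until the whole batch is finished
        "per_image_s": best / n,  # the kind of figure a "per image" summary shows
        "images_per_s": n / best if best > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Dummy CPU workload standing in for the model; replace with a real call
    def dummy(batch):
        return [sum(range(200_000)) for _ in batch]

    for bs in (1, 4, 16):
        print(time_batch(dummy, list(range(bs))))
```

Comparing `latency_s` and `per_image_s` across batch sizes makes both effects visible at once: batching may lower the per-image figure while the time until the whole batch is finished grows.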

👋 Hello, this issue has been automatically marked as stale because it has not had recent activity. Please note it will be closed if no further activity occurs. Access additional YOLOv5 🚀 resources:

Access additional Ultralytics ⚡ resources:

Feel free to inform us of any other issues you discover or feature requests that come to mind in the future. Pull Requests (PRs) are also always welcomed! Thank you for your contributions to YOLOv5 🚀 and Vision AI ⭐!

Search before asking

YOLOv5 Component

No response

Bug

Hello everyone,

I am trying a tiling approach: take an image, split it into patches, and batch-detect objects on those patches. Instead of a speedup, I get much more delay. I don't know what I am doing wrong, but my results are nowhere near the batched inference times mentioned in the documentation. I get about 20 FPS running inference on a single 1080p image (using a custom-trained YOLOv5s model), but when I split the image into 15 patches I get 5 FPS.
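The tiling step described above can be sketched as follows. This is a minimal NumPy version, assuming the frame is an H×W×C array (e.g. as loaded by OpenCV) and that the 15 patches come from a 3×5 grid over a 1080p frame; the grid shape and the helper name `tile_grid` are hypothetical, not taken from the issue. Each patch keeps its (x, y) offset so detections can later be shifted back into full-frame coordinates.

```python
import numpy as np


def tile_grid(img: np.ndarray, rows: int, cols: int):
    """Split an HxWxC image into rows*cols non-overlapping patches.

    Returns (patches, offsets), where offsets[k] is the (x0, y0) position of
    the top-left corner of patches[k] in the original image.
    """
    h, w = img.shape[:2]
    ys = np.linspace(0, h, rows + 1).astype(int)  # row boundaries
    xs = np.linspace(0, w, cols + 1).astype(int)  # column boundaries
    patches, offsets = [], []
    for i in range(rows):
        for j in range(cols):
            # Slicing returns a view into img, so no pixel data is copied here
            patches.append(img[ys[i]:ys[i + 1], xs[j]:xs[j + 1]])
            offsets.append((int(xs[j]), int(ys[i])))
    return patches, offsets


if __name__ == "__main__":
    frame = np.zeros((1080, 1920, 3), dtype=np.uint8)  # placeholder 1080p frame
    patches, offsets = tile_grid(frame, rows=3, cols=5)
    print(len(patches), patches[0].shape, offsets[-1])
    # 15 (360, 384, 3) (1536, 720)
```

The resulting list of patches can be passed to the hub model in a single call, in the same way as the `imgs` list in the PyTorch Hub example.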

Things I tried:

All of these result in the same FPS, and yes, I am running on a GPU (RTX 2070).

Another example I tried is to split a 4K image into 60 patches of 512 × 512 and detect them with the PyTorch Hub example, passing them as a tuple. These are the performance results I get:

```
Speed: 5.5ms pre-process, 56.4ms inference, 0.8ms NMS per image at shape (60, 3, 640, 640)
```

However, the whole run actually took 3.7 seconds. So the reported speeds are misleading, because they are per image, which makes no sense since I wanted batched inference.
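The reported figures and the measured 3.7 seconds are consistent once the per-image times are multiplied by the batch size. A small worked check, using only the numbers from the log line above:

```python
# Per-image times (ms) from the YOLOv5 "Speed:" line for a batch of shape (60, 3, 640, 640)
pre_ms, infer_ms, nms_ms = 5.5, 56.4, 0.8
batch_size = 60

per_image_ms = pre_ms + infer_ms + nms_ms          # total time attributed to each image
batch_total_s = per_image_ms * batch_size / 1000   # time for the whole batch, in seconds

print(f"{per_image_ms:.1f} ms/image x {batch_size} = {batch_total_s:.2f} s")
# 62.7 ms/image x 60 = 3.76 s
```

In other words, the summary line is an average per image over the batch, not the latency of the batch. The log also reports a shape of (60, 3, 640, 640) although the patches were 512 × 512, so they appear to have been resized to 640 before inference; the hub model call accepts a `size` argument (e.g. `model(imgs, size=512)`), which may be worth checking against the installed YOLOv5 version.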

Please help me determine whether I am doing something wrong, or whether these results are normal and I should stop looking for a solution to my issue.

Thank you in advance.
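For fixed-size patches such as 512 × 512 on a 4K frame, the image side is usually not a multiple of the tile size, so tiles either overlap or need padding. This is a minimal plain-Python sketch of the overlapping variant, assuming a 3840 × 2160 frame and a hypothetical 10 × 6 layout (60 tiles); it is not necessarily how the 60 patches in the 4K example were produced. `tile_starts` and `to_full_frame` are hypothetical helpers: the first spreads tile origins evenly so the last tile ends exactly at the image border, the second shifts a patch-level `(x1, y1, x2, y2)` box back into full-frame coordinates.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]


def tile_starts(length: int, tile: int, n: int) -> List[int]:
    """Return n start positions so that n tiles of size `tile` cover [0, length).

    Neighboring tiles overlap when n * tile > length; the last tile ends at `length`.
    """
    if tile > length:
        raise ValueError("tile is larger than the image side")
    if n * tile < length:
        raise ValueError("n tiles are too few to cover the image side")
    if n == 1:
        return [0]
    step = (length - tile) / (n - 1)
    return [round(k * step) for k in range(n)]


def to_full_frame(box: Box, origin: Tuple[int, int]) -> Box:
    """Shift an (x1, y1, x2, y2) box from patch coordinates by the patch origin (x0, y0)."""
    x0, y0 = origin
    x1, y1, x2, y2 = box
    return (x1 + x0, y1 + y0, x2 + x0, y2 + y0)


if __name__ == "__main__":
    xs = tile_starts(3840, 512, 10)  # 10 columns
    ys = tile_starts(2160, 512, 6)   # 6 rows
    origins = [(x, y) for y in ys for x in xs]
    print(len(origins), xs[-1] + 512, ys[-1] + 512)
    # 60 3840 2160
    print(to_full_frame((10, 20, 50, 60), origins[-1]))
    # (3338, 1668, 3378, 1708)
```

Because neighboring tiles overlap, the same object can be detected in two tiles; a common follow-up is to run NMS once more over the merged full-frame boxes.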

Environment

I am using a custom-made Docker image that includes:

Minimal Reproducible Example

No response

Additional

No response

Are you willing to submit a PR?
