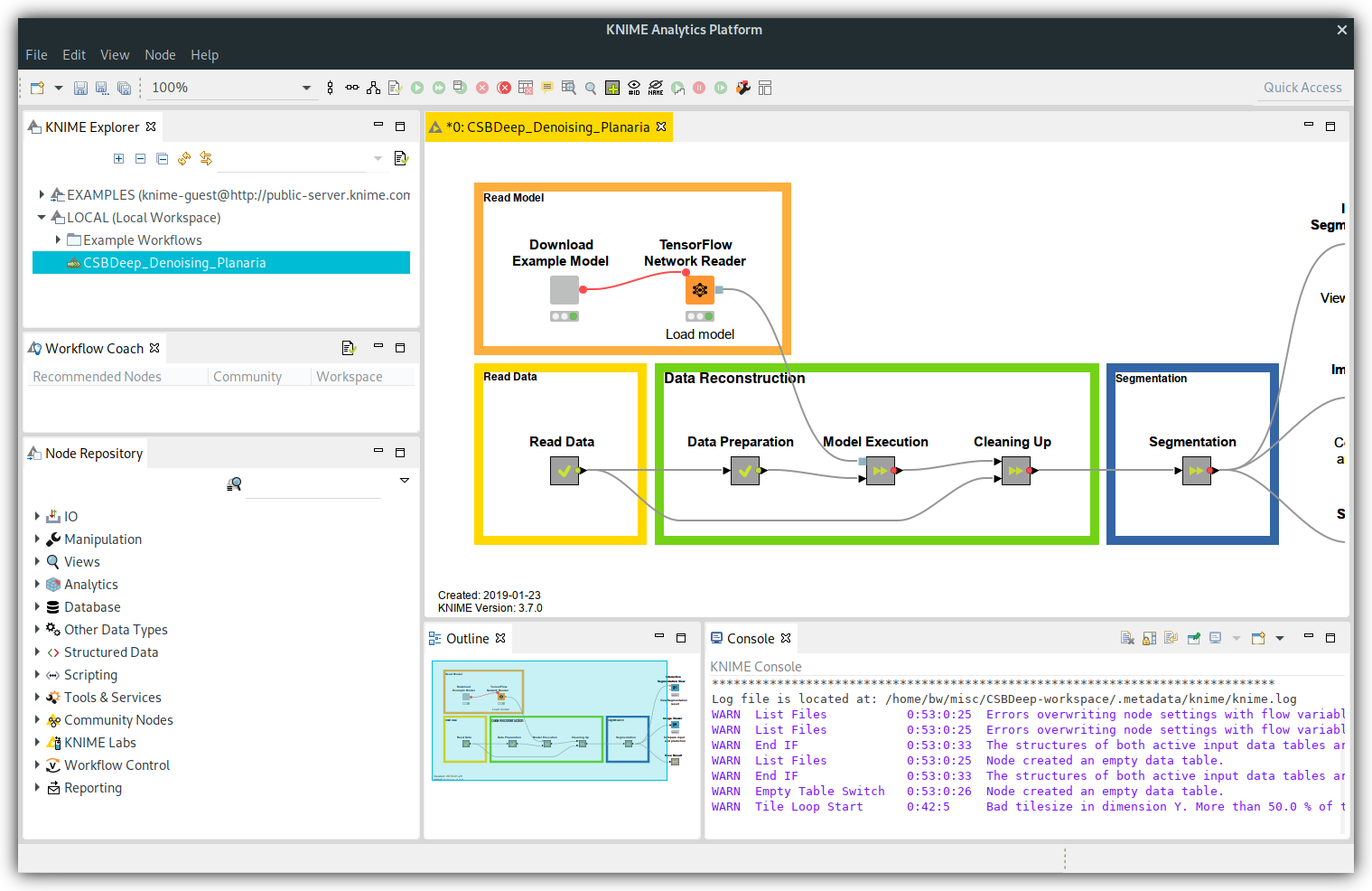

KNIME Workflow – 3D Denoising (Planaria)

This page helps you get started with the KNIME workflow for 3D denoising of planaria data.

The workflow consists of three parts: Read Data, Data Reconstruction, and Segmentation. It is organized in Metanodes, which contain workflows of their own. You can open a Metanode and see its inner workflow by double-clicking on the node.

- Read Data: Loads some images and sets variables that control the flexible parts of the workflow, such as normalization and tiling. To execute the workflow on different data, use the Image Reader node to load your images and adjust the values of the variables in the Java Edit Variable node.

- Data Reconstruction: Executes the model to reconstruct the data.

  - Data Preparation: Expands the image so that every dimension is a multiple of 4 and normalizes the image between the 3rd and 99.8th percentile (the percentiles can be changed in the Java Edit Variable node).

  - Model Execution: Executes the model on overlapping tiles of the image. The size of the tiles can be changed in the Java Edit Variable node. You need to change the tile size if you change the input images or if memory consumption is too high.

  - Cleaning Up: Removes the border by which the image was expanded in the Data Preparation step, adds the input image to the table, and splits the prediction into intensity and scale.

- Segmentation: In addition to the data reconstruction, the workflow performs a simple segmentation to illustrate how the reconstructed data can be used. It consists of a thresholding step followed by a connected component analysis. Such a simple approach gives good results only because the reconstructed data is so clean.
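
The Data Preparation step described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the code the workflow runs: the function names `padded_shape`, `percentile`, and `normalize` are hypothetical, the image is treated as a flat list of pixel values, and the linear interpolation used for the percentiles is an assumption. How the workflow fills the added border is not specified here, so the sketch only computes the target shape.

```python
import math


def padded_shape(shape, multiple=4):
    """Round every dimension up to the next multiple of `multiple`."""
    return tuple(math.ceil(n / multiple) * multiple for n in shape)


def percentile(sorted_values, q):
    """Linearly interpolated percentile (q in [0, 100]) of a sorted list."""
    pos = (len(sorted_values) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac


def normalize(values, p_low=3.0, p_high=99.8, eps=1e-20):
    """Map the p_low percentile to 0 and the p_high percentile to 1.

    Values outside the two percentiles are not clipped (an assumption),
    so they end up below 0 or above 1.
    """
    ordered = sorted(values)
    low = percentile(ordered, p_low)
    high = percentile(ordered, p_high)
    return [(v - low) / (high - low + eps) for v in values]


# Hypothetical image shape (Z, Y, X); only Z and Y need to grow.
print(padded_shape((95, 1022, 1024)))  # (96, 1024, 1024)
```

Changing `p_low` and `p_high` in this sketch corresponds to editing the percentile variables in the Java Edit Variable node.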

Install and set up KNIME as described in the Installation Instructions.

Download the workflow here.

Right-click on "LOCAL (Local workspace)" in your "KNIME Explorer" and choose "Import KNIME Workflow...".

A dialog opens where you can choose the downloaded file and press "Finish".

The "CSBDeep_Denoising_Planaria" workflow should now be in your KNIME Explorer. Double-click on it to open it.

Download the example image data or use your own images for the following steps.

To load the data, open the inner workflow of the "Read Data" Metanode by double-clicking on the node or by right-clicking and choosing Metanode->Open.

Now, you need to configure the "Image Reader" node. You can either double-click on the node or select it and press F6.

You will see a file browser where you can navigate through the files on your computer and choose files by selecting them and clicking the "Add Selected" button. On the right side of the window, you can see which files will be loaded. Select the planaria.tif example image and press the "OK" button.

Note: If you choose a much larger image, or if your computer has limited memory, you are likely to run into memory issues. See Out Of Memory Exception / High RAM usage.

All done. The KNIME workflow is now configured to read the example image. You can close the "Read Data" workflow by closing the tab and return to the main workflow.

- Whole workflow: Click on the green double arrow button in the toolbar or press SHIFT+F7

- Single node: Select the node and click on the green arrow button in the toolbar or press F7

Segmentation result:

- Right-click on the "Interactive Segmentation View" node and select "View: Interactive Segmentation View"

- Choose an image and inspect the segmentation for this image.
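
The segmentation shown in this view is produced by thresholding followed by a connected component analysis. The sketch below illustrates that idea on a small 2D image in plain Python. It is not the implementation of the KNIME nodes: the function name `label_components`, the 4-connectivity, and the threshold value are assumptions made for the example, and the workflow itself operates on 3D data.

```python
from collections import deque


def label_components(image, threshold):
    """Label 4-connected regions of pixels above `threshold`.

    `image` is a list of rows. Returns (labels, count), where background
    pixels are labeled 0 and each region gets a label from 1 to count.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] <= threshold or labels[r][c]:
                continue  # background, or already part of a region
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and image[ny][nx] > threshold
                            and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


image = [
    [0.9, 0.8, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.7],
    [0.0, 0.0, 0.6, 0.7],
]
labels, count = label_components(image, threshold=0.5)
print(count)  # 2
```

A fixed threshold like this works on noisy raw data only rarely, which is why the workflow applies it to the reconstructed image.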

Compare input and output:

- Right-click on the "Image Viewer" node and select "View: Image Viewer"

- Drag your mouse over the Image column and the Output_Intensity column to compare the input and output side by side

- Select the 'Synchronize images?' checkbox if you want to scroll through the Z dimension of both images simultaneously

You can use your own CARE model by adding a new TensorFlow Network Reader node and configuring it to load your model. The model can then be connected to the Model Execution Metanode.

Segmentation is not the only thing you can do after the data reconstruction. KNIME provides many nodes which can do sophisticated data analysis and image processing. You can modify and extend the workflow to solve your task.

You can find nodes and add them to the workflow using the 'Node Repository' and connect them by dragging a data connection from one node to another with your mouse.

More information on how to use KNIME can be found on the KNIME website.

Out Of Memory Exception / High RAM usage

If the image that is processed at once is too large, the model will allocate too much memory and either fail with an out-of-memory exception or consume all the available system memory. The workflow solves this problem by splitting the image into multiple smaller tiles and merging them again after the model has been executed.

You can adapt the number of tiles to your machine and data by editing the value of num_tiles in the Java Edit Variable node.

Rules:

- Increase num_tiles -> less memory usage, slower execution

- Decrease num_tiles -> more memory usage, faster execution
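
To see why these rules hold, consider how tiling along a single axis could work. The following Python sketch is a simplified illustration under stated assumptions: the function name `tile_ranges` and the `overlap` parameter are hypothetical, and how the workflow distributes num_tiles over the image dimensions is not described on this page.

```python
import math


def tile_ranges(length, num_tiles, overlap=0):
    """Split [0, length) into up to `num_tiles` overlapping index ranges.

    Each tile is extended by `overlap` pixels on both sides (clamped to
    the image border) so that tile borders can be discarded when merging.
    """
    step = math.ceil(length / num_tiles)
    ranges = []
    for start in range(0, length, step):
        lo = max(0, start - overlap)
        hi = min(length, start + step + overlap)
        ranges.append((lo, hi))
    return ranges


# The largest tile determines the peak memory needed for one model call.
for n in (1, 4, 8):
    largest = max(hi - lo for lo, hi in tile_ranges(1024, n, overlap=16))
    print(n, largest)
# 1 1024
# 4 288
# 8 160
```

More tiles mean smaller inputs per model call and therefore less memory, but also more calls and more overlapping pixels that are computed twice, which is where the slower execution comes from.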