-

Notifications

You must be signed in to change notification settings - Fork 247

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Improve LinkedList Base.map performance by not having to reverse the list

#763

base: master

Are you sure you want to change the base?

Conversation

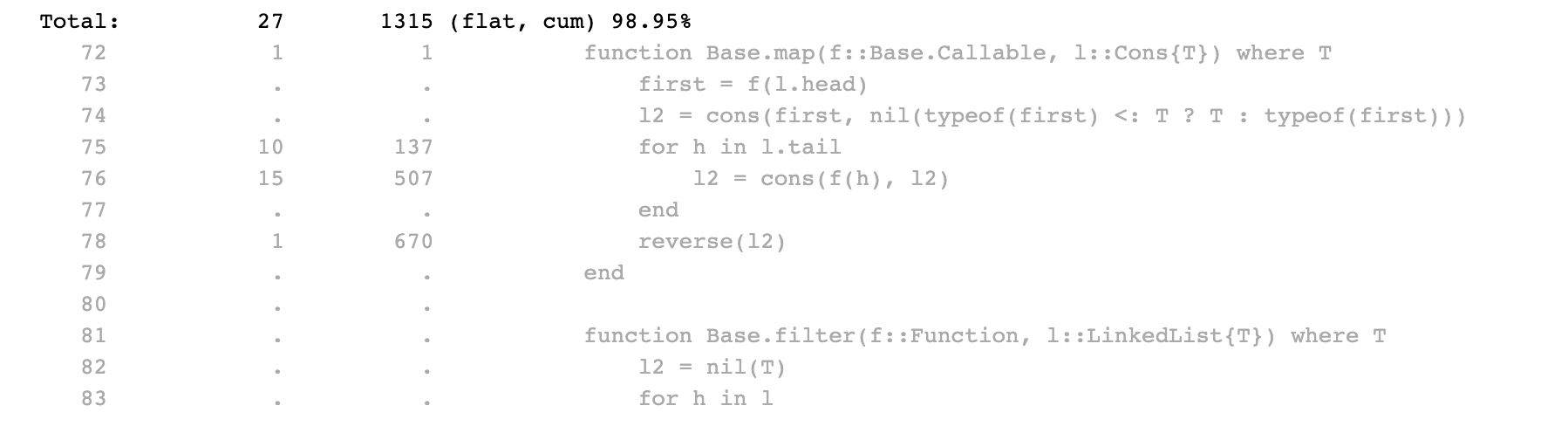

instead create it recursively in-order, via tail-recursion Big perf improvement: Before: ```julia julia> @Btime DataStructures.map(x->x*2, $(list((1:1000)...))); 148.476 μs (6469 allocations: 163.55 KiB) ``` After: ```julia julia> @Btime DataStructures.map(x->x*2, $(list((1:1000)...))); 29.705 μs (1745 allocations: 42.89 KiB) ```

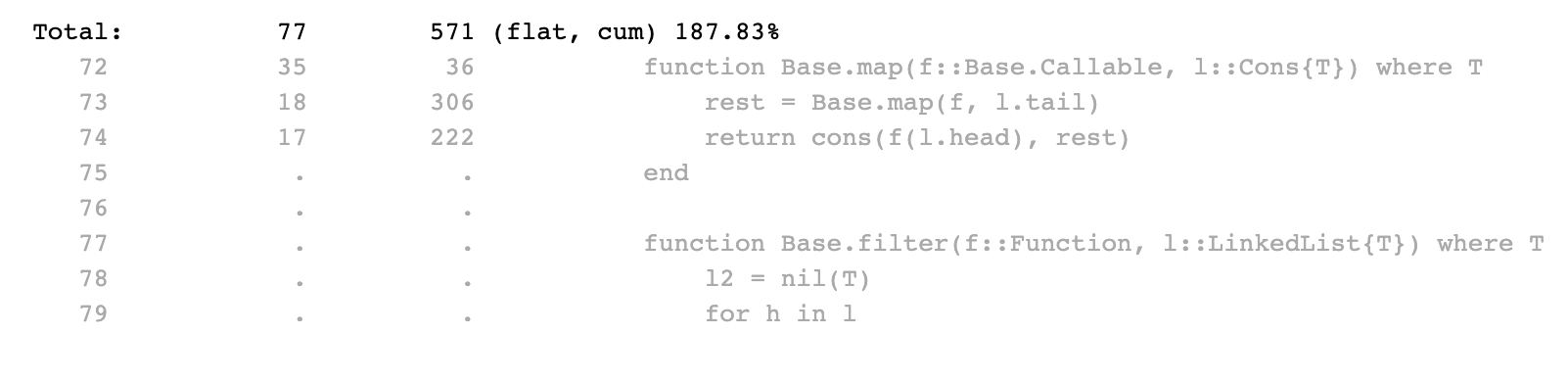

This avoids a StackOverflow error, and also turns out to be faster still, and lets us maintain the ability for map to return a list of a different eltype than the original, added in #27. Before: ```julia julia> @Btime DataStructures.map(x->x*2, $(list((1:1000)...))); 29.705 μs (1745 allocations: 42.89 KiB) ``` After: ```julia julia> @Btime DataStructures.map(x->x*2, $(list((1:1000)...))); 13.139 μs (1011 allocations: 47.65 KiB) ``` I'm not sure how there are fewer allocations.. But I can see how it'd be faster, with no recursive function calls.

357f597 to

b1034b8

Compare

|

This feels very against the spirit of LinkedLists. |

Haha yeah, i thought that at first too. But after thinking more about it, I really don't think so: the standard recursive linked list map algorithm uses the program stack to keep a copy of all the elements in the vector, which is essentially the same as collecting them onto a vector. Since each stack frame will basically only hold the list node's value and maybe a couple other elements. This PR is just a manually optimized version that keeps it in a tighter stack of only the values. As far as "basically might as well have used a vector in the first place," I guess the argument for using a list is that you're not going to be running (c.f. I intend to remove them from the package) Yeah, makes sense. Frankly this was just a nice bit of saturday morning coding. I think it seemed like a clear, easy improvement, but if you think it's too much like "cheating," we can close it. 🤷 😁 |

|

Okay, ACTUALLY, maybe the other option would just be to switch back to the recursive algorithm, even though it can stack overflow, because yeah recursive linked list algorithms might stack overflow! That's kind of like, part of it. And since this whole data structure is only really here to be educational, I think that's more valuable. The PR that originally made this not recursive was this one: PR #27, which rewrote several functions to not stack overflow, and also added this cool type changing property for the map function. We can undo the bit that avoids the recursion and keep the type changing with this version?: function rmap(f::Base.Callable, l::Cons{T}) where T

t = tail(l)

if t isa Nil

first = f(head(l))

return cons(first, nil(typeof(first) <: T ? T : typeof(first)))

else

return cons(f(head(l)), rmap(f, t::Cons{T}))

end

endI kind of like this one best since, as you say, it's more in the "spirit" of a linked list function, and it still keeps the type changing property. And it has exactly the same performance as the vector-based one I originally submitted, since (as i said above), they're literally the exact same algorithm. julia> @btime DataStructures.map(x->x*2, $(list((1:1000)...)));

13.371 μs (1009 allocations: 47.61 KiB)

julia> @btime DataStructures.rmap(x->x*2, $(list((1:1000)...)));

11.567 μs (999 allocations: 31.22 KiB)It actually even appears to be slightly better since you don't have to manage allocating the stack frames, so there's less GC interaction. So maybe we switch to this one? I guess there's 3 options to choose from:

Whatever you think makes the most sense for this code, which, as you say, is going to move to a different package in the end anyway 🙈 ❤️ Thanks for humoring me, Lyndon! ❤️ |

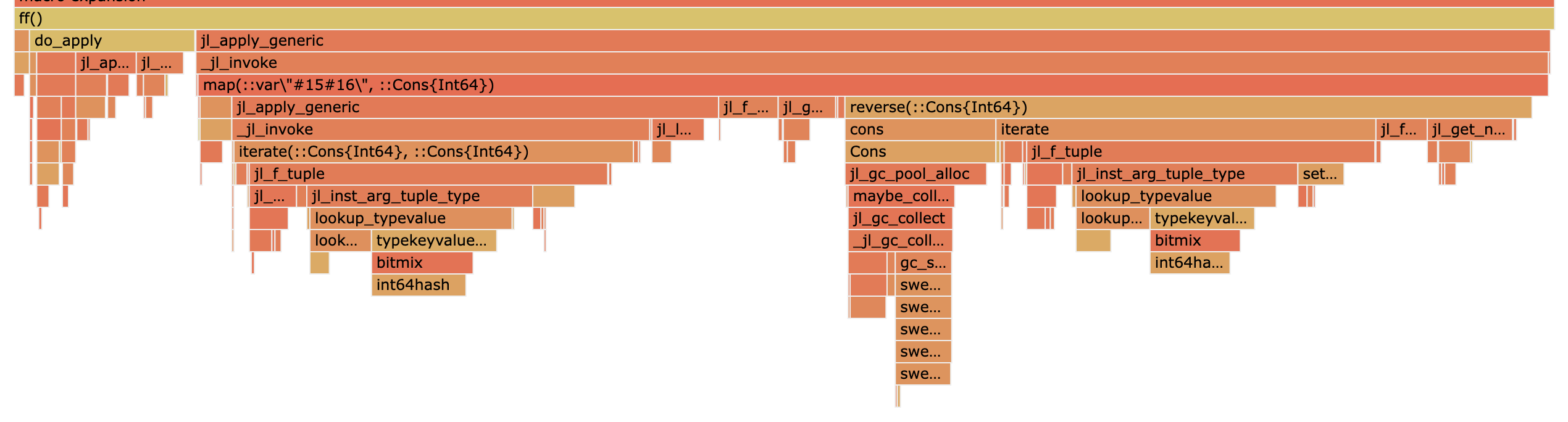

I was curious about the Linked List implementation so I did a bit of profiling it, and I was surprised to see that

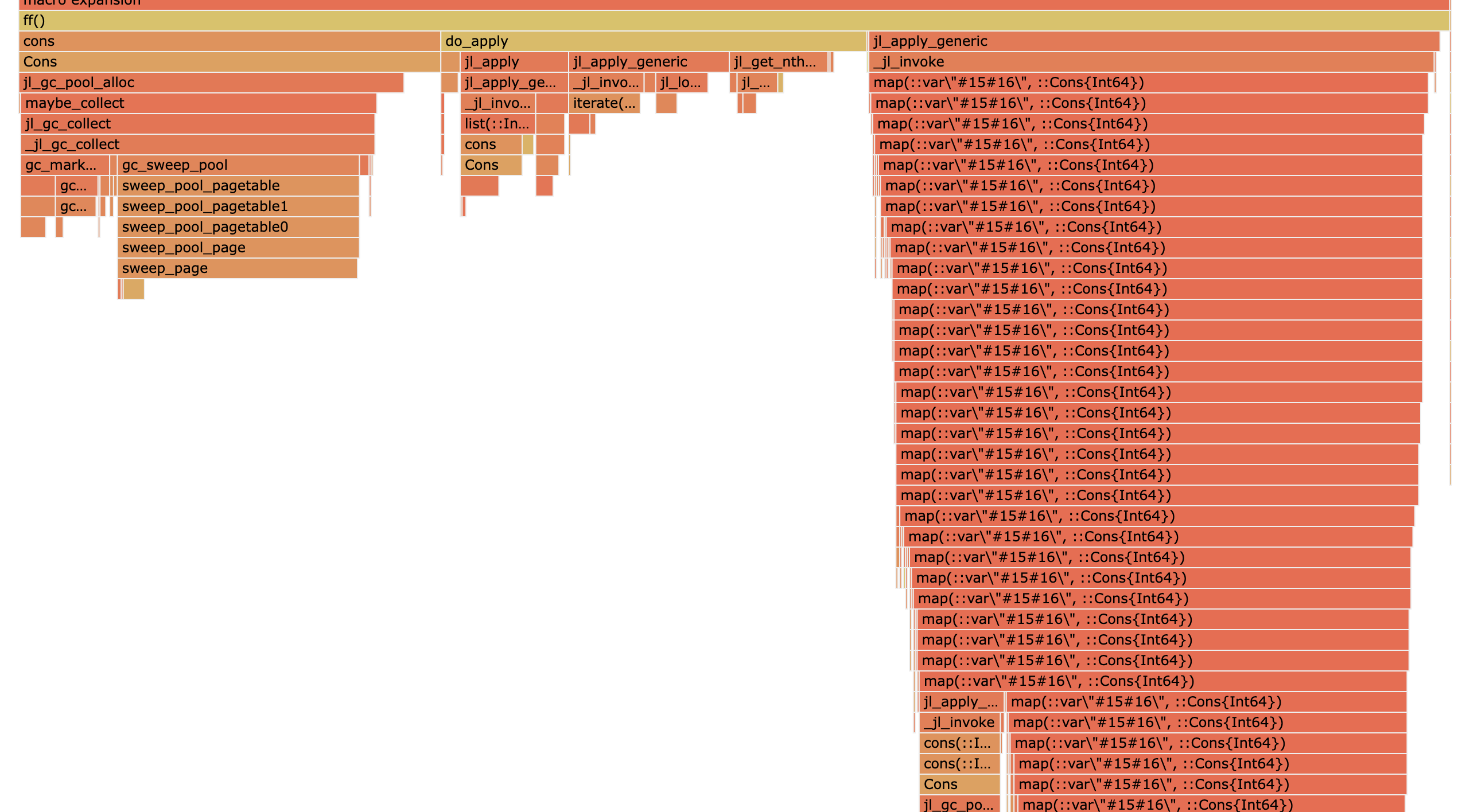

map()was constructing a list twice:So I first implemented it as the tail recursive map for linked lists (in fa11333), which improved things quite a bit:

Before:

After:

But that has this silly (if adorable) recursive call stack, which can of course StackOverflow (I had forgotten that julia doesn't have Tail Call Optimization):

So I rewrote it into an iterative function, and magically that actually made it even faster still. I'm not exactly sure why, but i'll take it. :)

Before:

After:

Also, this let's us maintain the property that was added in PR #27, to allow

map()to create a list with a different eltype.(Note: All measurements taken on julia 1.6.3; visualizations with PProf.jl)