Unleash the power of imagination and generalization of world models for self-driving cars.

Note

- October 2024: CarDreamer has been accepted by IEEE IoT!

- August 2024: Added support for transmission errors in intention sharing.

- August 2024: Created a right turn random task.

- July 2024: Created a stop-sign task and a traffic-light task.

- July 2024: Uploaded all the task checkpoints to HuggingFace.

- May 2024: Released arXiv preprint.

Can world model based self-supervised reinforcement learning train autonomous driving agents via imagination of traffic dynamics? The answer is YES!

By integrating the high-fidelity CARLA simulator with world models, we train a world model that learns complex environment dynamics, and then let an agent interact with this neural network "simulator" to learn to drive.

Simply put, in CarDreamer the agent can learn to drive in a "dream world" from scratch, mastering maneuvers like overtaking and right turns, and avoiding collisions in heavy traffic—all within an imagined world!

CarDreamer offers customizable observability, multi-modal observation spaces, and intention-sharing capabilities. Our paper presents a systematic analysis of the impact of different inputs on agent performance.

Dive into our demos to see the agent skillfully navigating challenges and ensuring safe and efficient travel.

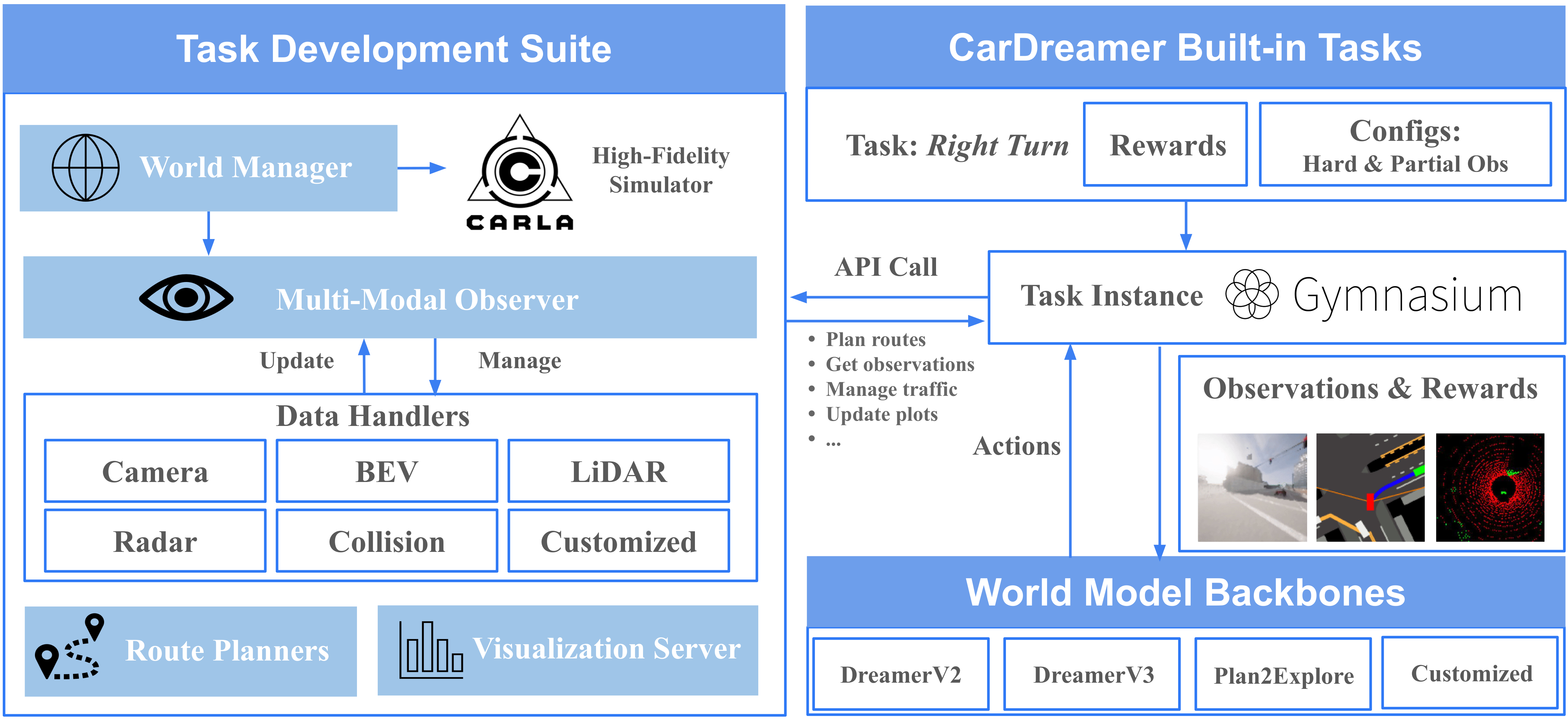

Explore world model based autonomous driving with CarDreamer, an open-source platform for developing and evaluating world model based driving agents.

- 🏙️ Built-in Urban Driving Tasks: flexible and customizable observation modality, observability, intention sharing; optimized rewards

- 🔧 Task Development Suite: create your own urban driving tasks with ease

- 🌍 Model Backbones: integrated state-of-the-art world models

Documentation: CarDreamer API Documents.

Looking for more technical details? Check our report here! Paper link

Tip

A world model is first trained to capture traffic dynamics; a driving agent is then trained on the world model's imagination! The driving agent masters diverse driving skills, from lane merging and left and right turns to random roaming, purely from scratch.

We train DreamerV3 agents on our built-in tasks with a single RTX 4090 GPU. Depending on the observation space, the memory overhead ranges from 10 GB to 20 GB, along with 3 GB reserved for CARLA.

| Right turn hard | Roundabout | Left turn hard | Lane merge | Overtake |
|---|---|---|---|---|

| Traffic Light | Stop Sign |
|---|---|

Tip

Human drivers use turn signals to communicate their intention to turn left or right. Autonomous vehicles can do the same!

Let's see how CarDreamer agents communicate and leverage intentions. Our experiments demonstrate that sharing intentions makes policy learning much easier! Specifically, a policy that does not know other agents' intentions can be overly conservative in our crossroad tasks, while intention sharing allows the agents to find the proper timing to cut into the traffic flow.

| Sharing waypoints vs. Without sharing waypoints | Sharing waypoints vs. Without sharing waypoints |
|---|---|
| Right turn hard | Left turn hard |

| Full observability vs. Partial observability |
|---|
| Right turn hard |

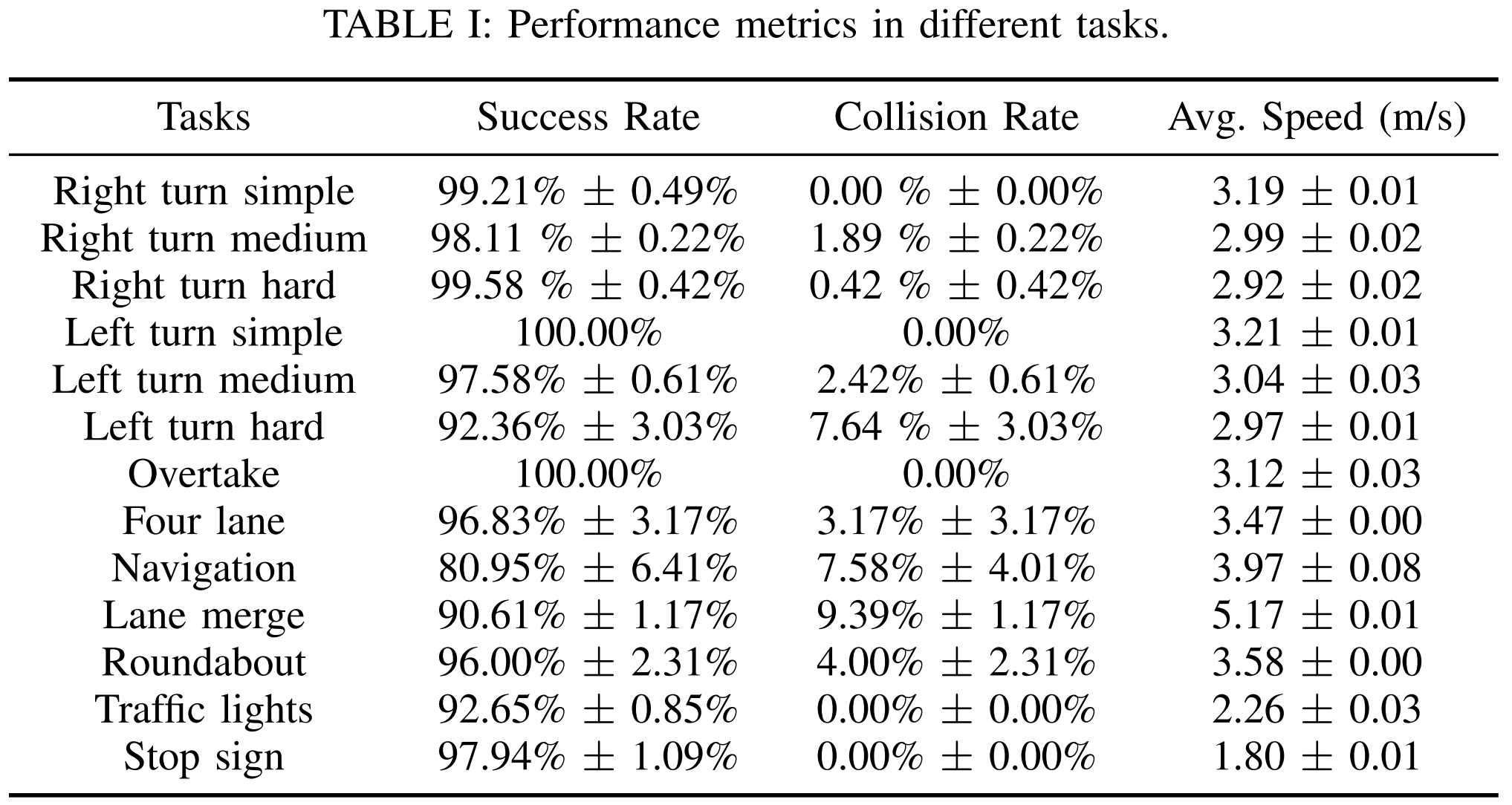

The following table shows the overall performance metrics over different CarDreamer built-in tasks.

| Task Performance Metrics |
|---|

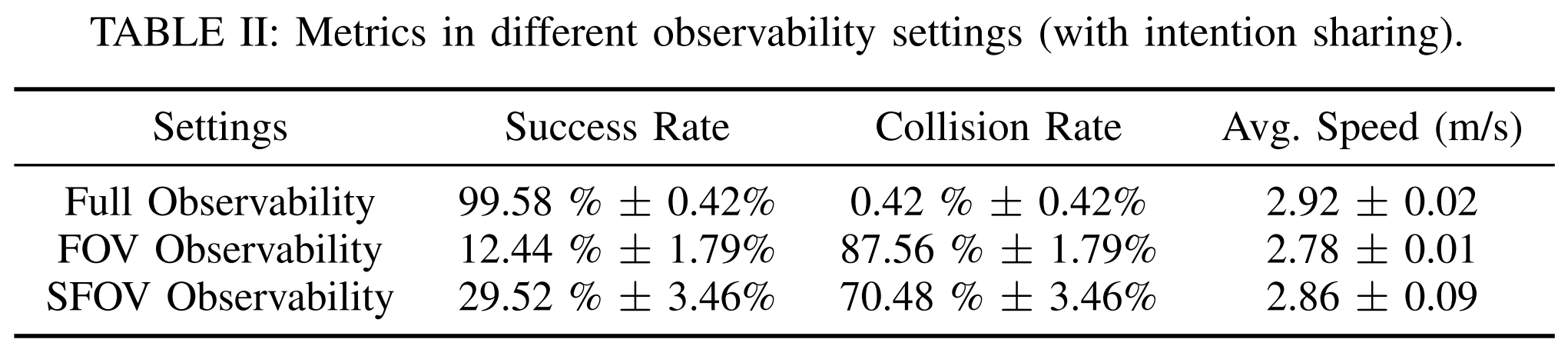

CarDreamer enables the customization of different levels of observability. The table below highlights performance metrics under different observability settings, including full observability, field-of-view (FOV), and recursive field-of-view (SFOV). These settings allow agents to operate with varying degrees of environmental awareness, impacting their ability to plan and execute maneuvers effectively.

| Observability Performance Metrics |
|---|
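To make the idea of a limited field of view concrete, here is a minimal 2D sketch of a view-cone test. It is an illustration only and not CarDreamer's implementation: the function names, the 120-degree cone, and the 40 m range are all assumptions chosen for the example.

```python
import math


def in_field_of_view(ego_xy, ego_yaw_deg, other_xy, fov_deg=120.0, max_range=40.0):
    """Return True if `other_xy` lies inside the ego vehicle's view cone.

    Hypothetical helper: the cone angle and range are illustrative defaults.
    """
    dx = other_xy[0] - ego_xy[0]
    dy = other_xy[1] - ego_xy[1]
    distance = math.hypot(dx, dy)
    if distance > max_range:
        return False
    if distance == 0.0:
        return True
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle between heading and bearing, wrapped to [-180, 180)
    offset = (bearing - ego_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0


def visible_vehicles(ego_xy, ego_yaw_deg, others, **kwargs):
    """Filter a {name: (x, y)} mapping down to the names inside the view cone."""
    return [
        name
        for name, xy in others.items()
        if in_field_of_view(ego_xy, ego_yaw_deg, xy, **kwargs)
    ]


others = {"ahead": (10.0, 0.0), "behind": (-10.0, 0.0), "far": (100.0, 0.0), "side": (5.0, 5.0)}
print(visible_vehicles((0.0, 0.0), 0.0, others))
```

Under full observability every vehicle would be kept; a partial setting simply drops the ones that fail a test like this before the observation is rendered.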

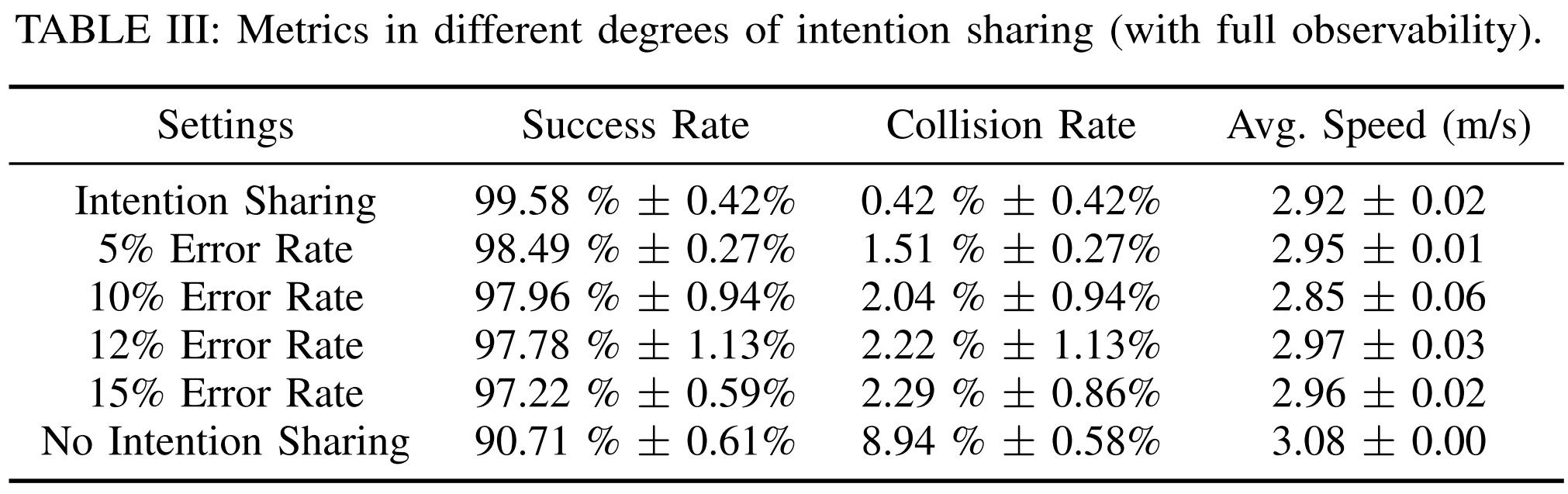

CarDreamer enhances autonomous vehicle planning by allowing vehicles to share their driving intentions, akin to how human drivers use turn signals. This feature facilitates smoother interactions between agents. Additionally, CarDreamer offers the ability to introduce and customize transmission errors in intention sharing, allowing for a more realistic simulation of communication imperfections. The table below presents performance results under various intention sharing and transmission error configurations.

| Intention Sharing and Transmission Errors |
|---|
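As a rough illustration of what a transmission error can mean, the sketch below drops a shared intention message with a fixed probability. This is only one possible error model, and it is an assumption: the function name `transmit_intention` and the `drop_prob` parameter are hypothetical and do not come from the CarDreamer API.

```python
import random


def transmit_intention(waypoints, drop_prob, rng):
    """Return the waypoints as received, or None if the message is lost.

    Hypothetical error model: each message is dropped independently
    with probability `drop_prob`.
    """
    if rng.random() < drop_prob:
        return None
    return list(waypoints)


rng = random.Random(0)  # seeded so repeated runs behave the same
planned = [(0.0, 0.0), (5.0, 0.0), (10.0, 2.0)]
received = [transmit_intention(planned, drop_prob=0.3, rng=rng) for _ in range(5)]
print(sum(msg is None for msg in received), "of 5 messages lost")
```

A receiver then has to act without the other vehicle's waypoints whenever `None` arrives, which is what makes the setting harder than perfect sharing.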

To install CarDreamer tasks or the development suite, clone the repository:

    git clone https://github.com/ucd-dare/CarDreamer
    cd CarDreamer

Download CARLA release 0.9.15, the version we experimented with. Set the following environment variables:

    export CARLA_ROOT="</path/to/carla>"
    export PYTHONPATH="${CARLA_ROOT}/PythonAPI/carla":${PYTHONPATH}

Install the package using flit. The `--symlink` flag creates a symlink to the package in the Python environment, so that changes to the package are immediately available without reinstallation. (`--pth-file` also works as an alternative to `--symlink`.)

    conda create python=3.10 --name cardreamer
    conda activate cardreamer
    pip install flit
    flit install --symlink

The model backbones are decoupled from CarDreamer tasks and the development suite, so users can install model dependencies as needed. To install DreamerV2 and DreamerV3, follow the guidelines in the separate DreamerV3 and DreamerV2 folders. The experiments in our paper were conducted using DreamerV3, the current state-of-the-art world model.

Find README.md in the directory of the algorithm you want to use and follow its instructions to install the dependencies for that model and start training. We suggest starting with DreamerV3, as it shows better performance across our experiments. To train DreamerV3 agents, use

    bash train_dm3.sh 2000 0 --task carla_four_lane --dreamerv3.logdir ./logdir/carla_four_lane

The command launches CARLA on port 2000, loads a built-in task named `carla_four_lane`, and starts the visualization tool on port 9000 (2000+7000), which can be accessed at http://localhost:9000/. You can append flags to the command to override the YAML configurations.
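The sketch below illustrates how dotted flags such as `--dreamerv3.logdir` could map onto a nested configuration, and how the visualization port follows from the CARLA port. It is a simplified stand-in, not the parsing used by the training script: `apply_overrides` and `visualization_port` are hypothetical helpers, and every value is kept as a string.

```python
def apply_overrides(config, flags):
    """Apply ['--a.b', 'value', ...] style flags to a nested dict, in place."""
    if len(flags) % 2 != 0:
        raise ValueError("flags must come in '--key value' pairs")
    for key, value in zip(flags[::2], flags[1::2]):
        if not key.startswith("--"):
            raise ValueError(f"expected a flag starting with '--', got {key!r}")
        # Split 'dreamerv3.logdir' into parent keys and the final leaf key
        *parents, leaf = key[2:].split(".")
        node = config
        for name in parents:
            node = node.setdefault(name, {})
        node[leaf] = value
    return config


def visualization_port(carla_port, offset=7000):
    """Port of the visualization tool, using the 2000+7000 rule stated above."""
    return carla_port + offset


config = {"task": "default", "dreamerv3": {"logdir": "./logdir/default"}}
apply_overrides(
    config,
    ["--task", "carla_four_lane", "--dreamerv3.logdir", "./logdir/carla_four_lane"],
)
print(config)
print(f"http://localhost:{visualization_port(2000)}/")
```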

This section explains how to create CarDreamer tasks in standalone mode without loading our integrated models. This can be helpful if you want to train and evaluate your own models, other than our integrated DreamerV2 and DreamerV3, on CarDreamer tasks.

CarDreamer offers a range of built-in task classes, which you can explore in the CarDreamer Docs: Tasks and Configurations.

Each task class can be instantiated with various configurations. For instance, the right-turn task can be set up with simple, medium, or hard settings. These settings are defined in YAML blocks within tasks.yaml. The task creation API retrieves the given identifier (e.g., carla_four_lane_hard) from these YAML task blocks and injects the settings into the task class to create a gym task instance.
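The lookup-and-inject flow described above can be sketched as follows. This is a toy model of the idea rather than the library's code: the dictionary stands in for parsed YAML blocks, and `TASK_BLOCKS`, `DemoRightTurnEnv`, `create_demo_task`, and the `num_vehicles` setting are all hypothetical names invented for the example.

```python
import copy

# Stand-in for parsed YAML task blocks (hypothetical identifiers and settings)
TASK_BLOCKS = {
    "demo_right_turn_simple": {"env": {"name": "DemoRightTurnEnv", "num_vehicles": 5}},
    "demo_right_turn_hard": {"env": {"name": "DemoRightTurnEnv", "num_vehicles": 30}},
}


class DemoRightTurnEnv:
    """Toy task class that receives its settings at construction time."""

    def __init__(self, config):
        self.num_vehicles = config["num_vehicles"]


# Registry mapping the class name in a task block to the class itself
TASK_CLASSES = {"DemoRightTurnEnv": DemoRightTurnEnv}


def create_demo_task(identifier):
    """Look up a task block by identifier and inject it into the task class."""
    if identifier not in TASK_BLOCKS:
        raise KeyError(f"unknown task identifier: {identifier}")
    config = copy.deepcopy(TASK_BLOCKS[identifier])  # keep the defaults untouched
    env_config = config["env"]
    task = TASK_CLASSES[env_config["name"]](env_config)
    return task, config


task, task_configs = create_demo_task("demo_right_turn_hard")
print(task.num_vehicles)
```

The same class serves both difficulty levels; only the injected settings differ.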

    # Create a gym environment with default task configurations
    import car_dreamer
    task, task_configs = car_dreamer.create_task('carla_four_lane_hard')

    # Or load default environment configurations without instantiation
    task_configs = car_dreamer.load_task_configs('carla_right_turn_hard')

To create your own driving tasks using the development suite, refer to CarDreamer Docs: Customization.
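Since a created task is a gym task instance, a custom model can drive it with an ordinary interaction loop. The sketch below is written against the generic gym interface only; whether a CarDreamer task returns the four-value or five-value `step` tuple is not stated here, so the loop accepts both. `run_random_episode` and `StubEnv` are hypothetical, and the stub exists only so the example runs without CARLA.

```python
def run_random_episode(env, max_steps=100):
    """Roll out random actions on a gym-style env; return (total_reward, steps)."""
    env.reset()
    total_reward, steps = 0.0, 0
    for _ in range(max_steps):
        action = env.action_space.sample()
        result = env.step(action)
        if len(result) == 5:
            # Newer API: (obs, reward, terminated, truncated, info)
            _, reward, terminated, truncated, _ = result
            done = terminated or truncated
        else:
            # Older API: (obs, reward, done, info)
            _, reward, done, _ = result
        total_reward += float(reward)
        steps += 1
        if done:
            break
    return total_reward, steps


class _StubSpace:
    def sample(self):
        return 0


class StubEnv:
    """Stand-in env that ends after three steps and pays reward 1.0 per step."""

    action_space = _StubSpace()

    def __init__(self):
        self._t = 0

    def reset(self):
        self._t = 0
        return {}

    def step(self, action):
        self._t += 1
        return {}, 1.0, self._t >= 3, {}


print(run_random_episode(StubEnv()))
```

With a running CARLA server, the `task` returned by `car_dreamer.create_task` would take the place of `StubEnv()`.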

CarDreamer employs an Observer-Handler architecture to manage complex multi-modal observation spaces. Each handler defines its own observation space and lifecycle for stepping, resetting, or fetching information, similar to a gym environment. The agent communicates with the environment through an observer that manages these handlers.
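A minimal sketch of this pattern is shown below, assuming each handler exposes an observation space, a `reset()`, and a `get_observation()` that returns an observation dict and an info dict. The class names `StepCountHandler`, `StateKeyHandler`, and `Observer` are hypothetical and the observation spaces are plain placeholder strings; the real CarDreamer classes may differ.

```python
class StepCountHandler:
    """Toy stateful handler: counts the steps since the last reset."""

    def __init__(self, key):
        self.key = key
        self._steps = 0

    def get_observation_space(self):
        return {self.key: "int"}  # placeholder descriptor, not a gym space

    def reset(self):
        self._steps = 0

    def get_observation(self, env_state):
        self._steps += 1
        return {self.key: self._steps}, {}


class StateKeyHandler:
    """Toy stateless handler: copies one value out of the environment state."""

    def __init__(self, key):
        self.key = key

    def get_observation_space(self):
        return {self.key: "float"}  # placeholder descriptor

    def reset(self):
        pass

    def get_observation(self, env_state):
        return {self.key: env_state[self.key]}, {}


class Observer:
    """Merges the spaces and observations of all enabled handlers."""

    def __init__(self, handlers):
        self._handlers = list(handlers)

    def get_observation_space(self):
        space = {}
        for handler in self._handlers:
            space.update(handler.get_observation_space())
        return space

    def reset(self):
        for handler in self._handlers:
            handler.reset()

    def get_observation(self, env_state):
        obs, info = {}, {}
        for handler in self._handlers:
            handler_obs, handler_info = handler.get_observation(env_state)
            obs.update(handler_obs)
            info.update(handler_info)
        return obs, info


observer = Observer([StepCountHandler("step_count"), StateKeyHandler("speed")])
observer.reset()
print(observer.get_observation({"speed": 3.5}))
```

The environment only talks to the observer, so adding a modality means adding a handler rather than editing the environment.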

Users can enable built-in observation handlers such as BEV, camera, LiDAR, and spectator in task configurations. Check out common.yaml for all available built-in handlers. Additionally, users can customize observation handlers and settings to suit their specific needs.

To implement new handlers for different observation sources and modalities (e.g., text, velocity, locations, or even more complex data), CarDreamer provides two methods:

- Register a callback as a SimpleHandler to fetch data at each step.

- For observations requiring complex workflows that cannot be conveyed by a SimpleHandler, create a handler that maintains the full lifecycle of that observation, similar to our built-in message, BEV, and spectator handlers.
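The first option, wrapping a callback, can be sketched as below. The real `SimpleHandler` signature is not shown in this document, so `CallbackHandler`, its constructor arguments, and the `speed_mps` state key are hypothetical names used only to illustrate the idea.

```python
class CallbackHandler:
    """Wraps a function of the environment state as a stateless handler."""

    def __init__(self, key, callback):
        self.key = key
        self._callback = callback

    def reset(self):
        # Nothing to reset: the callback keeps no state between steps
        pass

    def get_observation(self, env_state):
        # Fetch the value at each step and publish it under the handler's key
        return {self.key: self._callback(env_state)}, {}


# Example: expose the ego speed in km/h from a state that stores m/s
speed_handler = CallbackHandler("speed_kmh", lambda state: state["speed_mps"] * 3.6)
obs, info = speed_handler.get_observation({"speed_mps": 10.0})
print(obs)
```

Anything that needs setup, teardown, or memory across steps is a better fit for the second option, a handler with its own full lifecycle.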

For more details on defining new observation sources, see CarDreamer Docs: Defining a new observation source.

Each handler can access yaml configurations for further customization. For example, a BEV handler setting can be defined as:

    birdeye_view:
      # Specify the handler name used to produce `birdeye_view` observation
      handler: birdeye
      # The observation key
      key: birdeye_view
      # Define what to render in the birdeye view
      entities: [roadmap, waypoints, background_waypoints, fov_lines, ego_vehicle, background_vehicles]
      # ... other settings used by the BEV handler

The handler field specifies which handler implementation is used to manage that observation key. Then, users can simply enable this observation in the task settings.

    your_task_name:
      env:
        observation.enabled: [camera, collision, spectator, birdeye_view]

A handler may need information from the environment to compute its observations. For example, a BEV handler might need a location to render the destination spot. This environment information can be accessed either through `WorldManager` APIs or through environment state management.

A `WorldManager` instance is passed to the handler during its initialization. The environment states are defined by an environment's `get_state()` API and passed as parameters to the handler's `get_observation()`.

class MyHandler(BaseHandler):

def __init__(self, world: WorldManager, config):

super().__init__(world, config)

self._world = world

def get_observation(self, env_state: Dict) -> Tuple[Dict, Dict]:

# Get the waypoints through environment states

waypoints = env_state.get("waypoints")

# Get actors through the world manager API

actors = self._world.actors

# ...

class MyEnv(CarlaBaseEnv):

# ...

def get_state(self):

return {

# Expose the waypoints through get_state()

'waypoints': self.waypoints,

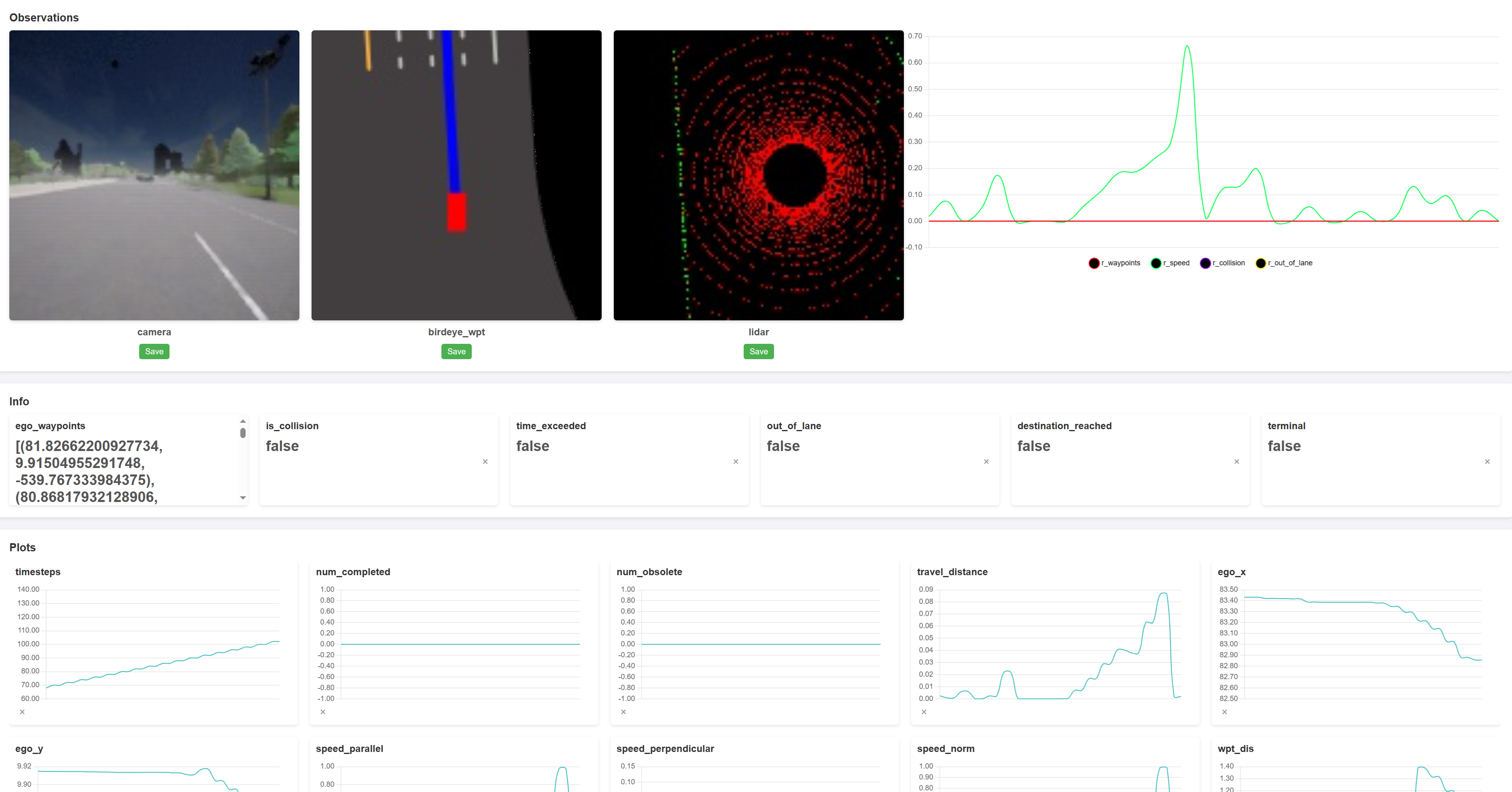

}We stream observations, rewards, terminal conditions, and custom metrics to a web browser for each training session in real-time, making it easier to engineer rewards and debug.

| Visualization Server |
|---|

...

To customize your own driving tasks, observation spaces, and more, please refer to our CarDreamer API Documents.

If you find this repository useful, please cite this paper:

IEEE IoT paper link ArXiv paper link

    @ARTICLE{10714437,
      author={Gao, Dechen and Cai, Shuangyu and Zhou, Hanchu and Wang, Hang and Soltani, Iman and Zhang, Junshan},
      journal={IEEE Internet of Things Journal},
      title={CarDreamer: Open-Source Learning Platform for World Model Based Autonomous Driving},
      year={2024},
      volume={},
      number={},
      pages={1-1},
      keywords={Autonomous Driving;Reinforcement Learning;World Model},
      doi={10.1109/JIOT.2024.3479088}}

    @article{CarDreamer2024,
      title = {{CarDreamer: Open-Source Learning Platform for World Model based Autonomous Driving}},
      author = {Dechen Gao and Shuangyu Cai and Hanchu Zhou and Hang Wang and Iman Soltani and Junshan Zhang},
      journal = {arXiv preprint arXiv:2405.09111},
      year = {2024},
      month = {May}
    }

Birdeye view imagination

Camera view imagination

LiDAR view imagination

Special thanks to the community for your valuable contributions and support in making CarDreamer better for everyone!

| Hanchu Zhou | Shuangyu Cai | GaoDechen | junshanzhangJZ2080 | Gaofeng Dong | ucdmike |
|---|---|---|---|---|---|
| Jung Dongwon | Andrew Lee | IanGuangleiZhu | liamjxu | TracyYXChen | Wei Shao |

The contributor list is automatically generated based on the commit history. Please use pre-commit to automatically check and format changes.

    # Set up the pre-commit tool
    pip install pre-commit
    pre-commit install

    # Run pre-commit
    pre-commit run --all-files

CarDreamer builds on several projects within the autonomous driving and machine learning communities.