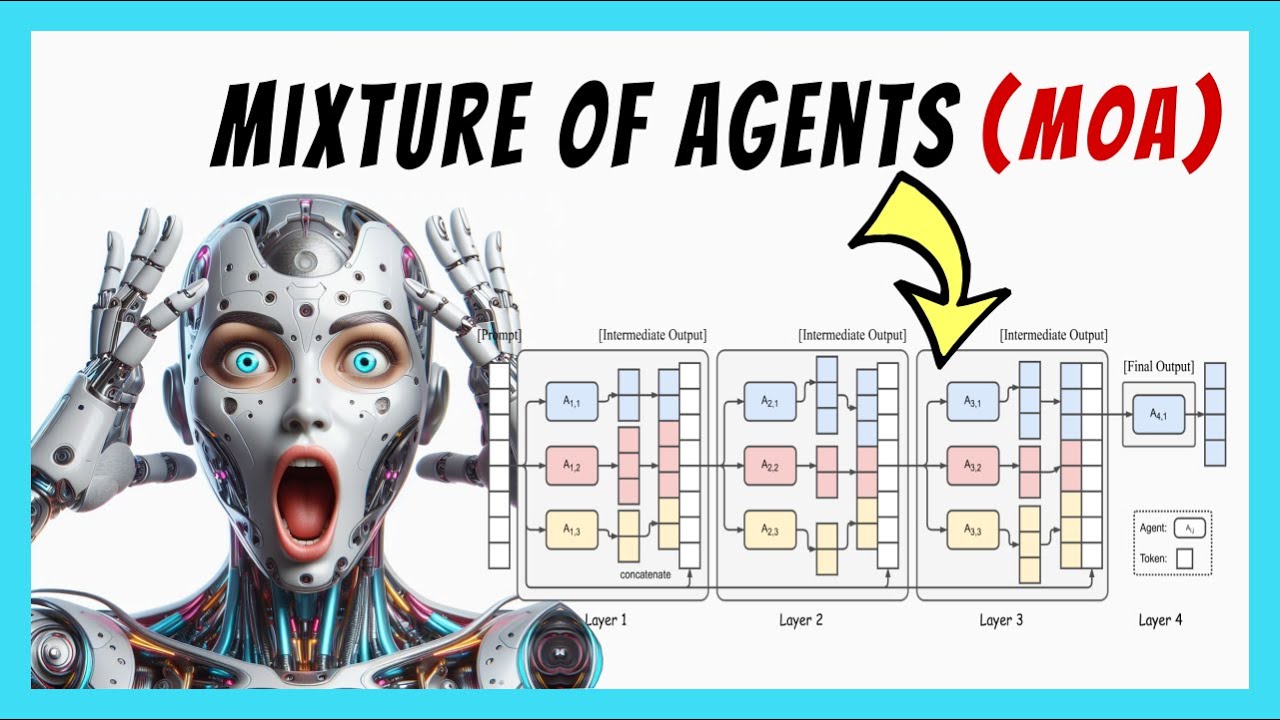

This is a simplified agentic workflow based on the Mixture of Agents (MoA) system from Wang et al. (2024), "Mixture-of-Agents Enhances Large Language Model Capabilities".

This demo is a 3-layer MoA built from 4 open-source models: Qwen2, Qwen 1.5, Mixtral, and DBRX. Qwen2 acts as the aggregator in the final layer. See links.txt for links to the GSM8K benchmark and datasets. For more details, watch the full tutorial video on YouTube, linked at the end of this page.
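The layered flow described above can be sketched roughly as follows. This is a minimal illustration, not the code in `bot.py`: the function names (`run_moa`, `build_aggregation_prompt`), the prompt wording, and the `call_llm(model, prompt)` signature are all assumptions made for the example, and the model labels are placeholders rather than real Together AI model IDs. It also assumes that the first two layers are proposer layers and that the third layer is the aggregator alone.

```python
from typing import Callable, List

# Hypothetical signature for a model call: (model_name, prompt) -> response text.
LLMCall = Callable[[str, str], str]


def build_aggregation_prompt(question: str, previous: List[str]) -> str:
    """Combine the user question with the previous layer's responses, if any."""
    if not previous:
        return question
    numbered = "\n".join(f"{i}. {r}" for i, r in enumerate(previous, start=1))
    return (
        "Synthesize the following responses into a single, improved answer.\n"
        f"{numbered}\n\nQuestion: {question}"
    )


def run_moa(
    question: str,
    proposers: List[str],
    aggregator: str,
    call_llm: LLMCall,
    num_layers: int = 3,
) -> str:
    """Run a layered Mixture-of-Agents pass and return the aggregator's answer."""
    responses: List[str] = []
    # Proposer layers: every model answers, seeing the previous layer's outputs.
    for _ in range(num_layers - 1):
        prompt = build_aggregation_prompt(question, responses)
        responses = [call_llm(model, prompt) for model in proposers]
    # Final layer: a single aggregator synthesizes the last set of responses.
    return call_llm(aggregator, build_aggregation_prompt(question, responses))


if __name__ == "__main__":
    # Stub standing in for a real API call, so the sketch runs offline.
    def fake_llm(model: str, prompt: str) -> str:
        return f"{model}-answer"

    models = ["qwen2", "qwen1.5", "mixtral", "dbrx"]  # placeholder labels
    print(run_moa("What is 2 + 2?", models, "qwen2", fake_llm))
```

Passing the model call in as a parameter keeps the layering logic separate from the API client, so the same loop can be exercised with a stub before any real requests are made.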

- Export your Together AI API key as an environment variable using `.bash_profile` or `.zshrc` and update your shell with the new variable. If you want to set up the key inside your Python script, follow the file `integrate_api_key` here.
- `git clone` the MoA GitHub project https://github.com/togethercomputer/MoA.git into your project directory.
- `pip install -r requirements.txt` from the MoA directory.
- Run the Python file `bot.py`.
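Before running the script, it can help to confirm that the key from the first step is actually visible to Python. The sketch below is only an illustration: the variable name `TOGETHER_API_KEY` and the helper `load_together_api_key` are assumptions for this example, so adjust the name if your setup exports the key differently.

```python
import os
from typing import Mapping, Optional


def load_together_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, or raise if it is missing.

    The variable name TOGETHER_API_KEY is an assumption for this sketch.
    """
    env = os.environ if env is None else env
    key = env.get("TOGETHER_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "TOGETHER_API_KEY is not set; export it in your shell profile "
            "and reload the shell."
        )
    return key


if __name__ == "__main__":
    try:
        load_together_api_key()
        print("API key found.")
    except RuntimeError as err:
        print(err)
```

Failing early with a clear message avoids a less obvious authentication error later, when the first model request is sent.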

For a detailed explanation of the Mixture of Agents (MoA) system and paper, and of how to run MoA AI agents locally, watch this video: