Making your ML and optimization benchmarks simple and open

Benchopt is a benchmarking suite tailored for machine learning workflows. It is built for simplicity, transparency, and reproducibility. It is implemented in Python but can run algorithms written in many programming languages.

So far, benchopt has been tested with Python, R, Julia and C/C++ (compiled binaries with a command line interface). Programs available via conda should be compatible as well. See for instance an example of usage with R.

It is recommended to use benchopt within a conda environment to fully benefit from the benchopt command line interface (CLI).

To install benchopt, start by creating a new conda environment and then activate it:

```bash
conda create -n benchopt python
conda activate benchopt
```

Then run the following command to install the latest release of benchopt:

```bash
pip install -U benchopt
```

It is also possible to use the latest development version. To do so, run instead:

```bash
pip install git+https://github.com/benchopt/benchopt.git
```

After installing benchopt, you can

- replicate/modify an existing benchmark

- create your own benchmark

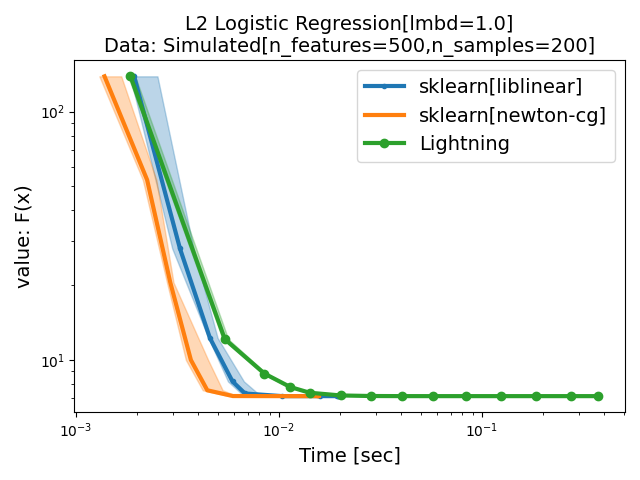

Replicating an existing benchmark is simple. Here is how to do so for the L2-logistic regression benchmark.

- Clone the benchmark repository and `cd` to it:

  ```bash
  git clone https://github.com/benchopt/benchmark_logreg_l2
  cd benchmark_logreg_l2
  ```

- Install the desired solvers automatically with `benchopt`:

  ```bash
  benchopt install . -s lightning -s sklearn
  ```

- Run the benchmark to generate the results figure:

  ```bash
  benchopt run . --config ./example_config.yml
  ```

These steps illustrate how to reproduce the L2-logistic regression benchmark.
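To make the benchmarked problem concrete: a common formulation of L2-regularized logistic regression, for samples x_i with labels y_i in {-1, 1}, minimizes sum_i log(1 + exp(-y_i <x_i, w>)) + lmbd / 2 * ||w||^2. The plain-Python sketch below evaluates that quantity. The helper names (`log1p_exp`, `logreg_l2_objective`) and the lmbd / 2 scaling are assumptions made for illustration; the benchmark repository's own objective may scale the terms differently.

```python
import math


def log1p_exp(t):
    """Numerically stable log(1 + exp(t)), avoiding overflow for large t."""
    if t > 0:
        return t + math.log1p(math.exp(-t))
    return math.log1p(math.exp(t))


def logreg_l2_objective(w, X, y, lmbd):
    """Hypothetical helper computing
    sum_i log(1 + exp(-y_i * <x_i, w>)) + lmbd / 2 * ||w||^2
    for X given as a list of rows and labels y in {-1, 1}.
    """
    loss = 0.0
    for x_i, y_i in zip(X, y):
        margin = y_i * sum(x_ij * w_j for x_ij, w_j in zip(x_i, w))
        loss += log1p_exp(-margin)
    return loss + 0.5 * lmbd * sum(w_j ** 2 for w_j in w)


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1, -1, 1]
# At w = 0 every sample contributes log(2), so the value is 3 * log(2).
print(logreg_l2_objective([0.0, 0.0], X, y, lmbd=0.1))
```

Writing log(1 + exp(t)) through `log1p_exp` keeps the evaluation finite even when a margin is very large, which matters once a solver has driven some margins far from zero.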

Find the complete list of benchmarks in Available benchmarks. Also, refer to the documentation to learn more about the benchopt CLI and its features.

You can also easily extend this benchmark by adding a dataset, a solver or a metric. Learn how to do that, and more, in the Benchmark workflow.

The Write a benchmark section of the documentation provides a tutorial for creating a benchmark. The benchopt community also maintains a template benchmark to quickly and easily start a new benchmark.
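A benchmark also needs at least one dataset. As a rough illustration, a simulated classification dataset could look like the sketch below. It assumes benchopt's `BaseDataset` with a `get_data` method returning a dict whose keys are forwarded to the objective; the class name, sizes, seed and label-generation scheme are hypothetical, and the fallback to `object` only keeps the sketch runnable without benchopt. The template benchmark remains the reference starting point.

```python
import random

try:
    from benchopt import BaseDataset
except ImportError:
    # Fallback so the sketch also runs without benchopt installed.
    BaseDataset = object


class Dataset(BaseDataset):
    # Hypothetical dataset name and default sizes.
    name = "simulated-sketch"
    n_samples = 50
    n_features = 3
    random_state = 0

    def get_data(self):
        # A dedicated generator makes the data reproducible across runs.
        rng = random.Random(self.random_state)
        # Hidden coefficients used to generate labels in {-1, 1}.
        w_true = [rng.gauss(0.0, 1.0) for _ in range(self.n_features)]
        X = [[rng.gauss(0.0, 1.0) for _ in range(self.n_features)]
             for _ in range(self.n_samples)]
        y = [1 if sum(a * b for a, b in zip(x_i, w_true)) > 0 else -1
             for x_i in X]
        return dict(X=X, y=y)
```

Fixing the seed keeps every solver facing exactly the same data, which is what makes their convergence curves comparable.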

Join the benchopt Discord server and get in touch with the community! Feel free to drop us a message to get help with running or building benchmarks, or (why not) to discuss new features and the future directions that benchopt should take.

Benchopt is a continuous effort to make ML and optimization benchmarks reproducible and transparent. Join us in this endeavor! If you use benchopt in a scientific publication, please cite:

```bibtex
@inproceedings{benchopt,
   author = {Moreau, Thomas and Massias, Mathurin and Gramfort, Alexandre
             and Ablin, Pierre and Bannier, Pierre-Antoine
             and Charlier, Benjamin and Dagréou, Mathieu and Dupré la Tour, Tom
             and Durif, Ghislain and F. Dantas, Cassio and Klopfenstein, Quentin
             and Larsson, Johan and Lai, En and Lefort, Tanguy
             and Malézieux, Benoit and Moufad, Badr and T. Nguyen, Binh and Rakotomamonjy,
             Alain and Ramzi, Zaccharie and Salmon, Joseph and Vaiter, Samuel},
   title = {Benchopt: Reproducible, efficient and collaborative optimization benchmarks},
   year = {2022},
   booktitle = {NeurIPS},
   url = {https://arxiv.org/abs/2206.13424}
}
```