
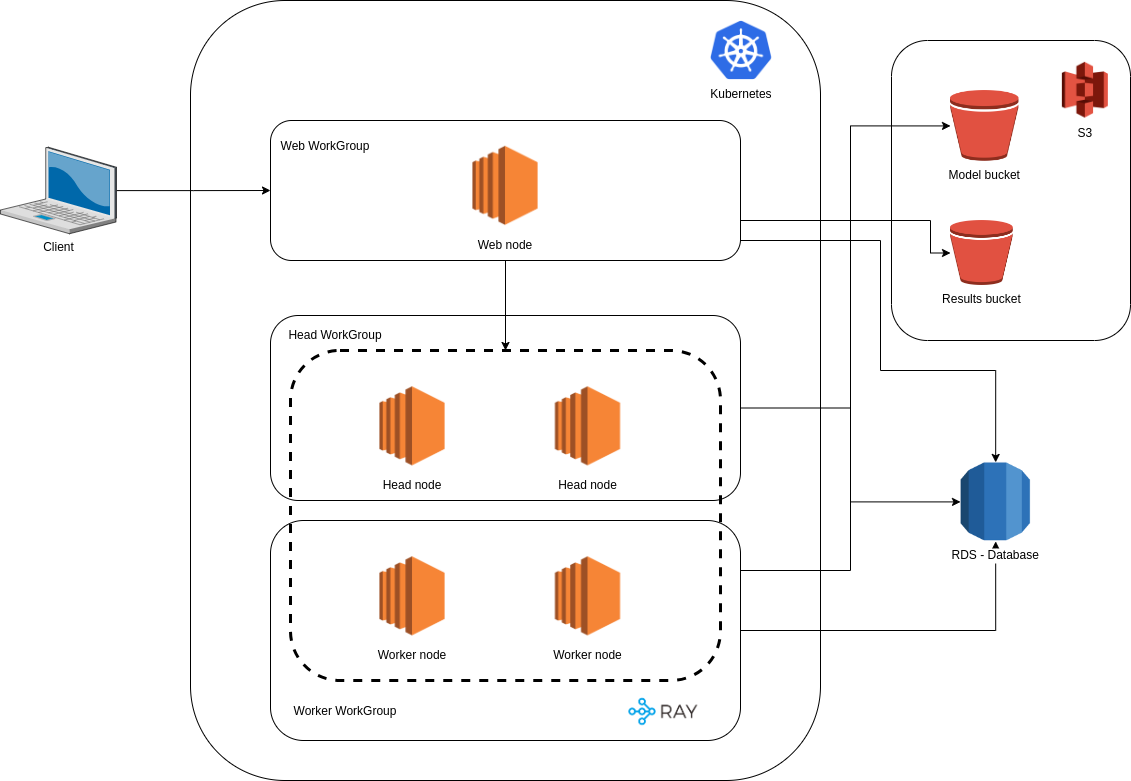

Infrastructure

The production Morpheus infrastructure is designed to run in a Kubernetes cluster, since Kubernetes is currently the standard way to deploy applications on the Internet. One of the main reasons for this is portability: you can run your cluster on on-premises infrastructure or with different cloud providers such as AWS, Azure, and Google Cloud.

Currently, the infrastructure is defined with Terraform, and the module to deploy it on AWS is available here.

In terms of infrastructure, the nodes can be classified into 3 types, plus an S3-type storage service and a database engine.

The web nodes run the web components of the Morpheus architecture, which are deployed as Kubernetes pods. These components are:

- API/Backend: Built with the FastAPI framework, this component handles all the interactions between the frontend and the Ray cluster needed to generate the images.

- Client/Frontend: Built with the Next.js framework, this component handles the user's interactions with the application directly.

- Collaborative: This component uses Excalidraw to provide an online whiteboard where users can collaborate on and create images.

The Ray.io head node is the main part of a Ray cluster. It orchestrates the different tasks that need to be processed on a scalable infrastructure. For that reason, at least 2 nodes are needed to deploy it.

When a new head node must be deployed after any change in the version of the script, the RayService Kubernetes resource:

- First checks whether the current head node is available.

- Creates another instance of the head node on a different node.

- Waits for the new head node to become healthy.

- Kills the old head node.

As these steps show, high availability is important for this component: the new head node is started on another node while the old one is still running, which is the main reason to have at least 2 nodes.
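The replacement order above can be illustrated with a small simulation. This is a minimal sketch of the sequence only, not the RayService controller's actual implementation; the `HeadNode` and `Cluster` classes, the node names, and the `upgrade_head` function are hypothetical. Health checking is simulated as an immediate success.

```python
from dataclasses import dataclass, field


@dataclass
class HeadNode:
    # Hypothetical model of a Ray head pod: the node it runs on and its script version.
    node: str
    version: str
    healthy: bool = True


@dataclass
class Cluster:
    # Hypothetical pool of Kubernetes nodes that can host the Ray head.
    nodes: list[str]
    head: HeadNode
    log: list[str] = field(default_factory=list)


def upgrade_head(cluster: Cluster, new_version: str) -> HeadNode:
    """Replace the head node in the order described above (sketch)."""
    # 1. Check that the current head node is available before touching it.
    if not cluster.head.healthy:
        raise RuntimeError("current head node is not available")

    # 2. Create the new head on a *different* node. With a single node this
    #    step fails, which is why at least 2 nodes are required.
    candidates = [n for n in cluster.nodes if n != cluster.head.node]
    if not candidates:
        raise RuntimeError("no other node available for the new head")
    new_head = HeadNode(node=candidates[0], version=new_version, healthy=False)
    cluster.log.append(f"created head {new_version} on {new_head.node}")

    # 3. Wait for the new head to become healthy (simulated as immediate success).
    new_head.healthy = True
    cluster.log.append(f"head {new_version} healthy")

    # 4. Only now kill the old head, so the cluster is never left without one.
    old = cluster.head
    cluster.log.append(f"killed head {old.version} on {old.node}")
    cluster.head = new_head
    return new_head
```

With `nodes=["node-a", "node-b"]` the head moves from `node-a` to `node-b`; with a single node, `upgrade_head` raises before the old head is touched.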

Ray.io worker nodes are the part of the infrastructure where the images are generated, which is why these nodes have a GPU. Although the worker nodes are important, they do not need high availability, because the Ray head node can keep incoming requests in a queue until a worker node becomes available. The models are stored in an operating-system directory on these nodes so they can be shared with the Ray.io worker instances; then, depending on the Morpheus feature (ImageToImage, Pix2Pix, etc.), the corresponding model is loaded from that location.
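Why workers can go without high availability can be sketched with a plain FIFO queue: requests simply wait until some worker is idle. This is a simplified stand-in for Ray's own scheduling, not its API. The `MODEL_DIR` path, the feature-to-subdirectory mapping, and the `Dispatcher` class are hypothetical.

```python
from collections import deque
from pathlib import Path

# Hypothetical shared directory on the GPU node where the models are stored.
MODEL_DIR = Path("/models")

# Hypothetical mapping from Morpheus feature to model subdirectory.
FEATURE_MODELS = {
    "ImageToImage": "img2img",
    "Pix2Pix": "pix2pix",
}


def model_path(feature: str) -> Path:
    """Return the location the model for a feature would be loaded from."""
    return MODEL_DIR / FEATURE_MODELS[feature]


class Dispatcher:
    """Toy head-node queue: requests wait until a worker is idle."""

    def __init__(self) -> None:
        self.pending: deque[tuple[str, str]] = deque()  # (request_id, feature)
        self.idle_workers: deque[str] = deque()
        self.assigned: list[tuple[str, str, Path]] = []  # (request_id, worker, model path)

    def submit(self, request_id: str, feature: str) -> None:
        self.pending.append((request_id, feature))
        self._dispatch()

    def worker_available(self, worker: str) -> None:
        self.idle_workers.append(worker)
        self._dispatch()

    def _dispatch(self) -> None:
        # Pair queued requests with idle workers in FIFO order;
        # unmatched requests stay in the queue instead of failing.
        while self.pending and self.idle_workers:
            request_id, feature = self.pending.popleft()
            worker = self.idle_workers.popleft()
            self.assigned.append((request_id, worker, model_path(feature)))
```

If every worker is down, `submit` only enqueues; work resumes as soon as `worker_available` is called, which is the behavior that makes worker availability less critical than head availability.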

The S3 buckets store the models and the results of the generation process. There are 2 buckets:

- Result bucket: used to save the generated images.

- Model bucket: used to deploy the different models to the infrastructure. The worker nodes then synchronize those files so they can be used by the Ray.io worker instances.
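The model-bucket synchronization can be pictured as a one-way sync into the workers' model directory. In practice this might be done with the AWS CLI (`aws s3 sync s3://<model-bucket> <local-dir>`) or an S3 client; to keep the sketch self-contained, it assumes a local directory standing in for the bucket and copies only files that are missing locally or whose size or modification time differ. The `sync_models` function is hypothetical.

```python
import shutil
from pathlib import Path


def sync_models(bucket_dir: Path, model_dir: Path) -> list[str]:
    """One-way sync from a local stand-in for the model bucket to the
    worker's model directory. Returns the relative paths that were copied."""
    copied = []
    for src in sorted(bucket_dir.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(bucket_dir)
        dst = model_dir / rel
        src_stat = src.stat()
        # Skip files that already exist with the same size and are not older.
        if dst.exists():
            dst_stat = dst.stat()
            if dst_stat.st_size == src_stat.st_size and dst_stat.st_mtime >= src_stat.st_mtime:
                continue
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copy2 also preserves the modification time
        copied.append(rel.as_posix())
    return copied
```

Running it twice in a row copies nothing the second time, so it can be scheduled periodically on each worker node without re-downloading large model files.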

The database is a PostgreSQL instance, used to persist the application data in a relational database.
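As an illustration of the kind of data the database might hold, the sketch below models the generation-task status that the Ray cluster updates and the backend reads. The `generation_task` table, its columns, and the status values are hypothetical. `sqlite3` stands in for PostgreSQL only so the example runs without a server; with a PostgreSQL driver such as psycopg, the `?` placeholders would become `%s`.

```python
import sqlite3
from typing import Optional, Tuple

# Hypothetical task table; status goes PENDING -> COMPLETED.
SCHEMA = """
CREATE TABLE IF NOT EXISTS generation_task (
    id         TEXT PRIMARY KEY,
    prompt     TEXT NOT NULL,
    status     TEXT NOT NULL DEFAULT 'PENDING',
    result_key TEXT  -- object key of the image in the result bucket
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def create_task(conn: sqlite3.Connection, task_id: str, prompt: str) -> None:
    # Backend side: register a new task before handing it to the cluster.
    conn.execute("INSERT INTO generation_task (id, prompt) VALUES (?, ?)", (task_id, prompt))
    conn.commit()


def complete_task(conn: sqlite3.Connection, task_id: str, result_key: str) -> None:
    # Cluster side: called once the image has been saved to the result bucket.
    conn.execute(
        "UPDATE generation_task SET status = 'COMPLETED', result_key = ? WHERE id = ?",
        (result_key, task_id),
    )
    conn.commit()


def task_status(conn: sqlite3.Connection, task_id: str) -> Tuple[str, Optional[str]]:
    # Read side: what the backend would return when the frontend polls.
    row = conn.execute(
        "SELECT status, result_key FROM generation_task WHERE id = ?", (task_id,)
    ).fetchone()
    if row is None:
        raise KeyError(task_id)
    return row[0], row[1]
```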

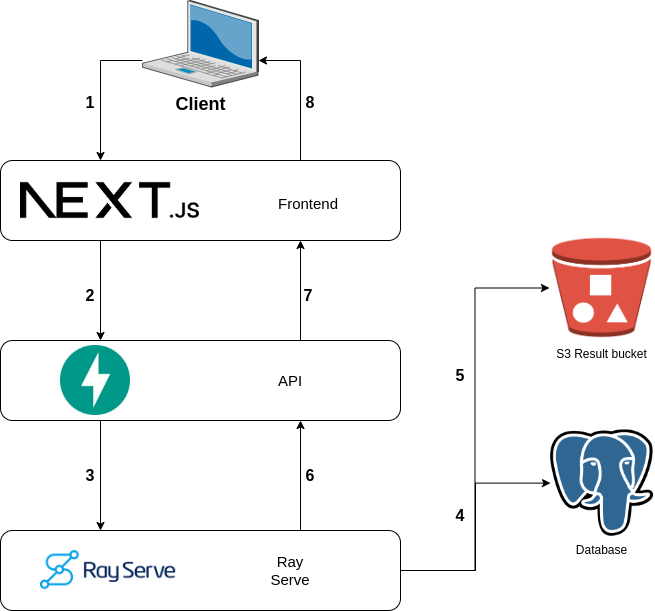

A simple generation flow would work like this:

- The user sends a generation action from the frontend.

- The frontend requests a generation task from the backend.

- The backend sends the prompt to the Ray.io cluster.

- The Ray cluster generates an image and saves it in the result S3 bucket.

- The Ray cluster updates the task status in the PostgreSQL database.

- The Ray cluster responds to the backend request.

- The backend tells the frontend that the task is being executed.

- The frontend polls the backend to detect when the image has been generated.
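The flow above can be simulated end to end in a single process, reading it as asynchronous: the backend returns a task id right away and the frontend polls until the status changes. This is a sketch under stated assumptions, not Ray's or FastAPI's API: a `ThreadPoolExecutor` stands in for the Ray cluster, and two dicts stand in for the result S3 bucket and the PostgreSQL task table. All function and variable names are hypothetical.

```python
import threading
import time
import uuid
from concurrent.futures import ThreadPoolExecutor

# Stand-ins (assumptions): the result S3 bucket and the task-status table.
result_bucket: dict[str, bytes] = {}
task_table: dict[str, str] = {}
_lock = threading.Lock()

# Stand-in for the Ray cluster: a small pool of "GPU workers".
ray_cluster = ThreadPoolExecutor(max_workers=2)


def generate_image(task_id: str, prompt: str) -> None:
    """Cluster side: generate, save to the result bucket, update the status."""
    time.sleep(0.05)  # pretend to run the image-generation model
    image = f"image for: {prompt}".encode()
    with _lock:
        result_bucket[f"results/{task_id}.png"] = image
        task_table[task_id] = "COMPLETED"


def backend_generate(prompt: str) -> str:
    """Backend side: register the task, hand it to the cluster, return at once."""
    task_id = uuid.uuid4().hex
    with _lock:
        task_table[task_id] = "RUNNING"
    ray_cluster.submit(generate_image, task_id, prompt)
    return task_id


def frontend_poll(task_id: str, interval: float = 0.01, timeout: float = 5.0) -> bytes:
    """Frontend side: poll until the image is ready or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        with _lock:
            if task_table.get(task_id) == "COMPLETED":
                return result_bucket[f"results/{task_id}.png"]
        time.sleep(interval)
    raise TimeoutError(f"task {task_id} did not finish within {timeout}s")
```

Polling keeps the frontend simple at the cost of some extra requests; the `interval` and `timeout` values here are arbitrary illustration choices.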