Confusing coordinate system. #153

Replies: 11 comments 29 replies


sorry for the shift in coordinate system! internally, ngp works entirely within a 0-1 bounding box, as displayed in the gui, with cameras looking down positive z. that's just the convention we chose early on. however, we wanted to be compatible specifically with the original nerf datasets, which place the origin at 0, scale the cameras to be around 3 units from the origin, and have a different convention for 'up', camera up, etc.

so the snippet you found could be described as 'converting from the original nerf paper conventions to ngp's conventions'.
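As a rough illustration of that conversion, here is a minimal Python sketch. The helper name `nerf_to_ngp` is hypothetical, and the sign flips on the camera y and z axes are an assumption based on a reading of the linked `nerf_loader.h` snippet, so check them against the source before relying on them. The axis cycle and the `scale`/`offset` handling do agree with the test pose from the original question (translation [0.8, 1.0, 1.2], scale 1.0, zero offset), which the testbed displayed at (1, 1.2, 0.8).

```python
import numpy as np

def nerf_to_ngp(nerf_c2w, scale=1.0, offset=(0.0, 0.0, 0.0)):
    """Hypothetical helper: map a 4x4 NeRF-style camera-to-world matrix
    to a 3x4 matrix in ngp's frame."""
    m = np.array(nerf_c2w, dtype=np.float64)[:3, :]  # keep the top 3x4 block
    # Assumption: the loader negates the camera's y and z axes (columns 1 and 2).
    m[:, 1] *= -1.0
    m[:, 2] *= -1.0
    # The translation is scaled and shifted by the JSON's 'scale' and 'offset'.
    m[:, 3] = m[:, 3] * scale + np.asarray(offset, dtype=np.float64)
    # Cycle the world axes: new x = old y, new y = old z, new z = old x.
    return m[[1, 2, 0], :]

pose = np.eye(4)
pose[:3, 3] = [0.8, 1.0, 1.2]
ngp_pose = nerf_to_ngp(pose, scale=1.0, offset=(0.0, 0.0, 0.0))
print(ngp_pose[:, 3])  # camera position in ngp's frame
```

The printed position matches the (1, 1.2, 0.8) reported from the testbed, which is the main evidence for the axis cycle used here.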

over time, the 'original nerf' format of transforms.json became the dominant (only practical) way to get data into ngp, so it comes across as confusing because we don't explicitly document the mapping.

In the end, I am afraid it is what it is.

you can adjust the scale and offset components of the transformation by adding extra parameters to the json; see nerf_loader.cu, which looks for the keys 'offset', 'scale' and 'aabb'. however, the coordinate flipping is hard coded for the time being.
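One way to use the 'scale' and 'offset' keys is to choose them so that the region of interest lands inside the 0-1 box. The sketch below is only an illustration: `fit_scale_offset` is a hypothetical helper, and it assumes the loader maps translations as `p * scale + offset` in the file's own axis order (before the hard-coded axis cycle). The 'aabb' key is left out because its value format is not described in this thread.

```python
import json
import numpy as np

def fit_scale_offset(points, margin=0.1):
    """Hypothetical helper: pick a uniform scale and an offset so that
    p * scale + offset falls inside [margin, 1 - margin] on every axis."""
    p = np.asarray(points, dtype=np.float64)
    lo, hi = p.min(axis=0), p.max(axis=0)
    center = 0.5 * (lo + hi)
    extent = float((hi - lo).max())          # largest side of the bounding box
    scale = (1.0 - 2.0 * margin) / extent if extent > 0 else 1.0
    offset = 0.5 - center * scale            # send the box center to (0.5, 0.5, 0.5)
    return scale, offset.tolist()

# Two made-up points spanning the region that should end up inside the unit cube.
points = [[0.8, 1.0, 1.2], [-1.2, 1.0, 3.2]]
scale, offset = fit_scale_offset(points)

transforms = {"camera_angle_x": 2.1273956954128375, "frames": []}
transforms["scale"] = scale
transforms["offset"] = offset
print(json.dumps(transforms, indent=2))
```

Because the offset is expressed in the file's axes, not the axes shown in the gui, it is worth loading one known pose and comparing it against the testbed before converting a whole dataset.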

On Fri, Jan 21, 2022 at 5:07 PM Martijn Courteaux wrote:

I'm trying to wrap my head around the coordinate system, and it looks unlike anything I've seen before. I set up a simple test transforms.json:

```json
{
  "camera_angle_x": 2.1273956954128375,
  "scale": 1.0,
  "offset": [0.0, 0.0, 0.0],
  "frames": [
    {
      "file_path": "png/view_f0000f",
      "transform_matrix": [
        [1.0, 0.0, 0.0, 0.8],
        [0.0, 1.0, 0.0, 1.0],
        [0.0, 0.0, 1.0, 1.2],
        [0.0, 0.0, 0.0, 1.0]
      ]
    }
  ]
}
```

Notice that the camera I set up has an identity rotation and the translation vector [0.8, 1.0, 1.2]. The result looks like this:

[image: image]

<https://user-images.githubusercontent.com/845012/150566804-e932cdc4-b977-4a0c-9d4f-3ecaae490d93.png>

[image: image]

<https://user-images.githubusercontent.com/845012/150567363-a5ecf787-111a-46ca-9db7-1b499c115acd.png>

[image: image]

<https://user-images.githubusercontent.com/845012/150567796-e4dec845-4aea-463e-b456-751db1adf200.png>

Confusing things:

- The camera and the unit cube have different orientations for X, Y, and Z (i.e., the red, green, and blue axes). (see first image)
- The position of the camera relative to the unit cube seems wrong. The camera seems to be at x=1 and z=0.8, whereas I specified x to be 0.8 and z to be 1.2. (see second image)
- The camera is at y=1.2, whereas it should be 1.0. (see third image)

So, the position in the testbed is (1, 1.2, 0.8), but it should be (0.8, 1.0, 1.2). This shuffles around ALL axes. After a long search, I found this snippet:

https://github.com/NVlabs/instant-ngp/blob/409613afdc08f69342a9269b9e674604229d183f/include/neural-graphics-primitives/nerf_loader.h#L50-L70

So, my question kinda reduces to: how to think about this coordinate system, as I still haven't figured out how to convert my dataset transformations to yours.



For reference for others. This is what I have right now, based on a position and Euler rotations for a Blender-like camera:

```python
import numpy as np

# average_position is not defined in this snippet; it is a global set elsewhere
# (presumably the mean camera position, used to center the scene).

def generate_transform_matrix(pos, rot):
    # Elementary rotations about the x, y and z axes (angles in radians).
    def Rx(theta):
        return np.array([[1, 0,             0            ],
                         [0, np.cos(theta), -np.sin(theta)],
                         [0, np.sin(theta),  np.cos(theta)]])

    def Ry(theta):
        return np.array([[ np.cos(theta), 0, np.sin(theta)],
                         [ 0,             1, 0            ],
                         [-np.sin(theta), 0, np.cos(theta)]])

    def Rz(theta):
        return np.array([[np.cos(theta), -np.sin(theta), 0],
                         [np.sin(theta),  np.cos(theta), 0],
                         [0,              0,             1]])

    # Euler angles applied in x, then y, then z order.
    R = Rz(rot[2]) @ Ry(rot[1]) @ Rx(rot[0])
    xf_rot = np.eye(4)
    xf_rot[:3, :3] = R
    xf_pos = np.eye(4)
    xf_pos[:3, 3] = np.asarray(pos) - average_position

    # barbershop_mirros_hd_dense:
    # - camera plane is y+z plane, meaning: constant x-values
    # - cameras look to +x
    # Don't ask me...
    extra_xf = np.array([
        [-1, 0, 0, 0],
        [ 0, 0, 1, 0],
        [ 0, 1, 0, 0],
        [ 0, 0, 0, 1]])

    # The NeRF loader will cycle the axes forward, so let's cycle backward.
    shift_coords = np.array([
        [0, 0, 1, 0],
        [1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1]])

    xf = shift_coords @ extra_xf @ xf_pos
    # Sanity check: the combined transform must not mirror or scale the scene.
    assert np.abs(np.linalg.det(xf) - 1.0) < 1e-4
    xf = xf @ xf_rot
    return xf
```
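To see why the `shift_coords` matrix helps, the short check below composes it with the forward axis cycle observed in the original question (where [0.8, 1.0, 1.2] showed up in the testbed as (1, 1.2, 0.8)). The name `cycle_forward` is introduced here only for illustration; it models that observed behavior and is not taken from the ngp source.

```python
import numpy as np

# Forward cycle as observed in the testbed:
# new x = old y, new y = old z, new z = old x.
cycle_forward = np.array([
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1]])

# Backward cycle, same values as shift_coords in the post.
shift_coords = np.array([
    [0, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1]])

p = np.array([0.8, 1.0, 1.2, 1.0])       # homogeneous test position
print(cycle_forward @ p)                 # what the testbed showed without pre-shifting
print(cycle_forward @ shift_coords @ p)  # pre-shifted position comes out unchanged

# The two permutations cancel exactly.
assert np.array_equal(cycle_forward @ shift_coords, np.eye(4, dtype=int))
```

Since the two permutations are inverses, pre-multiplying a pose by `shift_coords` leaves positions where they were specified once the loader has applied its own cycle.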


Absolutely beautiful!! nerf_barbershop_spherical_2.small.mp4


thank you for this! I am tempted to make a pull request for you that disables as much of the coordinate transformation as possible. don't worry, the default won't change, so your dataset will continue to work. I feel guilty about the trouble we caused :)


I think I wouldn't be the only one who would appreciate a non-weirdly-behaving coordinate system 😋 I must admit, I got to this point by brute-forcing transformation matrices and a little bit of iterative educated guessing. I'm confused, as the upside-down camera flip we discussed earlier no longer shows up explicitly in my transformation code.


hey, does anyone know how I could import a camera I tracked in 3D software like Blender into instant-ngp as a camera path?


I think you guys could help me with my issue; I can't find the problem. The coordinates of the camera are off. #1286


Hello, I am dealing with an issue that I feel is related. If anybody could help me, I would greatly appreciate it! You can find my issue here: #1360


Hello everyone,

Beta Was this translation helpful? Give feedback.

-

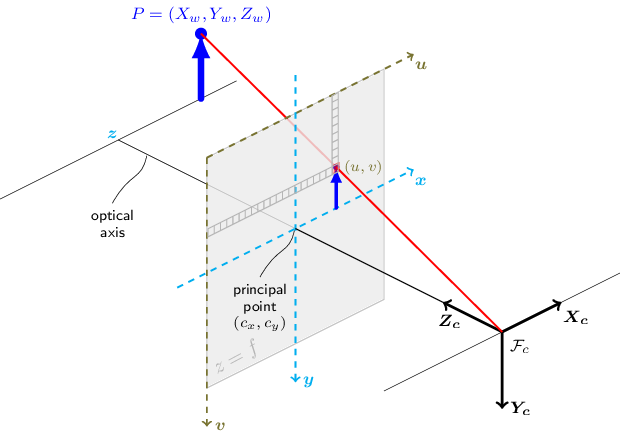

