Memory leak if stream is not explicitly closed #1117

Comments


Hi @hmaarrfk, have you found any other solution besides explicitly closing containers and streams? This is my current code that encodes and decodes from the list of numpy array images:

```python
with io.BytesIO() as buf:
    with av.open(buf, "w", format=container_string) as container:
        stream = container.add_stream(codec, rate=rate, options=options)
        stream.height = imgs[0].shape[0]
        stream.width = imgs[0].shape[1]
        stream.pix_fmt = pixel_fmt
        for img in imgs_padded:
            frame = av.VideoFrame.from_ndarray(img, format="rgb24")
            frame.pict_type = "NONE"
            for packet in stream.encode(frame):
                container.mux(packet)
        # Flush stream
        for packet in stream.encode():
            container.mux(packet)
        stream.close()
    outputs = []
    with av.open(buf, "r", format=container_string) as video:
        for i, frame in enumerate(video.decode(video=0)):
            if sequence_length <= i < sequence_length * 2:
                outputs.append(frame.to_rgb().to_ndarray().astype(np.uint8))
            if i >= sequence_length * 2:
                break
        video.streams.video[0].close()
```

No, I just explicitly call `close`.
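For readers who want the explicit close to run even when encoding or decoding raises, one option is the standard library's `contextlib.closing`. The sketch below is only an illustration: `FakeStream` and `encode_all` are hypothetical stand-ins, not PyAV objects, and it assumes the real stream exposes a `close()` method as it does in the snippets in this thread.

```python
from contextlib import closing


class FakeStream:
    """Hypothetical stand-in for a PyAV stream; it only records whether close() ran."""

    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True


def encode_all(stream, frames):
    """Process frames and guarantee stream.close() runs, even if a frame raises."""
    processed = []
    with closing(stream):
        for frame in frames:
            # Placeholder for the real work (stream.encode(frame) + container.mux).
            processed.append(frame)
    return processed


stream = FakeStream()
result = encode_all(stream, ["frame0", "frame1"])
```

With a real stream, the same `with closing(stream):` pattern would replace the trailing `stream.close()` call, under the assumption stated above.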

Ok, thanks. I later found out the memory leak only occurs if I use the

I can also reproduce this, without It seems to happen when I process the frames in a different process, and `stream.close` causes everything to hang if I use more than one process. Multiple bugs bundled into one 😅

Hi, @meakbiyik

Hey @RoyaltyLJW, I still use

@meakbiyik Thanks a lot. It fixed my deadlock issue.

Not stale

I also experienced the same issue: when deployed in Docker, not closing the stream causes memory instability. @hmaarrfk you saved my life with this extremely niche bug report.

Hi, I just encountered a similar problem on repeated video decoding. It seems that adding the line marked in the code below fixes it. The code I'm using:

```python
from contextlib import closing

import av  # v9.2.0 in my case


def iterate_frames(path: str, *, with_threads: bool = False):
    with av.open(path) as container:
        stream = container.streams.video[0]
        if with_threads:
            stream.thread_type = 'AUTO'
        for packet in container.demux(stream):
            for frame in packet.decode():
                yield frame
        # stream.codec_context.close()  # seems to be the fix


while True:
    for frame in iterate_frames("myvideo.mp4", with_threads=True):
        pass
```

I can still see a slight memory increase after many iterations, but it fluctuates within a range of several MB, while without the fixing line the memory consumption rockets up into gigabytes.
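To put numbers on this kind of growth, a rough check is to record the process's peak resident set size after each pass. This is a minimal sketch, not part of the original report: `peak_rss_mib`, `track_peak_memory`, and `example_workload` are hypothetical names, the `resource` module is Unix only, and because peak RSS never decreases it shows growth rather than fluctuation.

```python
import resource
import sys


def peak_rss_mib():
    """Return the peak resident set size of this process in MiB (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux and in bytes on macOS.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


def track_peak_memory(workload, iterations):
    """Run workload() repeatedly and record the peak RSS after each run."""
    readings = []
    for _ in range(iterations):
        workload()
        readings.append(peak_rss_mib())
    return readings


def example_workload():
    # Hypothetical placeholder; in practice this would be one full decode pass.
    sum(range(10_000))


readings = track_peak_memory(example_workload, iterations=5)
growth = readings[-1] - readings[0]
print(f"peak RSS grew by {growth:.1f} MiB over {len(readings)} runs")
```

Swapping `example_workload` for one full pass over `iterate_frames(...)`, with and without the closing line, should make the difference between the two cases visible.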

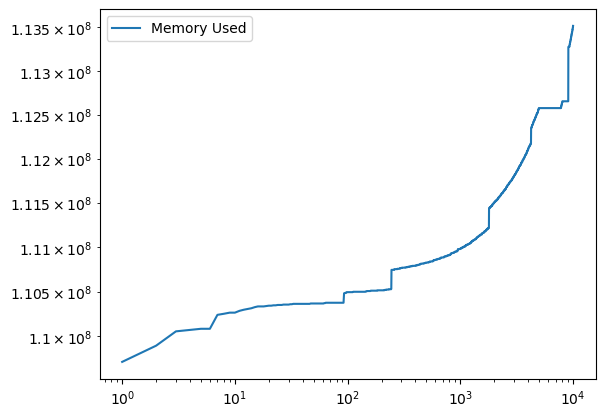

Overview

I think there is a memory leak that occurs if you don't explicitly close a container or stream.

I'm still trying to narrow the problem down, but I have a minimal reproducing example that seems worth sharing at this stage.

It seems that `__dealloc__` isn't called as expected maybe??? https://github.com/PyAV-Org/PyAV/blob/main/av/container/input.pyx#L88

Expected behavior

That the memory be cleared. If I add the `close` calls to the loop I get.

Versions
