
Added FAQs for Experiment Results, and an additional FAQ in Charts #406


Lint fix: #410

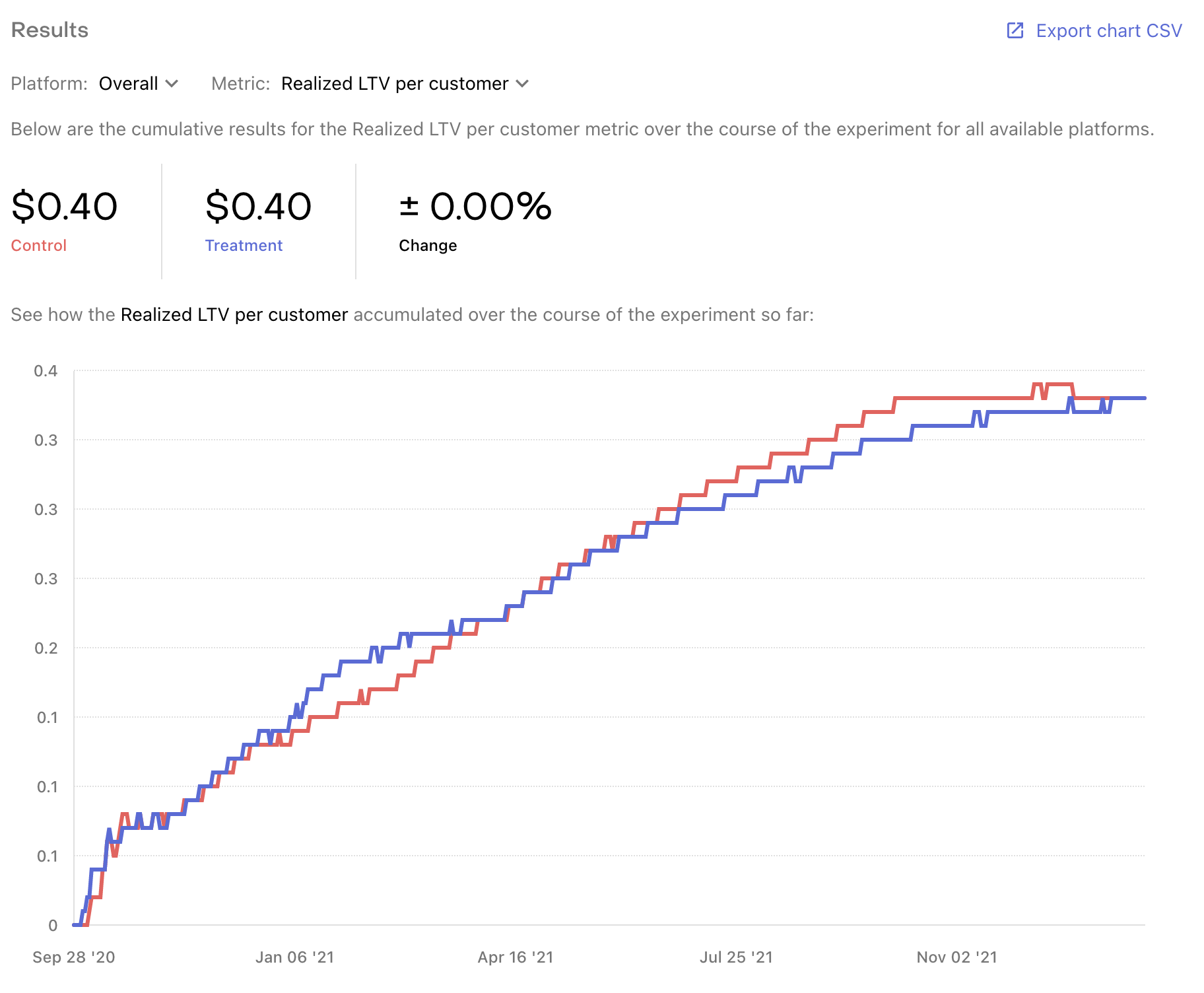

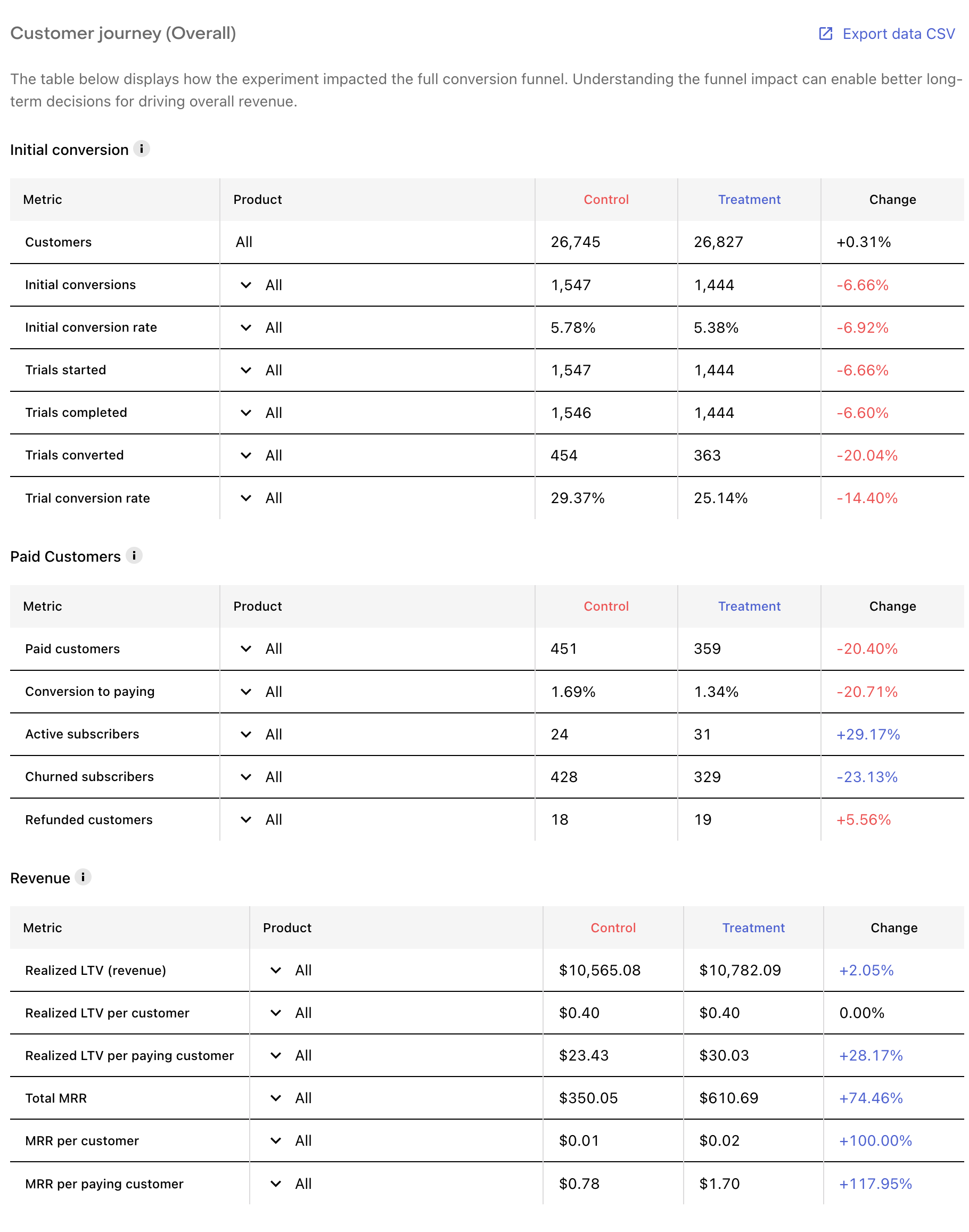

Preview: temp/experiments-results-v1.md

Within 24 hours of your experiment's launch, you'll start seeing data on the Results page. RevenueCat offers experiment results through each step of the subscription journey to give you a comprehensive view of your test's impact. You can dig into these results in a few different ways, which we'll cover below.

Results chart

The Results chart should be your primary source for understanding how a specific metric has performed for each variant over the lifetime of your experiment.

Customer journey tables

The customer journey tables let you dig into and compare your results across variants. The customer journey for a subscription product can be complex: a "conversion" may only be the start of a trial, a single payment is only a portion of the total revenue that subscription may eventually generate, and other events like refunds and cancellations are critical to understanding how a cohort is likely to monetize over time. To help parse your results, we've broken up experiment results into three tables:

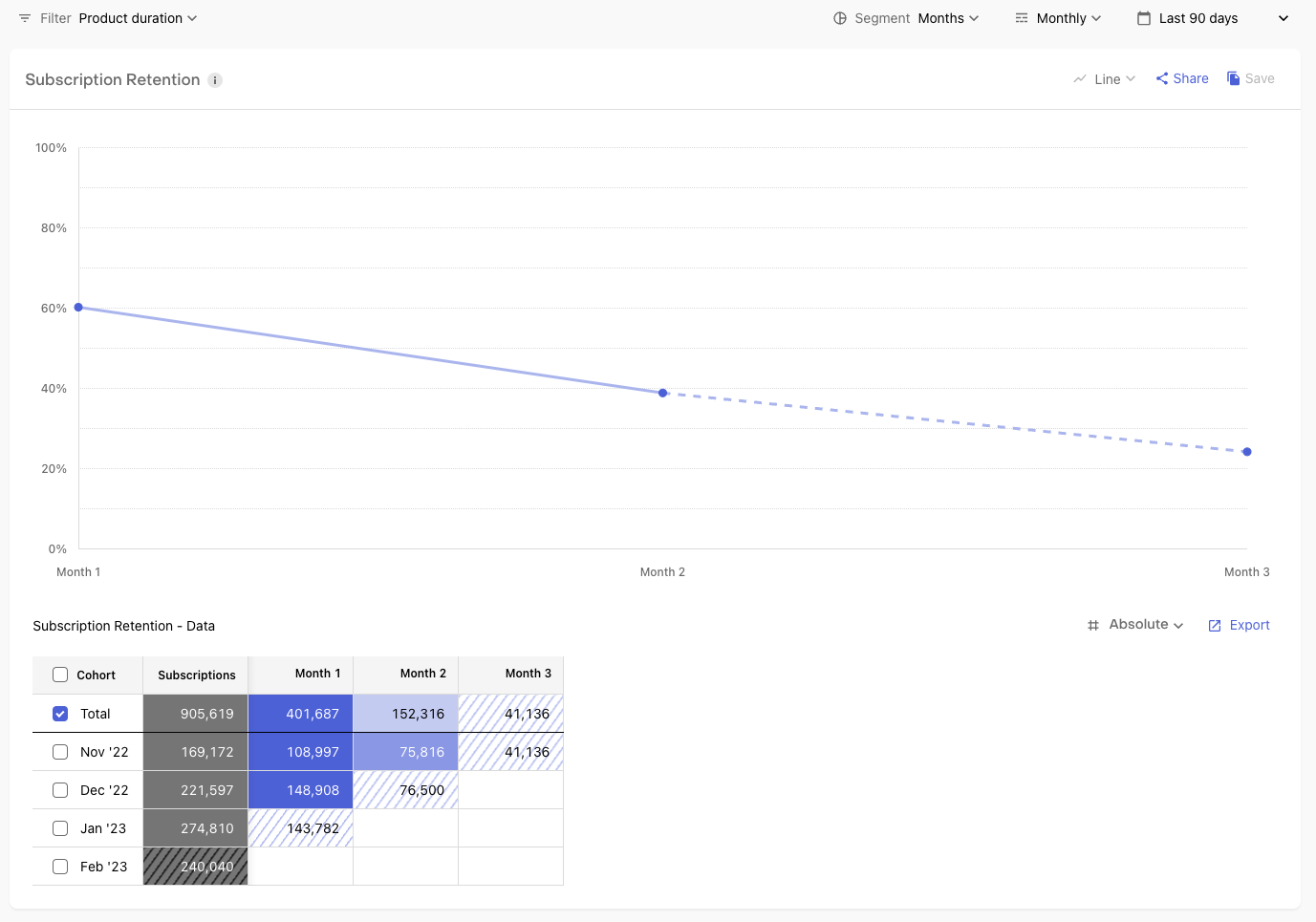

Metric definitions

Initial conversion metric definitions
[block:parameters]

Paid customers metric definitions
[block:parameters]

Revenue metric definitions
[block:parameters]

[block:callout]

FAQs
[block:parameters]

Preview: temp/subscription-retention-chart.md

Definition

Subscription Retention shows you how paying subscriptions renew and retain over time by cohort. Cohorts are segmented by subscription start date by default, but can also be segmented by other fields, such as Country or Product.

Available settings

Segmentation & cohorting

When segmenting by subscription start date, cohorts are defined by the date each paid subscription started. Subsequent periods along the horizontal table indicate how many subscriptions continued renewing through those periods.

When segmenting by other dimensions, such as Country or Store, cohorts are defined by the values within that dimension (e.g., App Store or Play Store for the Store segment) and include all paid subscriptions started within the specified date range. Subsequent periods along the horizontal table indicate the portion of subscriptions that successfully renewed out of all those that had the opportunity to renew in a given period.

How to use Subscription Retention in your business

Measuring Subscription Retention is crucial for understanding:

By studying this data, you may learn which customer segments deserve more of your product and marketing focus, or where you have an opportunity to improve your pricing to increase retention over time.

Calculation

For each period, we measure:

Subscriptions: The count of new paid subscriptions started within that period.

Retention through each available period
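The per-period retention described above, for both segmentation modes, can be sketched in Python. This is a minimal illustration under our own assumptions, not RevenueCat's implementation: the `Subscription` record, its fields, and the `retention_curve` / `retention_table` helpers are hypothetical, periods are integer indices, and "had the opportunity to renew in period k" is read as "started at least k periods before the current period". Under that reading, both modes share one calculation and differ only in the grouping key.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Union

GroupKey = Union[int, str]


@dataclass(frozen=True)
class Subscription:
    """Hypothetical record of one paid subscription (not a RevenueCat type)."""
    start_period: int  # index of the period in which the paid subscription started
    renewals: int      # successful renewals observed so far (0 = never renewed)
    country: str = ""  # example non-date dimension to segment by


def retention_curve(subs: List[Subscription], current_period: int,
                    max_k: int) -> List[Optional[float]]:
    """For each relative period k, the share of subscriptions that renewed
    through period k among those old enough to have had the opportunity to
    renew in period k (assumed reading). None means no subscription in the
    group has reached period k yet."""
    curve: List[Optional[float]] = []
    for k in range(max_k + 1):
        # Assumption: a subscription "had the opportunity" to reach period k
        # if it started at least k periods before the current period.
        eligible = [s for s in subs if s.start_period + k <= current_period]
        if not eligible:
            curve.append(None)
            continue
        retained = sum(1 for s in eligible if s.renewals >= k)
        curve.append(retained / len(eligible))
    return curve


def retention_table(subs: List[Subscription],
                    key: Callable[[Subscription], GroupKey],
                    current_period: int,
                    max_k: int) -> Dict[GroupKey, List[Optional[float]]]:
    """Group subscriptions by `key` (start period or another dimension) and
    compute one retention curve per group, in sorted group order."""
    groups: Dict[GroupKey, List[Subscription]] = defaultdict(list)
    for s in subs:
        groups[key(s)].append(s)
    return {g: retention_curve(members, current_period, max_k)
            for g, members in sorted(groups.items())}


# Usage with made-up illustrative data (not real results).
subs = [
    Subscription(start_period=0, renewals=2, country="US"),
    Subscription(start_period=0, renewals=0, country="DE"),
    Subscription(start_period=1, renewals=1, country="US"),
    Subscription(start_period=2, renewals=0, country="US"),
]

by_start = retention_table(subs, lambda s: s.start_period, current_period=2, max_k=2)
by_country = retention_table(subs, lambda s: s.country, current_period=2, max_k=2)
print(by_start)    # {0: [1.0, 0.5, 0.5], 1: [1.0, 1.0, None], 2: [1.0, None, None]}
print(by_country)  # {'DE': [1.0, 0.0, 0.0], 'US': [1.0, 1.0, 1.0]}
```

Grouping by `start_period` puts same-age subscriptions together, so the denominator is simply the cohort size. Grouping by `country` pools subscriptions of different ages, which is why the denominator for each period counts only the subscriptions old enough to have reached it.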

Formula

When segmenting by Subscription Start Date

When segmenting by other dimensions
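One possible way to write the two cases, consistent with the definitions under Segmentation & cohorting, is sketched below. The notation is hypothetical and ours, not RevenueCat's: $n_s$ is the number of successful renewals of subscription $s$, $t_s$ its start period, $T$ the current period, $C$ a start-date cohort, and $D_v$ the paid subscriptions started in the selected date range whose dimension value is $v$. Reading "had the opportunity to renew" as $t_s + k \le T$ is an assumption.

```latex
% Start-date cohort C (all members share start period t_C), for k with t_C + k \le T
R_C(k) = \frac{\left|\{\, s \in C : n_s \ge k \,\}\right|}{|C|}

% Other dimension value v: only subscriptions old enough to have reached period k count
R_v(k) = \frac{\left|\{\, s \in D_v : t_s + k \le T,\ n_s \ge k \,\}\right|}
              {\left|\{\, s \in D_v : t_s + k \le T \,\}\right|}
```

When every subscription in a group shares the same start period and $t_s + k \le T$, the second expression reduces to the first.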

FAQs
[block:parameters]


LGTM!

Motivation / Description

Adds FAQs for Experiment Results to address the most common questions that come up. I also bundled in an additional FAQ for the Subscription Retention chart.

Changes introduced

Linear ticket (if any)

Additional comments