
Simultaneous Testing Docs #670

base: main


Preview: temp/configuring-experiments-v1.md

Before setting up an experiment, make sure you've created the Offerings you want to test. This may include:

You should also test the Offerings you've chosen on any platform your app supports.

Setting up a new experiment

First, navigate to your Project. Then, click on Experiments in the Monetization tools section.

Select + New to create a new experiment.

Required fields

To create your experiment, you must first enter the following required fields:

Audience customization

Next, you can optionally customize the audience that will be enrolled in your experiment using Customize enrollment criteria and New customers to enroll.

Customize enrollment criteria

Select from any of the available dimensions to filter which new customers are enrolled in your experiment.

New customers to enroll

You can modify the percentage of new customers to enroll in 10% increments, based on how much of your audience you want to expose to the test. Keep in mind that enrolled new customers are split between the two variants, so a test that enrolls 10% of new customers would yield 5% in the Control group and 5% in the Treatment group.

Once done, select CREATE EXPERIMENT to complete the process.

Starting an experiment

When viewing a new experiment, you can start, edit, or delete the experiment.
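This minimal Python sketch checks the enrollment arithmetic from New customers to enroll. It converts an enrollment percentage into the share of *all* new customers that each variant receives, assuming the even two-way split described above. The function name `variant_shares` is hypothetical and is not part of any RevenueCat API.

```python
def variant_shares(enroll_pct: int) -> tuple[float, float]:
    """Return the (Control, Treatment) share of ALL new customers, in percent."""
    # Enrollment is chosen in 10% increments: 10, 20, ..., 100
    if enroll_pct not in range(10, 101, 10):
        raise ValueError("enroll_pct must be a multiple of 10 between 10 and 100")
    # Enrolled customers are split evenly between the two variants
    per_variant = enroll_pct / 2
    return per_variant, per_variant


print(variant_shares(10))  # (5.0, 5.0)
print(variant_shares(30))  # (15.0, 15.0)
```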

Running multiple tests simultaneously

You can use Experiments to run multiple tests simultaneously as long as:

If a test you've created does not meet the above criteria, we'll alert you in the Dashboard and you'll be prevented from starting the test.

Examples of valid tests to run simultaneously

Scenario #1 -- Multiple tests on unique audiences

Scenario #2 -- Multiple tests on identical audiences

Examples of invalid tests to run simultaneously

Scenario #3 -- Multiple tests on partially overlapping audiences

Scenario #4 -- Multiple tests on >100% of an identical audience
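The four scenarios can be condensed into two checks: audiences must be either disjoint or identical, and tests that share an identical audience may not enroll more than 100% of it in total. The Python sketch below encodes these two checks. It is only an assumption inferred from the scenario titles, because the criteria list itself is not shown here. It also simplifies an audience to a hypothetical set of segments (for example, countries). The real Dashboard defines audiences through enrollment criteria dimensions and runs its own validation. `Experiment` and `can_run_together` are illustrative names only.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Experiment:
    name: str
    # Hypothetical model: an audience is the set of segments (e.g. countries) it matches
    audience: frozenset[str]
    # Percentage of that audience enrolled in the test
    enroll_pct: int


def can_run_together(tests: list[Experiment]) -> bool:
    # Check 1 (assumed): any two audiences must be either fully disjoint or identical
    for a, b in combinations(tests, 2):
        if a.audience & b.audience and a.audience != b.audience:
            return False  # partially overlapping audiences (Scenario #3)
    # Check 2 (assumed): tests on an identical audience may enroll at most 100% of it in total
    totals: dict[frozenset[str], int] = {}
    for t in tests:
        totals[t.audience] = totals.get(t.audience, 0) + t.enroll_pct
    return all(total <= 100 for total in totals.values())  # Scenario #4 fails here


us = frozenset({"US"})
eu = frozenset({"DE", "FR"})
north_america = frozenset({"US", "CA"})

print(can_run_together([Experiment("price", us, 50), Experiment("trial", eu, 50)]))             # True  (Scenario #1)
print(can_run_together([Experiment("price", us, 20), Experiment("trial", us, 20)]))             # True  (Scenario #2)
print(can_run_together([Experiment("price", north_america, 20), Experiment("trial", us, 20)]))  # False (Scenario #3)
print(can_run_together([Experiment("price", us, 60), Experiment("trial", us, 50)]))             # False (Scenario #4)
```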

FAQs

Preview: temp/experiments-overview-v1.md

Experiments allow you to answer questions about your users' behaviors and your app's business by A/B testing two Offerings in your app and analyzing the full subscription lifecycle to understand which variant is producing more value for your business. While price testing is one of the most common forms of A/B testing in mobile apps, Experiments are based on RevenueCat Offerings, which means you can A/B test more than just prices, including trial length, subscription length, different groupings of products, etc. You can even use our Paywalls or Offering Metadata to remotely control and A/B test any aspect of your paywall. Learn more.

How does it work?

After you configure the two Offerings you want and add them to an Experiment, RevenueCat randomly assigns users to a cohort in which they see only one of the two Offerings. Everything is done server-side, so no changes to your app are required if you're already displaying the current Offering for a given customer in your app.
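RevenueCat performs cohort assignment server-side, and the docs here don't describe its algorithm. The Python sketch below shows one common way to get a random but *stable* assignment, deterministic hash-based bucketing, so that the same customer sees the same Offering on every launch. It is not RevenueCat's implementation. `assign_cohort`, `app_user_id`, and `experiment_id` are hypothetical names, and the even Control/Treatment split follows the enrollment description in Configuring Experiments.

```python
import hashlib
from typing import Optional


def assign_cohort(app_user_id: str, experiment_id: str, enroll_pct: int) -> Optional[str]:
    # Hash the (experiment, user) pair so a given customer always lands in the same bucket
    key = f"{experiment_id}:{app_user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the hash to a bucket in [0, 100) with 0.01 resolution
    bucket = int.from_bytes(digest[:8], "big") % 10_000 / 100
    if bucket >= enroll_pct:
        return None  # not enrolled (assumed: keeps seeing the default Offering)
    # Split the enrolled range in half: lower half -> Control, upper half -> Treatment
    return "control" if bucket < enroll_pct / 2 else "treatment"


# Prints None, "control", or "treatment", and the same value on every call
print(assign_cohort("user_123", "exp_price_test", 20))
```

Because the salt includes the experiment ID, one customer's bucket in one experiment is independent of their bucket in another experiment.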

![ab-test](https://files.readme.io/229d551-experiments-learn.webp)

As soon as a customer is enrolled in an experiment, they'll be included in the "Customers" count on the Experiment Results page, and you'll see any trial starts, paid conversions, status changes, etc. represented in the corresponding metrics. (Learn more here)

Implementation requirements

Experiments require you to use Offerings and to have a dynamic paywall in your app that displays the current Offering for a given customer. While Experiments will work with iOS and Android SDKs 3.0.0+, we recommend using these versions:

If you meet these requirements, you can start using Experiments without any app changes! If not, take a look at Displaying Products. The Swift sample app has an example of a dynamic paywall that is Experiments-ready.

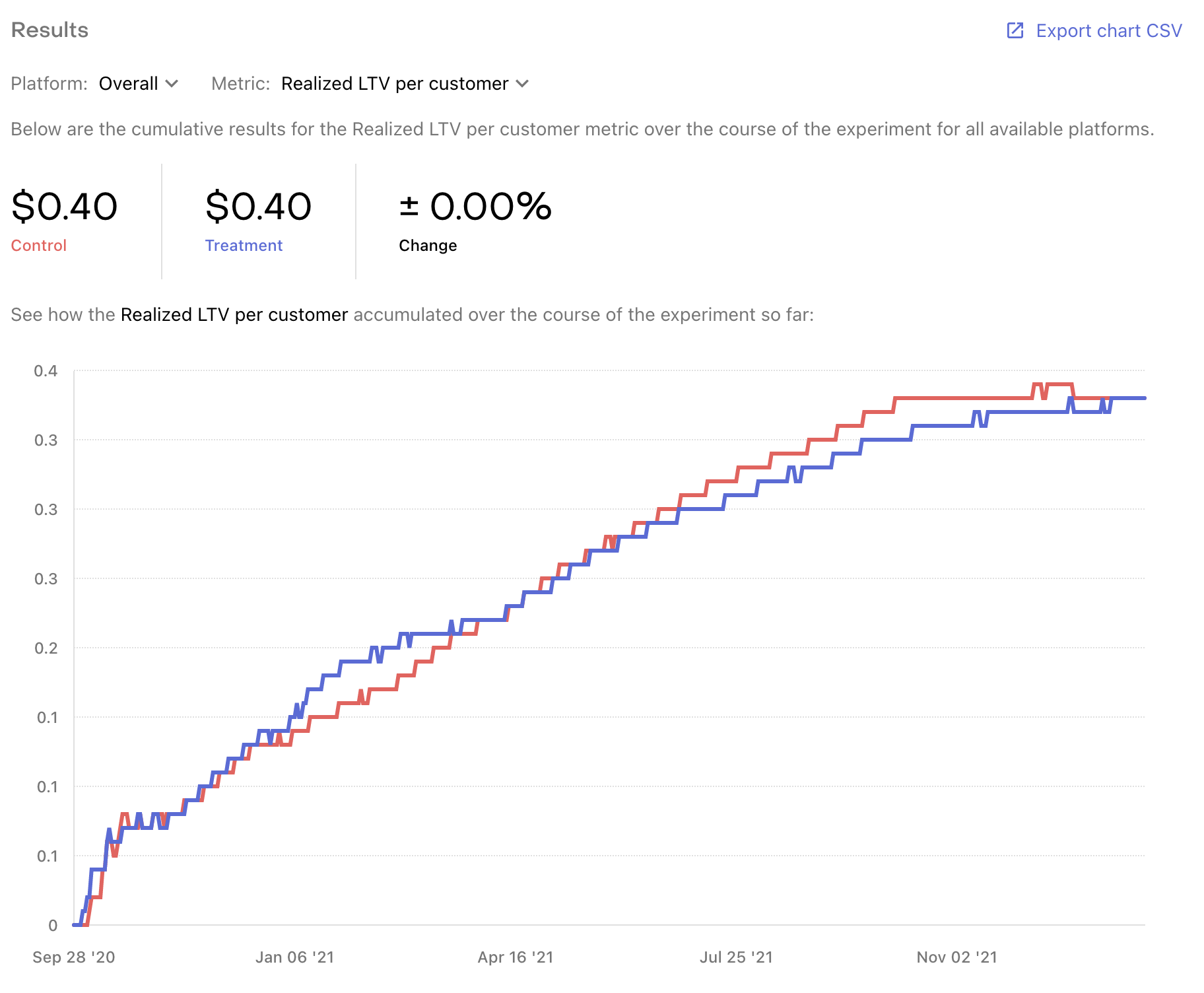

Visit Configuring Experiments to learn how to set up your first test.

Tips for Using Experiments

**Decide how long you want to run your experiments**

There's no time limit on tests. Consider the timescales that matter for you. For example, if you're comparing monthly vs yearly, yearly might outperform in the short term because of its high short-term revenue, but monthly might outperform in the long term. Keep in mind that if the difference in performance between your variants is very small, the likelihood that you're seeing statistically significant data is also lower. "No result" from an experiment is still a result: it means your change was likely not impactful enough to help or hurt your performance either way.

**Test only one variable at a time**

It's tempting to test multiple variables at once, such as free trial length and price; resist that temptation! The results are often clearer when only one variable is tested. You can run more tests for other variables as you further optimize your LTV.

**Run multiple tests simultaneously to isolate variables & audiences**

If you're looking to test the price of a product and its optimal trial length, you can run 2 tests simultaneously that each target a subset of your total audience. For example, Test #1 can test price with 20% of your audience, and Test #2 can test trial length with a different 20% of your audience. You can also use this approach to test different variables with different audiences and optimize your Offering by country, app, and more.

**Bigger changes will validate faster**

Small differences ($3 monthly vs $2 monthly) will often show ambiguous results and may take a long time to show clear results. Try bolder changes like $3 monthly vs $10 monthly to start triangulating your optimal price.

**Running a test with a control**

Sometimes you want to compare a different Offering to the one that is already the default. If so, you can set one of the variants to the Offering that is currently used in your app.
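The tip "Bigger changes will validate faster" follows from how sample size scales with effect size. The Python sketch below uses the standard normal-approximation formula for a two-sided comparison of two conversion rates to estimate how many customers each variant needs. This is only an illustration. The docs here don't say which statistical method the Results page uses, and the conversion rates are made-up inputs, not RevenueCat data. Real price tests also weigh revenue, not just conversion.

```python
from math import ceil
from statistics import NormalDist


def customers_per_variant(p_control: float, p_treatment: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate customers needed in EACH variant for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    # n grows with 1 / effect^2, so halving the effect roughly quadruples n
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical conversion rates: a small lift vs a bold one
small_lift = customers_per_variant(0.05, 0.06)
bold_lift = customers_per_variant(0.05, 0.10)
print(small_lift, bold_lift)
```

With these inputs, the small lift needs more than ten times as many customers per variant as the bold one.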
**Run follow-up tests after completing one test**

After you run a test and find that one Offering won over the other, try running another test that compares the winning Offering against another similar Offering. This way, you can continually optimize for lifetime value (LTV). For example, if you ran a price test between a $5 product and a $7 product and the $7 Offering won, try running another test between an $8 product and the $7 winner to find the price that results in the highest LTV.

Preview: temp/experiments-results-v1.md

Within 24 hours of your experiment's launch, you'll start seeing data on the Results page. RevenueCat offers experiment results through each step of the subscription journey to give you a comprehensive view of your test's impact. You can dig into these results in a few different ways, which we'll cover below.

Results chart

The Results chart should be your primary source for understanding how a specific metric has performed for each variant over the lifetime of your experiment.

You can also click Export chart CSV to receive an export of all metrics by day for deeper analysis.

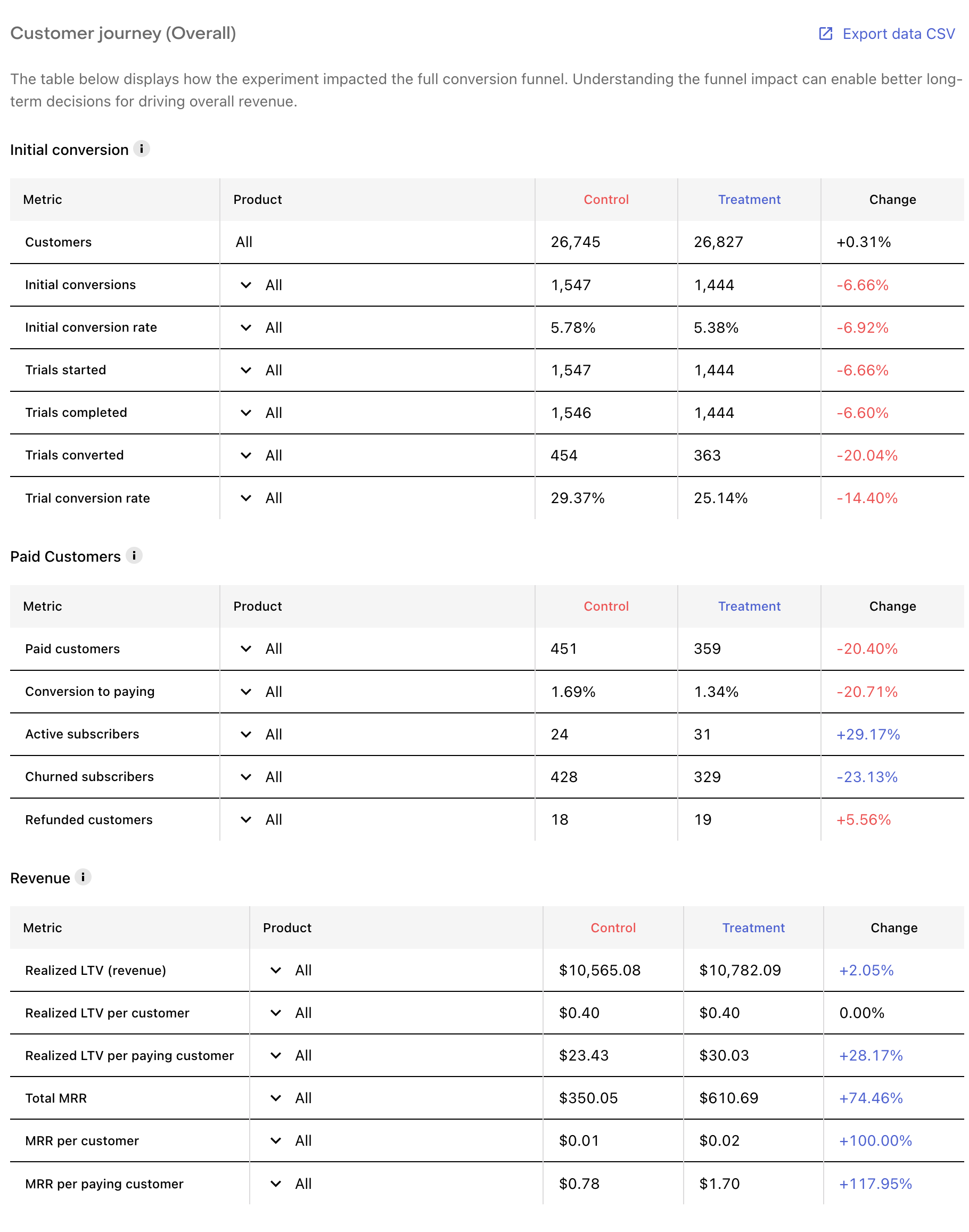

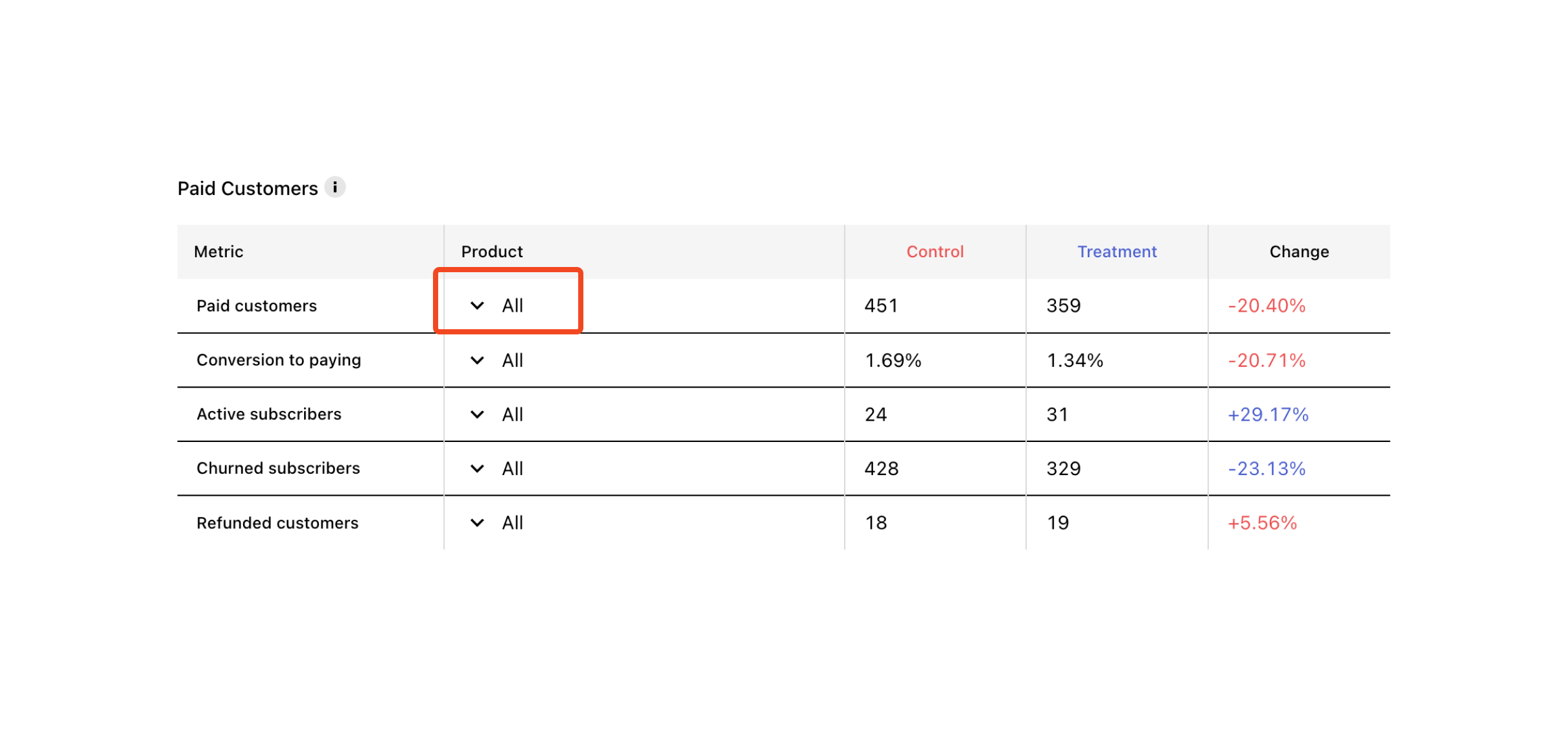

Customer journey tables

The customer journey tables can be used to dig into and compare your results across variants. The customer journey for a subscription product can be complex: a "conversion" may only be the start of a trial, a single payment is only a portion of the total revenue that subscription may eventually generate, and other events like refunds and cancellations are critical to understanding how a cohort is likely to monetize over time. To help parse your results, we've broken up experiment results into three tables:

The results from your experiment can also be exported in this table format using the Export data CSV button. The export includes aggregate results per variant as well as per-product results, for flexible analysis.
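After exporting with Export data CSV, you might roll per-product rows up to per-variant totals. The Python sketch below does this with only the standard library. The column names `variant`, `product_id`, and `revenue` are hypothetical, because the export's actual headers aren't listed here, and the sample rows are made up for illustration.

```python
import csv
import io
from collections import defaultdict


def revenue_by_variant(csv_text: str) -> dict[str, float]:
    """Sum a per-product revenue column into per-variant totals."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Hypothetical column names; match them to the headers in your actual export
        totals[row["variant"]] += float(row["revenue"])
    return dict(totals)


# Made-up rows for illustration only
sample = """variant,product_id,revenue
control,monthly_3,120.00
control,annual_30,300.00
treatment,monthly_10,250.00
"""
print(revenue_by_variant(sample))  # {'control': 420.0, 'treatment': 250.0}
```

For a real export, read the file with `open(path, newline="")` and pass its contents in.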

Metric definitions

Initial conversion metric definitions

Paid customers metric definitions

Revenue metric definitions

FAQs

Take the guesswork out of pricing & paywalls

RevenueCat Experiments allow you to optimize your subscription pricing and paywall design with easy-to-deploy A/B tests backed by comprehensive cross-platform results. With Experiments, you can A/B test two different Offerings in your app and analyze the full subscription lifecycle to understand which variant is producing more value for your business.

With Experiments, you can remotely A/B test:

Plus, you can run multiple tests simultaneously on distinct audiences or on subsets of the same audience to accelerate your learning.

Get started with Experiments


Motivation / Description

Changes introduced

Linear ticket (if any)

Additional comments