Project 1: Navigation

Train an agent to navigate (and collect bananas!) in a large, square world.

- Task: Episodic

- Reward: +1 for collecting a yellow banana; -1 for collecting a blue banana.

- State space:

  - Vector environment: 37 dimensions, including the agent's velocity and ray-based perception of objects around the agent's forward direction.

  - Visual environment: (84, 84, 3), i.e. 84x84 RGB images with 3 color channels.

- Action space:

  - 0: move forward
  - 1: move backward
  - 2: turn left
  - 3: turn right

Project 2: Continuous Control

Train an agent (a double-jointed arm) to maintain its position at the target location for as many time steps as possible.

- Task: Continuous

- Reward: +0.1 for each time step that the agent's hand is in the goal location.

- State space:

  - Single-agent environment: (1, 33)

    - 33 dimensions consisting of the position, rotation, velocity, and angular velocities of the arm.

    - 1 agent

  - Multi-agent environment: (20, 33)

    - 33 dimensions consisting of the position, rotation, velocity, and angular velocities of the arm.

    - 20 agents

- Action space: Each action is a vector of four numbers, corresponding to the torques applied to the two joints. Every entry in the action vector must be a number between -1 and 1.
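
The [-1, 1] bound is easy to violate once exploration noise is added to a policy's output. Below is a minimal plain-Python sketch of clamping a batch of actions, one row of four torques per agent. The helper name `clip_actions` and the noisy input are hypothetical; in practice the same effect is usually obtained with `numpy.clip` or a `tanh` output layer.

```python
NUM_AGENTS = 20   # multi-agent version, matching the (20, 33) state shape
ACTION_SIZE = 4   # four torque values for the two joints


def clip_actions(actions, low=-1.0, high=1.0):
    """Clamp every entry of every agent's action vector into [low, high]."""
    return [[max(low, min(high, a)) for a in row] for row in actions]


# Hypothetical noisy policy output: one row per agent, ACTION_SIZE entries per row.
noisy = [[0.1 * i - 1.5 + 0.5 * j for j in range(ACTION_SIZE)] for i in range(NUM_AGENTS)]
actions = clip_actions(noisy)
print(all(-1.0 <= a <= 1.0 for row in actions for a in row))  # True
print(clip_actions([[1.7, -0.3, -2.0, 0.5]]))  # [[1.0, -0.3, -1.0, 0.5]]
```

Clamping keeps the environment call valid, though it also flattens the gradient signal at the bounds, which is one reason a `tanh` output layer is often preferred.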

Project 3: Collaboration and Competition

Train two agents to play ping pong, where the goal of each agent is to keep the ball in play.

- Task: Continuous

- Reward: +0.1 for hitting the ball over the net; -0.01 if the ball hits the ground or goes out of bounds.

- State space:

  - Multi-agent environment: (2, 24)

    - 24 dimensions per agent, consisting of position, rotation, velocity, etc.

- Action space: Two continuous actions are available, corresponding to movement toward (or away from) the net, and jumping.

- Target score: the environment is considered solved when the average episode score, taken over 100 episodes, is at least +0.5.
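
A minimal sketch of checking the target score follows. It assumes two things the text does not state: each episode's score is the maximum over the two agents of their summed, undiscounted rewards, and the 100-episode average is a rolling window over the most recent episodes. `episode_score` and `SolvedTracker` are hypothetical names.

```python
from collections import deque


def episode_score(rewards_per_agent):
    """Assumed scoring rule: sum each agent's rewards, then keep the best agent's total."""
    return max(sum(rewards) for rewards in rewards_per_agent)


class SolvedTracker:
    """Tracks a rolling window of episode scores against the +0.5 target."""

    def __init__(self, window=100, target=0.5):
        self.scores = deque(maxlen=window)  # drops the oldest score once full
        self.target = target

    def add(self, score):
        self.scores.append(score)

    def average(self):
        return sum(self.scores) / len(self.scores) if self.scores else 0.0

    def solved(self):
        # Only declare success once a full window of episodes has been seen.
        return len(self.scores) == self.scores.maxlen and self.average() >= self.target


tracker = SolvedTracker()
for _ in range(99):
    tracker.add(episode_score([[0.1, 0.1, 0.1, 0.1, 0.1, 0.1], [0.0, -0.01]]))
print(tracker.solved())  # False: only 99 episodes so far
tracker.add(0.6)
print(tracker.solved())  # True
```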

- Modular code for each environment.

- Dueling Network Architectures with DQN

- Lambda return for REINFORCE (n-step bootstrap)

- Apply prioritized experience replay to all the environments and compare the results, while keeping the code modular.

- Actor-Critic
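
For the dueling-network item above, the part that differs from a plain DQN is how the two streams are combined. Assuming the common mean-subtracted aggregation, Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a'), a plain-Python sketch of just that step looks like this. In an actual network, `value` and `advantages` would come from two heads on a shared torso; `dueling_q_values` is a hypothetical name.

```python
def dueling_q_values(value, advantages):
    """Combine V(s) and per-action advantages A(s, a) into Q(s, a).

    Adding a constant to V and subtracting it from every A would leave Q
    unchanged, so subtracting the mean advantage pins down the V/A split.
    """
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


# Hypothetical head outputs for the 4 Navigation actions:
# 0 forward, 1 backward, 2 turn left, 3 turn right.
q = dueling_q_values(1.0, [0.5, -0.5, 0.0, 1.0])
print(q)  # [1.25, 0.25, 0.75, 1.75]
greedy_action = max(range(len(q)), key=q.__getitem__)
print(greedy_action)  # 3
```

Because the mean is subtracted, the average of the Q-values equals V(s), and the greedy action is the same as the argmax of the advantages.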

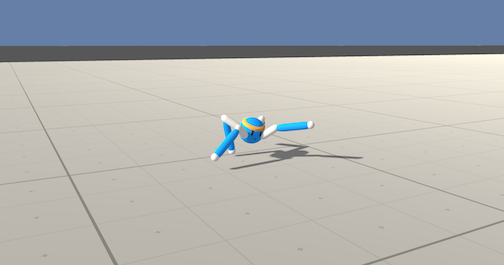
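
For the lambda-return item above, one way to read "n-step bootstrap" is the lambda-return, an exponentially weighted mix of n-step returns that can be computed in one backward pass: G_t = r_t + gamma * ((1 - lambda) * V(s_{t+1}) + lambda * G_{t+1}). The sketch assumes a learned critic supplies V, since plain REINFORCE has none; `lambda_returns` and its default `gamma`/`lam` values are hypothetical.

```python
def lambda_returns(rewards, values, bootstrap_value, gamma=0.99, lam=0.95):
    """Backward-pass lambda-returns for one trajectory of length T.

    rewards[t]      -- reward received after acting at step t (t = 0..T-1)
    values[t]       -- critic estimate V(s_t) (t = 0..T-1)
    bootstrap_value -- V(s_T) for the state after the last step (0.0 if terminal)
    """
    returns = [0.0] * len(rewards)
    next_return = bootstrap_value  # beyond the last step, the return is just V(s_T)
    next_value = bootstrap_value
    for t in reversed(range(len(rewards))):
        # Mix the one-step bootstrap V(s_{t+1}) with the longer return G_{t+1}.
        next_return = rewards[t] + gamma * ((1 - lam) * next_value + lam * next_return)
        returns[t] = next_return
        next_value = values[t]  # becomes V(s_{t+1}) for step t - 1
    return returns


# Hypothetical 3-step trajectory cut off before the episode ended.
rewards = [0.0, 0.0, 1.0]
values = [0.5, 0.6, 0.8]
returns = lambda_returns(rewards, values, bootstrap_value=0.7)
advantages = [g - v for g, v in zip(returns, values)]
print(returns, advantages)
```

Setting `lam=1.0` recovers the Monte Carlo return bootstrapped only at the end of the trajectory, and `lam=0.0` gives the one-step TD target. `returns[t] - values[t]` can then serve as the advantage that weights the log-probability in a REINFORCE-style update.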

- Crawler for Continuous Control

- Add TensorFlow graphs instead of manual dictionary graphs for all environments.

- Continuous Control test phase.

- Parallel environments, and how efficient the weight sharing is.
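
For the prioritized experience replay item above, a minimal proportional variant samples transition i with probability P(i) = p_i^alpha / sum_k p_k^alpha and corrects the resulting bias with importance weights w_i = (N * P(i))^(-beta). The list-based sketch below is O(N) per sample and normalizes weights by the batch maximum, a common simplification. A sum-tree is the usual choice at scale. The class name and the `alpha`, `beta`, `eps` defaults are assumptions, not this repository's code.

```python
import random


class ProportionalReplay:
    """Minimal proportional prioritized replay (list-based, O(N) sampling)."""

    def __init__(self, alpha=0.6, beta=0.4, eps=1e-5):
        self.alpha, self.beta, self.eps = alpha, beta, eps
        self.data, self.priorities = [], []

    def add(self, transition):
        # New transitions get the current max priority so they are sampled at least once.
        p = max(self.priorities, default=1.0)
        self.data.append(transition)
        self.priorities.append(p)

    def sample(self, batch_size):
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]  # P(i)
        idx = random.choices(range(len(self.data)), weights=probs, k=batch_size)
        n = len(self.data)
        weights = [(n * probs[i]) ** (-self.beta) for i in idx]  # importance weights
        max_w = max(weights)
        weights = [w / max_w for w in weights]  # scale into (0, 1]
        return [self.data[i] for i in idx], idx, weights

    def update_priorities(self, idx, td_errors):
        # Priority is |TD error| + eps so no transition ever gets zero probability.
        for i, delta in zip(idx, td_errors):
            self.priorities[i] = abs(delta) + self.eps


random.seed(0)
buffer = ProportionalReplay()
for t in range(8):
    buffer.add(("state", t, "action", "reward", "next_state"))
batch, idx, weights = buffer.sample(4)
# Hypothetical TD errors reported by the learner for the sampled transitions.
buffer.update_priorities(idx, [0.5, 2.0, 0.1, 1.0])
```

Each sampled transition's loss is multiplied by its weight, and `beta` is typically annealed toward 1 over training so the bias correction becomes complete by the end.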

Create a Docker image:

    docker build --tag deep_rl .

Run the image, exposing Jupyter Notebook on port 8888 and mounting the working directory:

    docker run -it -p 8888:8888 -v /path/to/your/local/workspace:/workspace/DeepRL --name deep_rl deep_rl

Start Jupyter Notebook:

    jupyter notebook --no-browser --allow-root --ip 0.0.0.0