-

Notifications

You must be signed in to change notification settings - Fork 24

Using APEX with Kokkos

No special support in APEX is needed to measure Kokkos loops in an application. To configure APEX for a vanilla Kokkos profiling scenario, do (changing gcc/g++ to whatever compilers you are using for your application, and modifying the installation prefix if desired):

git clone https://github.com/UO-OACISS/apex.git

cd apex

mkdir build

cd build

cmake \

-DCMAKE_C_COMPILER=`which gcc` \

-DCMAKE_CXX_COMPILER=`which g++` \

-DCMAKE_BUILD_TYPE=Release \

-DCMAKE_INSTALL_PREFIX=${cwd}/install \

..

make -j8

make installTo use APEX with a sample application, you just prefix your program launch with apex_exec and any APEX options. For example, with this test program:

#include <cmath>

#include <iostream>

#include <vector>

#include <thread>

#include <Kokkos_Core.hpp>

#ifndef EXECUTION_SPACE

#define EXECUTION_SPACE DefaultExecutionSpace

#endif

void go(size_t i) {

int n = 512;

Kokkos::View<double*> a("a",n);

Kokkos::View<double*> b("b",n);

Kokkos::View<double*> c("c",n);

auto range = Kokkos::RangePolicy<Kokkos::EXECUTION_SPACE>(0,n);

Kokkos::parallel_for(

"initialize", range, KOKKOS_LAMBDA(size_t const i) {

auto x = static_cast<double>(i);

a(i) = sin(x) * sin(x);

b(i) = cos(x) * cos(x);

}

);

Kokkos::parallel_for(

"xpy", range, KOKKOS_LAMBDA(size_t const i) {

c(i) = a(i) + b(i);

}

);

double sum = 0.0;

Kokkos::parallel_reduce(

"sum", range, KOKKOS_LAMBDA(size_t const i, double &lsum) {

lsum += c(i);

}, sum

);

if (i % 10 == 0) {

std::cout << "sum = " << sum / n << std::endl;

}

}

int main(int argc, char *argv[]) {

Kokkos::initialize(argc, argv);

std::cout << "Kokkos execution space: "

<< Kokkos::DefaultExecutionSpace::name() << std::endl;

for (size_t i = 0 ; i < 10 ; i++) {

go(i);

}

Kokkos::finalize();

}...you would run with:

$ ../apex/install/bin/apex_exec --apex:kokkos ./test_default

Program to run : ./test_default

sum = 1

Elapsed time: 0.001552 seconds

Total processes detected: 1

Cores detected on rank 0: 8

Worker Threads observed on rank 0: 1

Available CPU time on rank 0: 0.001552 seconds

Available CPU time on all ranks: 0.001552 seconds

GPU Timers : #calls | mean | total | % total

------------------------------------------------------------------------------------------------

------------------------------------------------------------------------------------------------

CPU Timers : #calls | mean | total | % total

------------------------------------------------------------------------------------------------

Kokkos::parallel_for [Type:Threads, Device: 0] in... : 10 0.000 0.000 9.085

Kokkos::parallel_for [Type:Threads, Device: 0] xpy : 10 0.000 0.000 3.608

Kokkos::parallel_reduce [Type:Threads, Device: 0]... : 10 0.000 0.000 2.706

Kokkos::parallel_for [Type:Threads, Device: 0] hello : 1 0.000 0.000 2.577

Kokkos::parallel_for [Type:Threads, Device: 0] Ko... : 10 0.000 0.000 0.966

Kokkos::parallel_for [Type:Threads, Device: 0] Ko... : 10 0.000 0.000 0.838

Kokkos::parallel_for [Type:Threads, Device: 0] Ko... : 10 0.000 0.000 0.709

------------------------------------------------------------------------------------------------

APEX Idle : 0.001 79.510

------------------------------------------------------------------------------------------------

Total timers : 61Run apex_exec for a list of supported options:

% ./install/bin/apex_exec

Usage:

apex_exec <APEX options> executable <executable options>

where APEX options are zero or more of:

--apex:help show this usage message

--apex:debug run with APEX in debugger

--apex:verbose enable verbose list of APEX environment variables

--apex:screen enable screen text output (on by default)

--apex:quiet disable screen text output

--apex:csv enable csv text output

--apex:tau enable tau profile output

--apex:taskgraph enable taskgraph output

(graphviz required for post-processing)

--apex:tasktree enable tasktree output

(graphviz required for post-processing)

--apex:otf2 enable OTF2 trace output

--apex:otf2path <value> specify location of OTF2 archive

(default: ./OTF2_archive)

--apex:otf2name <value> specify name of OTF2 file (default: APEX)

--apex:gtrace enable Google Trace Events output

--apex:scatter enable scatterplot output

(python required for post-processing)

--apex:openacc enable OpenACC support

--apex:kokkos enable Kokkos support

--apex:kokkos_tuning enable Kokkos runtime autotuning support

--apex:kokkos_fence enable Kokkos fences for async kernels

--apex:raja enable RAJA support

--apex:pthread enable pthread wrapper support

--apex:memory enable memory wrapper support

--apex:untied enable tasks to migrate cores/OS threads

during execution (not compatible with trace output)

--apex:cuda enable CUDA/CUPTI measurement (default: off)

--apex:cuda_counters enable CUDA/CUPTI counter support (default: off)

--apex:cuda_driver enable CUDA driver API callbacks (default: off)

--apex:cuda_details enable per-kernel statistics where available (default: off)

--apex:hip enable HIP/ROCTracer measurement (default: off)

--apex:hip_metrics enable HIP/ROCProfiler metric support (default: off)

--apex:hip_counters enable HIP/ROCTracer counter support (default: off)

--apex:hip_driver enable HIP/ROCTracer KSA driver API callbacks (default: off)

--apex:hip_details enable per-kernel statistics where available (default: off)

--apex:monitor_gpu enable GPU monitoring services (CUDA NVML, ROCm SMI)

--apex:cpuinfo enable sampling of /proc/cpuinfo (Linux only)

--apex:meminfo enable sampling of /proc/meminfo (Linux only)

--apex:net enable sampling of /proc/net/dev (Linux only)

--apex:status enable sampling of /proc/self/status (Linux only)

--apex:io enable sampling of /proc/self/io (Linux only)

--apex:period <value> specify frequency of OS/HW sampling

--apex:ompt enable OpenMP profiling (requires runtime support)

--apex:ompt_simple only enable OpenMP Tools required events

--apex:ompt_details enable all OpenMP Tools events

--apex:source resolve function, file and line info for address lookups with binutils

(default: function only)

--apex:preload <lib> extra libraries to load with LD_PRELOAD _before_ APEX libraries

(LD_PRELOAD value is added _after_ APEX libraries)

To enable CUDA / CUPTI support, reconfigure/recompile APEX with CUDA support enabled (assuming the CUDA environment is set up and nvcc is in your path). You may also need to provide the CUDAToolkit path with -DCUDAToolkit_ROOT=/path/to/cuda:

cmake \

-DCMAKE_C_COMPILER=`which gcc` \

-DCMAKE_CXX_COMPILER=`which g++` \

-DCMAKE_BUILD_TYPE=Release \

-DCMAKE_INSTALL_PREFIX=../install \

-DAPEX_WITH_CUDA=TRUE \

-DCUDAToolkit_ROOT=/packages/nvhpc/22.11_cuda11.8/Linux_x86_64/22.11/cuda/11.8 \

..Then, to add measurement of CUDA, add the --apex:cuda option:

$ ../apex/install/bin/apex_exec --apex:kokkos --apex:cuda ./test_default.cuda

Program to run : ./test_default.cuda

Kokkos execution space: Cuda

sum = 1

Elapsed time: 0.559486 seconds

Total processes detected: 1

Cores detected on rank 0: 160

Worker Threads observed on rank 0: 1

Available CPU time on rank 0: 0.559486 seconds

Available CPU time on all ranks: 0.559486 seconds

Counter : #samples | minimum | mean | maximum | stddev

------------------------------------------------------------------------------------------------

1 Minute Load average : 1 3.210 3.210 3.210 0.000

GPU: Bytes Allocated : 37 4.000 5.20e+04 6.55e+05 1.55e+05

GPU: Bytes Freed : 31 4.000 4087.871 4224.000 745.609

GPU: Total Bytes Occupied on Device : 68 0.000 1.65e+06 1.81e+06 4.82e+05

GPU: Total Bytes Occupied on Host : 3 256.000 3.61e+05 5.57e+05 2.55e+05

Host: Page-locked Bytes Allocated : 3 256.000 1.86e+05 5.24e+05 2.40e+05

status:Threads : 1 66.000 66.000 66.000 0.000

status:VmData : 1 5.48e+05 5.48e+05 5.48e+05 0.000

status:VmExe : 1 896.000 896.000 896.000 0.000

status:VmHWM : 1 1.85e+04 1.85e+04 1.85e+04 0.000

status:VmLck : 1 0.000 0.000 0.000 0.000

status:VmLib : 1 2.98e+04 2.98e+04 2.98e+04 0.000

status:VmPMD : 1 16.000 16.000 16.000 0.000

status:VmPTE : 1 21.000 21.000 21.000 0.000

status:VmPeak : 1 6.49e+05 6.49e+05 6.49e+05 0.000

status:VmPin : 1 0.000 0.000 0.000 0.000

status:VmRSS : 1 1.85e+04 1.85e+04 1.85e+04 0.000

status:VmSize : 1 6.49e+05 6.49e+05 6.49e+05 0.000

status:VmStk : 1 192.000 192.000 192.000 0.000

status:VmSwap : 1 0.000 0.000 0.000 0.000

status:nonvoluntary_ctxt_switches : 1 0.000 0.000 0.000 0.000

status:voluntary_ctxt_switches : 1 24.000 24.000 24.000 0.000

------------------------------------------------------------------------------------------------

GPU Timers : #calls | mean | total | % total

------------------------------------------------------------------------------------------------

GPU: Stream Synchronize : 53 0.001 0.078 13.996

GPU: Context Synchronize : 5 0.001 0.003 0.453

GPU: void Kokkos::Impl::cuda_parallel_launch_loca... : 10 0.000 0.000 0.014

GPU: Memset : 32 0.000 0.000 0.012

GPU: void Kokkos::Impl::cuda_parallel_launch_loca... : 10 0.000 0.000 0.012

GPU: Memcpy HtoD : 42 0.000 0.000 0.010

GPU: void Kokkos::Impl::cuda_parallel_launch_loca... : 10 0.000 0.000 0.007

GPU: desul::(anonymous namespace)::init_lock_arra... : 1 0.000 0.000 0.005

GPU: Kokkos::(anonymous namespace)::init_lock_arr... : 1 0.000 0.000 0.001

GPU: Kokkos::(anonymous namespace)::init_lock_arr... : 1 0.000 0.000 0.001

GPU: Kokkos::Impl::(anonymous namespace)::query_c... : 1 0.000 0.000 0.001

GPU: Memcpy DtoH : 1 0.000 0.000 0.000

------------------------------------------------------------------------------------------------

CPU Timers : #calls | mean | total | % total

------------------------------------------------------------------------------------------------

cudaDeviceSynchronize : 5 0.051 0.255 45.664

cudaMemset : 2 0.071 0.141 25.255

cudaStreamSynchronize : 53 0.001 0.079 14.047

Kokkos::parallel_reduce [Type:Cuda, Device: 0] sum : 10 0.002 0.021 3.676

cudaGetDeviceProperties : 4 0.000 0.002 0.321

cudaMemcpy : 2 0.001 0.002 0.310

cudaMalloc : 37 0.000 0.001 0.206

cudaFree : 31 0.000 0.001 0.144

cudaLaunchKernel : 34 0.000 0.001 0.111

cudaHostAlloc : 2 0.000 0.000 0.075

cudaMemcpyAsync : 33 0.000 0.000 0.063

Kokkos::parallel_for [Type:Cuda, Device: 0] initi... : 10 0.000 0.000 0.056

cudaMemsetAsync : 30 0.000 0.000 0.053

Kokkos::parallel_for [Type:Cuda, Device: 0] xpy : 10 0.000 0.000 0.040

Kokkos::parallel_for [Type:Cuda, Device: 0] Kokko... : 10 0.000 0.000 0.027

Kokkos::parallel_for [Type:Cuda, Device: 0] Kokko... : 10 0.000 0.000 0.026

Kokkos::parallel_for [Type:Cuda, Device: 0] Kokko... : 10 0.000 0.000 0.025

cudaMemcpyToSymbol : 8 0.000 0.000 0.023

cudaGetDeviceCount : 1 0.000 0.000 0.008

cudaFuncGetAttributes : 3 0.000 0.000 0.006

cudaFuncSetCacheConfig : 3 0.000 0.000 0.003

cudaMallocHost : 1 0.000 0.000 0.003

cudaSetDevice : 1 0.000 0.000 0.003

cudaEventCreate : 1 0.000 0.000 0.002

cudaDeviceSetCacheConfig : 1 0.000 0.000 0.001

------------------------------------------------------------------------------------------------

------------------------------------------------------------------------------------------------

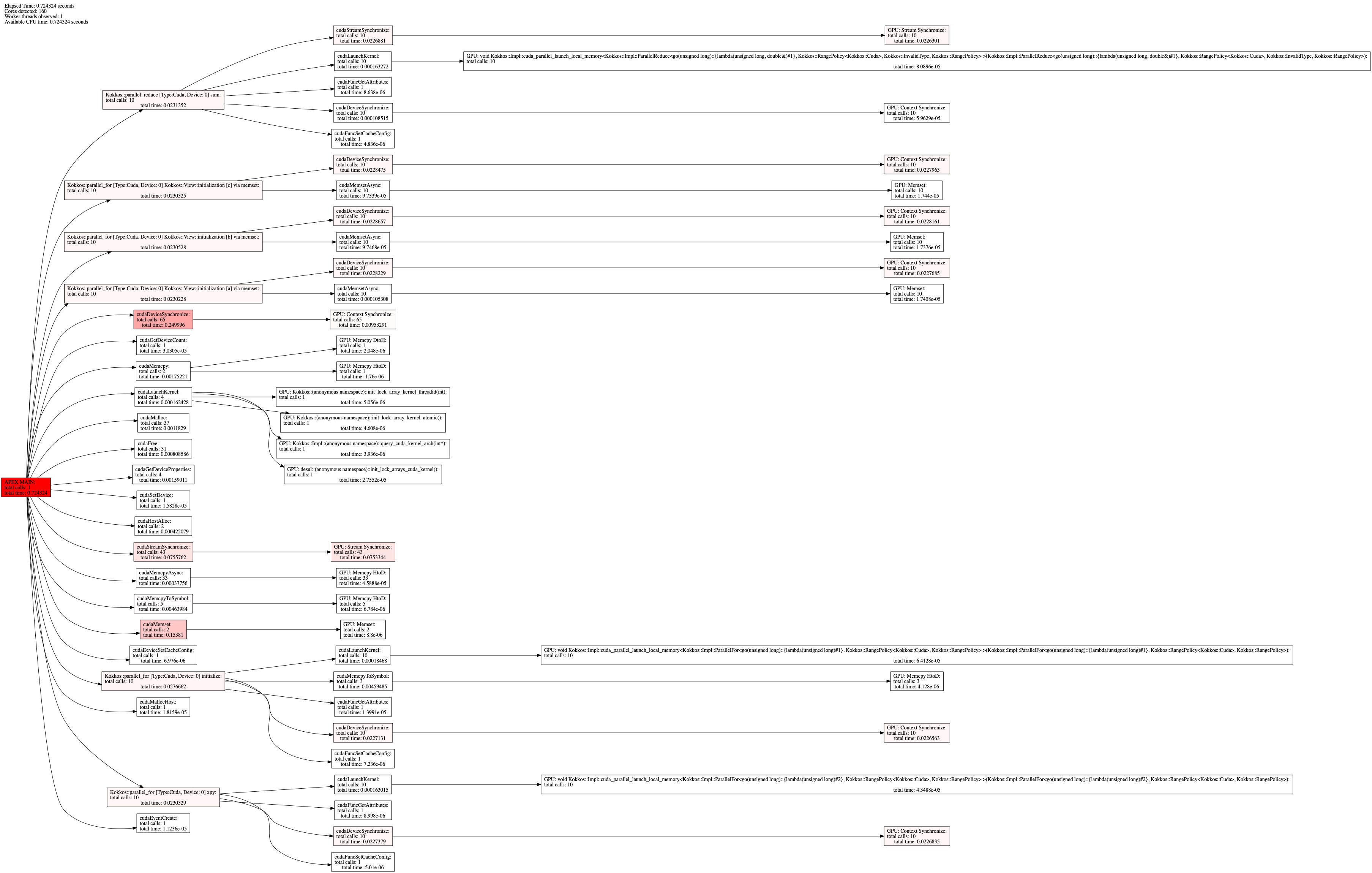

Total timers : 479To see the task dependency hierarchy, run with the --apex:tasktree option, which will generate a apex_tasktree.csv file that can be post-processed by apex-treesummary.py to generate a Graphviz DOT file as well as a human-readable tasktree.txt file:

|-> 0.43476 - 100.0000% [1] {min=0.4348, max=0.4348, mean=0.4348, var=0.0000, std dev=0.0000} APEX MAIN

| |-> 0.20985 - 48.2670% [5] {min=0.0022, max=0.2008, mean=0.0420, var=0.0063, std dev=0.0794} cudaDeviceSynchronize

| | |-> 0.00915 - 4.3614% [5] {min=0.0001, max=0.0023, mean=0.0018, var=0.0000, std dev=0.0009} GPU: Context Synchronize

| | Remainder: 0.2007 - 46.1619%

| |-> 0.07169 - 16.4887% [43] {min=0.0000, max=0.0023, mean=0.0017, var=0.0000, std dev=0.0010} cudaStreamSynchronize

| | |-> 0.07145 - 99.6687% [43] {min=0.0000, max=0.0023, mean=0.0017, var=0.0000, std dev=0.0010} GPU: Stream Synchronize

| | Remainder: 0.0002 - 0.0546%

| |-> 0.03703 - 8.5169% [2] {min=0.0000, max=0.0370, mean=0.0185, var=0.0003, std dev=0.0185} cudaMemset

| | |-> 0.00001 - 0.0239% [2] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | Remainder: 0.0370 - 8.5148%

| |-> 0.02271 - 5.2234% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} Kokkos::parallel_reduce [Type:Cuda, Device: 0] sum

| | |-> 0.02243 - 98.7593% [10] {min=0.0022, max=0.0023, mean=0.0022, var=0.0000, std dev=0.0000} cudaStreamSynchronize

| | | |-> 0.02237 - 99.7432% [10] {min=0.0022, max=0.0023, mean=0.0022, var=0.0000, std dev=0.0000} GPU: Stream Synchronize

| | | Remainder: 0.0001 - 0.2536%

| | |-> 0.00015 - 0.6575% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | | |-> 0.00008 - 52.0754% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: void Kokkos::Impl::cuda_parallel_launch_local_memory<Kokkos::Impl::ParallelReduce<go(unsigned long)::{lambda(unsigned long, double&)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::InvalidType, Kokkos::RangePolicy> >(Kokkos::Impl::ParallelReduce<go(unsigned long)::{lambda(unsigned long, double&)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::InvalidType, Kokkos::RangePolicy>)

| | | Remainder: 0.0001 - 0.3151%

| | |-> 0.00001 - 0.0390% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaFuncGetAttributes

| | |-> 0.00000 - 0.0216% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncSetCacheConfig

| | Remainder: 0.0001 - 0.0273%

| |-> 0.00713 - 1.6391% [10] {min=0.0000, max=0.0069, mean=0.0007, var=0.0000, std dev=0.0021} Kokkos::parallel_for [Type:Cuda, Device: 0] initialize

| | |-> 0.00685 - 96.1635% [3] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaMemcpyToSymbol

| | | |-> 0.00000 - 0.0579% [3] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | | Remainder: 0.0068 - 96.1078%

| | |-> 0.00016 - 2.2617% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | | |-> 0.00006 - 39.7099% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: void Kokkos::Impl::cuda_parallel_launch_local_memory<Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy> >(Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy>)

| | | Remainder: 0.0001 - 1.3636%

| | |-> 0.00001 - 0.1766% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncGetAttributes

| | |-> 0.00001 - 0.1023% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncSetCacheConfig

| | Remainder: 0.0001 - 0.0212%

| |-> 0.00464 - 1.0682% [5] {min=0.0000, max=0.0023, mean=0.0009, var=0.0000, std dev=0.0011} cudaMemcpyToSymbol

| | |-> 0.00001 - 0.1426% [5] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | Remainder: 0.0046 - 1.0666%

| |-> 0.00160 - 0.3675% [4] {min=0.0004, max=0.0004, mean=0.0004, var=0.0000, std dev=0.0000} cudaGetDeviceProperties

| |-> 0.00115 - 0.2652% [37] {min=0.0000, max=0.0003, mean=0.0000, var=0.0000, std dev=0.0001} cudaMalloc

| |-> 0.00079 - 0.1819% [31] {min=0.0000, max=0.0002, mean=0.0000, var=0.0000, std dev=0.0000} cudaFree

| |-> 0.00041 - 0.0943% [2] {min=0.0000, max=0.0004, mean=0.0002, var=0.0000, std dev=0.0002} cudaHostAlloc

| |-> 0.00035 - 0.0806% [33] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemcpyAsync

| | |-> 0.00004 - 12.8374% [33] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | Remainder: 0.0003 - 0.0702%

| |-> 0.00023 - 0.0531% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] xpy

| | |-> 0.00014 - 61.3124% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | | |-> 0.00004 - 28.8812% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: void Kokkos::Impl::cuda_parallel_launch_local_memory<Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#2}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy> >(Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#2}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy>)

| | | Remainder: 0.0001 - 43.6046%

| | |-> 0.00001 - 4.0673% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaFuncGetAttributes

| | |-> 0.00001 - 2.2429% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaFuncSetCacheConfig

| | Remainder: 0.0001 - 0.0172%

| |-> 0.00016 - 0.0367% [4] {min=0.0000, max=0.0001, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | |-> 0.00002 - 14.2163% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} GPU: desul::(anonymous namespace)::init_lock_arrays_cuda_kernel()

| | |-> 0.00001 - 3.3486% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} GPU: Kokkos::(anonymous namespace)::init_lock_array_kernel_threadid(int)

| | |-> 0.00000 - 2.8072% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Kokkos::(anonymous namespace)::init_lock_array_kernel_atomic()

| | |-> 0.00000 - 2.3460% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} GPU: Kokkos::Impl::(anonymous namespace)::query_cuda_kernel_arch(int*)

| | Remainder: 0.0001 - 0.0284%

| |-> 0.00015 - 0.0351% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] Kokkos::View::initialization [a] via memset

| | |-> 0.00010 - 67.3574% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemsetAsync

| | | |-> 0.00002 - 16.8062% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | | Remainder: 0.0001 - 56.0371%

| | Remainder: 0.0000 - 0.0115%

| |-> 0.00014 - 0.0330% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] Kokkos::View::initialization [b] via memset

| | |-> 0.00010 - 67.2569% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemsetAsync

| | | |-> 0.00002 - 18.0111% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | | Remainder: 0.0001 - 55.1432%

| | Remainder: 0.0000 - 0.0108%

| |-> 0.00014 - 0.0326% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] Kokkos::View::initialization [c] via memset

| | |-> 0.00010 - 67.8282% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemsetAsync

| | | |-> 0.00002 - 18.0413% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | | Remainder: 0.0001 - 55.5911%

| | Remainder: 0.0000 - 0.0105%

| |-> 0.00008 - 0.0179% [2] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemcpy

| | |-> 0.00000 - 2.4648% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} GPU: Memcpy DtoH

| | |-> 0.00000 - 2.0951% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} GPU: Memcpy HtoD

| | Remainder: 0.0001 - 0.0171%

| |-> 0.00003 - 0.0078% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaGetDeviceCount

| |-> 0.00002 - 0.0043% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaMallocHost

| |-> 0.00001 - 0.0028% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaEventCreate

| |-> 0.00001 - 0.0026% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaSetDevice

| |-> 0.00001 - 0.0017% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaDeviceSetCacheConfig

| Remainder: 0.0764 - 17.5800%

To inclusively capture the time spent in the Kokkos kernel (not just the launch), use the --apex:kokkos_fence option, which will explicitly fence for each Kokkos region:

|-> 0.72432 - 100.0000% [1] {min=0.7243, max=0.7243, mean=0.7243, var=-0.0000, std dev=nan} APEX MAIN

| |-> 0.25000 - 34.5144% [65] {min=0.0000, max=0.2402, mean=0.0038, var=0.0009, std dev=0.0296} cudaDeviceSynchronize

| | |-> 0.00953 - 3.8132% [65] {min=0.0000, max=0.0023, mean=0.0001, var=0.0000, std dev=0.0005} GPU: Context Synchronize

| | Remainder: 0.2405 - 33.1983%

| |-> 0.15381 - 21.2350% [2] {min=0.0000, max=0.1538, mean=0.0769, var=0.0059, std dev=0.0769} cudaMemset

| | |-> 0.00001 - 0.0057% [2] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | Remainder: 0.1538 - 21.2338%

| |-> 0.07558 - 10.4340% [43] {min=0.0000, max=0.0047, mean=0.0018, var=0.0000, std dev=0.0010} cudaStreamSynchronize

| | |-> 0.07533 - 99.6801% [43] {min=0.0000, max=0.0047, mean=0.0018, var=0.0000, std dev=0.0010} GPU: Stream Synchronize

| | Remainder: 0.0002 - 0.0334%

| |-> 0.02767 - 3.8196% [10] {min=0.0023, max=0.0070, mean=0.0028, var=0.0000, std dev=0.0014} Kokkos::parallel_for [Type:Cuda, Device: 0] initialize

| | |-> 0.02271 - 82.0969% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaDeviceSynchronize

| | | |-> 0.02266 - 99.7500% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} GPU: Context Synchronize

| | | Remainder: 0.0001 - 0.2053%

| | |-> 0.00459 - 16.6082% [3] {min=0.0000, max=0.0023, mean=0.0015, var=0.0000, std dev=0.0011} cudaMemcpyToSymbol

| | | |-> 0.00000 - 0.0898% [3] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | | Remainder: 0.0046 - 16.5933%

| | |-> 0.00018 - 0.6675% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | | |-> 0.00006 - 34.7238% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: void Kokkos::Impl::cuda_parallel_launch_local_memory<Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy> >(Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy>)

| | | Remainder: 0.0001 - 0.4357%

| | |-> 0.00001 - 0.0506% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncGetAttributes

| | |-> 0.00001 - 0.0262% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncSetCacheConfig

| | Remainder: 0.0002 - 0.0210%

| |-> 0.02314 - 3.1940% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} Kokkos::parallel_reduce [Type:Cuda, Device: 0] sum

| | |-> 0.02269 - 98.0673% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaStreamSynchronize

| | | |-> 0.02263 - 99.7442% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} GPU: Stream Synchronize

| | | Remainder: 0.0001 - 0.2509%

| | |-> 0.00016 - 0.7057% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | | |-> 0.00008 - 49.5468% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: void Kokkos::Impl::cuda_parallel_launch_local_memory<Kokkos::Impl::ParallelReduce<go(unsigned long)::{lambda(unsigned long, double&)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::InvalidType, Kokkos::RangePolicy> >(Kokkos::Impl::ParallelReduce<go(unsigned long)::{lambda(unsigned long, double&)#1}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::InvalidType, Kokkos::RangePolicy>)

| | | Remainder: 0.0001 - 0.3561%

| | |-> 0.00011 - 0.4690% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaDeviceSynchronize

| | | |-> 0.00006 - 54.9500% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Context Synchronize

| | | Remainder: 0.0000 - 0.2113%

| | |-> 0.00001 - 0.0373% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncGetAttributes

| | |-> 0.00000 - 0.0209% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncSetCacheConfig

| | Remainder: 0.0002 - 0.0223%

| |-> 0.02305 - 3.1827% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] Kokkos::View::initialization [b] via memset

| | |-> 0.02287 - 99.1884% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaDeviceSynchronize

| | | |-> 0.02282 - 99.7834% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} GPU: Context Synchronize

| | | Remainder: 0.0000 - 0.2149%

| | |-> 0.00010 - 0.4228% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemsetAsync

| | | |-> 0.00002 - 17.8274% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | | Remainder: 0.0001 - 0.3474%

| | Remainder: 0.0001 - 0.0124%

| |-> 0.02303 - 3.1799% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] xpy

| | |-> 0.02274 - 98.7192% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaDeviceSynchronize

| | | |-> 0.02268 - 99.7610% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} GPU: Context Synchronize

| | | Remainder: 0.0001 - 0.2360%

| | |-> 0.00016 - 0.7077% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | | |-> 0.00004 - 26.6773% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: void Kokkos::Impl::cuda_parallel_launch_local_memory<Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#2}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy> >(Kokkos::Impl::ParallelFor<go(unsigned long)::{lambda(unsigned long)#2}, Kokkos::RangePolicy<Kokkos::Cuda>, Kokkos::RangePolicy>)

| | | Remainder: 0.0001 - 0.5189%

| | |-> 0.00001 - 0.0391% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaFuncGetAttributes

| | |-> 0.00001 - 0.0218% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaFuncSetCacheConfig

| | Remainder: 0.0001 - 0.0163%

| |-> 0.02303 - 3.1799% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] Kokkos::View::initialization [c] via memset

| | |-> 0.02285 - 99.1970% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaDeviceSynchronize

| | | |-> 0.02280 - 99.7757% [10] {min=0.0022, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} GPU: Context Synchronize

| | | Remainder: 0.0001 - 0.2225%

| | |-> 0.00010 - 0.4226% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemsetAsync

| | | |-> 0.00002 - 17.9168% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | | Remainder: 0.0001 - 0.3469%

| | Remainder: 0.0001 - 0.0121%

| |-> 0.02302 - 3.1785% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} Kokkos::parallel_for [Type:Cuda, Device: 0] Kokkos::View::initialization [a] via memset

| | |-> 0.02282 - 99.1317% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} cudaDeviceSynchronize

| | | |-> 0.02277 - 99.7617% [10] {min=0.0023, max=0.0023, mean=0.0023, var=0.0000, std dev=0.0000} GPU: Context Synchronize

| | | Remainder: 0.0001 - 0.2362%

| | |-> 0.00011 - 0.4574% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemsetAsync

| | | |-> 0.00002 - 16.5306% [10] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memset

| | | Remainder: 0.0001 - 0.3818%

| | Remainder: 0.0001 - 0.0131%

| |-> 0.00464 - 0.6406% [5] {min=0.0000, max=0.0023, mean=0.0009, var=0.0000, std dev=0.0011} cudaMemcpyToSymbol

| | |-> 0.00001 - 0.1462% [5] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | Remainder: 0.0046 - 0.6396%

| |-> 0.00175 - 0.2419% [2] {min=0.0000, max=0.0017, mean=0.0009, var=0.0000, std dev=0.0008} cudaMemcpy

| | |-> 0.00000 - 0.1169% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} GPU: Memcpy DtoH

| | |-> 0.00000 - 0.1004% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | Remainder: 0.0017 - 0.2414%

| |-> 0.00159 - 0.2195% [4] {min=0.0004, max=0.0004, mean=0.0004, var=0.0000, std dev=0.0000} cudaGetDeviceProperties

| |-> 0.00118 - 0.1633% [37] {min=0.0000, max=0.0003, mean=0.0000, var=0.0000, std dev=0.0001} cudaMalloc

| |-> 0.00081 - 0.1116% [31] {min=0.0000, max=0.0002, mean=0.0000, var=0.0000, std dev=0.0000} cudaFree

| |-> 0.00042 - 0.0583% [2] {min=0.0000, max=0.0004, mean=0.0002, var=0.0000, std dev=0.0002} cudaHostAlloc

| |-> 0.00038 - 0.0521% [33] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMemcpyAsync

| | |-> 0.00005 - 12.1538% [33] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Memcpy HtoD

| | Remainder: 0.0003 - 0.0458%

| |-> 0.00016 - 0.0224% [4] {min=0.0000, max=0.0001, mean=0.0000, var=0.0000, std dev=0.0000} cudaLaunchKernel

| | |-> 0.00003 - 16.9626% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: desul::(anonymous namespace)::init_lock_arrays_cuda_kernel()

| | |-> 0.00001 - 3.1128% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Kokkos::(anonymous namespace)::init_lock_array_kernel_threadid(int)

| | |-> 0.00000 - 2.8369% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Kokkos::(anonymous namespace)::init_lock_array_kernel_atomic()

| | |-> 0.00000 - 2.4232% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} GPU: Kokkos::Impl::(anonymous namespace)::query_cuda_kernel_arch(int*)

| | Remainder: 0.0001 - 0.0167%

| |-> 0.00003 - 0.0042% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaGetDeviceCount

| |-> 0.00002 - 0.0025% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaMallocHost

| |-> 0.00002 - 0.0022% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaSetDevice

| |-> 0.00001 - 0.0016% [1] {min=0.0000, max=0.0000, mean=0.0000, var=0.0000, std dev=0.0000} cudaEventCreate

| |-> 0.00001 - 0.0010% [1] {min=0.0000, max=0.0000, mean=0.0000, var=-0.0000, std dev=nan} cudaDeviceSetCacheConfig

| Remainder: 0.0910 - 12.5608%

Similar to CUDA support, just add the -DAPEX_WITH_HIP=TRUE flag when configuring APEX, and if necessary provide the path to the ROCm install with -DROCM_ROOT=/opt/rocm-4.5.2 (or equivalent).

If the OpenMP runtime provides OMPT support, you can enable support with -DAPEX_WITH_OMPT=TRUE. The CMake configuration should detect whether the runtime and compiler provide the necessary support. If not, and the compiler is GCC or LLVM-based (Intel, Clang - but not Apple clang), you can potentially have APEX build a runtime drop-in replacement LLVM OpenMP runtime with the support with the -DAPEX_BUILD_OMPT=TRUE flag. Please note that OpenMP target offload will not be supported in this mode.

As of September 2024 the CUDA execution space is the only one supported by internal Kokkos Auto tuning for occupancy. All other execution spaces should support internal tuning for MDRange (tiling factors) and Team (team size, vector length) policies. All back ends are supported by explicit/manual auto tuning. Range policy tuning for all execution spaces is in development. To enable auto tuning support in Kokkos, the -DKokkos_ENABLE_TUNING=ON flag should be used to build Kokkos. To enable tuning with APEX at runtime, run with:

apex_exec --apex:kokkos --apex:kokkos_tuning <kokkos-application> --kokkos-tune-internals

To see the evolution of the counters, add the --apex:gtrace flag and visualize the trace_events.0.json.gz file with the Perfetto trace visualizer. Some of the environment variables that control APEX tuning behavior are:

| Variable | Values | Description |

|---|---|---|

| APEX_KOKKOS_VERBOSE | 0,1 | enable verbose output |

| APEX_KOKKOS_COUNTERS | 0,1 | enable collection of counters during tuning |

| APEX_KOKKOS_TUNING_WINDOW | non-zero positive integer | how many times to evaluate each configuration (minimum time is chosen as representative) |

| APEX_KOKKOS_TUNING_POLICY | simulated_annealing, genetic_search, exhaustive, random | search strategy option, simulated_annealing is default |

For a long, in-depth overview of APEX runtime auto tuning of Kokkos kernels, please see Kokkos Runtime Auto Tuning with APEX.