[WIP][SPARK-25305][SQL] Respect attribute name in CollapseProject and ColumnPruning #22311

Thanks @wangyum for finding the issue. Before this PR, he proposed #22124 and #22287.

    AttributeMap(lower.zipWithIndex.collect {
      case (a: Alias, index: Int) =>
        a.toAttribute ->
          a.copy(name = upper(index).name)(a.exprId, a.qualifier, a.explicitMetadata)

Do we have a better way to do this than `Alias.copy(...)`?

Test build #95580 has finished for PR 22311 at commit

This behaviour depends on

    @@ -515,8 +515,7 @@ object PushProjectionThroughUnion extends Rule[LogicalPlan] with PredicateHelper
        */
       object ColumnPruning extends Rule[LogicalPlan] {
         private def sameOutput(output1: Seq[Attribute], output2: Seq[Attribute]): Boolean =
    -      output1.size == output2.size &&
    -        output1.zip(output2).forall(pair => pair._1.semanticEquals(pair._2))
    +      output1.size == output2.size && output1.zip(output2).forall(pair => pair._1 == pair._2)

I think we still need to check whether the exprIds they refer to are the same.

No. This is writing a column, not resolving one, so Spark should be case-preserving.

OK, thanks.

On second thought, I will create a PR to fix

Test build #95602 has finished for PR 22311 at commit

retest this please.

Test build #95605 has finished for PR 22311 at commit

## What changes were proposed in this pull request?

Consider the following example:

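```
// Run in spark-shell, where `spark` is the active SparkSession.
val location = "/tmp/t"
val df = spark.range(10).toDF("id")
df.write.format("parquet").saveAsTable("tbl")
spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")
// LOCATION takes a string literal, so the path is quoted.
spark.sql(s"CREATE TABLE tbl2(ID long) USING parquet location '$location'")
spark.sql("INSERT OVERWRITE TABLE tbl2 SELECT ID FROM view1")
// Inspect the column names actually written to the Parquet files.
println(spark.read.parquet(location).schema)
spark.table("tbl2").show()
```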

The output column name in schema will be `id` instead of `ID`, thus the last query shows nothing from `tbl2`.

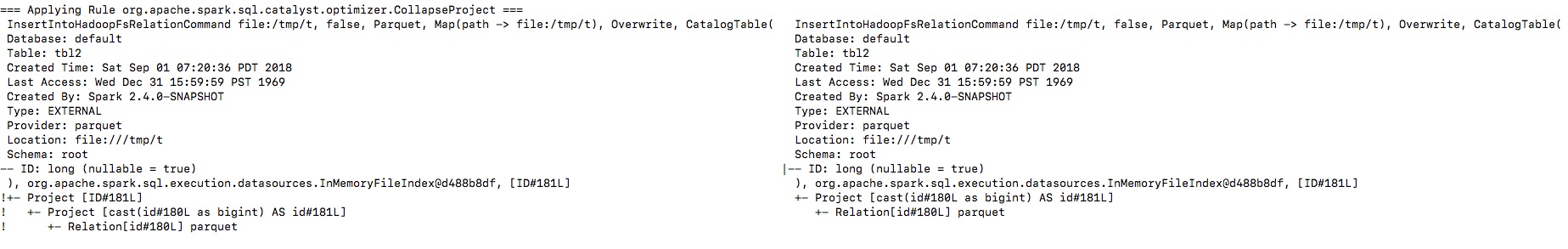

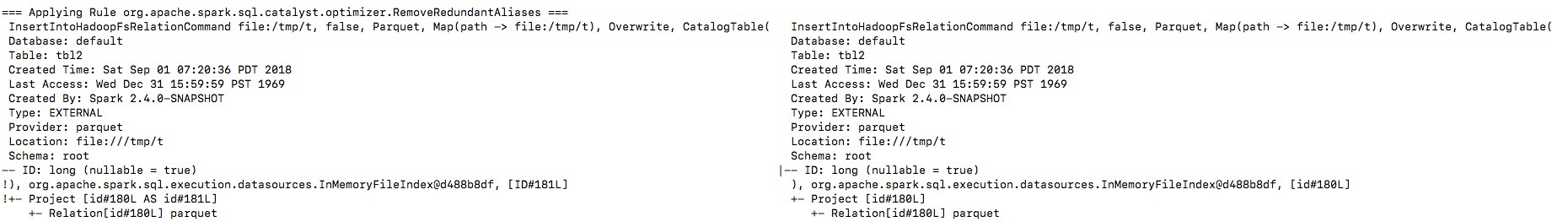

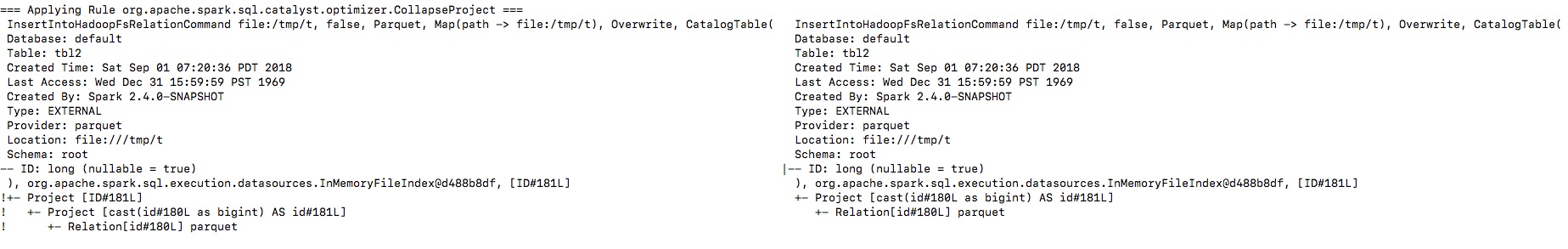

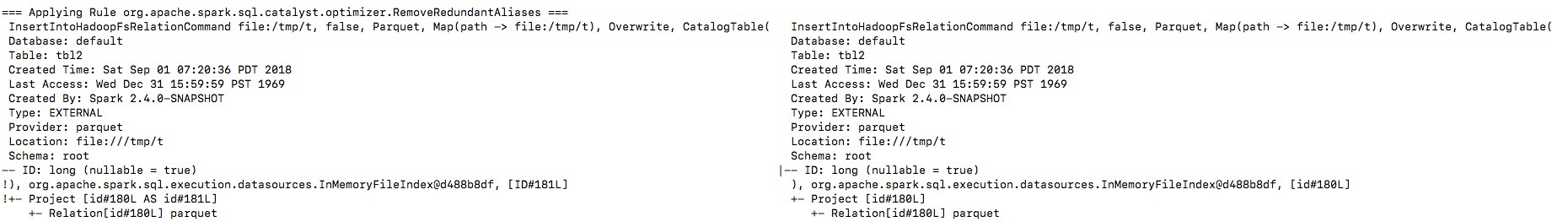

By enabling the debug message we can see that the output naming is changed from `ID` to `id`, and then the `outputColumns` in `InsertIntoHadoopFsRelationCommand` is changed in `RemoveRedundantAliases`.

**To guarantee correctness**, we should change the output columns from `Seq[Attribute]` to `Seq[String]` so that the optimizer cannot replace their names.

I will fix project elimination related rules in apache#22311 after this one.
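The reasoning behind switching from `Seq[Attribute]` to `Seq[String]` can be illustrated with a small toy model. This is only a sketch and does not use Spark: `Col`, `OutputNameSketch` and `replaceByExprId` are hypothetical names invented for illustration, and the "optimizer pass" is a simplified stand-in for what a rule such as `RemoveRedundantAliases` might do when it swaps attributes that share an expression ID.

```scala
// Toy stand-in for a Catalyst attribute; `Col` is hypothetical, not a Spark class.
final case class Col(name: String, exprId: Long)

object OutputNameSketch {
  // Toy optimizer pass: swaps each column for the child's column with the
  // same exprId, so the child's spelling of the name wins.
  def replaceByExprId(cols: Seq[Col], child: Seq[Col]): Seq[Col] = {
    val byId = child.map(c => c.exprId -> c).toMap
    cols.map(c => byId.getOrElse(c.exprId, c))
  }

  val requested: Seq[Col] = Seq(Col("ID", 1L))   // name the user asked for
  val childOutput: Seq[Col] = Seq(Col("id", 1L)) // name the view produces

  // Holding attributes: the pass can rewrite the name.
  val namesFromAttributes: Seq[String] =
    replaceByExprId(requested, childOutput).map(_.name)

  // Holding plain strings: captured up front, nothing for the pass to rewrite.
  val namesFromStrings: Seq[String] = requested.map(_.name)
}
```

In this model the attribute-based names follow whatever the rewrite produces, while the string-based names keep the spelling that was requested when the command was built.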

## How was this patch tested?

Unit test.

Closes apache#22320 from gengliangwang/fixOutputSchema.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…put names

Port #22320 to branch-2.3

Closes #22346 from gengliangwang/portSchemaOutputName2.3.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

retest this please.

Test build #95778 has finished for PR 22311 at commit

Can this PR include a revert of the #22320 change? Or should we keep that change as a safeguard for a while? (I think the second approach is better, so this is just a question.)

OK, thanks for the check.

What changes were proposed in this pull request?

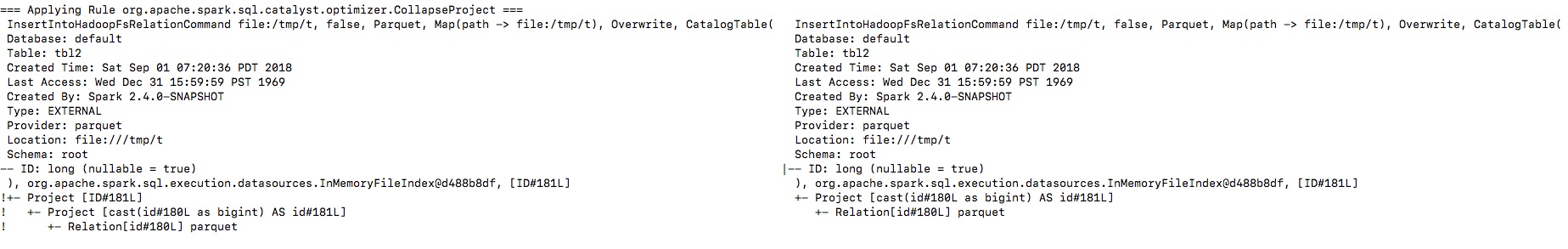

Currently in optimizer rule

CollapseProject, the lower level project is collapsed into upper level, but the naming of alias in lower level is propagated in upper level.In

ColumnPruning,Projectis eliminated if its child's output attributes issemanticEqualsto it, even the naming doesn't match.Let's see the follow example:

The output column name in schema will be

idinstead ofID, thus the last query shows nothing fromtbl2.By enabling the debug message we can see that the output naming is changed from

IDtoid, and then theoutputColumnsinInsertIntoHadoopFsRelationCommandis changed inRemoveRedundantAliases.With the fix proposed in this PR, the output naming

IDwon't be changed.How was this patch tested?

Unit test
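To make the `ColumnPruning` point concrete, here is a minimal sketch of the two `sameOutput` variants from the diff, written against a toy attribute type rather than Catalyst. `Attr` and `SameOutputSketch` are hypothetical names; the only assumption carried over from Spark is that `semanticEquals` ignores the attribute name while plain equality does not.

```scala
// Toy stand-in for Catalyst's Attribute; hypothetical, not Spark's class.
final case class Attr(name: String, exprId: Long) {
  // Models the idea of semanticEquals: same exprId, name ignored.
  def semanticEquals(other: Attr): Boolean = exprId == other.exprId
}

object SameOutputSketch {
  // Check that ignores names: a Project that only renames looks redundant.
  def sameOutputSemantic(a: Seq[Attr], b: Seq[Attr]): Boolean =
    a.size == b.size && a.zip(b).forall { case (x, y) => x.semanticEquals(y) }

  // Name-aware check: case class equality compares name and exprId.
  def sameOutputStrict(a: Seq[Attr], b: Seq[Attr]): Boolean =
    a.size == b.size && a.zip(b).forall { case (x, y) => x == y }

  val projectOutput: Seq[Attr] = Seq(Attr("ID", 1L)) // output of the Project
  val childOutput: Seq[Attr] = Seq(Attr("id", 1L))   // output of its child
}
```

With these inputs the name-ignoring check reports the outputs as the same, so the `Project` would be dropped and `ID` would become `id`. The name-aware check reports them as different, so the `Project` and its naming would be kept.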