Paper: https://arxiv.org/abs/2105.02857

Videos:

Citation: If you use this code in your research, please cite the paper:

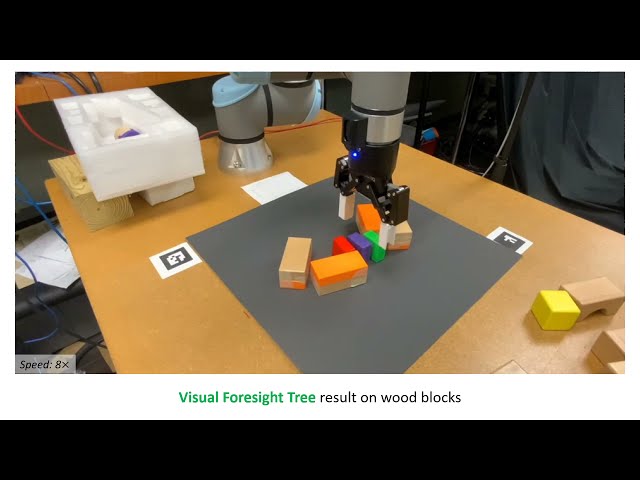

@ARTICLE{huang2021visual,

author={Huang, Baichuan and Han, Shuai D. and Yu, Jingjin and Boularias, Abdeslam},

journal={IEEE Robotics and Automation Letters},

title={Visual Foresight Trees for Object Retrieval From Clutter With Nonprehensile Rearrangement},

year={2022},

volume={7},

number={1},

pages={231-238},

doi={10.1109/LRA.2021.3123373}

}

We recommend Miniconda.

git clone https://github.com/arc-l/vft.git

cd vft

conda env create -n vft --file env-vft.yml

conda activate vft

pip install graphviz

or

git clone https://github.com/arc-l/vft.git

cd vft

conda env create -n vft --file env-vft-cross.yml

conda activate vft

- Download models (download folders and unzip) from Google Drive and put them in the vft folder.

- bash mcts_main_run.sh
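Before launching the run, a quick check that the conda environment resolved correctly can save a failed start. The sketch below only tests whether a few modules are importable. The module list is an assumption (graphviz from the pip step, pybullet for the simulator, torch inferred from the .pth model files), and `missing_modules` is a hypothetical helper, not part of this repository.

```python
import importlib.util

# Assumed module names; adjust to match what the env-vft*.yml file actually installs.
ASSUMED_MODULES = ["graphviz", "pybullet", "torch"]


def missing_modules(names):
    """Return the module names that cannot be found in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(ASSUMED_MODULES)
    if missing:
        print("Not importable:", ", ".join(missing))
    else:
        print("All assumed modules are importable.")
```

Run it inside the activated vft environment. An empty result only means the modules can be located, not that their versions match the environment file.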

This project shares much of its code with https://github.com/arc-l/dipn, except that the simulation environment was changed from CoppeliaSim (V-REP) to PyBullet.

Download the push dataset (push-05) from Google Drive, unzip it, and put it in the vft/logs_push folder.

Training code:

python train_push_prediction.py --dataset_root 'logs_push/push-05'

Download foreground_model-30.pth from logs_image and put it in vft/logs_image.

python collect_train_grasp_data.py --grasp_only --experience_replay --is_grasp_explore --load_snapshot --snapshot_file 'logs_image/foreground_model-30.pth' --save_visualization

You can skip this step by using the pre-trained model from logs_grasp. We stopped the training after 20000 actions.
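Both training commands expect downloaded files at fixed relative paths, so a small pre-flight check can catch a misplaced download before a long run starts. This is a minimal sketch, assuming it is executed from the vft repository root. The two paths are taken from the commands above, and `find_missing` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path

# Paths used by the training commands, relative to the vft repository root.
REQUIRED_PATHS = [
    "logs_push/push-05",
    "logs_image/foreground_model-30.pth",
]


def find_missing(root, relative_paths):
    """Return the relative paths that do not exist under root."""
    root = Path(root)
    return [p for p in relative_paths if not (root / p).exists()]


if __name__ == "__main__":
    missing = find_missing(".", REQUIRED_PATHS)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All expected files and folders are in place.")
```

The check only confirms that the paths exist. It does not verify that the dataset was fully unzipped or that the model file is intact.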

Part of the simulation environment was adapted from https://github.com/google-research/ravens.