Releases: av/harbor

v0.2.6 - Search, Repopack

v0.2.6

Harbor App now allows searching for services as well as configuration items.

We now also have a friend.

simplescreenrecorder-2024-10-05_00.30.29.mp4

Repopack integration

Repopack is a tool that packs your entire repository into a single, AI-friendly file.

Perfect for when you need to feed your codebase to Large Language Models (LLMs) or other AI tools like Claude, ChatGPT, and Gemini (feeding Harbor to Gemini 1.5 Pro costs ~$1).

cd ./my/repo

harbor repopack -o my.repo --style xml

See fabric and other CLI satellites for some interesting use-cases.

Full Changelog: v0.2.5...v0.2.6

v0.2.5

v0.2.5 - Theme freedom

Harbor is all about customization. I decided that the app theme should be fully representative of this, plus my designer colleagues told me it's a very bad idea, so enjoy - full customization of the Harbor App theme.

Misc

- Integrated Nexa. Unfortunately, there's a big bug in the Nexa Server that makes streaming unusable. Otherwise, this integration is something I'm quite proud of, as Harbor delivers two key improvements over the official Nexa releases:

- Compatibility with Open WebUI (with streaming disabled, for now)

- CUDA support in Docker

- That said, only NLP models were tested, so Audio/Visual verification is warmly welcomed!

- Nexa Service docs

- Small improvements to the App UI: Loaders, gradient service cards based on the tags (very faint)

Full Changelog: v0.2.4...v0.2.5

v0.2.4 - AnythingLLM

AnythingLLM integration

A full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

AnythingLLM divides your documents into objects called workspaces. A Workspace functions a lot like a thread, but with the addition of containerization of your documents. Workspaces can share documents, but they do not talk to each other so you can keep your context for each workspace clean.

Starting

# Start the service

harbor up anythingllm

# Open the UI

harbor open anythingllm

Out-of-the-box connectivity:

- Ollama - You'll still need to select specific models for LLM and embeddings

- llama.cpp - Embeddings are not pre-configured

- SearXNG for Web RAG - still needs to be enabled for a specific Agent

v0.2.3 - Supersummer

v0.2.3 - supersummer module for Boost

supersummer is a new module for Boost that provides enhanced summaries. It's based on the technique of generating a summary of the given content from key questions: the module asks the LLM for a given number of key questions and then uses them to guide the generation of the summary.

You can find a sample in the docs and the prompts in the source.
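The two-step flow described above can be sketched roughly as follows. This is a minimal illustration, not the module's actual code: the prompt wording, the function names, and the `llm` callable (any function that takes a prompt string and returns the model's reply) are all assumptions made for the example. The real prompts live in the Harbor source.

```python
from typing import Callable, List


def key_questions_prompt(text: str, num_questions: int) -> str:
    # Hypothetical prompt wording; the real prompts are in the Harbor source.
    return (
        f"Read the text below and list the {num_questions} most important "
        "questions it answers, one per line.\n\n"
        f"Text:\n{text}"
    )


def parse_questions(reply: str, limit: int) -> List[str]:
    # Strip bullet markers and whitespace, drop empty lines, cap the count.
    lines = [line.strip(" -*\t") for line in reply.splitlines()]
    return [line for line in lines if line][:limit]


def summary_prompt(text: str, questions: List[str]) -> str:
    # Hypothetical prompt wording: the key questions guide the summary.
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "Write a summary of the text below that answers these key questions:\n"
        f"{numbered}\n\nText:\n{text}"
    )


def summarize(text: str, llm: Callable[[str], str], num_questions: int = 5) -> str:
    # Step 1: ask the LLM for key questions about the content.
    questions = parse_questions(llm(key_questions_prompt(text, num_questions)), num_questions)
    # Step 2: ask for a summary guided by those questions.
    return llm(summary_prompt(text, questions))
```

Keeping `llm` as a plain callable means the same sketch can be pointed at any backend, or at a stub when experimenting with the prompts.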

Full Changelog: v0.2.2...v0.2.3

v0.2.2

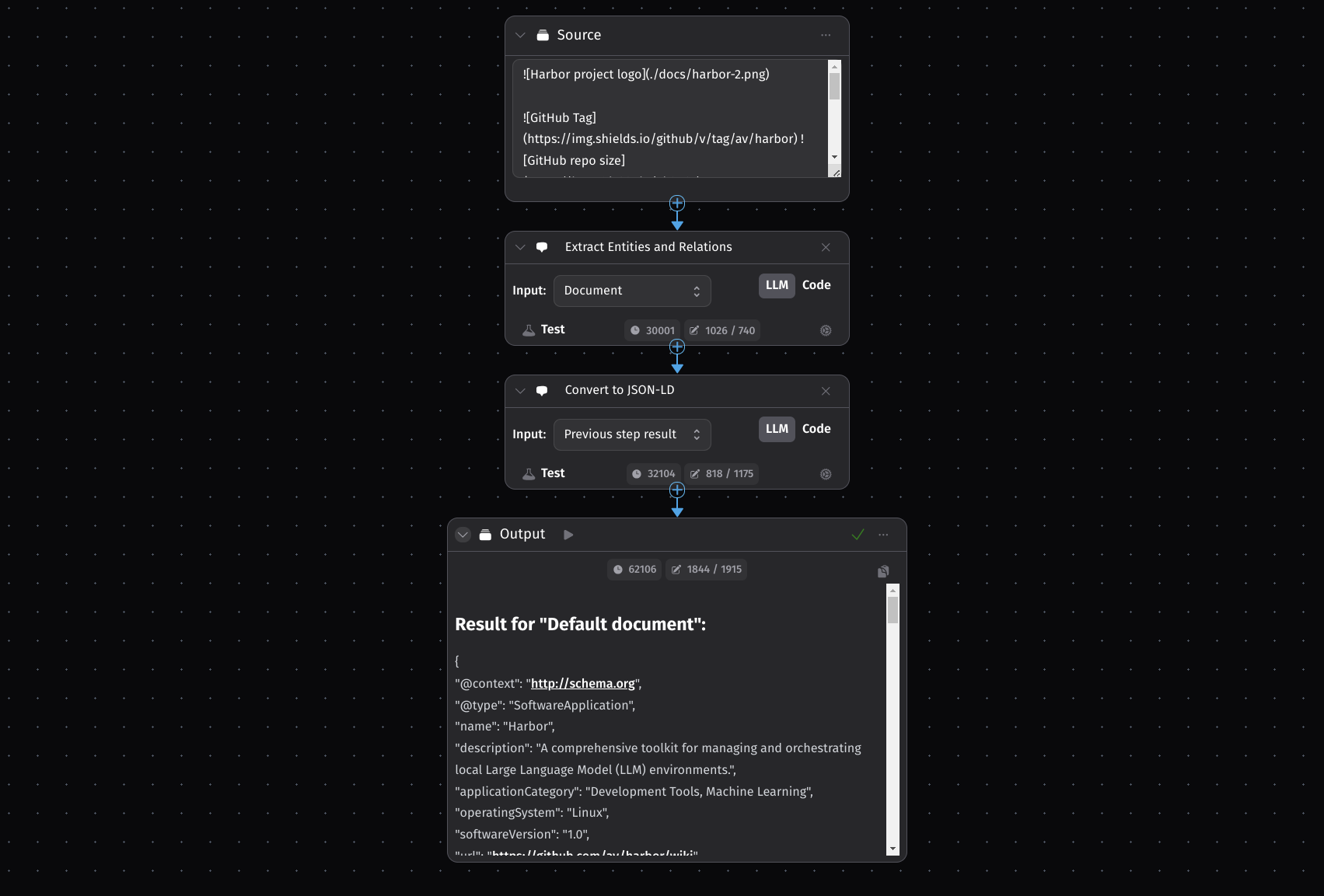

v0.2.2 LitLytics Integration

LitLytics is a simple platform for processing data with LLMs. It's compatible with local LLMs and can come in handy in a lot of scenarios.

Starting

# Start and open the service

harbor up litlytics

harbor open litlytics

You'll need to configure LitLytics in its own UI. Grab these values from the Harbor CLI:

# 1. Pick a model, copy ID

harbor ollama ls

# 2. Grab Ollama's URL, relative

# to the browser you'll be opening LitLytics in

harbor url ollama

Full Changelog: v0.2.1...v0.2.2

v0.2.1

- Rename llama3.1:8b.Modelfile to avoid error on Windows by @shaneholloman in #44

- comfyui - removing rshared flag from the docker volume as it's not required, adding override.env

- down - now correctly stops subservices, for example for librechat or bionicgpt

- ollama - when calling CLI, current $PWD is mounted to the container similarly to other CLI services, you can refer to local files, for example when running ollama create

New Contributors

- @shaneholloman made their first contribution in #44

Full Changelog: v0.2.0...v0.2.1

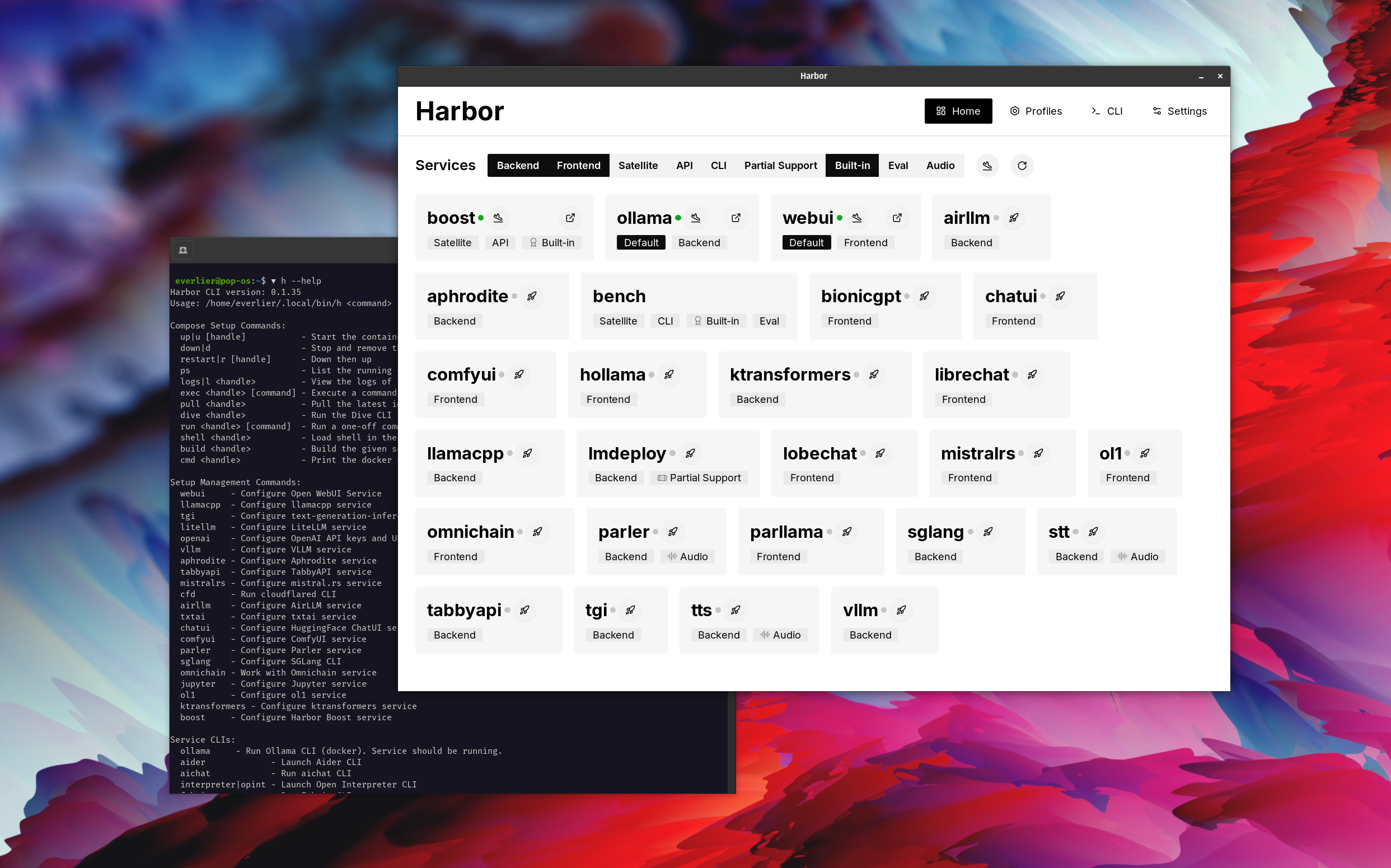

v0.2.0 - Harbor App

Harbor App is a companion application for Harbor - a toolkit for running AI locally. It's built on top of functionality provided by the harbor CLI and is intended to complement it.

The app provides a clean and tasteful UI to aid with workflows associated with running a local AI stack.

- 🚀 Manage Harbor stack and individual services

- 🔗 Quickly access UI/API of a running service

- ⚙️ Manage and switch between different configurations

- 🎨 Aesthetically pleasing UI with plenty of built-in themes

Demo

2024-09-29.17-22-06.mp4

In the demo, Harbor App is used to launch a default stack with Ollama and Open WebUI services. Later, SearXNG is also started, and WebUI can connect to it for Web RAG right out of the box. After that, Harbor Boost is also started and connected to the WebUI automatically to induce more creative outputs. As a final step, Harbor config is adjusted in the App for the klmbr module in Harbor Boost, which makes the output unparseable for the LLM (yet still understandable for humans).

Installation

Please refer to the Installation Guide

v0.1.35

- librechat - ordering of startup for sub-services

- explicit UIDs aligned with host

- not mounting meili logs - permission issue

- config merging setup - out-of-the-box connectivity should be restored

Full Changelog: v0.1.34...v0.1.35

v0.1.34

- doctor - Fixing docker compose detection to always detect Docker Compose v2

Full Changelog: v0.1.32...v0.1.34

v0.1.32

- eli5 module for boost, discussurl, unstable examples

- fixing sub-services to always use dash in their name (for an upcoming feature)

- MacOS - config set compatibility fix

- Also fixes initial command run after install

- MacOS - correct resolution of latest tag v2

- Ensure that tags are fetched before the checkout

- We'll be switching away from using shell for the update in the future

Full Changelog: v0.1.31...v0.1.32