Releases: av/harbor

v0.1.21

v0.1.21 - Harbor profiles

Profiles are a way to save and load a complete configuration for a specific task, for example, to quickly switch between models that each take several commands to configure. Profiles include all options that can be set via `harbor config` (which most of the CLI helpers alias).

Usage

harbor

profile|profiles|p [ls|rm|add] - Manage Harbor profiles

profile ls|list - List all profiles

profile rm|remove <name> - Remove a profile

profile add|save <name> - Add current config as a profile

profile set|use|load <name> - Use a profile

There are a few considerations when using profiles:

- When a profile is loaded, modifications are not saved by default and will be lost when switching to another profile (or reloading the current one). Use `harbor profile save <name>` to persist the changes after making them

- Profiles are stored in the Harbor workspace and can be shared between different Harbor instances

- Profiles are not versioned and are not guaranteed to work between different Harbor versions

- You can also edit profiles as `.env` files in the workspace, it's not necessary to use the CLI
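The save/load behaviour in the first consideration can be pictured as copying `.env` files around. The sketch below is not Harbor's implementation: the directory layout, the `profile_save`/`profile_use` helpers, and the `LLAMACPP_ARGS` key are assumptions made for illustration, and everything runs in a temporary directory.

```shell
#!/usr/bin/env bash
# Illustration only: models profiles as plain .env copies in a temp directory.
# The layout (profiles/<name>.env) and the key name are hypothetical.

workspace="$(mktemp -d)"
mkdir -p "$workspace/profiles"

# "Current" configuration, analogous to the active .env file
printf 'LLAMACPP_ARGS="-ngl 99 --ctx-size 8192"\n' > "$workspace/.env"

# Saving copies the active config into a named profile file
profile_save() { cp "$workspace/.env" "$workspace/profiles/$1.env"; }
# Loading overwrites the active config with the profile file
profile_use()  { cp "$workspace/profiles/$1.env" "$workspace/.env"; }

profile_save cpp8b

# Modify the active config without saving it back to the profile
printf 'LLAMACPP_ARGS="-ngl 99 --ctx-size 2048"\n' > "$workspace/.env"

# Reloading the profile discards the unsaved change
profile_use cpp8b
result="$(cat "$workspace/.env")"
echo "$result"
```

Under these assumptions, the unsaved `--ctx-size 2048` edit disappears on reload, which is why changes need an explicit save to persist.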

Example

# 1. Switch to the default for a "clean" state

harbor profile use default

# 2. Configure services as needed

harbor defaults remove ollama

harbor defaults add llamacpp

harbor llamacpp model https://huggingface.co/lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF/blob/main/Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf

harbor llamacpp args -ngl 99 --ctx-size 8192 -np 4 -ctk q8_0 -ctv q8_0 -fa

# 3. Save profile for future use

harbor profile add cpp8b

# 4. Up - runs in the background

harbor up

# 5. Adjust args - no parallelism, no kv quantization, no flash attention

# These changes are not saved in "cpp8b"

harbor llamacpp args -ngl 99 --ctx-size 2048

# 6. Save another profile

harbor profile add cpp8b-smart

# 7. Restart with "smart" settings

harbor profile use cpp8b-smart

harbor restart llamacpp

# 8. Switch between created profiles

harbor profile use default

harbor profile use cpp8b-smart

harbor profile use cpp8b

Full Changelog: v0.1.20...v0.1.21

v0.1.20

v0.1.20 - SGLang integration

SGLang is a fast serving framework for large language models and vision language models.

Starting

# [Optional] Pre-pull the image

harbor pull sglang

# Download with HF CLI

harbor hf download google/gemma-2-2b-it

# Set the model to run using HF specifier

harbor sglang model google/gemma-2-2b-it

# See original CLI help for available options

harbor run sglang --help

# Set the extra arguments via "harbor args"

harbor sglang args --context-length 2048 --disable-cuda-graph

Full Changelog: v0.1.19...v0.1.20

v0.1.19

v0.1.19 - lm-evaluation-harness integration

This project provides a unified framework to test generative language models on a large number of different evaluation tasks.

Starting

# [Optional] pre-build the image

harbor build lmeval

Refer to the configuration for Harbor services

# Run evals

harbor lmeval --tasks gsm8k,hellaswag

# Open results folder

harbor lmeval results

Full Changelog: v0.1.18...v0.1.19

v0.1.18

This is another maintenance release mainly focused on the bench functionality

- `vllm` is bumped to v0.6.0 by default, harbor now also uses a version with `bitsandbytes` pre-installed (run `harbor build vllm` to pre-build it)

- `bench` - judge prompt, eval log, exp. backoff for the LLM

- CheeseBench is out, smells good though
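Exponential backoff, mentioned for the LLM calls above, means waiting progressively longer between retries of a failing request. The sketch below shows the general idea in shell. It is not the code `bench` uses: the `retry_with_backoff` helper, its parameters, and the simulated flaky command are all assumptions for illustration.

```shell
#!/usr/bin/env bash
# Generic exponential backoff sketch; not taken from the bench service.

# retry_with_backoff <max_attempts> <initial_delay_seconds> <command...>
# Runs the command until it succeeds, doubling the delay after each failure.
retry_with_backoff() {
  local max_attempts="$1" delay="$2"
  shift 2
  local attempt=1
  while true; do
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    echo "attempt $attempt failed, retrying in ${delay}s" >&2
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}

# Simulated flaky command: fails twice, then succeeds
calls=0
flaky() {
  calls=$((calls + 1))
  [ "$calls" -ge 3 ]
}

retry_with_backoff 5 1 flaky && echo "succeeded after $calls calls"
```

Doubling the delay keeps early retries quick while giving an overloaded backend more room to recover on later attempts.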

Full Changelog: v0.1.17...v0.1.18

v0.1.17

This is a maintenance and bugfix release without new service integrations.

- `bench` service fixes

  - correctly handling interrupts

  - fixing broken API key support for the LLM and the Judge

- `bench` now renders a simple HTML report

- `bench` now records task completion time

- Breaking change - `harbor bench` is now `harbor bench run`

- `aphrodite` - switching to `0.6.0` release images (different docker repo, changed internal port)

- `aphrodite` - configurable version

- #12 fixed - using `nvidia-container-toolkit` presence for `nvidia` detection, instead of the docker runtimes check
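The detection change for #12 can be pictured as looking for a toolkit binary on `PATH` rather than inspecting the list of Docker runtimes. This is a sketch of the idea, not Harbor's code: the `has_nvidia` helper name and the exact binary name checked are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: detect NVIDIA support by the presence of the container toolkit
# binary on PATH. Helper name and binary name are assumptions.

has_nvidia() {
  command -v nvidia-container-toolkit >/dev/null 2>&1
}

if has_nvidia; then
  gpu_mode="nvidia"
else
  gpu_mode="none"
fi
echo "GPU mode: $gpu_mode"
```

A `PATH` lookup like this does not need a running Docker daemon, so it can still answer when Docker itself is misconfigured.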

Full Changelog: v0.1.16...v0.1.17

v0.1.16

v0.1.16 bench

Something new this time - not an integration, but rather a custom-built service for Harbor.

bench is a built-in benchmark service for measuring the quality of LLMs. It has a few specific design goals in mind:

- Work with OpenAI-compatible APIs (not running LLMs on its own)

- Benchmark tasks and success criteria are defined by you

- Focused on chat/instruction tasks

# [Optional] pre-build the image

harbor build bench

# Run the benchmark

# --name is required to give this run a meaningful name

harbor bench --name bench

# Open the results (folder)

harbor bench results

- Find a more detailed overview in the service docs

harbor doctor

A very lightweight troubleshooting utility

user@os:~/code/harbor$ ▼ h doctor

00:52:24 [INFO] Running Harbor Doctor...

00:52:24 [INFO] ✔ Docker is installed and running

00:52:24 [INFO] ✔ Docker Compose is installed

00:52:24 [INFO] ✔ .env file exists and is readable

00:52:24 [INFO] ✔ default.env file exists and is readable

00:52:24 [INFO] ✔ Harbor workspace directory exists

00:52:24 [INFO] ✔ CLI is linked

00:52:24 [INFO] Harbor Doctor checks completed successfully.

Full Changelog: v0.1.15...v0.1.16

v0.1.15

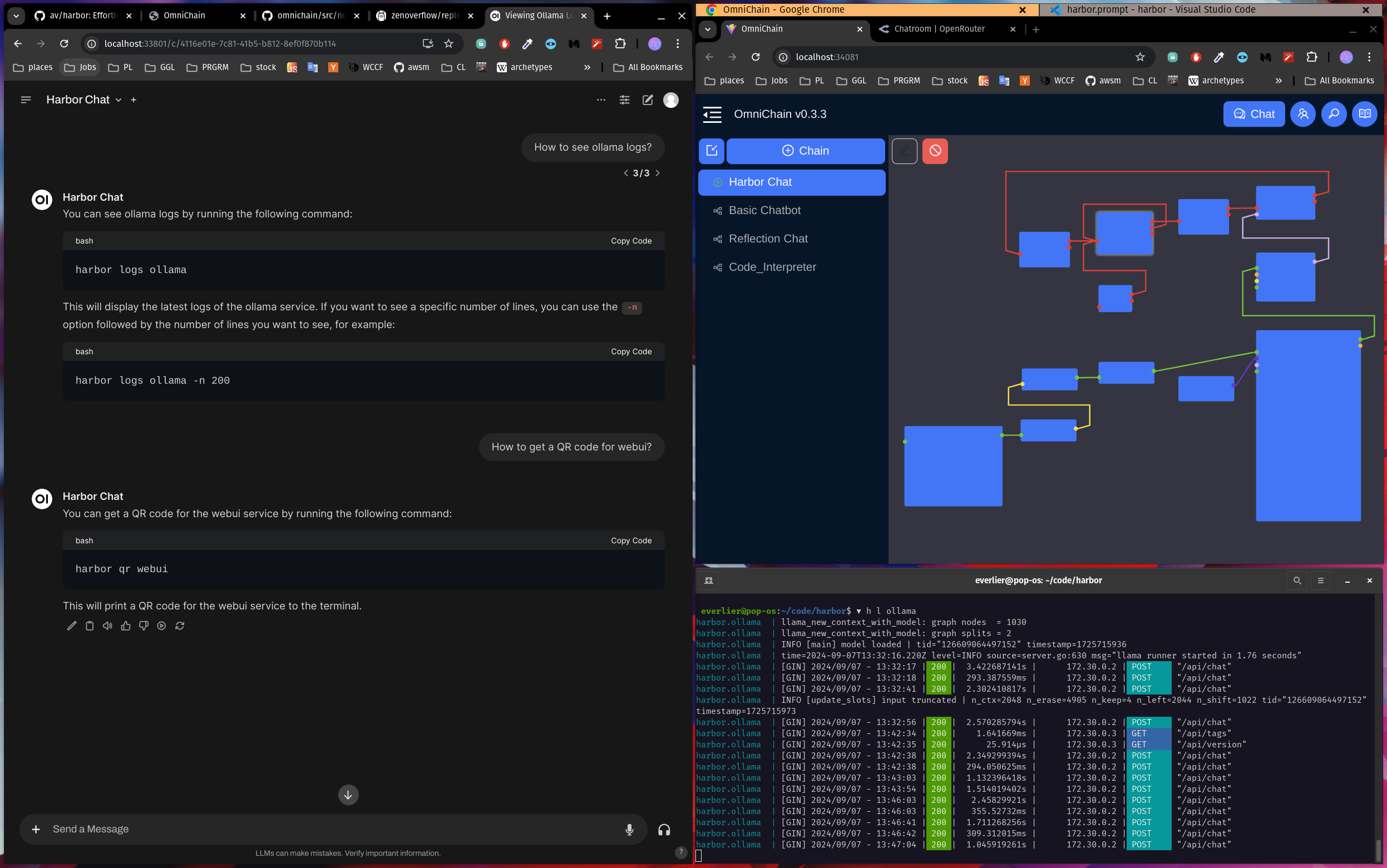

omnichain integration

Handle: `omnichain`

URL: http://localhost:34081

Efficient visual programming for AI language models.

Starting

# [Optional] pre-build the image

harbor build omnichain

# Start the service

harbor up omnichain

# [Optional] Open the UI

harbor open omnichain

Harbor runs a custom version of omnichain that is compatible with webui. See example workflow (Chat about Harbor CLI) in the service docs.

- Official Omnichain documentation

- Omnichain examples

- Harbor `omnichain` service docs

- Omnichain HTTP samples

Misc

- `webui` config cleanup

- Instructions for copilot in Harbor repo

- Fixing workspace for `bionicgpt` service: missing gitignore, `fixfs` routine

Full Changelog: v0.1.14...v0.1.15

v0.1.14

Lobe Chat integration

Lobe Chat - an open-source, modern-design AI chat framework.

Starting

# Will start lobechat alongside

# the default webui

harbor up lobechat

If you want to make LobeChat your default UI, please see the information below:

# Replace the default webui with lobechat

# afterwards, you can just run `harbor up`

harbor defaults rm webui

harbor defaults add lobechat

Note

LobeChat supports only a list of predefined models for Ollama, which can't be pre-configured and have to be selected from the UI at runtime.

Misc

- half-baked `autogpt` service, not documented as it's not integrated with any of the harbor services due to its implementation

- Updating `harbor how` prompt to reflect on recent releases

- Harbor User Guide - high-level user documentation

Full Changelog: v0.1.13...v0.1.14

v0.1.13

- It's now possible to set desired `mistralrs` version:

# Alias

harbor mistralrs version

# Via config

harbor config get mistralrs.version

# Update

harbor mistralrs version 0.4

harbor config set mistralrs.version

- MacOS compatibility fixes

  - Log level selection (MacOS uses bash v3 without `declare -A`)

  - `sed` signature is different, requiring `-i` to have an `''` empty string set, affecting `harbor config update`
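Both MacOS issues are common when a script written on Linux runs on the tools that ship with macOS. The sketch below shows one portable way to handle each. It is not Harbor's code: the function names, the `LOG_LEVEL` key, and the temporary file are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch of two portability workarounds; all names here are hypothetical.

# 1. Map a log level name to a number without `declare -A`
#    (associative arrays require bash 4+, macOS ships bash 3).
log_level_value() {
  case "$1" in
    DEBUG) echo 0 ;;
    INFO)  echo 1 ;;
    WARN)  echo 2 ;;
    ERROR) echo 3 ;;
    *)     echo 1 ;;  # fall back to INFO for unknown names
  esac
}

# 2. In-place edit with sed: BSD sed (macOS) requires a backup suffix
#    argument after -i, even an empty one, while GNU sed does not.
sed_in_place() {
  if sed --version >/dev/null 2>&1; then
    sed -i "$@"        # GNU sed understands --version
  else
    sed -i '' "$@"     # BSD sed rejects --version
  fi
}

env_file="$(mktemp)"
printf 'LOG_LEVEL="INFO"\n' > "$env_file"
sed_in_place 's/^LOG_LEVEL=.*/LOG_LEVEL="ERROR"/' "$env_file"

updated="$(cat "$env_file")"
echo "$updated"
echo "ERROR -> $(log_level_value ERROR)"
```

A `case` statement trades a little verbosity for compatibility with every bash version, and probing `sed --version` avoids hard-coding an OS check.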

Full Changelog: v0.1.12...v0.1.13

v0.1.12

v0.1.12 - aichat integration

AIChat is an all-in-one AI CLI tool featuring Chat-REPL, Shell Assistant, RAG, AI Tools & Agents, and More.

# [Optional] pre-build the image

harbor build aichat

# Use aichat CLI

harbor aichat -f ./migrations.md how to run migrations?

harbor aichat -e install nvm

harbor aichat -c fibonacci in js

harbor aichat -f https://github.com/av/harbor/wiki/Services list supported services

Misc

Log levels

Harbor now routes all non-parseable logs to stderr by default. You can also adjust the log level for the CLI output (most existing logs are INFO).

# Show current log level, INFO by default

harbor config get log.level

# Set log level

harbor config set log.level ERROR

# Log levels

# DEBUG | INFO | WARN | ERROR

Ollama modelfiles

Harbor workspace now has a place for custom modelfiles for Ollama.
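For reference, a Modelfile is a short text file that names a base model and optional overrides. The sketch below writes a minimal one to a temporary path. The base model name, parameter value, and system prompt are placeholders chosen for illustration, not values from Harbor.

```shell
#!/usr/bin/env bash
# Writes a minimal Ollama Modelfile to a temporary location.
# Model name, temperature, and prompt are placeholder values.

modelfile="$(mktemp)"
cat > "$modelfile" <<'EOF'
FROM llama3.1:8b
PARAMETER temperature 0.2
SYSTEM You are a concise assistant.
EOF

cat "$modelfile"
```

A file like this is what the commands that follow expect to find in the workspace folder.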

# See existing modelfiles

ls $(harbor home)/ollama/modelfiles

# Copy custom modelfiles to the workspace

cp /path/to/Modelfile $(harbor home)/ollama/modelfiles/my.Modelfile

# Create custom model from a modelfile

harbor ollama create -f /modelfiles/my.Modelfile my-model

Full Changelog: v0.1.11...v0.1.12