KubeDirector Architecture Overview

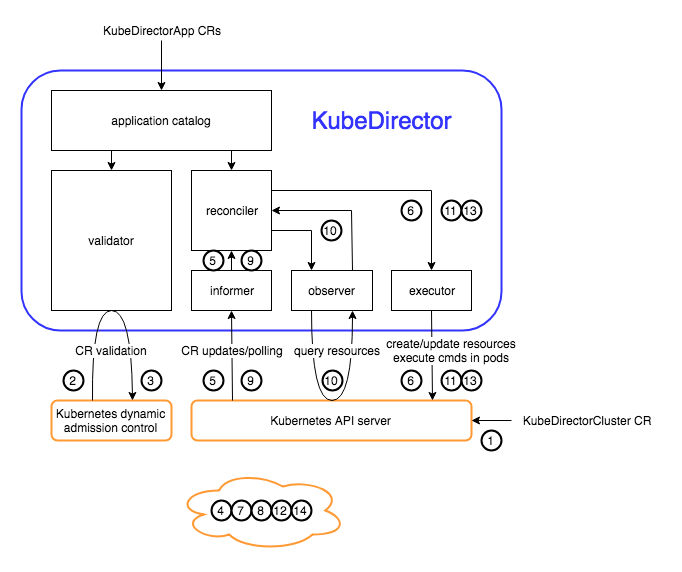

The figure below illustrates the internal KubeDirector modules and communication. (The circled numbers refer to cluster creation steps that will be described later.)

KubeDirector maintains an internal cache of the per-application metadata. This information comes from custom KubeDirectorApp resources provided to K8s, with one app type definition per resource. Currently an administrator provides each of these resources explicitly; in the future they may alternatively be provided through an external feed.
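As a rough illustration of such a cache, the sketch below keeps app type definitions in memory, keyed by app name. It is not KubeDirector's actual code: the `AppCatalog` and `RoleDef` names, their fields, and the sample app are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RoleDef:
    """Hypothetical per-role metadata; field names are illustrative only."""
    role_id: str
    image: str
    min_memory_mib: int = 0


@dataclass
class AppCatalog:
    """In-memory cache of app type definitions, keyed by app name."""
    _apps: dict = field(default_factory=dict)

    def upsert(self, app_name, roles):
        # Would be called when a KubeDirectorApp resource is created or modified.
        self._apps[app_name] = {r.role_id: r for r in roles}

    def remove(self, app_name):
        # Would be called when a KubeDirectorApp resource is deleted.
        self._apps.pop(app_name, None)

    def lookup(self, app_name):
        # Returns the role map for the app, or None if it is not cached.
        return self._apps.get(app_name)


# Sample data (made up): one app type with two roles.
catalog = AppCatalog()
catalog.upsert("spark", [
    RoleDef("controller", "example/spark:1", 4096),
    RoleDef("worker", "example/spark:1", 2048),
])
```

Keying the cache by app name lets both the validator and the reconciler resolve a cluster's app reference with a single lookup.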

The validator module is a webserver registered with K8s to provide admission control for the custom resources (CRs) of KubeDirector app type definitions (KubeDirectorApp) and of KubeDirector-managed virtual clusters that instantiate those app types (KubeDirectorCluster). It enforces requirements that either are application-specific or are too complex for the validation schema in the custom resource definition. It is also responsible for protecting certain CR properties from being changed by clients other than KubeDirector.
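The two kinds of checks described above might look roughly like the following. This is a minimal sketch, assuming a simplified dictionary layout for the cluster spec and app metadata; the field names (`roles`, `members`, `memory_mib`, `min_memory_mib`) and the choice of `status` as a protected property are hypothetical, not the real CR schema.

```python
# Assumed set of properties that only KubeDirector itself may change.
PROTECTED_KEYS = ("status",)


def validate_cluster(cluster_spec, app_roles):
    """Return a list of error strings; an empty list means 'admit'."""
    errors = []
    for role in cluster_spec["roles"]:
        role_def = app_roles.get(role["id"])
        if role_def is None:
            errors.append(f"unknown role {role['id']!r}")
            continue
        if role["members"] < 0:
            errors.append(f"role {role['id']!r}: negative member count")
        if role["memory_mib"] < role_def["min_memory_mib"]:
            errors.append(f"role {role['id']!r}: memory below minimum")
    return errors


def validate_update(old_obj, new_obj, from_kubedirector):
    """Reject changes to protected properties made by other clients."""
    if from_kubedirector:
        return []
    return [f"{key} is read-only" for key in PROTECTED_KEYS
            if old_obj.get(key) != new_obj.get(key)]


# Sample data (made up) for one app type with a single role.
app_roles = {"worker": {"min_memory_mib": 2048}}
good = {"roles": [{"id": "worker", "members": 3, "memory_mib": 4096}]}
bad = {"roles": [{"id": "worker", "members": 3, "memory_mib": 512},
                 {"id": "gpu", "members": 1, "memory_mib": 4096}]}
```

Returning a list of errors rather than a single boolean lets the admission response tell the user about every problem in one round trip.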

The informer module watches the K8s API for creation, modification, or deletion of the above custom resource types and notifies the reconciler module whenever such an event occurs. It will also periodically invoke the reconciler even in the absence of such an event, to handle cases where the reconciler needs to take action after a long-running process completes, or to fix unexpected changes to the cluster. KubeDirector's informer functionality is currently implemented through the Operator SDK.
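The combination of event-driven notification and periodic re-invocation can be sketched with a queue and a timeout. This toy `Informer` class is an assumption for illustration only; it does not use or mirror the Operator SDK, and the event names are made up.

```python
import queue


class Informer:
    """Toy informer: delivers events, and resyncs when none arrive in time."""

    def __init__(self, reconcile, resync_seconds):
        self.reconcile = reconcile          # callback: (event_type, name, obj)
        self.resync_seconds = resync_seconds
        self.events = queue.Queue()
        self.known = {}                     # name -> latest object seen

    def notify(self, event_type, name, obj):
        # Stand-in for a watch event arriving from the K8s API.
        self.events.put((event_type, name, obj))

    def run_once(self):
        """Handle one event, or fall back to a periodic resync on timeout."""
        try:
            event_type, name, obj = self.events.get(timeout=self.resync_seconds)
        except queue.Empty:
            # No event arrived: hand every known object to the reconciler again.
            for known_name, known_obj in list(self.known.items()):
                self.reconcile("resync", known_name, known_obj)
            return
        if event_type == "deleted":
            self.known.pop(name, None)
        else:
            self.known[name] = obj
        self.reconcile(event_type, name, obj)


# Usage: one real event followed by one timeout-driven resync.
calls = []
informer = Informer(lambda *args: calls.append(args), resync_seconds=0.01)
informer.notify("created", "cluster-1", {"spec": 1})
informer.run_once()   # delivers the "created" event
informer.run_once()   # queue is empty, so this triggers a resync
```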

The reconciler's job is to combine the KubeDirectorCluster spec and the appropriate KubeDirectorApp application definition to determine the desired state of the cluster. This state covers a multitude of standard K8s resources such as stateful sets, services, pods, and persistent volumes. The reconciler uses the observer module to build a picture of the current state of those K8s resources, and if necessary uses the executor module to make changes toward the desired state.
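The core of this desired-versus-current comparison can be sketched as a simple diff. The function below is a minimal sketch, assuming resources are represented as dictionaries keyed by a `(kind, name)` tuple; that representation and the `plan_changes` name are hypothetical.

```python
def plan_changes(desired, observed):
    """Compare desired and observed resources, both keyed by (kind, name).

    Returns a list of (action, key) tuples for the executor to carry out.
    """
    actions = []
    for key, spec in desired.items():
        if key not in observed:
            actions.append(("create", key))
        elif observed[key] != spec:
            actions.append(("update", key))
    for key in observed:
        if key not in desired:
            actions.append(("delete", key))
    return actions


# Sample data (made up): one resource to update, one to create, one to delete.
desired = {
    ("StatefulSet", "worker"): {"replicas": 3},
    ("Service", "headless"): {},
}
observed = {
    ("StatefulSet", "worker"): {"replicas": 2},
    ("Service", "old"): {},
}
actions = plan_changes(desired, observed)
```

Because the plan is recomputed from scratch on every pass, a pass that is interrupted or repeated converges on the same result, which fits the periodic re-invocation described earlier.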

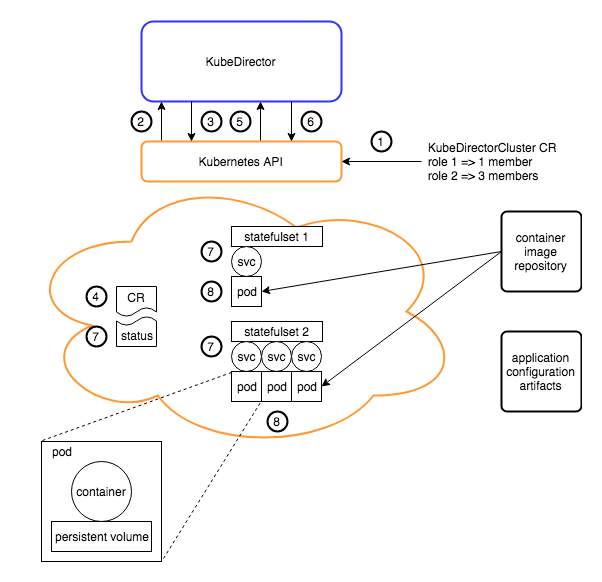

Typically a given CR will be implemented by the following standard K8s resources:

- One "headless" service to facilitate intra-cluster communication.

- One stateful set per role, with a number of replicas equal to the desired number of role members.

- One pod per member within a role, each pod with one container and one persistent volume claim.

- One service per pod. This service provides external access to the network ports exposed by the pod's container, as determined by the application metadata. This service may be of type NodePort or LoadBalancer depending on the desired method of external access.

- One persistent volume claim per pod, if persistent storage was requested.
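The list above can be turned into a small function that enumerates the resources expected for a given cluster. This is only a sketch under stated assumptions: the naming scheme (`<cluster>-<role>-<index>` and the `svc-`/`pvc-` prefixes) and the input layout are invented for this example and are not KubeDirector's real conventions.

```python
def expected_resources(cluster_name, roles, service_type="NodePort"):
    """List the K8s resources expected for a cluster.

    roles: list of dicts with 'id', 'members' (int), and 'storage' (bool).
    """
    # One headless service for intra-cluster communication.
    resources = [{"kind": "Service", "name": f"{cluster_name}-headless",
                  "headless": True}]
    for role in roles:
        sts_name = f"{cluster_name}-{role['id']}"
        # One stateful set per role, sized to the desired member count.
        resources.append({"kind": "StatefulSet", "name": sts_name,
                          "replicas": role["members"]})
        for index in range(role["members"]):
            pod_name = f"{sts_name}-{index}"
            resources.append({"kind": "Pod", "name": pod_name})
            # One per-pod service for external access.
            resources.append({"kind": "Service", "name": f"svc-{pod_name}",
                              "type": service_type})
            # A claim only if persistent storage was requested.
            if role["storage"]:
                resources.append({"kind": "PersistentVolumeClaim",
                                  "name": f"pvc-{pod_name}"})
    return resources


# Sample cluster (made up): one controller with storage, two workers without.
roles = [
    {"id": "controller", "members": 1, "storage": True},
    {"id": "worker", "members": 2, "storage": False},
]
resources = expected_resources("demo", roles, service_type="LoadBalancer")
```

For this sample the function yields one headless service, two stateful sets, three pods, three per-pod services, and one persistent volume claim.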

The figures below show an example sequence of creating an application cluster through KubeDirector. A sequence for updating an existing cluster would be similar.

This description concentrates on actions outside the standard K8s services; for example, once a resource spec is presented to the K8s API, we are not concerned with exactly how that resource is then persisted into the K8s key/value store.

- An end user submits a KubeDirectorCluster resource to the K8s API.

- K8s admission control consults the validator module in KubeDirector.

- The validator consults the app catalog and looks up the appropriate KubeDirectorApp info. If the KubeDirectorCluster spec is valid for that app (e.g., appropriate choices, number of replicas, minimum memory for role, etc.), then the request is approved.

- The KubeDirectorCluster resource appears in the K8s KV store.

- The informer notices the resource and notifies the reconciler module in KubeDirector.

- The reconciler consults the app catalog for appropriate images, services, persistent storage volumes, etc. Through the executor, it asks the K8s API to create stateful sets, services, etc. and to update the KubeDirectorCluster resource's status properties.

- Requested new and updated resources appear in the K8s KV store.

- K8s begins creating pods to comply with stateful set replica counts.

- The informer periodically hands the reconciler the current KubeDirectorCluster resource (polling).

- The reconciler uses the observer to check on the current state of all related resources in the K8s store. Once all desired pods are running, the reconciler proceeds to the next steps.

- The reconciler uses the executor to inject configuration data into the pods, along with an invocation that fetches and runs the application setup package.

- Within each pod, the setup package is fetched from an artifact store and executed.

- Once the application setup completes, the reconciler updates the KubeDirectorCluster's status properties.

- New status properties appear on the KubeDirectorCluster resource in the K8s KV store.
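From the reconciler's point of view, the sequence above amounts to a small state machine that advances by at most one phase per pass. The sketch below illustrates that idea only; the state names (`new`, `creating`, `configuring`, `ready`) and the fields of the `cluster` record are assumptions, not KubeDirector's actual status values.

```python
def reconcile_step(cluster):
    """Advance a hypothetical cluster record by one reconciler pass."""
    state = cluster["state"]
    if state == "new":
        # Executor would request stateful sets, services, etc. here.
        cluster["state"] = "creating"
    elif state == "creating":
        # Observer check: wait until every desired pod is running.
        if cluster["running_pods"] >= cluster["desired_pods"]:
            # Executor would inject config and the setup invocation here.
            cluster["state"] = "configuring"
    elif state == "configuring":
        # Wait for the in-pod application setup to finish.
        if cluster["setup_done"]:
            cluster["state"] = "ready"
    return cluster["state"]


# Simulated run: each call stands for one informer-triggered pass.
cluster = {"state": "new", "desired_pods": 2, "running_pods": 0,
           "setup_done": False}
history = [reconcile_step(cluster)]       # resources requested
history.append(reconcile_step(cluster))   # pods not running yet
cluster["running_pods"] = 2
history.append(reconcile_step(cluster))   # pods running, setup started
history.append(reconcile_step(cluster))   # setup still in progress
cluster["setup_done"] = True
history.append(reconcile_step(cluster))   # setup finished
```

Passes that find nothing to do leave the state unchanged, which is why periodic polling by the informer is enough to drive the cluster to completion.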