The key prerequisite for accessing the huge potential of current machine learning techniques is the availability of large databases that capture the complex relations of interest. Previous datasets focus on either 3D scene representations with semantic information, tracking of multiple persons and recognition of their actions, or activity recognition of a single person in captured 3D environments. We present Bonn Activity Maps, a large-scale dataset for human tracking, activity recognition, and activity anticipation of multiple persons. Our dataset comprises four different scenes recorded by time-synchronized cameras, each capturing the scene only partially; the reconstructed 3D models with semantic annotations; motion trajectories for individual people, including 3D human poses; and human activity annotations. In addition, we provide activity maps for each scene.

To access our dataset, please contact bonn_activity_maps@iai.uni-bonn.de.

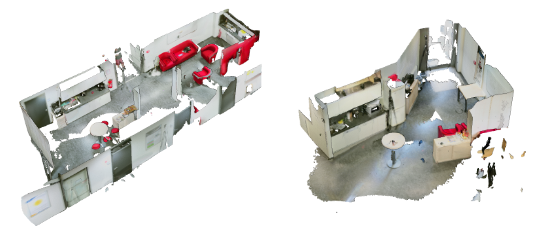

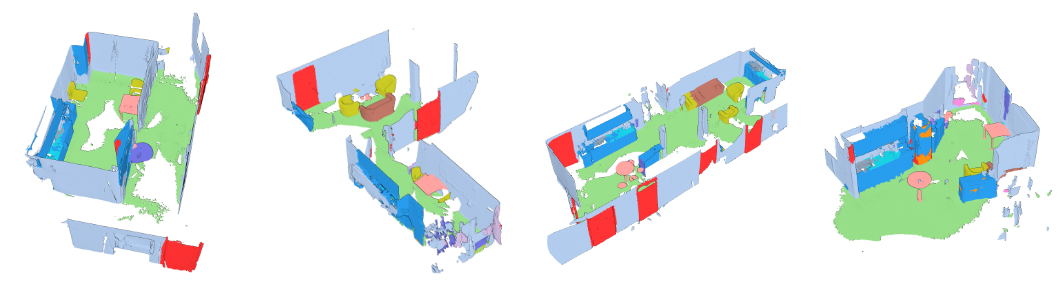

At the moment, we provide about two hours of recordings for four kitchens, with the following 3D models:

Each person visible during the recording is labeled in 3D. The trajectories of two persons over a complete recording are plotted below.
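As a rough illustration of how such 3D trajectories might be summarized, the sketch below bins one person's positions into a top-down occupancy grid. It is not the dataset's own tooling and does not reproduce the activity maps provided with the dataset. The function name `occupancy_grid`, the `(x, y, z)` array layout, the choice of x and y as the floor plane, and the cell size are all assumptions made for illustration; the actual file format is not described on this page.

```python
import numpy as np


def occupancy_grid(trajectory, cell_size=0.25):
    """Count how many trajectory samples fall into each floor-plane cell.

    Assumption: `trajectory` is an (N, 3) array of positions where x and y
    span the floor plane and z is height. This layout is hypothetical.
    """
    points = np.asarray(trajectory, dtype=float)
    xy = points[:, :2]
    origin = xy.min(axis=0)
    # Integer cell index of every sample relative to the grid origin.
    cells = np.floor((xy - origin) / cell_size).astype(int)
    shape = tuple(cells.max(axis=0) + 1)
    grid = np.zeros(shape, dtype=int)
    # np.add.at accumulates correctly when the same cell is hit repeatedly.
    np.add.at(grid, (cells[:, 0], cells[:, 1]), 1)
    return grid, origin


# Synthetic stand-in for one person's trajectory (not real dataset values).
demo = np.array([
    [0.0, 0.0, 1.0],
    [0.1, 0.1, 1.0],
    [0.6, 0.1, 1.0],
    [0.6, 0.9, 1.0],
])
grid, origin = occupancy_grid(demo, cell_size=0.5)
print(grid)
```

Cells with high counts mark places where the person spent more time. With real data, the grid could be rendered as a heatmap over the floor plan of the corresponding scene.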