- 01. Create Astra Account

- 02. Create Astra Token

- 03. Copy the token

- 04. Open Gitpod

- 05. Setup CLI

- 06. Create Database

- 07. Create Destination Table

- 08. Setup env variables

- 09. Setup Project

- 10. Run Importing Flow

- 11. Validate Data

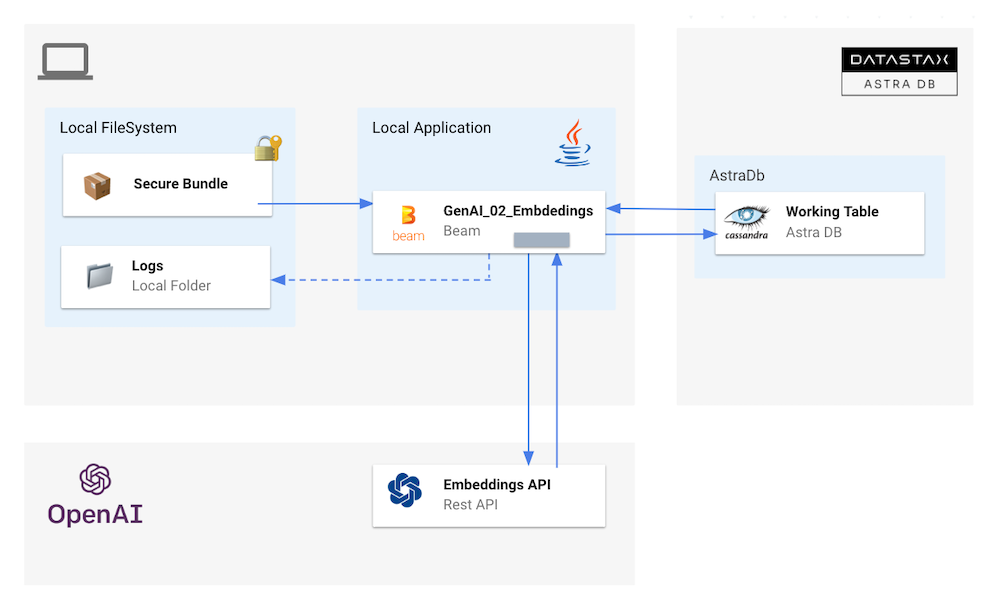

- 01. Compute Embeddings

- 02. Show results

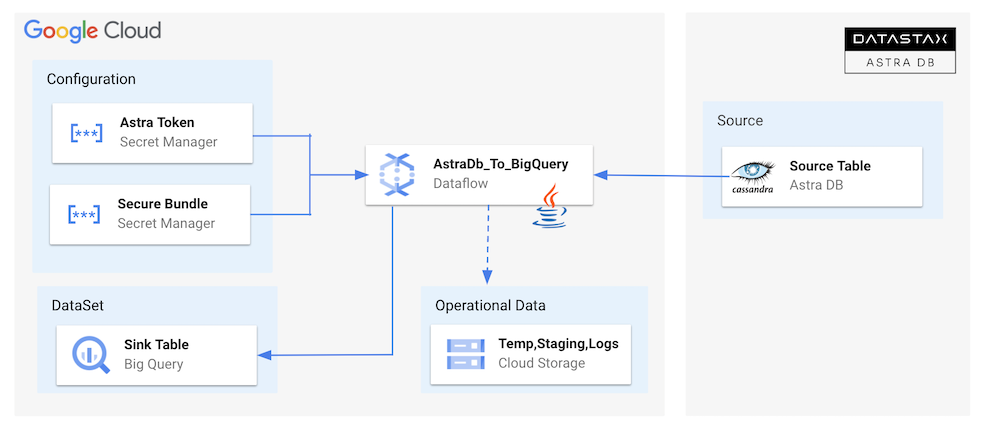

- 03. Create Google Project

- 04. Enable project Billing

- 05. Save Project Id

- 06. Install gcloud CLI

- 07. Authenticate Against Google Cloud

- 08. Select your project

- 09. Enable Needed Apis

- 10. Setup Dataflow user

- 11. Create Secret

- 12. Move in proper folder

- 13. Setup env var

- 14. Run the pipeline

- 15. Show Content of Table

- Introduce AstraDB and Vector Search capability

- Give you a first understanding of Apache Beam and Google Dataflow

- Discover NoSQL distributed databases, and especially Apache Cassandra™

- Get familiar with a few Google Cloud Platform services

1️⃣ Can I run this workshop on my computer?

There is nothing preventing you from running the workshop on your own machine. If you do so, you will need the following:

- git installed on your local system

- Java installed on your local system

- Maven installed on your local system

2️⃣ What other prerequisites are required?

- You will need enough *real estate* on screen: we will ask you to open a few windows, and they will not fit on a mobile phone (tablets should be OK)

- You will need a GitHub account and, optionally, a Google account for Google authentication

- You will need an Astra account: don't worry, we'll work through that in the following steps

- As this is an intermediate-level workshop, we expect you to know what Java and Maven are

3️⃣ Do I need to pay for anything for this workshop?

No. All tools and services we provide here are FREE. FREE not only during the session but also after.

4️⃣ Will I get a certificate if I attend this workshop?

Attending the session is not enough. You need to complete the homework detailed below, and you will get a nice badge that you can share on LinkedIn or anywhere else *(open api badge)*

It doesn't matter if you join our workshop live or you prefer to work at your own pace, we have you covered. In this repository, you'll find everything you need for this workshop:

ℹ️ Account creation tutorial is available in awesome astra

Click the image below or go to https://astra.datastax.com

ℹ️ Token creation tutorial is available in awesome astra

- Locate `Settings` (#1) in the menu on the left, then `Token Management` (#2)

- Select the role `Organization Administrator` before clicking `[Generate Token]`

The Token is in fact three separate strings: a Client ID, a Client Secret and the token proper. You will need some of these strings to access the database, depending on the type of access you plan. Although the Client ID, strictly speaking, is not a secret, you should regard this whole object as a secret and make sure not to share it inadvertently (e.g. committing it to a Git repository) as it grants access to your databases.

```
{
  "ClientId": "ROkiiDZdvPOvHRSgoZtyAapp",
  "ClientSecret": "fakedfaked",
  "Token": "AstraCS:fake"
}
```

You can also leave the window open to copy the value in a second.
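Because the three strings are easy to mix up when copying, a small guard can catch a wrong paste before the value is handed to any command. The sketch below is only an illustration: `is_astra_token` is a hypothetical helper name, and it assumes that the token proper always starts with the `AstraCS:` prefix seen in the sample above.

```shell
# Hypothetical helper: succeeds when the value looks like an Astra token.
# Assumption: the token proper starts with "AstraCS:" like the sample above.
is_astra_token() {
  case "$1" in
    AstraCS:?*) return 0 ;;  # prefix followed by at least one character
    *) return 1 ;;           # anything else, e.g. a Client ID or Client Secret
  esac
}
```

For instance, `is_astra_token "AstraCS:fake"` succeeds, while `is_astra_token "fakedfaked"` fails, which would flag a Client Secret pasted by mistake.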

↗️ Right Click and select open as a new Tab...

In Gitpod, in a terminal window:

- Login

```
astra login --token AstraCS:fake
```

- Validate your setup

```
astra org
```

Output

```
gitpod /workspace/workshop-beam (main) $ astra org
+----------------+-----------------------------------------+
| Attribute      | Value                                   |
+----------------+-----------------------------------------+
| Name           | cedrick.lunven@datastax.com             |
| id             | f9460f14-9879-4ebe-83f2-48d3f3dce13c    |
+----------------+-----------------------------------------+
```

ℹ️ Note that we enable the Vector Search capability (`--vector`)

- Create db `workshop_beam` and wait for the DB to become active

```
astra db create workshop_beam -k beam --vector --if-not-exists
```

💻 Output

- List databases

```
astra db list
```

💻 Output

- Describe your db

```
astra db describe workshop_beam
```

💻 Output

- Create Table:

```
astra db cqlsh workshop_beam -k beam \
  -e "CREATE TABLE IF NOT EXISTS fable(document_id TEXT PRIMARY KEY, title TEXT, document TEXT)"
```

- Show Table:

```
astra db cqlsh workshop_beam -k beam -e "SELECT * FROM fable"
```

- Create `.env` file with variables

```
astra db create-dotenv workshop_beam
```

- Display the file

```
cat .env
```

- Load env variables

```
set -a
source .env
set +a
env | grep ASTRA
```
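If a variable is missing, the Maven commands of this workshop would receive an empty argument, and the resulting error can be hard to read. The following is a minimal sketch of a pre-flight check, not part of the workshop tooling: `require_vars` is a hypothetical helper, and the variable names are the ones the import command of this workshop passes to Maven.

```shell
# Hypothetical helper: fails and names every variable that is unset or empty.
# Only call it with trusted variable names, since it relies on eval.
require_vars() {
  rv_missing=0
  for rv_name in "$@"; do
    # Indirect lookup of the variable whose name is stored in rv_name.
    eval "rv_value=\${$rv_name:-}"
    if [ -z "$rv_value" ]; then
      echo "Missing environment variable: $rv_name" >&2
      rv_missing=1
    fi
  done
  return "$rv_missing"
}

# Check the variables expected from the .env file.
require_vars ASTRA_DB_APPLICATION_TOKEN ASTRA_DB_SECURE_BUNDLE_PATH ASTRA_DB_KEYSPACE \
  || echo "Load .env again before running the pipeline" >&2
```

The function reports all missing names in one pass instead of stopping at the first one, so a half-loaded `.env` is visible at a glance.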

This command validates that Java, Maven and Lombok are working as expected

```
mvn clean compile
```

- Open the CSV. It is very short and simple for demo purposes (and to keep OpenAI costs low later :) ).

```
/workspace/workshop-beam/samples-beam/src/main/resources/fables_of_fontaine.csv
```

- Open the Java file with the code

```
gp open /workspace/workshop-beam/samples-beam/src/main/java/com/datastax/astra/beam/genai/GenAI_01_ImportData.java
```

- Run the Flow

```
cd samples-beam
mvn clean compile exec:java \
 -Dexec.mainClass=com.datastax.astra.beam.genai.GenAI_01_ImportData \
 -Dexec.args="\
 --astraToken=${ASTRA_DB_APPLICATION_TOKEN} \
 --astraSecureConnectBundle=${ASTRA_DB_SECURE_BUNDLE_PATH} \
 --astraKeyspace=${ASTRA_DB_KEYSPACE} \
 --csvInput=`pwd`/src/main/resources/fables_of_fontaine.csv"
```

```
astra db cqlsh workshop_beam -k beam -e "SELECT * FROM fable"
```

We will now compute the embeddings using the OpenAI API. It is not free: you need to provide a credit card to access the API. This part is a walkthrough. If you have an OpenAI key, follow along!

- Set up OpenAI

```
export OPENAI_API_KEY="<your_api_key>"
```

- Open the Java file with the code

```
gp open /workspace/workshop-beam/samples-beam/src/main/java/com/datastax/astra/beam/genai/GenAI_02_CreateEmbeddings.java
```

- Run the flow

```
mvn clean compile exec:java \
 -Dexec.mainClass=com.datastax.astra.beam.genai.GenAI_02_CreateEmbeddings \
 -Dexec.args="\
 --astraToken=${ASTRA_DB_APPLICATION_TOKEN} \
 --astraSecureConnectBundle=${ASTRA_DB_SECURE_BUNDLE_PATH} \
 --astraKeyspace=${ASTRA_DB_KEYSPACE} \
 --openAiKey=${OPENAI_API_KEY} \
 --table=fable"
```

```
astra db cqlsh workshop_beam -k beam -e "SELECT * FROM fable"
```

- Create GCP Project

Note: If you don't plan to keep the resources that you create in this guide, create a project instead of selecting an existing project. After you finish these steps, you can delete the project, removing all resources associated with the project. Create a new Project in Google Cloud Console or select an existing one.

In the Google Cloud console, on the project selector page, select or create a Google Cloud project

Make sure that billing is enabled for your Cloud project. Learn how to check if billing is enabled on a project

The project identifier is available in the column ID. We will need it, so let's save it as an environment variable:

```
export GCP_PROJECT_ID=integrations-379317
export GCP_PROJECT_CODE=747469159044
export GCP_USER=cedrick.lunven@datastax.com
export GCP_COMPUTE_ENGINE=747469159044-compute@developer.gserviceaccount.com
```

```
curl https://sdk.cloud.google.com | bash
```

Do not forget to open a new Tab.

Run the following command to authenticate with Google Cloud:

- Execute:

```
gcloud auth login
```

- Authenticate with your Google account

If you haven't set your project yet, use the following command to set your project ID:

```
gcloud config set project ${GCP_PROJECT_ID}
gcloud projects describe ${GCP_PROJECT_ID}
```

```
gcloud services enable dataflow compute_component \
  logging storage_component storage_api \
  bigquery pubsub datastore.googleapis.com \
  cloudresourcemanager.googleapis.com
```

To complete the steps, your user account must have the Dataflow Admin role and the Service Account User role. The Compute Engine default service account must have the Dataflow Worker role. To add the required roles with the gcloud CLI:

```
gcloud projects add-iam-policy-binding ${GCP_PROJECT_ID} \
  --member="user:${GCP_USER}" \
  --role=roles/iam.serviceAccountUser

gcloud projects add-iam-policy-binding ${GCP_PROJECT_ID} \
  --member="serviceAccount:${GCP_COMPUTE_ENGINE}" \
  --role=roles/dataflow.admin

gcloud projects add-iam-policy-binding ${GCP_PROJECT_ID} \
  --member="serviceAccount:${GCP_COMPUTE_ENGINE}" \
  --role=roles/dataflow.worker

gcloud projects add-iam-policy-binding ${GCP_PROJECT_ID} \
  --member="serviceAccount:${GCP_COMPUTE_ENGINE}" \
  --role=roles/storage.objectAdmin
```
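The Compute Engine default service account used in these bindings is named after the project number (`GCP_PROJECT_CODE` in this workshop), not the project ID. As a small sketch, the value of `GCP_COMPUTE_ENGINE` can be derived instead of typed by hand; `compute_default_sa` is a hypothetical helper name.

```shell
# Hypothetical helper: builds the Compute Engine default service account
# email from a project number.
compute_default_sa() {
  printf '%s-compute@developer.gserviceaccount.com\n' "$1"
}

# Example with the project number used in this workshop's exports.
GCP_PROJECT_CODE=747469159044
GCP_COMPUTE_ENGINE="$(compute_default_sa "$GCP_PROJECT_CODE")"
echo "$GCP_COMPUTE_ENGINE"
# prints 747469159044-compute@developer.gserviceaccount.com
```

If you do not know your project number, `gcloud projects describe ${GCP_PROJECT_ID} --format="value(projectNumber)"` should print it.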

To connect to AstraDB you need a token (credentials) and a zip used to secure the transport. Those two inputs should be defined as secrets.

```
gcloud secrets create astra-token \
  --data-file <(echo -n "${ASTRA_TOKEN}") \
  --replication-policy="automatic"

gcloud secrets create cedrick-demo-scb \
  --data-file ${ASTRA_SCB_PATH} \
  --replication-policy="automatic"

gcloud secrets add-iam-policy-binding cedrick-demo-scb \
  --member="serviceAccount:${GCP_COMPUTE_ENGINE}" \
  --role='roles/secretmanager.secretAccessor'

gcloud secrets add-iam-policy-binding astra-token \
  --member="serviceAccount:${GCP_COMPUTE_ENGINE}" \
  --role='roles/secretmanager.secretAccessor'

gcloud secrets list
```
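The pipeline reads each secret by its full version resource name, in the form `projects/<project number>/secrets/<secret name>/versions/<version>`. A minimal sketch of composing that name is shown here; `secret_version_path` is a hypothetical helper, and the secret name you pass must match one listed by `gcloud secrets list`.

```shell
# Hypothetical helper: composes a Secret Manager version resource name.
# Arguments: project number, secret name, version (defaults to "latest").
secret_version_path() {
  printf 'projects/%s/secrets/%s/versions/%s\n' "$1" "$2" "${3:-latest}"
}

secret_version_path 747469159044 astra-token 2
# prints projects/747469159044/secrets/astra-token/versions/2
```

Pinning an explicit version number keeps a run reproducible, while `latest` follows whichever version was added most recently.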

```
cd samples-dataflow
pwd
```

We assume the table `languages` exists and has been populated in 3.1

```
export ASTRA_SECRET_TOKEN=projects/747469159044/secrets/astra-token/versions/2
export ASTRA_SECRET_SECURE_BUNDLE=projects/747469159044/secrets/secure-connect-bundle-demo/versions/1
```

```
mvn compile exec:java \
 -Dexec.mainClass=com.datastax.astra.dataflow.AstraDb_To_BigQuery_Dynamic \
 -Dexec.args="\
 --astraToken=${ASTRA_SECRET_TOKEN} \
 --astraSecureConnectBundle=${ASTRA_SECRET_SECURE_BUNDLE} \
 --keyspace=${ASTRA_KEYSPACE} \
 --table=fable \
 --runner=DataflowRunner \
 --project=${GCP_PROJECT_ID} \
 --region=us-central1"
```

A dataset with the keyspace name and a table with the table name have been created in BigQuery.

```
bq head -n 10 ${ASTRA_KEYSPACE}.${ASTRA_TABLE}
```

The END