Parse Apache2 logs to create statistics

THIS VERSION IS UNMAINTAINED

PLEASE REFER TO THE OTHER VERSIONS OF CRAPLOG FOR MORE ADVANCED FEATURES

SEE The Craplog Project IF YOU WANT TO KNOW MORE

Craplog is a tool that takes Apache2 logs in their default form, scrapes them and creates simple statistics.

It's meant to be run daily.

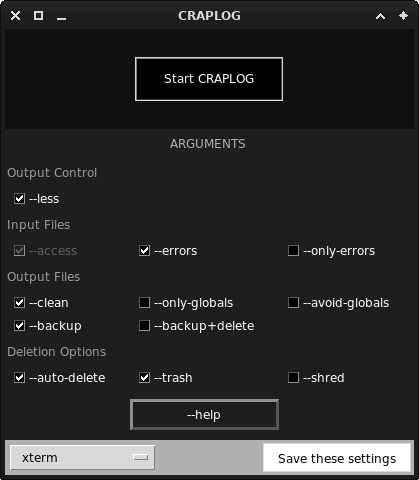

This is a GUI-assisted version of Craplog.

The GUI is just a launcher; Craplog still relies on a terminal.

Don't like it? Try the other versions of Craplog.

This graphical version of Craplog still depends on a terminal emulator to execute the main code.

Different terminals mean different behaviors. If you run into issues during execution, try switching to another terminal (bottom-left button).

- tk (tkinter)

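To install, make the installer executable and run it:

`chmod +x ./install.sh`

`./install.sh`

Then launch Craplog with:

`craplog`

or run the script directly:

`python3 craplog.py`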

At the moment, it only supports Apache2 log files in their default format and path.

If you're using a different path, open the file named Clean.py (inside the folder named crappy) and change the lines that refer to this path:

`/var/log/apache2/`

Access logs are expected in this format:

`IP - - [DATE:TIME ZONE] "REQUEST" RESPONSE SIZE "FROM URL" "USER AGENT"`

`123.123.123.123 - - [01/Jan/2000:00:10:20 +0000] "GET /style.css HTTP/1.1" 200 321 "/index.php" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:86.0) Firefox/86.0"`

Error logs are expected in this format:

`[DATE TIME] [LOG LEVEL] [PID] ERROR REPORT`

`[Mon Jan 01 10:20:30.456789 2000] [headers:trace2] [pid 12345] mod_headers.c(874): AH01502: headers: ap_headers_output_filter()`
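As a rough illustration of how an access log line breaks down into these fields, here is a minimal Python sketch. The `ACCESS_RE` pattern and the `parse_access_line` helper are hypothetical names, not Craplog's own code; the pattern assumes Apache's default combined format and does not handle escaped quotes inside the request.

```python
import re

# Hypothetical pattern for: IP - - [DATE:TIME ZONE] "REQUEST" RESPONSE SIZE "FROM URL" "USER AGENT"
ACCESS_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ '                  # client IP, identd, user
    r'\[(?P<datetime>[^\]]+)\] '             # [DATE:TIME ZONE]
    r'"(?P<request>[^"]*)" '                 # "REQUEST" (method, URI, protocol)
    r'(?P<response>\d{3}) (?P<size>\S+) '    # RESPONSE code and SIZE in bytes (or "-")
    r'"(?P<referer>[^"]*)" '                 # "FROM URL"
    r'"(?P<user_agent>[^"]*)"'               # "USER AGENT"
)

def parse_access_line(line):
    """Return the line's fields as a dict, or None if it doesn't match."""
    match = ACCESS_RE.match(line)
    return match.groupdict() if match else None

line = ('123.123.123.123 - - [01/Jan/2000:00:10:20 +0000] '
        '"GET /style.css HTTP/1.1" 200 321 "/index.php" '
        '"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:86.0) Firefox/86.0"')
fields = parse_access_line(line)
print(fields["ip"], fields["response"], fields["request"])
# 123.123.123.123 200 GET /style.css HTTP/1.1
```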

Please note that Craplog takes `*.log.1` files as input. By default, these files are rotated every day at midnight, so they contain the complete log of the previous day.

Because of that, when you run it, it uses yesterday's logs and stores the stat files accordingly.
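Shifting to yesterday's date is plain date arithmetic, and it crosses month and year boundaries correctly. In the sketch below, `stats_dir_for` and the `STATS/YYYY/MM/DD` layout are hypothetical, since this README only states that statistics are sorted by date.

```python
from datetime import date, timedelta
from pathlib import Path

def stats_dir_for(run_day, root="STATS"):
    """Folder for the day covered by *.log.1 (hypothetical STATS/YYYY/MM/DD layout)."""
    log_day = run_day - timedelta(days=1)  # *.log.1 holds the previous day's entries
    return Path(root, f"{log_day:%Y}", f"{log_day:%m}", f"{log_day:%d}")

print(stats_dir_for(date.today()))
print(stats_dir_for(date(2000, 1, 1)))  # crosses into 1999/12/31
```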

Craplog is meant to be run daily.

The clean access logs file (--clean) is nothing special. Craplog just creates a file containing the access logs with every line from a local connection removed (local connections are excluded from statistics too).

After that, the remaining lines are re-arranged so that entries are separated by one empty line if the connection comes from the same IP address as the previous one, or by two empty lines if the IP differs from the one above.

This isn't very useful if you usually check logs with `cat | grep`, but it helps if you read them directly from the file.

This is not a default feature.
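A minimal sketch of the cleaning step, assuming that a "local connection" means a loopback client address (127.x.x.x or ::1) and that the client IP is the first space-separated field. `is_local` and `clean_access_log` are hypothetical helpers, not the functions in Clean.py.

```python
def is_local(ip):
    """Assumption: loopback clients count as local connections."""
    return ip.startswith("127.") or ip == "::1"

def clean_access_log(lines):
    """Drop local connections and space out entries by client IP."""
    out = []
    previous_ip = None
    for raw in lines:
        line = raw.rstrip("\n")
        if not line:
            continue
        ip = line.split(" ", 1)[0]
        if is_local(ip):
            continue  # local connections are removed
        if previous_ip is not None:
            # one empty line for the same IP, two empty lines for a different one
            out.extend([""] * (1 if ip == previous_ip else 2))
        out.append(line)
        previous_ip = ip
    return "\n".join(out) + "\n" if out else ""

sample = ["1.1.1.1 - - a\n", "1.1.1.1 - - b\n", "127.0.0.1 - - c\n", "2.2.2.2 - - d\n"]
print(clean_access_log(sample), end="")
```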

By default, Craplog takes only the access.log.1 file as input (unless you tell it not to with the --only-errors argument; see below).

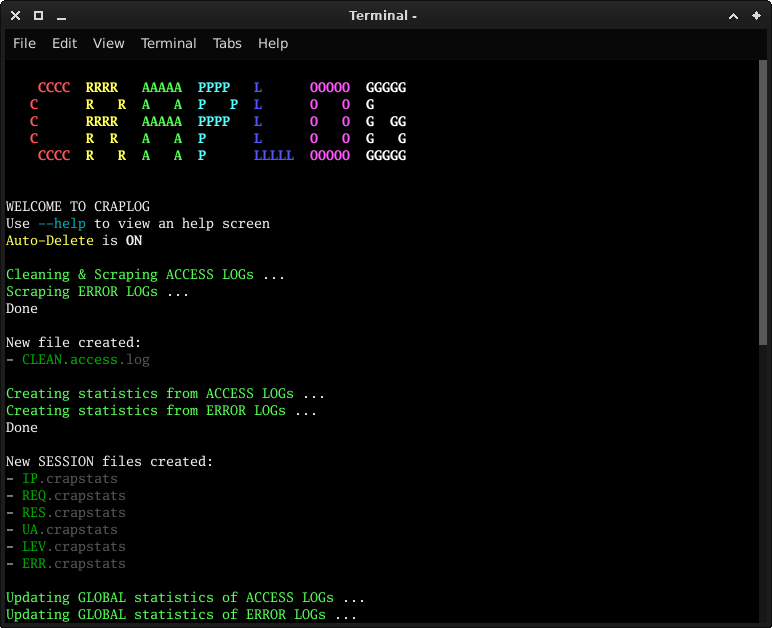

The first time you run it, it will create a folder named STATS.

Statistics files will be stored inside that folder and sorted by date.

Four .crapstats files will be created inside the folder named STATS:

- IP.crapstats = IP statistics of the chosen file

- REQ.crapstats = REQUEST statistics of the chosen file

- RES.crapstats = RESPONSE statistics of the chosen file

- UA.crapstats = USER AGENT statistics of the chosen file
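Each of these session files is essentially a frequency count of one field. Here is a sketch using `collections.Counter`, assuming the entries have already been split into fields; the key names and the `session_stats` helper are hypothetical, and it assumes REQ counts the whole request line.

```python
from collections import Counter

def session_stats(entries):
    """Count each field across parsed access-log entries.

    entries: iterable of dicts with 'ip', 'request', 'response', 'user_agent' keys.
    Returns one Counter per session .crapstats file.
    """
    stats = {"IP": Counter(), "REQ": Counter(), "RES": Counter(), "UA": Counter()}
    for entry in entries:
        stats["IP"][entry["ip"]] += 1
        stats["REQ"][entry["request"]] += 1
        stats["RES"][entry["response"]] += 1
        stats["UA"][entry["user_agent"]] += 1
    return stats

entries = [
    {"ip": "1.2.3.4", "request": "GET / HTTP/1.1", "response": "200", "user_agent": "UA"},
    {"ip": "1.2.3.4", "request": "GET /x HTTP/1.1", "response": "404", "user_agent": "UA"},
]
print(session_stats(entries)["IP"])
```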

You can also create statistics for the errors (--errors), or for the errors only (--only-errors, which skips the access.log.1 file).

This will create 2 additional files inside the STATS folder:

- LEV.crapstats = LOG LEVEL statistics of the chosen file

- ERR.crapstats = ERROR REPORT statistics of the chosen file
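The two error files can be produced the same way from error.log.1. In this sketch, `ERROR_RE` and `error_stats` are hypothetical names; it assumes the log level is the part after the colon in the second bracket (so `[headers:trace2]` counts as `trace2`), and it accepts `[pid N:tid M]` as well as `[pid N]`.

```python
import re
from collections import Counter

# Hypothetical pattern for: [DATE TIME] [LOG LEVEL] [PID] ERROR REPORT
ERROR_RE = re.compile(
    r'\[(?P<datetime>[^\]]+)\] '
    r'\[(?P<level>[^\]]+)\] '
    r'\[pid (?P<pid>[^\]]+)\] '
    r'(?P<report>.*)'
)

def error_stats(lines):
    """Count log levels and error reports; lines that don't match are skipped."""
    levels, reports = Counter(), Counter()
    for line in lines:
        match = ERROR_RE.match(line)
        if match:
            # "headers:trace2" -> "trace2" (module prefix dropped, an assumption)
            levels[match["level"].rsplit(":", 1)[-1]] += 1
            reports[match["report"]] += 1
    return {"LEV": levels, "ERR": reports}

line = ("[Mon Jan 01 10:20:30.456789 2000] [headers:trace2] [pid 12345] "
        "mod_headers.c(874): AH01502: headers: ap_headers_output_filter()")
print(error_stats([line])["LEV"])
```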

Additionally, by default Craplog updates the global statistics inside the STATS/GLOBALS folder every time you run it (unless you tell it not to with --avoid-globals).

Please note that if you run it twice on the same log file, the global statistics will no longer be reliable, because the same entries get counted twice.
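Updating globals amounts to adding each session's counts to the running totals, which is also why processing the same log twice inflates them. A minimal sketch with `collections.Counter`; `update_globals` is a hypothetical helper, not Craplog's own function.

```python
from collections import Counter

def update_globals(global_counts, session_counts):
    """Add one session's counts to the global totals in place."""
    global_counts.update(session_counts)  # Counter.update adds counts instead of replacing them
    return global_counts

global_res = Counter({"200": 100, "404": 10})
session_res = Counter({"200": 5, "500": 1})

update_globals(global_res, session_res)
print(global_res["200"])  # 105
update_globals(global_res, session_res)  # the same log processed a second time
print(global_res["200"])  # 110, so the session is now counted twice
```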

A maximum of 6 GLOBAL files will be created inside STATS/GLOBALS/:

- GLOBAL.IP.crapstats = GLOBAL IP statistics

- GLOBAL.REQ.crapstats = GLOBAL REQUEST statistics

- GLOBAL.RES.crapstats = GLOBAL RESPONSE statistics

- GLOBAL.UA.crapstats = GLOBAL USER AGENT statistics

- GLOBAL.LEV.crapstats = GLOBAL LOG LEVEL statistics

- GLOBAL.ERR.crapstats = GLOBAL ERROR REPORT statistics

Statistics files have the same structure for both SESSION and GLOBAL files:

`{ COUNT } >>> ELEMENT`

Example:

`{ 100 } >>> 200`

`{ 10 } >>> 404`
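Reading and writing this format takes only a regular expression and an f-string. The sketch below makes assumptions about the exact layout (spacing, ordering by count, no blank lines between entries) rather than describing Craplog's writer; `parse_stats` and `format_stats` are hypothetical names.

```python
import re

# One entry per line: "{ COUNT } >>> ELEMENT"
LINE_RE = re.compile(r"\{\s*(\d+)\s*\}\s*>>>\s*(.*)")

def parse_stats(text):
    """Read .crapstats text into {element: count}, skipping blank lines."""
    counts = {}
    for line in text.splitlines():
        match = LINE_RE.fullmatch(line.strip())
        if match:
            element = match.group(2)
            counts[element] = counts.get(element, 0) + int(match.group(1))
    return counts

def format_stats(counts):
    """Write {element: count} back out, most frequent first (assumed order)."""
    ordered = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    return "\n".join(f"{{ {count} }} >>> {element}" for element, count in ordered)

text = "{ 100 } >>> 200\n{ 10 } >>> 404"
print(parse_stats(text))  # {'200': 100, '404': 10}
```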


Run Craplog with its complete functionality: make a clean access logs file, create statistics for both the access.log.1 and error.log.1 files, use them to update the globals, and back up the original files:

`--clean --errors --backup`

Take both the access and error log files as input, but only update the global statistics. Also automatically delete every conflicting file found, moving it to the trash:

`--errors --only-globals --auto-delete --trash`

Also create statistics for the error log file, but skip updating the globals:

`--errors --avoid-globals`

Please note that even when using --only-globals, normal session statistics files are created, since Craplog needs session files in order to update the global ones.

After the job is complete, the session files are automatically removed.

Typical processing speed: 1 to 10 MB/s.

It may be higher or lower depending on the complexity of the logs, the size of your globals and the power of your CPU.

If Craplog takes more than 1 minute for a 10 MB file, your server has probably been probed or attacked in some way (worth checking).
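To put the 1-minute threshold in perspective, here is the arithmetic for a 10 MB file at the quoted speeds:

```latex
\begin{aligned}
t_{\text{fast}} &= \frac{10\ \text{MB}}{10\ \text{MB/s}} = 1\ \text{s}, \qquad
t_{\text{slow}} = \frac{10\ \text{MB}}{1\ \text{MB/s}} = 10\ \text{s} \\
t > 60\ \text{s} &\implies \text{throughput} < \frac{10\ \text{MB}}{60\ \text{s}} \approx 0.17\ \text{MB/s}
\end{aligned}
```

That is at least six times slower than the low end of the usual range.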

Craplog automatically makes backups of global statistics files, in case of fire.

If something goes wrong and you lose your current global files, you can recover them (at least as of the last backup).

Go to the Craplog folder, open 'STATS', then 'GLOBALS', show hidden files, and open '.BACKUPS'. There you will find the last 7 backups.

Folder named '7' is always the newest and '1' the oldest.

A new backup is made every 7th time you run Craplog. If you run it once a day, it will take a backup once a week and keep each one for 7 weeks.
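The rotation described above can be sketched as follows. The `is_backup_run` and `rotate_backups` helpers, the run counter, and the choice to copy the whole GLOBALS folder with `shutil` are assumptions for illustration; Craplog's own backup code may differ.

```python
import shutil
import tempfile
from pathlib import Path

def is_backup_run(run_count, every=7):
    """True on every 7th run (the run counter itself is hypothetical)."""
    return run_count % every == 0

def rotate_backups(backups_dir, globals_dir, keep=7):
    """Shift backups down one slot and store the current globals in the newest slot.

    Slot "1" is the oldest and slot str(keep) the newest, as in .BACKUPS.
    """
    backups = Path(backups_dir)
    backups.mkdir(parents=True, exist_ok=True)
    oldest = backups / "1"
    if oldest.exists():
        shutil.rmtree(oldest)  # the oldest backup is discarded
    for i in range(2, keep + 1):  # ascending order, so slot i-1 is always free
        slot = backups / str(i)
        if slot.exists():
            slot.rename(backups / str(i - 1))
    # Copy the current global files, skipping the backups folder itself
    shutil.copytree(globals_dir, backups / str(keep),
                    ignore=shutil.ignore_patterns(".BACKUPS"))

# Demo in a throwaway folder: 8 backup runs leave slots 1..7, newest in "7"
with tempfile.TemporaryDirectory() as tmp:
    globals_dir = Path(tmp) / "GLOBALS"
    globals_dir.mkdir()
    backups_dir = globals_dir / ".BACKUPS"
    for run in range(1, 9):
        (globals_dir / "GLOBAL.IP.crapstats").write_text(f"{{ {run} }} >>> 1.2.3.4\n")
        rotate_backups(backups_dir, globals_dir)
    slots = sorted(p.name for p in backups_dir.iterdir())
    newest = (backups_dir / "7" / "GLOBAL.IP.crapstats").read_text()
    oldest_text = (backups_dir / "1" / "GLOBAL.IP.crapstats").read_text()
    nested = (backups_dir / "7" / ".BACKUPS").exists()
print(slots, newest.strip(), oldest_text.strip())
```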

Craplog is under development.

If you have suggestions about how to improve it please open an issue.

If you're not running Apache but you like this tool, please open an issue as well (and include a sample of your log file).