
Env

Env in the env package defines an interface for environments, which determine the nature and sequence of State inputs to a model (and Action responses from the model that affect the environment). An environment's States evolve over time and thus the Env is concerned with defining and managing time as well as space (States). Indeed, in the limit of extreme realism, the environment is an actual physical environment (e.g., for a robot), or a VirtEnv virtual environment for simulated robots.

See the Envs repository for a number of standalone environments of more complexity.

The advantage of using a common interface across models is that it makes it easier to mix-and-match environments with models. In well-defined paradigms, a single common interface can encompass a wide variety of environments, as demonstrated by the OpenAI Gym, which also provides a great template for our interface. Unfortunately, they chose the term "Observation" instead of "State" for what you get from the environment, but otherwise our interface should be compatible overall, and we intend to provide a wrapper interface to the Gym (which is written in Python).

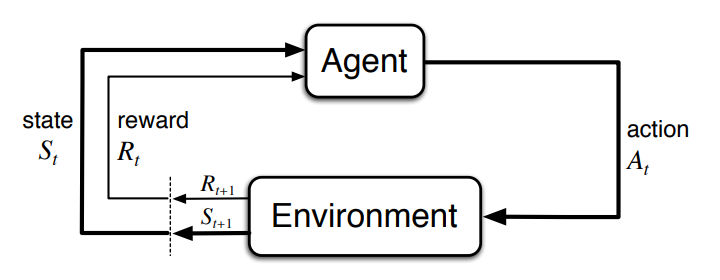

We use the standard terms as shown in the figure:

- State is what the env provides to the model.

- Action is what the model can perform on the env to influence its evolution.

We include Reward as just another element of the State, which may or may not be supported by a given environment.

The env State can have one or more Elements, each of which is something that can be represented by an n-dimensional Tensor (i.e., etensor.Tensor). In the simplest case, this reduces to a single scalar number, but it could also be the 4-dimensional output of a V1-like Gabor transform of an image. We chose the term Element because it has a more "atomic" elemental connotation -- these are the further-indivisible chunks of data that the env can give you. The Tensor encompasses the notion of a Space as used in the OpenAI Gym.
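To make the range from scalar to 4-dimensional Elements concrete, here is a minimal sketch. The Element type, NewElement, and Offset are hypothetical names invented for this illustration; they stand in for etensor.Tensor and are not part of the env package.

```go
package main

import "fmt"

// Element is a hypothetical stand-in for one state element: a shape plus
// flat, row-major values. The actual interface uses etensor.Tensor.
type Element struct {
	Shape  []int
	Values []float32
}

// NewElement allocates an Element with the given shape.
// With no dimensions it holds a single scalar value.
func NewElement(shape ...int) *Element {
	n := 1
	for _, d := range shape {
		n *= d
	}
	return &Element{Shape: shape, Values: make([]float32, n)}
}

// Offset converts n-dimensional indexes into a flat row-major offset.
func (el *Element) Offset(idx ...int) int {
	off := 0
	for i, ix := range idx {
		off = off*el.Shape[i] + ix
	}
	return off
}

func main() {
	// Simplest case: a single number.
	reward := NewElement(1)
	// A 4-dimensional element, e.g., the output of a bank of image filters.
	v1 := NewElement(2, 3, 4, 5)
	v1.Values[v1.Offset(1, 2, 3, 4)] = 1
	fmt.Println(len(reward.Values), len(v1.Values), v1.Offset(1, 2, 3, 4))
}
```

Both elements are handled through the same type, which is the point of representing every Element as a tensor.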

The main API for a model is to Step the environment, which advances it one step -- exactly what a Step means is env-specific. See the Time Scales section for more details about naming conventions for time scales.

The Env provides an intentionally incomplete interface -- given the wide and open-ended variety of possible environments, we can only hope to capture some of the most central properties shared by most environments: States, Actions, Stepping, and Time.
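As a rough illustration of these four shared properties, the sketch below defines a reduced, hypothetical interface and a toy implementation. MiniEnv and EchoEnv are invented for this example, plain slices replace etensor.Tensor, and strings replace time scale values; consult the env package source for the actual Env definition.

```go
package main

import "fmt"

// MiniEnv is a hypothetical, reduced environment interface covering
// States, Actions, Stepping, and Time.
type MiniEnv interface {
	Init(run int)
	Step() bool
	State(element string) []float32
	Action(element string, input []float32)
	Counter(scale string) (cur, prv int, chg bool)
}

// EchoEnv is a toy env whose "Input" state is the current trial number
// plus the last action value it received.
type EchoEnv struct {
	Run      int
	Trial    int
	PrvTrial int
	LastAct  float32
}

// Init prepares the env for a new run, before the first trial.
func (ev *EchoEnv) Init(run int) {
	ev.Run = run
	ev.Trial = -1
	ev.PrvTrial = -1
	ev.LastAct = 0
}

// Step advances the env by one trial.
func (ev *EchoEnv) Step() bool {
	ev.PrvTrial = ev.Trial
	ev.Trial++
	return true
}

// State returns the named element of the current state, or nil if unknown.
func (ev *EchoEnv) State(element string) []float32 {
	if element == "Input" {
		return []float32{float32(ev.Trial) + ev.LastAct}
	}
	return nil
}

// Action records the first value of the input, whatever the element name.
func (ev *EchoEnv) Action(element string, input []float32) {
	if len(input) > 0 {
		ev.LastAct = input[0]
	}
}

// Counter reports current and previous values and whether they differ.
func (ev *EchoEnv) Counter(scale string) (cur, prv int, chg bool) {
	if scale == "Trial" {
		return ev.Trial, ev.PrvTrial, ev.Trial != ev.PrvTrial
	}
	return -1, -1, false
}

func main() {
	var ev MiniEnv = &EchoEnv{}
	ev.Init(0)
	ev.Step()
	ev.Action("Act", []float32{0.5})
	ev.Step()
	fmt.Println(ev.State("Input"))
}
```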

Nevertheless, there are specific paradigms of environments that contain many exemplars, and by adopting standard naming and other conventions for such paradigms, we can maximize the ability to mix-and-match envs with models that handle those paradigms. See Paradigms for an expanding list of such paradigms and conventions. In some, but not all, cases these paradigms can be associated with a specific Go type that implements the Env interface, such as the FixedTable impl.

Here are the basic steps that a model uses in interacting with an Env:

- Set env-specific parameters -- these are outside the scope of the interface and are paradigm / implementation-specific. E.g., for the env.FixedTable, you need to set the table.IndexView onto a table.Table that supplies the patterns.

- Call Init(run) with a given run counter (managed externally by the model and not the env), which initializes a given run ("subject") through the model. Envs could do different things for different subjects, e.g., for between-subject designs.

- During the run, repeatedly call:

    - Step() to advance the env one step.

    - State("elem") to get a particular element of the state, as a Tensor, which can then be applied as an input to the model. E.g., you can read the "Input" state from a simple pattern associator or classifier paradigm env, and apply it to the "Input" layer of a neural network. Iterate for other elements of the state as needed (e.g., "Output" for training targets). Again, this is all paradigm and env-specific and not determined by the interface, in terms of what state elements are available when, etc.

    - Action("act") (optional, depends on env) to send an action input to the env from the model. Envs can also accept actions generated by anything, so other agents or other control parameters etc. could be implemented via actions. The Env should generally record these actions and incorporate them into the next Step function, instead of changing the current state, so it can still be queried, but that is not essential and again is env-specific.

- The Env can also manage Counter states associated with the relevant time scales of the environment, but in general it is up to the Sim to manage time through the Looper system, and coordinate with the env as needed.
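The steps above can be sketched from the model's side as follows. TableEnv and RunTrials are hypothetical names for this example only, and plain slices stand in for the table.Table patterns and the tensors that a real env.FixedTable would use.

```go
package main

import "fmt"

// TableEnv is a hypothetical env that presents rows of a fixed table in order.
type TableEnv struct {
	Inputs  [][]float32
	Outputs [][]float32
	Run     int
	Row     int
}

// Init resets the env for a new run; Row starts before the first pattern.
func (ev *TableEnv) Init(run int) {
	ev.Run = run
	ev.Row = -1
}

// Step advances to the next row, wrapping around at the end of the table.
func (ev *TableEnv) Step() bool {
	ev.Row = (ev.Row + 1) % len(ev.Inputs)
	return true
}

// State returns the named element of the current row, or nil if unknown.
func (ev *TableEnv) State(element string) []float32 {
	switch element {
	case "Input":
		return ev.Inputs[ev.Row]
	case "Output":
		return ev.Outputs[ev.Row]
	}
	return nil
}

// RunTrials plays the model's role: initialize the run, then repeatedly
// step the env and read the elements of its current state.
func RunTrials(ev *TableEnv, run, n int) []string {
	var log []string
	ev.Init(run)
	for i := 0; i < n; i++ {
		ev.Step()
		in, out := ev.State("Input"), ev.State("Output")
		// A real model would apply these to its input and target layers.
		log = append(log, fmt.Sprintf("in=%v target=%v", in, out))
	}
	return log
}

func main() {
	// Env-specific setup, outside the interface: supply the patterns.
	ev := &TableEnv{
		Inputs:  [][]float32{{0, 1}, {1, 0}},
		Outputs: [][]float32{{1}, {0}},
	}
	for _, line := range RunTrials(ev, 0, 3) {
		fmt.Println(line)
	}
}
```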

In general, the Env always represents the current state of the environment. Thus, whenever you happen to query it via State or Counter calls, it should tell you what the current state of things is, as of the last Step function.

To accommodate fully dynamic, unpredictable environments, the Env thus cannot tell you in advance when something important like a Counter state will change until after it actually changes. This means that when you call the Step function, and query the Counter and find that it has changed, the current state of the env has already moved on. Thus, per the basic operation described above, you must first check for changed counters after calling Step, and process any relevant changes in counter states, before moving on to process the current env state, which again is now the start of something new.

For this reason, the Counter function returns the previous counter value, along with the current, and a bool to tell you if this counter changed: theoretically, the state could have changed but the counter values are the same, e.g., if a counter has a Max of 1 and is thus always 0. The Counter struct maintains all this for you automatically and makes it very easy to support this interface.
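A minimal sketch of such a counter, and of the check-counters-first ordering, might look like the following. The Ctr type and its methods are written for this illustration under the assumptions described above; they are not a copy of the package's Counter struct.

```go
package main

import "fmt"

// Ctr is a hypothetical counter that remembers its previous value and
// whether it changed on the most recent step.
type Ctr struct {
	Cur int
	Prv int
	Max int
	Chg bool
}

// Init sets the starting value (use -1 so the first Incr yields 0).
func (ct *Ctr) Init(start int) {
	ct.Cur = start
	ct.Prv = -1
	ct.Chg = false
}

// Incr advances the counter, wrapping to 0 at Max when Max > 0, and
// returns true if it wrapped. Chg is set on every call, even when the
// value ends up the same, as happens with Max = 1.
func (ct *Ctr) Incr() bool {
	ct.Chg = true
	ct.Prv = ct.Cur
	ct.Cur++
	if ct.Max > 0 && ct.Cur >= ct.Max {
		ct.Cur = 0
		return true
	}
	return false
}

// Same marks the counter as unchanged on this step.
func (ct *Ctr) Same() {
	ct.Chg = false
	ct.Prv = ct.Cur
}

// Query returns the current value, the previous value, and the changed flag.
func (ct *Ctr) Query() (cur, prv int, chg bool) {
	return ct.Cur, ct.Prv, ct.Chg
}

func main() {
	trial := Ctr{Max: 3}
	epoch := Ctr{}
	trial.Init(-1)
	epoch.Init(0)
	for step := 0; step < 4; step++ {
		// What an env's Step might do internally.
		if trial.Incr() {
			epoch.Incr()
		} else {
			epoch.Same()
		}
		// Model side: first check for changed counters...
		if cur, prv, chg := epoch.Query(); chg {
			fmt.Printf("epoch %d finished, now in epoch %d\n", prv, cur)
		}
		// ...then process the current state, which already belongs
		// to the new epoch.
		cur, _, _ := trial.Query()
		fmt.Println("trial", cur)
	}
}
```

Because the changed flag is tracked separately from the values, a model can detect a transition even when the current and previous values are equal.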

To facilitate consistency and interoperability, we have defined a set of standard time scale terms that envs are encouraged to use. This list can be extended in specific end-user packages, but others won't know about those extensions, so please file an issue if you have something that should be here!

These timescales are now in the etime package, which encompasses both biological and environmental time scales.

- Event is the smallest unit of naturalistic experience that coheres unto itself (e.g., something that could be described in a sentence). Typically this is on the time scale of a few seconds: e.g., reaching for something, catching a ball. In an experiment it could just be the onset of a stimulus, or the generation of a response.

- Trial is one unit of behavior in an experiment, and could potentially encompass multiple Events (e.g., one event is fixation, next is stimulus, last is response, all comprising one Trial). It is also conventionally used as a single Input / Output learning instance in a standard error-driven learning paradigm.

- Sequence is a sequential group of Trials (not always needed).

- Block is a collection of Trials, Sequences or Events, often used in experiments when conditions are varied across blocks.

- Condition is a collection of Blocks that share the same set of parameters -- this is intermediate between Block and Run levels.

- Epoch is used in two different contexts. In machine learning, it represents a collection of Trials, Sequences or Events that constitute a "representative sample" of the environment. In the simplest case, it is the entire collection of Trials used for training. In electrophysiology, it is a timing window used for organizing the analysis of electrode data.

- Run is a complete run of a model / subject, from training to testing, etc. Often multiple runs are done in an Expt to obtain statistics over initial random weights etc.

- Expt is an entire experiment -- multiple Runs through a given protocol / set of parameters. In general this is beyond the scope of the Env interface but is included for completeness.

- Scene is a sequence of events that constitutes the next larger-scale coherent unit of naturalistic experience, corresponding e.g., to a scene in a movie. Typically consists of events that all take place in one location over e.g., a minute or so. This could be a paragraph or a page or so in a book.

- Episode is a sequence of scenes that constitutes the next larger-scale unit of naturalistic experience, e.g., going to the grocery store or eating at a restaurant, attending a wedding or other "event". This could be a chapter in a book.

The following are naming conventions for identified widely-used paradigms. Following these conventions will ensure easy use of a given env across models designed for such paradigms.

The pattern association / classification paradigm is a basic machine-learning paradigm that can also be used to simulate cognitive tasks such as reading, stimulus-response learning, etc.

The env.FixedTable implementation supports this paradigm (with appropriately chosen Element names).

- State Elements:

    - "Input" = the main stimulus or input pattern.

    - "Output" = the main output pattern that the model should learn to produce for given Input.

    - Other elements as needed should contain "_Input" or "_In" suffix for inputs, and "_Output" or "_Out" for outputs.

- Counters:

    - "Trial" = increments for each input / output pattern. This is one Step().

    - "Epoch" = increments over complete set or representative sample of trials.
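One way a model could take advantage of these naming conventions is to route state elements by name. The ElementRole helper below is hypothetical, written only to illustrate the suffix rules listed above, and the element names in main are made-up examples.

```go
package main

import (
	"fmt"
	"strings"
)

// ElementRole is a hypothetical helper that classifies a state element
// name as "input", "output", or "other" using the suffix conventions.
func ElementRole(name string) string {
	switch {
	case name == "Input",
		strings.HasSuffix(name, "_Input"),
		strings.HasSuffix(name, "_In"):
		return "input"
	case name == "Output",
		strings.HasSuffix(name, "_Output"),
		strings.HasSuffix(name, "_Out"):
		return "output"
	}
	return "other"
}

func main() {
	names := []string{"Input", "Context_In", "Output", "Phon_Out", "Reward"}
	for _, nm := range names {
		fmt.Println(nm, "->", ElementRole(nm))
	}
}
```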

The following conventions apply to paradigms organized around State, Reward, and Action elements, as in reinforcement learning:

- State Elements:

    - "State" = main environment state. "_State" suffix can be used for additional state elements.

    - "Reward" = reward value -- the first cell should be the overall primary scalar reward, but if higher-dimensional, other dimensions can encode different, more specific "US outcome" states, or other Element names could be used (non-standardized, but "Food", "Water", "Health", "Points", "Money", etc. are obvious choices).

- Action Elements:

    - "Action" = main action. Others may be available -- we might try to standardize sub-types as we get more experience.

- Counters:

    - "Event" = main inner-loop counter, what Step() advances.

    - All others are optional but might be useful depending on the paradigm. E.g., Block might be used for a level in a video game, and Sequence for a particular move sequence within a level.
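The sketch below puts the "State", "Reward", "Action", and "Event" conventions together in a toy env that records an action and only applies it on the next Step, so the current state can still be queried in the meantime. WalkEnv is a hypothetical example, not part of any package, and slices again stand in for tensors.

```go
package main

import "fmt"

// WalkEnv is a hypothetical 1D env: the agent moves along a line of Size
// positions and is rewarded upon reaching the last one.
type WalkEnv struct {
	Size  int
	Pos   int
	Event int
	// Pending is the action recorded since the last Step.
	Pending int
	// Reward's first cell is the overall primary scalar reward.
	Reward []float32
}

// Init resets the env for a new run.
func (ev *WalkEnv) Init(run int) {
	ev.Pos, ev.Event, ev.Pending = 0, -1, 0
	ev.Reward = make([]float32, 1)
}

// Action records the move (-1 or +1 in input[0]) without altering the
// current state.
func (ev *WalkEnv) Action(element string, input []float32) {
	if element == "Action" && len(input) > 0 {
		ev.Pending = int(input[0])
	}
}

// Step advances the Event counter and applies any pending action.
func (ev *WalkEnv) Step() bool {
	ev.Event++
	ev.Pos += ev.Pending
	ev.Pending = 0
	if ev.Pos < 0 {
		ev.Pos = 0
	}
	if ev.Pos >= ev.Size {
		ev.Pos = ev.Size - 1
	}
	ev.Reward[0] = 0
	if ev.Pos == ev.Size-1 {
		ev.Reward[0] = 1
	}
	return true
}

// State returns the named element of the current state, or nil if unknown.
func (ev *WalkEnv) State(element string) []float32 {
	switch element {
	case "State":
		return []float32{float32(ev.Pos)}
	case "Reward":
		return ev.Reward
	}
	return nil
}

func main() {
	ev := &WalkEnv{Size: 3}
	ev.Init(0)
	ev.Step()
	for ev.State("Reward")[0] == 0 {
		// A trivial policy: always move right.
		ev.Action("Action", []float32{1})
		fmt.Println("pos before step:", ev.State("State")[0])
		ev.Step()
	}
	fmt.Println("rewarded at event", ev.Event)
}
```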

See this example in the Leabra repository: https://github.com/emer/leabra/tree/master/examples/env

Here's an example of how to use the Action function, by reading the Act values from a given layer, and sending that to the environment. (This example is from a model of change detection in a working memory task, so things like the name of the function MatchAction, the environment type *CDTEnv, and the layer name Match should be set as appropriate for your model.) The Action is usually called after the minus phase (3rd Quarter) in a Leabra model, to generate a plus-phase reward or target input, or after the trial to affect the generation of the next input State on the next trial. In either case, just write a function like that below, and call it at the appropriate point within your AlphaCyc method.

```go
// MatchAction reads the activations of the Match layer and sends them
// to the current (training or testing) environment as an action.
func (ss *Sim) MatchAction(train bool) {
	var en *CDTEnv
	if train {
		en = &ss.TrainEnv
	} else {
		en = &ss.TestEnv
	}
	ly := ss.Net.LayerByName("Match").(leabra.LeabraLayer).AsLeabra()
	tsr := ss.ValsTsr("Match")    // see ra25 example for this method for a reusable map of tensors
	ly.UnitValsTensor(tsr, "Act") // read the acts into tensor
	en.Action("Match", tsr)       // pass tensor to env, computes reward
}
```

Here's the Action method on the Env, which receives the tensor state input and processes it to set the value of the Reward tensor, which is a State that can be read from the Env and applied to the corresponding layer in the network. Obviously, one can write whatever function is needed to set or compute a desired State:

```go
// Action sets the Reward state from the activations received for the
// given element.
func (ev *CDTEnv) Action(element string, input etensor.Tensor) {
	switch element {
	case "Match":
		mact := tsragg.Max(input) // gets max activation value
		// fmt.Printf("match act: %v\n", mact)
		if mact < 0.5 {
			ev.Reward.Values[0] = 0 // Reward is an etensor.Float32, Shape 1d
		} else {
			ev.Reward.Values[0] = 1
		}
	}
}
```