This is a fork of the original QuaggaJS library, which will be maintained until the original author and maintainer returns, or until it has been completely replaced by built-in browser and Node functionality.

- Changelog

- Browser Support

- Installing

- Getting Started

- Using with React

- Using External Readers

- API

- CameraAccess API

- Configuration

- Tips & Tricks

For React, please see https://github.com/ericblade/quagga2-react-example/ and https://github.com/ericblade/quagga2-redux-middleware/

For Angular, please see https://github.com/julienboulay/ngx-barcode-scanner or https://github.com/classycodeoss/mobile-scanning-demo

For ThingWorx, please see https://github.com/ptc-iot-sharing/ThingworxBarcodeScannerWidget

For Vue, please see https://github.com/DevinNorgarb/vue-quagga-2

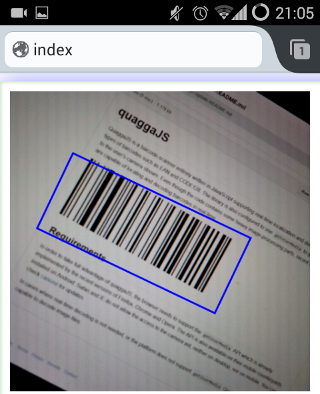

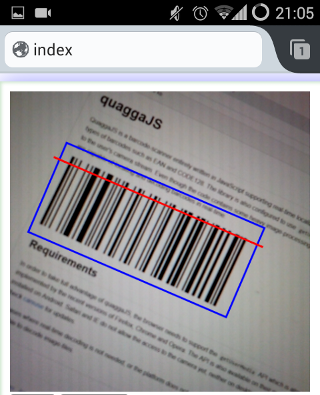

QuaggaJS is a barcode-scanner entirely written in JavaScript supporting real-time localization and decoding of various types of barcodes such as EAN, CODE 128, CODE 39, EAN 8, UPC-A, UPC-E, I2of5, 2of5, CODE 93, CODE 32 and CODABAR. The library is also capable of using getUserMedia to get direct access to the user's camera stream. Although the code relies on heavy image-processing, even recent smartphones are capable of locating and decoding barcodes in real-time.

Try some examples and check out the blog post (How barcode-localization works in QuaggaJS) if you want to dive deeper into this topic.

This is not yet another port of the great zxing library, but more of an extension to it. This implementation features a barcode locator which is capable of finding a barcode-like pattern in an image resulting in an estimated bounding box including the rotation. Simply speaking, this reader is invariant to scale and rotation, whereas other libraries require the barcode to be aligned with the viewport.

Quagga makes use of many modern Web-APIs which are not implemented by all browsers yet. There are two modes in which Quagga operates:

- analyzing static images and

- using a camera to decode the images from a live-stream.

The latter requires the presence of the MediaDevices API. You can track the compatibility of the used Web-APIs for each mode:

The following APIs need to be implemented in your browser:

In addition to the APIs mentioned above:

Important: Accessing getUserMedia requires a secure origin in most browsers, meaning that http:// can only be used on localhost. All other hostnames need to be served via https://. You can find more information in the Chrome M47 WebRTC Release Notes.

Every browser seems to implement the mediaDevices.getUserMedia API differently. Therefore it's highly recommended to include webrtc-adapter in your project.

Here's how you can test your browser's capabilities:

    if (navigator.mediaDevices && typeof navigator.mediaDevices.getUserMedia === 'function') {
        // safely access `navigator.mediaDevices.getUserMedia`
    }

The above condition evaluates to:

| Browser | Result |
|---|---|
| Edge | true |
| Chrome | true |
| Firefox | true |
| IE 11 | false |
| Safari iOS | true |

Quagga2 can be installed using npm, or by including it with the script tag.

    > npm install --save @ericblade/quagga2

And then import it as a dependency in your project:

    import Quagga from '@ericblade/quagga2'; // ES6

    const Quagga = require('@ericblade/quagga2').default; // Common JS (important: default)

Currently, the full functionality is only available through the browser. When using QuaggaJS within node, only file-based decoding is available. See the example for node_examples.

You can simply include quagga.js in your project and you are ready to go. The script exposes the library on the global namespace under Quagga.

    <script src="quagga.js"></script>

You can get the quagga.js file in the following ways:

By installing the npm module and copying the quagga.js file from the dist folder.

(OR)

You can also build the library yourself and copy the quagga.js file from the dist folder (refer to the building section for more details).

(OR)

You can include the following script tags with CDN links:

a) quagga.js

    <script src="https://cdn.jsdelivr.net/npm/@ericblade/quagga2/dist/quagga.js"></script>

b) quagga.min.js (minified version)

    <script src="https://cdn.jsdelivr.net/npm/@ericblade/quagga2/dist/quagga.min.js"></script>

Note: You can include a specific version of the library by including the version as shown below.

    <!-- Link for Version 1.2.6 -->
    <script src="https://cdn.jsdelivr.net/npm/@ericblade/quagga2@1.2.6/dist/quagga.js"></script>

For starters, have a look at the examples to get an idea where to go from here.

There is a separate example for using quagga2 with ReactJS

New in Quagga2 is the ability to specify external reader modules. Please see quagga2-reader-qr. This repository includes a sample external reader that can read complete images, and decode QR codes. A test script is included to demonstrate how to use an external reader in your project.

Quagga2 exports the BarcodeReader prototype, which should also allow you to create new barcode reader implementations using the base BarcodeReader implementation inside Quagga2. The QR reader does not make use of this functionality, as QR is not picked up as a barcode in BarcodeReader.

You can build the library yourself by simply cloning the repo and typing:

    > npm install
    > npm run build

or using Docker:

    > docker build --tag quagga2/build .
    > docker run -v $(pwd):/quagga2 quagga2/build npm install
    > docker run -v $(pwd):/quagga2 quagga2/build npm run build

It's also possible to use docker-compose:

    > docker-compose run nodejs npm install
    > docker-compose run nodejs npm run build

Note: when using Docker or docker-compose the build artifacts will end up in dist/ as usual thanks to the bind-mount.

This npm script builds a non-optimized version, quagga.js, and a minified version, quagga.min.js, and places both files in the dist folder. Additionally, a quagga.map source-map is placed alongside these files. This file is only valid for the non-uglified version quagga.js because the minified version is altered after compression and does not align with the map file any more.

If you are working on a project that includes quagga, but you need to use a development version of quagga, then you can run from the quagga directory:

    npm install && npm run build && npm link

Then, from the other project directory that needs this quagga, run:

    npm link @ericblade/quagga2

When linking is successful, all future runs of 'npm run build' will update the version that is linked in the project. When combined with an application using webpack-dev-server or some other hot-reload system, you can do very rapid iteration this way.

The code in the dist folder is only targeted to the browser and won't work in node due to the dependency on the DOM. For use in node, the build command also creates a quagga.js file in the lib folder.

You can check out the examples to get an idea of how to use QuaggaJS. Basically the library exposes the following API:

Quagga.init(config, callback) initializes the library for a given configuration config (see below) and invokes the callback(err) when Quagga has finished its bootstrapping phase. The initialization process also requests camera access if real-time detection is configured. In case of an error, the err parameter is set and contains information about the cause. A potential cause may be that inputStream.type is set to LiveStream but the browser does not support this API, or simply that the user denied permission to use the camera.

If you do not specify a target, QuaggaJS looks for an element that matches the CSS selector #interactive.viewport (for backwards compatibility). target can be a string (a CSS selector matching one of your DOM nodes) or a DOM node.

    Quagga.init({
        inputStream : {
            name : "Live",
            type : "LiveStream",
            target: document.querySelector('#yourElement') // Or '#yourElement' (optional)
        },
        decoder : {
            readers : ["code_128_reader"]
        }
    }, function(err) {
        if (err) {
            console.log(err);
            return;
        }
        console.log("Initialization finished. Ready to start");
        Quagga.start();
    });

When the library is initialized, the start() method starts the video-stream and begins locating and decoding the images.

If the decoder is currently running, after calling stop() the decoder does not process any more images. Additionally, if a camera-stream was requested upon initialization, this operation also disconnects the camera.

Quagga.onProcessed(callback) registers a callback(data) function that is called for each frame after the processing is done. The data object contains detailed information about the success or failure of the operation. The output varies depending on whether the detection and/or decoding were successful.

Quagga.onDetected(callback) registers a callback(data) function which is triggered whenever a barcode-pattern has been located and decoded successfully. The passed data object contains information about the decoding process, including the detected code, which can be obtained by reading data.codeResult.code.
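A minimal sketch of a detection handler, assuming the data shape documented in this README (`data.codeResult.code`). The helper name `extractCode` is hypothetical and not part of Quagga; the Quagga call itself is shown only as a comment because it needs a browser and a successful init.

```javascript
// Hypothetical helper: pull the decoded string out of a result object.
// Returns null when the result carries no decoded barcode.
function extractCode(data) {
    if (!data || !data.codeResult || typeof data.codeResult.code !== 'string') {
        return null;
    }
    return data.codeResult.code;
}

// In a browser, after Quagga.init() has succeeded, it could be wired up like this:
// Quagga.onDetected(function (data) {
//     const code = extractCode(data);
//     if (code !== null) {
//         console.log('Detected', code);
//     }
// });
```

Keeping the extraction in a small function makes it easy to reuse the same logic for onDetected and decodeSingle callbacks.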

In contrast to the calls described above, decodeSingle does not rely on getUserMedia and operates on a single image instead. The provided callback is the same as in onDetected and contains the result data object.

In case the onProcessed event is no longer relevant, offProcessed removes the given handler from the event-queue. When no handler is passed, all handlers are removed.

In case the onDetected event is no longer relevant, offDetected removes the given handler from the event-queue. When no handler is passed, all handlers are removed.

The callbacks passed into onProcessed, onDetected and decodeSingle receive a data object upon execution. The data object contains the following information. Depending on the success, some fields may be undefined or just empty.

    {
        "codeResult": {
            "code": "FANAVF1461710", // the decoded code as a string
            "format": "code_128", // or code_39, codabar, ean_13, ean_8, upc_a, upc_e
            "start": 355,
            "end": 26,
            "codeset": 100,
            "startInfo": {
                "error": 1.0000000000000002,
                "code": 104,
                "start": 21,
                "end": 41
            },
            "decodedCodes": [{
                "code": 104,
                "start": 21,
                "end": 41
            },
            // stripped for brevity
            {
                "error": 0.8888888888888893,
                "code": 106,
                "start": 328,
                "end": 350
            }],
            "endInfo": {
                "error": 0.8888888888888893,
                "code": 106,
                "start": 328,
                "end": 350
            },
            "direction": -1
        },
        "line": [{
            "x": 25.97278706156836,
            "y": 360.5616435369468
        }, {
            "x": 401.9220519377024,
            "y": 70.87524989906444
        }],
        "angle": -0.6565217179979483,
        "pattern": [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, /* ... */ 1],
        "box": [
            [77.4074243622672, 410.9288668804402],
            [0.050203235235130705, 310.53619724086366],
            [360.15706727788256, 33.05711026051813],
            [437.5142884049146, 133.44977990009465]
        ],
        "boxes": [
            [
                [77.4074243622672, 410.9288668804402],
                [0.050203235235130705, 310.53619724086366],
                [360.15706727788256, 33.05711026051813],
                [437.5142884049146, 133.44977990009465]
            ],
            [
                [248.90769330706507, 415.2041489551161],
                [198.9532321622869, 352.62160512937635],
                [339.546160777576, 240.3979259789976],
                [389.5006219223542, 302.98046980473737]
            ]
        ]
    }

Quagga2 exposes a CameraAccess API that provides shortcut access to commonly used camera functions. This API is available as Quagga.CameraAccess and is documented below.

Will attempt to initialize the camera and start playback given the specified video element. Camera is selected by the browser based on the MediaTrackConstraints supplied. If no video element is supplied, the camera will be initialized but invisible. This is mostly useful for probing that the camera is available, or probing to make sure that permissions are granted by the user. This function will return a Promise that resolves when completed, or rejects on error.

If a video element is known to be running, this will pause the video element, then return a Promise that when resolved will have stopped all tracks in the video element, and released all resources.

This will send out a call to navigator.mediaDevices.enumerateDevices(), filter out any mediadevices that do not have a kind of 'videoinput', and resolve the promise with an array of MediaDeviceInfo.

Returns the label for the active video track.

Returns the MediaStreamTrack for the active video track.

Turns off Torch (camera flash). Resolves when complete, throws on error. Known not to work on iOS devices running version 16.4 or earlier; it may or may not work on later versions.

Turns on Torch (camera flash). Resolves when complete, throws on error. Known not to work on iOS devices running version 16.4 or earlier; it may or may not work on later versions.

The configuration that ships with QuaggaJS covers the default use-cases and can be fine-tuned for specific requirements.

The configuration is managed by the config object defining the following high-level properties:

    {
        locate: true,
        inputStream: {...},
        frequency: 10,
        decoder: {...},
        locator: {...},
        debug: false,
    }

One of the main features of QuaggaJS is its ability to locate a barcode in a given image. The locate property controls whether this feature is turned on (default) or off.

Why would someone turn this feature off? Localizing a barcode is a computationally expensive operation and might not work properly on some devices. Another reason would be the lack of auto-focus producing blurry images which makes the localization feature very unstable.

However, even if none of the above apply, there is one more case where it might be useful to disable locate: if the orientation and/or the approximate position of the barcode is known, or if you want to guide the user through a rectangular outline. This can increase performance and robustness at the same time.

The inputStream property defines the sources of images/videos within QuaggaJS.

    {
        name: "Live",
        type: "LiveStream",
        constraints: {
            width: 640,
            height: 480,
            facingMode: "environment",
            deviceId: "7832475934759384534"
        },
        area: { // defines rectangle of the detection/localization area
            top: "0%",    // top offset
            right: "0%",  // right offset
            left: "0%",   // left offset
            bottom: "0%"  // bottom offset
        },
        singleChannel: false // true: only the red color-channel is read
    }

First, the type property can be set to three different values: ImageStream, VideoStream, or LiveStream (default) and should be selected depending on the use-case. Most probably, the default value is sufficient.

Second, the constraints key defines the physical dimensions of the input image and additional properties, such as facingMode, which sets the source of the user's camera in case of multiple attached devices. Additionally, if required, the deviceId can be set if the selection of the camera is given to the user. This can be easily achieved via MediaDevices.enumerateDevices().
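A minimal sketch of letting the user choose a camera, assuming the standard `MediaDeviceInfo` fields `kind` and `deviceId`. The helper names `listVideoInputs` and `constraintsFor` are hypothetical, and the 640x480 size is simply the value used in the sample configuration.

```javascript
// Hypothetical helper: keep only cameras from an enumerateDevices() result.
function listVideoInputs(devices) {
    return devices.filter(function (device) {
        return device.kind === 'videoinput';
    });
}

// Hypothetical helper: build inputStream.constraints for the chosen camera.
function constraintsFor(deviceId) {
    return { width: 640, height: 480, deviceId: deviceId };
}

// Browser usage (not executed here):
// navigator.mediaDevices.enumerateDevices().then(function (devices) {
//     const cameras = listVideoInputs(devices);
//     // present `cameras` to the user, then pass
//     // constraintsFor(chosen.deviceId) as inputStream.constraints
// });
```

Note that browsers typically expose device labels only after camera permission has been granted, so a selection list may show empty labels before that point.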

Third, the area property restricts the decoding area of the image. The values are given in percentage, similar to the CSS style property when using position: absolute. This area is also useful in cases where the locate property is set to false, defining the rectangle for the user.
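To make the percentage offsets concrete, here is a small illustration that converts an area definition into a pixel rectangle. This is not Quagga's internal implementation; the function name `areaToRect` and the rounding behaviour are assumptions chosen for the example.

```javascript
// Illustration only: convert percentage offsets into a pixel rectangle.
function areaToRect(imageWidth, imageHeight, area) {
    // "25%" -> 0.25
    const fraction = function (value) {
        return parseFloat(value) / 100;
    };
    const left = Math.round(imageWidth * fraction(area.left));
    const right = Math.round(imageWidth * fraction(area.right));
    const top = Math.round(imageHeight * fraction(area.top));
    const bottom = Math.round(imageHeight * fraction(area.bottom));
    return {
        x: left,
        y: top,
        width: imageWidth - left - right,
        height: imageHeight - top - bottom
    };
}

const rect = areaToRect(640, 480, { top: '25%', right: '10%', left: '10%', bottom: '25%' });
console.log(rect); // { x: 64, y: 120, width: 512, height: 240 }
```

A rectangle like this could also be used to draw the outline that guides the user when locate is turned off.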

The last key, singleChannel, is only relevant in cases where someone wants to debug erroneous behavior of the decoder. If set to true, the input image's red color-channel is read instead of calculating the gray-scale values of the source's RGB. This is useful in combination with the ResultCollector, where the gray-scale representations of the wrongly identified images are saved.

The top-level frequency property controls the scan-frequency of the video-stream. It's optional and defines the maximum number of scans per second. This is useful in cases where the scan-session is long-running and resources such as CPU power are of concern.
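As a rough illustration of what a maximum number of scans per second means, a frequency of 10 corresponds to at least 100 ms between scans. The sketch below shows that arithmetic; the function names are hypothetical and this is not how Quagga schedules frames internally.

```javascript
// Illustration only: minimum gap between scans for a given frequency (scans per second).
function minIntervalMs(frequency) {
    return 1000 / frequency;
}

// Returns true when enough time has passed since the last scan.
function shouldScan(lastScanMs, nowMs, frequency) {
    return nowMs - lastScanMs >= minIntervalMs(frequency);
}
```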

QuaggaJS usually runs in a two-stage manner (locate is set to true) where, after the barcode is located, the decoding process starts. Decoding is the process of converting the bars into their true meaning. Most of the configuration options within the decoder are for debugging/visualization purposes only.

    {
        readers: [
            'code_128_reader'
        ],
        debug: {
            drawBoundingBox: false,
            showFrequency: false,
            drawScanline: false,
            showPattern: false
        },
        multiple: false
    }

The most important property is readers, which takes an array of types of barcodes which should be decoded during the session. Possible values are:

- code_128_reader (default)

- ean_reader

- ean_8_reader

- code_39_reader

- code_39_vin_reader

- codabar_reader

- upc_reader

- upc_e_reader

- i2of5_reader

- 2of5_reader

- code_93_reader

- code_32_reader

Why are not all types activated by default? Simply because one should explicitly define the set of barcodes for their use-case. More decoders means more possible clashes, or false-positives. One should take care of the order the readers are given, since some might return a value even though it is not the correct type (EAN-13 vs. UPC-A).

The multiple property tells the decoder if it should continue decoding after finding a valid barcode. If multiple is set to true, the results will be returned as an array of result objects. Each object in the array will have a box, and may have a codeResult depending on the success of decoding the individual box.
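A minimal sketch of handling results when multiple is enabled, assuming the array shape described above (every entry has a box, only some have a codeResult). The helper name `collectCodes` is hypothetical.

```javascript
// Hypothetical helper: collect the decoded strings from an array of results,
// skipping boxes that were located but could not be decoded.
function collectCodes(results) {
    return results
        .filter(function (result) {
            return result.codeResult && result.codeResult.code;
        })
        .map(function (result) {
            return result.codeResult.code;
        });
}
```

Entries without a codeResult are dropped here, but they could also be kept if the application wants to highlight barcodes that were located and not yet decoded.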

The remaining properties drawBoundingBox, showFrequency, drawScanline and showPattern are mostly of interest during debugging and visualization.

The default setting for ean_reader is not capable of reading extensions such as EAN-2 or EAN-5. In order to activate those supplements you have to provide them in the configuration as follows:

    decoder: {
        readers: [{
            format: "ean_reader",
            config: {
                supplements: [
                    'ean_5_reader', 'ean_2_reader'
                ]
            }
        }]
    }

Beware that the order of the supplements matters, in that the reader stops decoding when the first supplement is found. So if you are interested in EAN-2 and EAN-5 extensions, use the order depicted above.

It's important to mention that, if supplements are supplied, regular EAN-13 codes cannot be read any more with the same reader. If you want to read EAN-13 with and without extensions you have to add another ean_reader reader to the configuration.
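A sketch of a decoder configuration that reads EAN-13 both with and without extensions. Two points are assumptions rather than documented behaviour: that the object form and the plain string form of a reader can be mixed in one array, and that the supplemented reader should come first (chosen here because reader order matters and a plain reader listed first might return the code without its extension).

```javascript
// Sketch: one ean_reader with supplements, plus a plain ean_reader as fallback.
const decoderConfig = {
    readers: [
        {
            format: 'ean_reader',
            config: {
                supplements: ['ean_5_reader', 'ean_2_reader']
            }
        },
        'ean_reader' // assumed fallback for codes without an extension
    ]
};
```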

The locator config is only relevant if the locate flag is set to true. It controls the behavior of the localization-process and needs to be adjusted for each specific use-case. The default settings are simply a combination of values which worked best during development.

Only two properties are relevant for the use in Quagga (halfSample and patchSize) whereas the rest is only needed for development and debugging.

    {
        halfSample: true,
        patchSize: "medium", // x-small, small, medium, large, x-large
        debug: {
            showCanvas: false,
            showPatches: false,
            showFoundPatches: false,
            showSkeleton: false,
            showLabels: false,
            showPatchLabels: false,
            showRemainingPatchLabels: false,
            boxFromPatches: {
                showTransformed: false,
                showTransformedBox: false,
                showBB: false
            }
        }
    }

The halfSample flag tells the locator-process whether it should operate on an image scaled down (half width/height, quarter pixel-count) or not. Turning halfSample on reduces the processing-time significantly and also helps finding a barcode pattern due to implicit smoothing.

It should be turned off in cases where the barcode is really small and the full resolution is needed to find the position. It's recommended to keep it turned on and use a higher resolution video-image if needed.

The second property, patchSize, defines the density of the search-grid. The property accepts strings of the value x-small, small, medium, large and x-large. The patchSize is proportional to the size of the scanned barcodes. If you have really large barcodes which can be read close-up, then the use of large or x-large is recommended. In cases where the barcode is further away from the camera lens (lack of auto-focus, or small barcodes) then it's advised to set the size to small or even x-small. For the latter it's also recommended to increase the resolution in order to find a barcode.

The following example takes an image src as input and prints the result on the console. The decoder is configured to detect Code128 barcodes and enables the locating-mechanism for more robust results.

    Quagga.decodeSingle({
        decoder: {
            readers: ["code_128_reader"] // List of active readers
        },
        locate: true, // try to locate the barcode in the image
        src: '/test/fixtures/code_128/image-001.jpg' // or 'data:image/jpg;base64,' + data
    }, function(result){
        if(result.codeResult) {
            console.log("result", result.codeResult.code);
        } else {
            console.log("not detected");
        }
    });

The following example illustrates the use of QuaggaJS within a node environment. It's almost identical to the browser version with the difference that node does not support web-workers out of the box. Therefore the config property numOfWorkers must be explicitly set to 0.

    var Quagga = require('@ericblade/quagga2').default;

    Quagga.decodeSingle({
        src: "image-abc-123.jpg",
        numOfWorkers: 0, // Needs to be 0 when used within node
        inputStream: {
            size: 800 // restrict input-size to be 800px in width (long-side)
        },
        decoder: {
            readers: ["code_128_reader"] // List of active readers
        },
    }, function(result) {
        if(result.codeResult) {
            console.log("result", result.codeResult.code);
        } else {
            console.log("not detected");
        }
    });

A growing collection of tips & tricks to improve the various aspects of Quagga.

If you're having issues getting a mobile device to run Quagga using Cordova, you might try the code here: Original Repo Issue #94 Comment

    let permissions = cordova.plugins.permissions;
    permissions.checkPermission(permissions.CAMERA, (res) => {
        if (!res.hasPermission) {
            // open() is defined by your own application
            permissions.requestPermission(permissions.CAMERA, open());
        }
    });

Thanks, @chrisrodriguezmbww !

Barcodes too far away from the camera, or a lens too close to the object result in poor recognition rates and Quagga might respond with a lot of false-positives.

Starting in Chrome 59 you can now make use of capabilities and directly control the zoom of the camera. Head over to the web-cam demo and check out the Zoom feature.

You can read more about those capabilities in Let's light a torch and explore MediaStreamTrack's capabilities.

Dark environments usually result in noisy images and therefore mess with the recognition logic.

Since Chrome 59 you can turn on/off the Torch of your device and vastly improve the quality of the images. Head over to the web-cam demo and check out the Torch feature.

To find out more about this feature read on.

Most readers provide an error object that describes the confidence of the reader in its accuracy. There are strategies you can implement in your application to improve what it accepts as valid input from the barcode scanner; these are discussed in this thread.
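One possible strategy, sketched under assumptions: take the median of the `error` values found in `codeResult.decodedCodes` (as shown in the result object earlier in this README) and accept a result only when that median stays below a threshold. The helper names and any threshold value are hypothetical and need tuning for your barcodes and camera.

```javascript
// Hypothetical helper: median of the per-code error values, or null if none exist.
function medianError(codeResult) {
    const errors = (codeResult.decodedCodes || [])
        .map(function (entry) { return entry.error; })
        .filter(function (value) { return typeof value === 'number'; })
        .sort(function (a, b) { return a - b; });
    if (errors.length === 0) {
        return null;
    }
    const mid = Math.floor(errors.length / 2);
    return errors.length % 2 === 1
        ? errors[mid]
        : (errors[mid - 1] + errors[mid]) / 2;
}

// Hypothetical acceptance check: maxMedianError is an application-chosen threshold.
function isConfident(codeResult, maxMedianError) {
    const median = medianError(codeResult);
    return median !== null && median <= maxMedianError;
}
```

A result without any error values is rejected here, which is a conservative choice; an application could just as well decide to accept such results.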

If you choose to explore check-digit validation, you might find barcode-validator a useful library.
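For illustration, the standard EAN-13 check-digit rule can be implemented in a few lines without any library: the first twelve digits are weighted 1, 3, 1, 3, ... and the check digit brings the total up to a multiple of ten. The function name `isValidEan13` is hypothetical and independent of barcode-validator.

```javascript
// Validate an EAN-13 string using the standard weighted checksum.
function isValidEan13(code) {
    if (!/^\d{13}$/.test(code)) {
        return false;
    }
    let sum = 0;
    for (let i = 0; i < 12; i++) {
        const digit = code.charCodeAt(i) - 48; // '0' is char code 48
        sum += (i % 2 === 0) ? digit : digit * 3;
    }
    const expectedCheckDigit = (10 - (sum % 10)) % 10;
    return expectedCheckDigit === code.charCodeAt(12) - 48;
}

console.log(isValidEan13('3574660239843')); // true
```

Rejecting results that fail this check is a cheap way to filter out many false positives for EAN-13 codes.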

Tests are performed with Cypress for browser testing, and Mocha, Chai, and SinonJS for Node.JS testing. (note that Cypress also uses Mocha, Chai, and Sinon, so tests that are not browser specific can be run virtually identically in node without duplication of code)

Coverage reports are generated in the coverage/ folder.

    > npm install
    > npm run test

Using Docker:

    > docker build --tag quagga2/build .
    > docker run -v $(pwd):/quagga2 quagga2/build npm install
    > docker run -v $(pwd):/quagga2 quagga2/build npm run test

or using docker-compose:

    > docker-compose run nodejs npm install
    > docker-compose run nodejs npm run test

We prefer that unit tests be located near the unit being tested -- the src/quagga/transform module, for example, has its test suite located at src/quagga/test/transform.spec.ts. Likewise, the src/locator/barcode_locator test is located at src/locator/test/barcode_locator.spec.ts.

If you have browser or node specific tests, that must be written differently per platform, or do not apply to one platform, then you may add them to src/{filelocation}/test/browser or .../test/node. See also src/analytics/test/browser/result_collector.spec.ts, which contains browser specific code.

If you add a new test file, you should also make sure to import it in either cypress/integration/browser.spec.ts, for browser-specific tests, or cypress/integration/universal.spec.ts, for tests that can be run both in node and in browser. Node.JS testing is performed using the power of file globbing, and will pick up your tests, so long as they conform to the existing test file directory and name patterns.

In case you want to take a deeper dive into the inner workings of Quagga, get to know the debugging capabilities of the current implementation. The various flags exposed through the config object give you the ability to visualize almost every step in the processing. Because of the introduction of the web-workers, and their restriction not to have access to the DOM, the configuration must be explicitly set to config.numOfWorkers = 0 in order to work.

Quagga is not perfect by any means and may produce false positives from time to time. To find out which images produced those false positives, the built-in ResultCollector helps you and me squash bugs in the implementation.

You can easily create a new ResultCollector by calling its create method with a configuration.

    var resultCollector = Quagga.ResultCollector.create({
        capture: true, // keep track of the image producing this result
        capacity: 20,  // maximum number of results to store
        blacklist: [   // list containing codes which should not be recorded
            {code: "3574660239843", format: "ean_13"}],
        filter: function(codeResult) {
            // only store results which match this constraint
            // returns true/false
            // e.g.: return codeResult.format === "ean_13";
            return true;
        }
    });

After creating a ResultCollector you have to attach it to Quagga by calling Quagga.registerResultCollector(resultCollector).

After a test/recording session, you can now print the collected results which do not fit into a certain schema. Calling getResults on the resultCollector returns an Array containing objects with:

    {
        codeResult: {}, // same as in onDetected event
        frame: "data:image/png;base64,iVBOR..." // dataURL of the gray-scaled image
    }

The frame property is an internal representation of the image and therefore only available in gray-scale. The dataURL representation allows easy saving/rendering of the image.

Now, having the frames available on disk, you can load each single image by calling decodeSingle with the same configuration as used during recording. In order to reproduce the exact same result, you have to make sure to turn on the singleChannel flag in the configuration when using decodeSingle.
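A minimal sketch of preparing such a replay, assuming singleChannel lives under inputStream and src is a top-level key, as in the configuration examples in this README. The helper name `buildReplayConfig` is hypothetical; the Quagga call is shown only as a comment.

```javascript
// Hypothetical helper: reuse the recording configuration for a stored frame,
// forcing singleChannel on so the gray-scale frame is read as recorded.
function buildReplayConfig(recordingConfig, frameDataUrl) {
    return Object.assign({}, recordingConfig, {
        src: frameDataUrl,
        inputStream: Object.assign({}, recordingConfig.inputStream, {
            singleChannel: true
        })
    });
}

// Possible usage with results from a ResultCollector (not executed here):
// resultCollector.getResults().forEach(function (entry) {
//     Quagga.decodeSingle(buildReplayConfig(config, entry.frame), function (result) {
//         console.log(result && result.codeResult);
//     });
// });
```

The original configuration object is left untouched, so the same config can still be used for further live recording sessions.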