Paul Streli and Christian Holz

Sensing, Interaction & Perception Lab

Department of Computer Science, ETH Zürich

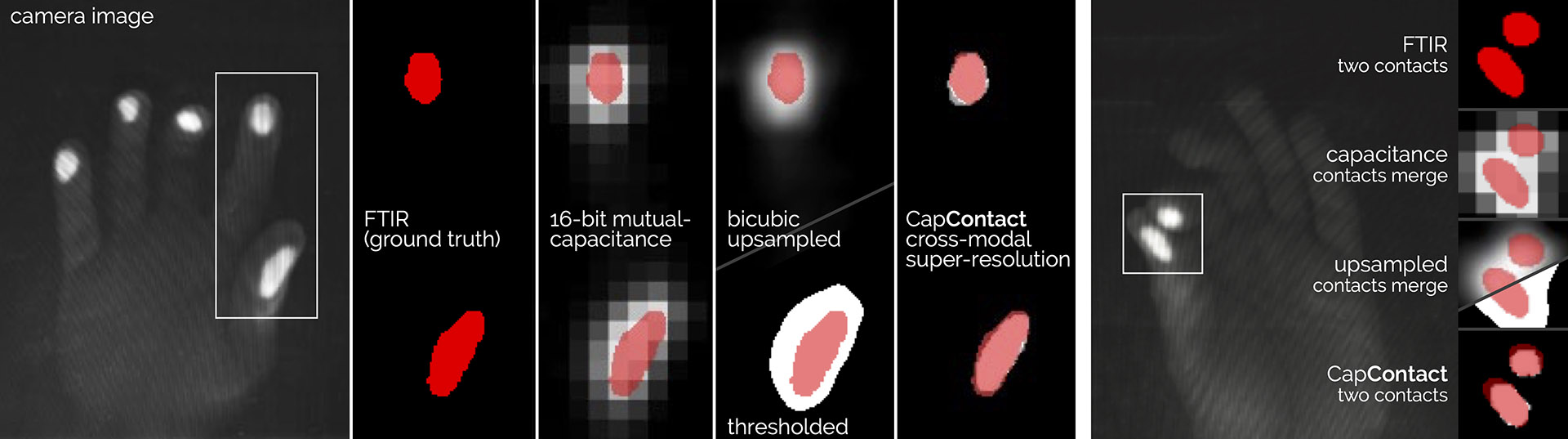

This is the research repository for the ACM CHI 2021 paper "CapContact: Super-resolution Contact Areas from Capacitive Touchscreens." It contains the dataset we collected, which pairs capacitive imprints with high-resolution ground-truth contact masks recorded using frustrated total internal reflection, as well as code for training and running the model.

The recordings stem from a data collection with 10 participants, each recorded in 3 sessions. They are provided both as PNG files (8-bit encoding) and as NumPy arrays (16-bit capacitive images from the mutual-capacitance digitizer, 8-bit contact masks).

The capacitive images come from a Microchip ATMXT2954T2 digitizer with 16-bit precision and a resolution of 72 px × 41 px. Its ITO diamond-gridline sensor covers an area of 345 mm × 195 mm (15.6″ diagonal).

The ground-truth contact masks are derived from a camera-based frustrated total internal reflection (FTIR) setup. Contact images have a resolution of 576 px × 328 px, which amounts to a super-resolution factor of 8× along each axis.
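As a quick sanity check of the numbers above, the following sketch derives the 8× factor, the approximate size of one capacitive node and one FTIR pixel, and the screen diagonal. It assumes that the 72 × 41 nodes evenly tile the 345 mm × 195 mm sensor area; the true electrode spacing of the digitizer may differ slightly.

```python
import math

# Values stated in this README.
CAP_RES = (72, 41)          # capacitive image: columns x rows
FTIR_RES = (576, 328)       # FTIR contact mask: columns x rows
SENSOR_MM = (345.0, 195.0)  # sensor width x height in millimetres

# Super-resolution factor along each axis.
factors = tuple(f // c for f, c in zip(FTIR_RES, CAP_RES))

# Approximate pitch per capacitive node and size per FTIR pixel (mm),
# ASSUMING the nodes/pixels evenly tile the sensor area.
cap_pitch = tuple(s / c for s, c in zip(SENSOR_MM, CAP_RES))
ftir_pitch = tuple(s / f for s, f in zip(SENSOR_MM, FTIR_RES))

# Screen diagonal in inches (1 inch = 25.4 mm).
diagonal_in = math.hypot(*SENSOR_MM) / 25.4

print("factor:", factors)
print("capacitive pitch (mm): %.2f x %.2f" % cap_pitch)
print("FTIR pixel size (mm): %.2f x %.2f" % ftir_pitch)
print("diagonal (in): %.1f" % diagonal_in)
```

With the stated values, this yields a factor of 8 on both axes, a node pitch of roughly 4.8 mm, an FTIR pixel size of roughly 0.6 mm, and a diagonal of about 15.6 inches, consistent with the sensor description.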

| capacitive image | contact mask (FTIR) |
|---|---|
| resolution: 72 × 41 | resolution: 576 × 328 |

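The NumPy arrays can be loaded in Python as follows:

```python
import numpy as np

# Load one sample: a paired capacitive image and FTIR contact mask.
sample = np.load('CapContact-dataset/P01/1/npz/0133.npz')

cap = sample['cap']       # 16-bit capacitive image from the digitizer
contact = sample['ftir']  # 8-bit FTIR contact mask (8x resolution)
```

To run CapContact, start by cloning the Git repository: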

```shell
git clone https://github.com/eth-siplab/CapContact.git
cd CapContact
```

Create a virtual Python environment (Python 3.8) and install the dependencies:

```shell
pip install -r requirements.txt
```

Use the `train.py` script to train a model from scratch:

```shell
python train.py --train_set <PATH TO CSV FILE WITH PATHS TO DATAFILES FOR TRAINING> --val_set <PATH TO CSV FILE WITH PATHS TO DATAFILES FOR VALIDATION> --save_ckpt_path <PATH FOR STORING MODEL CHECKPOINTS>
```
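The training and validation sets are passed as CSV files listing paths to data files. The snippet below is a hypothetical helper for producing such files with a participant-wise split. Its assumptions are not taken from `train.py`: the directory layout `<root>/<participant>/<session>/npz/<frame>.npz` is inferred from the example path above, each CSV row is assumed to hold a single path with no header, and the function name `write_split_csv` and the chosen split are illustrative only. Check the CSV parsing in `train.py` before relying on this format.

```python
import csv
from pathlib import Path


def write_split_csv(dataset_root, participants, out_csv):
    """Write the .npz paths of the given participants to a CSV file.

    HYPOTHETICAL helper. Assumes the layout
    <root>/<participant>/<session>/npz/<frame>.npz and a CSV format of
    one path per row without a header; neither is confirmed by train.py.
    Returns the number of paths written.
    """
    root = Path(dataset_root)
    paths = []
    for participant in participants:
        # Collect all frames from all sessions of this participant.
        paths.extend(sorted((root / participant).glob("*/npz/*.npz")))
    with open(out_csv, "w", newline="") as f:
        writer = csv.writer(f)
        for p in paths:
            writer.writerow([str(p)])
    return len(paths)


if __name__ == "__main__":
    # Illustrative participant-wise split: P01-P08 train, P09-P10 validation.
    train_ids = [f"P{i:02d}" for i in range(1, 9)]
    val_ids = ["P09", "P10"]
    n_train = write_split_csv("CapContact-dataset", train_ids, "train.csv")
    n_val = write_split_csv("CapContact-dataset", val_ids, "val.csv")
    print(f"{n_train} training files, {n_val} validation files")
```

Splitting by participant rather than by frame keeps all frames of a person on one side of the split, so the validation error reflects performance on unseen hands.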

To run inference with a trained model, use:

```shell
python inference.py --load_ckpt_path <PATH TO FOLDER WITH SAVED MODEL CHECKPOINTS> --load_ckpt_epoch <EPOCH WITH LOWEST VALIDATION ERROR> --test_set <PATH TO CSV FILE WITH PATHS TO DATAFILES FOR INFERENCE> --out_path <PATH FOR STORING INFERENCE OUTPUT>
```

Paul Streli and Christian Holz. 2021. CapContact: Super-resolution Contact Areas from Capacitive Touchscreens. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, Article 289, 1–14. DOI:https://doi.org/10.1145/3411764.3445621

```bibtex
@inproceedings{10.1145/3411764.3445621,
  author = {Streli, Paul and Holz, Christian},
  title = {CapContact: Super-Resolution Contact Areas from Capacitive Touchscreens},
  year = {2021},
  isbn = {9781450380966},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3411764.3445621},
  booktitle = {Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems},
  articleno = {289},
  numpages = {14}
}
```

Touch input is predominantly detected using mutual-capacitance sensing, which measures the proximity of close-by objects that change the electric field between the sensor lines. The exponential drop-off in intensities with growing distance enables software to detect touch events, but does not reveal true contact areas. In this paper, we introduce CapContact, a novel method to precisely infer the contact area between the user’s finger and the surface from a single capacitive image. At 8× super-resolution, our convolutional neural network generates refined touch masks from 16-bit capacitive images as input, which can even discriminate adjacent touches that are not distinguishable with existing methods. We trained and evaluated our method using supervised learning on data from 10 participants who performed touch gestures. Our capture apparatus integrates optical touch sensing to obtain ground-truth contact through high-resolution frustrated total internal reflection. We compare our method with a baseline using bicubic upsampling as well as the ground truth from FTIR images. We separately evaluate our method’s performance in discriminating adjacent touches. CapContact successfully separated closely adjacent touch contacts in 494 of 570 cases (87%) compared to the baseline's 43 of 570 cases (8%). Importantly, we demonstrate that our method performs accurately even at half the sensing resolution, i.e., at twice the grid-line pitch across the same surface area, challenging the current industry-wide standard of a ∼4 mm sensing pitch. We conclude this paper with implications for capacitive touch sensing in general and for touch-input accuracy in particular.
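To make the bicubic-upsampling baseline mentioned in the abstract concrete, here is a minimal NumPy sketch of such a baseline. It upsamples a capacitive image 8× with separable cubic convolution (Keys kernel with a = -0.5, half-pixel alignment, replicated edges) and thresholds the result into a binary contact mask, then scores it with intersection over union. The kernel parameter, edge handling, threshold, IoU metric, and all function names are illustrative assumptions, not the paper's exact baseline configuration or evaluation.

```python
import numpy as np


def keys_kernel(x, a=-0.5):
    """Cubic convolution kernel (Keys); a = -0.5 is a common choice."""
    x = np.abs(x)
    out = np.zeros_like(x)
    near = x <= 1
    far = (x > 1) & (x < 2)
    out[near] = (a + 2) * x[near] ** 3 - (a + 3) * x[near] ** 2 + 1
    out[far] = a * x[far] ** 3 - 5 * a * x[far] ** 2 + 8 * a * x[far] - 4 * a
    return out


def bicubic_matrix(n_in, factor):
    """(n_in*factor, n_in) matrix that cubically resamples one axis."""
    n_out = n_in * factor
    # Map output pixel centres back to input coordinates (half-pixel convention).
    src = (np.arange(n_out) + 0.5) / factor - 0.5
    taps = np.floor(src)[:, None] + np.arange(-1, 3)[None, :]  # 4 neighbours
    weights = keys_kernel(src[:, None] - taps)
    idx = np.clip(taps, 0, n_in - 1).astype(int)  # replicate edge samples
    m = np.zeros((n_out, n_in))
    rows = np.repeat(np.arange(n_out), 4)
    np.add.at(m, (rows, idx.ravel()), weights.ravel())
    return m


def bicubic_upsample(img, factor=8):
    """Separable bicubic upsampling of a 2-D array."""
    h, w = img.shape
    return bicubic_matrix(h, factor) @ img.astype(float) @ bicubic_matrix(w, factor).T


def baseline_mask(cap, threshold, factor=8):
    """Hypothetical baseline: upsample, then threshold into a contact mask."""
    return bicubic_upsample(cap, factor) >= threshold


def iou(pred, target):
    """Intersection over union of two boolean masks (1.0 if both are empty)."""
    union = np.logical_or(pred, target).sum()
    return 1.0 if union == 0 else np.logical_and(pred, target).sum() / union
```

For one sample, `iou(baseline_mask(sample['cap'], threshold=T), sample['ftir'] > 0)` would compare the baseline against the FTIR mask, where `T` is a threshold you would need to choose, and assuming that nonzero mask pixels denote contact and that both arrays share the same orientation. Writing the upsampling as two small matrices keeps it exact and dependency-free at the cost of some speed.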

The dataset and code in this repository are for research purposes only. If you plan to use them for commercial purposes to build super-resolution capacitive touchscreens, please contact us. If you are interested in a collaboration with us around this topic, please also contact us.

THE PROGRAM IS DISTRIBUTED IN THE HOPE THAT IT WILL BE USEFUL, BUT WITHOUT ANY WARRANTY. IT IS PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW THE AUTHOR WILL BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS), EVEN IF THE AUTHOR HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.