ECIP-1049: Change the ETC Proof of Work Algorithm to Keccak-256 #13

Comments

|

Work has officially begun on Astor testnet - a reference implementation of an Ethereum Classic Keccak256 testnet. Any help is appreciated. Astor Place Station in New York is one of the first subway stations in the city, and we plan the testnet to be resilient, while also delivering far greater performance by replacing the overly complicated Ethash proof of work algorithm. |

|

"I think the intent of this ECIP is to just respond with an ECIP because the ECIP knowingly isn't trying to solve the problems of the claimed catalyst (51 attack). ETC can change it's underwear in some way but it has to have some type of super power than 'just cause'. I reject." - @stevanlohja #8 (comment) |

|

The first and most crucial question: do we need an algo change, and how could an algo change help us? For me there are two aspects that should be examined at the same time. The first is how secure the new PoW is compared with the old one. As you nicely wrote, a well-examined algo such as Keccak-256 is scientifically reviewed and, as the successor of SHA2, has a high probability of succeeding as SHA2 did with Bitcoin. This can be controversial though, so this article can strengthen the case for Keccak, since it suggests Keccak may be quantum resistant: https://eprint.iacr.org/2016/992.pdf |

|

Thank you for your post @Harriklaw. The plan for this switch is to create a SHA3 testnet first, for miners and hardware manufacturers to use, become comfortable with, and collect data on. Once we start seeing Flyclients, increased block performance, and on-chain smart contracts that verify the chain's proof of work, the mining community will see the tremendous value of this new algorithm and support a change. RE: decentralization. I consider Ethash to already be ASIC'd, and as ETC becomes more valuable it will be less possible to mine it from a GPU anyway. The concern is that right now, Ethash is so poorly documented that only 1 or 2 companies know how to build a profitable ASIC for it. However, with SHA3, it is conceivable that new startups and old players (like Intel, Cisco, etc.) would feel comfortable participating in the mining hardware market, since they know the SHA3 standard is transparent, widely used, and has other uses beyond just cryptocurrency. SHA3 has been determined to be 4x faster in hardware than SHA2, so it is conceivable an entirely new economy can be created around SHA3 that is different from SHA2, similar to how the trucking market has different companies than the consumer car market. |

|

Re: Quantum resistance of hash functions

I do not think we should worry about quantum resistance in this ECIP. |

|

In the process of creating an ETC FlyClient, I have run into major blockers that can be eliminated if 1049 (this ECIP) is adopted. Basically, verification right now cannot be done without some serious computation. The main issue is Ethash requiring the generation of a 16 MB pseudorandom cache. This cache changes about once a week, so verifying the full work requires doing it many times. I have tried many creative solutions to this, but I believe we are stuck at light-client verification taking at least 10 minutes on a phone. By contrast, with this ECIP, plus FlyClient (ECIP-1055), I'm confident full PoW verification can be done in less than 5 seconds. This would open the door to new UX design patterns. |

Not completely accurate:

Unless I am missing something, how is the proof of work supposed to be verified? |

The "lack" of documentation for ethash is pure fallacy. Anyone with basic programming skills can build a running implementation in the language they prefer. ASIC makers never had problems in "understanding" the algo (which also has a widely used open-source implementation here https://github.com/ethereum-mining/ethminer) and there is no "secret" behind it. The problem of ASICs has always been how to overcome the memory hardness barrier, but this has nothing to do with the algo itself; rather, it has to do with how ASICs are built. P.S. Before anyone argues about SHA3 != Keccak256, please recall that Keccak allows different paddings which do not interfere with the cryptographic strength of the function. SHA3 and Keccak256 (in ethash) are the same Keccak with different paddings. |

|

Agree that ethash being undocumented is not the best argument. It is, however, significantly more complex (being a layer atop keccak256). A bigger problem is that it doesn't achieve its intended goal of ASIC resistance, or won't for much longer (as predicted here). Also, it is incredibly easy to attack since there is so much "dormant" hash power. |

|

@zmitton I think we may agree that "ASIC resistance" is not equal to "ASIC proof". "Dormant" hashpower is not an issue imho, and I don't think it is enough to mount an attack given that the network is still predominantly GPU (yet not for long). |

|

(cross posting as i see discussion section has changed): I have a low-level optimization for the ECIP. It would be preferable to use the specific format (mentioned to Alex at the summit)

|

Not sure what you mean here: block header is a hash with fixed width. |

|

larger input size, not output |

To be extremely clear, SHA3 has been in the Ethash algorithm since its birth. The Ethash algorithm is: Keccak256 -> 64 rounds of access to the DAG area -> Keccak256. This proposal introduces nothing new unless (but this is not clearly stated) it is meant to remove the DAG accesses and eventually reduce Keccak rounds from 2 to 1. I have to assume this, as the proponent says Ethash (Dagger Hashimoto) is memory intensive while SHA3 would not be. Under those circumstances, with the new proposed SHA3 algo (which is the wrong definition, as SHA3 is simply a hash function accepting any arbitrary data as input - to define an algo you need to define how that input is obtained), the result would be:

|

|

I will never support a mining algorithm change, regardless of technical merits. I also refuse to spend more time above writing this comment on the matter. I have read all of the above discussion, reviewed each stated benefit and weakness, and thought long and hard about as many of the ramifications of this as possible. While each benefit on its own can be nitpicked over, having its 'value added' objectively disseminated, there are 1.5 reasons that trump all benefit. It's an unfortunate reality of the world and humanity. The main point is that ruling out collusion being a driving force behind any contribution is impossible. This is especially true the closer the project gets to being connected with financial rewards. Every contribution has some level of Collusive Reward Potential. A change that adds a new opcode has a much higher CRP than fixing a documentation typo. Ranking changes with the highest CRP, my top 3 would be:

So, going back to the 1.5 reasons that trump all... 1 - To explain by counterposition, let's assume I was a large supporter of a mining algo change. What's to say I've not been paid by ASIC maker xyz to champion this change, giving them the jump on all other hardware providers? Spoiler: nothing. 0.5 - How can something which is designed to be inefficient be changed in the name of efficiency WITHOUT raising suspicion? Spoiler: it can't. To conclude, this might be a great proposal... for a new blockchain... And I urge you to reconsider this PR, as I believe there are more useful ways of spending development efforts. |

|

I share a similar opinion as @BelfordZ on this subject.

"most of this hashpower was rented.." - what's the source of this assessment? "would encourage the development of a specialized Ethereum Classic mining community" - a new and specialized mining community sounds like we could be talking about a newer and smaller community and probably less security? The risk is too high and the threat isn't exactly there. |

|

Here's my current view on this proposal. This won't solve 51% attacks because they can't really be solved. I do agree that having a simpler/minimalistic and more standard implementation of the hashing algorithm decreases the chances of a bug being found (yes, ethash working for the last 5 years tells us nothing; we've seen critical vulnerabilities discovered in OpenSSL after more than 10 years). On the other hand, we have no guarantee ETC will end up as the network with the most sha3 hash rate. Even if we did in the beginning, it doesn't mean we can sustain the first place. If we fail to do that, it's no different than ethash from this perspective. The last thing I want to mention is that making an instant switch actually opens up an attack vector. Nobody knows what hash rate to expect at the block that switches to sha3. This means that exchanges should not set the confirmation times to 5000 but closer to 50000. This makes ETC unusable and we should avoid such cases if possible. In case the community agrees to switch to sha3, we should consider having a gradual move to sha3 where we set difficulties accordingly so that 10% of blocks are mined with a sha3 proof and 90% with ethash. Over time the percentage shifts in favor of sha3; for example, after half a year it is at 20% for sha3, etc. This makes the hash rate much more predictable compared to the instant switch, and the exchanges would not need to increase the confirmation times to protect themselves because of the unknown hash rate. I don't know whether this is easy to implement. I imagine we could have 2 difficulties (each hashing algorithm having its own), but I'm not that familiar with the actual implementation and the possible bad things this could bring. |

|

Adding some more questions related to how the network will handle this chain split. @bobsummerwill please chime in as I know you're very active in private messages right now.

Interoperability Questions:

|

|

@zmitton I'm sure you can join the working group. What is your Discord screen name? |

I can address this. |

|

The only thing you will accomplish is making ETC China-centered, FPGA- and ASIC-ruled, with enough hashrate available for attacks. |

|

Already heavily centralized, to the point that one could rent some hash-power and attack ETC. Changing from one GPU algorithm to another will not fix this problem; people rented hardware-time, not algo-time. They could easily (if not even more easily) rent enough hardware to attack the new GPU algorithm.

Ironically, the FPGA supply-chain is actually the least centralized out of all hardware (CPUs, ASICs, GPUs). The vast majority of FPGA mining is done with recycled chips from 2-3 years ago; hundreds of vendors in China, Eastern Europe, and Southeast Asia have access, and there's a very healthy market of supply and demand that keeps one party from dominating. The main manufacturers of these chips do not supply the mining market for two key reasons. First off, FPGA performance improvement per tech-node is not 10x or 20x like with ASICs. A 28nm FPGA goes toe-to-toe with a 16nm FPGA. Secondly, COGS for higher tech-node chips is higher than the market sale price of recycled chips.

Given that it will be 1 year before this change hits the network, there's plenty of time for one or two ASIC manufacturers to mass produce an ASIC for ETC. Shuttles (small runs) of 28 - 40 nm chips typically take 5-6 months to complete, after which mass production is a given. The main risk however is the fork, which is an uncertainty that dictates how much of the network follows ETH, and how much follows SHA. ASIC vendors may back out or hesitate given this risk. Would love to be involved with the technical discussions. |

|

ETC is Ethereum Classic, forked from original ETH. It uses Ethash with a DAG file. The DAG file size is within 2 months of reaching 4 GB. At that size a lot of GPUs and ASICs will be obsolete. A 51% attack is less likely to happen when it is expensive. We are so close to making the attack more expensive, and you want to throw that advantage away? It doesn't make sense at all. Better to let it be for the moment and see what happens after all 4 GB GPUs and ASICs become obsolete for ETC. This will happen before they become obsolete for ETH, so you can see what will happen to ETC hashrate and ETH hashrate and the consequences for difficulty, price and miners' reaction, how traders react to the new conditions and so on. |

|

This proposal currently appears incomplete. It proposes changing ethash (a complete consensus engine) for keccak-256, a hashing function, and does not at all go into detail regarding the rest of the consensus engine. As is, blocktimes, monetary policy, hashing function(s), difficulty algo, ghost protocol/uncles, DAG/caches, block validation and sealing are all part of the ethash consensus engine. Disabling or removing ethash disables/removes ALL of this. If the proposal is proposing to replace ethash, it should cover the entire engine, not just one component of it. Otherwise it should be proposed as a modification to the existing engine (ethash). |

Nonsense! Replacing the PoW algorithm (as opposed to misleadingly suggesting its removal) in no way affects all these other buzz-words you throw in willy nilly. |

difficulty algo: https://github.com/etclabscore/core-geth/blob/master/consensus/ethash/consensus.go#L308 buzzwords..... EDIT: whether this proposal introduces a new consensus engine (alongside clique and ethash), or simply modifies the existing engine (ethash), is sorta crucial for implementation. No EVM-based chains have changed consensus engines mid chain. It's uncharted territory requiring massive refactoring of client software, as this behaviour was never intended. A very different scenario to modifying the existing ethash engine. |

|

There is no "massive refactoring" of clients required. The PoW verification code is a localized function in all clients. On the fly PoW algo swaps are not uncharted territory! Why even mention the EVM? The consensus algorithm is unchanged. Difficulty adjusts for constant block time regardless of hash rate. What else? |

|

I'm aware many Bitcoin forks, based on Bitcoin's code, have performed these kinds of changes, but can you show an example of an Ethereum-based chain doing the same? They function quite differently... Regarding refactoring: at least in terms of core-geth (which makes up the majority of the nodes on the network), all logic assumes a single chain and uses a single engine, e.g. https://github.com/etclabscore/core-geth/blob/master/core/block_validator.go#L37 It would be less intrusive as a modification to the existing engine, imo. |

|

The specification is quite clear that this only changes the hashing algorithm. In case you truly are confused and not just trolling: All the other consensus properties remain as-is |

|

I'm referring to implementation, which happens to be key here, given a hard fork is required, and in which the proposal is lacking in much information in terms of the changeover.

A consensus-breaking change such as multiplying the difficulty by 100x cannot be a recommendation. Is it part of the specification or not? EDIT: Such an intervention would result in the chain having an artificial weight. Given that chains compete by weight, this recommendation breaks everyone's beloved Nakamoto consensus, giving the 1049 chain a significant and unfair advantage over the non-1049 chain. Also, why 100x? How was this mysterious figure derived? Given the scope of the change (requires hard fork) I would like to see more information on how the specification is intended to be implemented on a live network that currently uses ethash as the consensus engine. The only reference client provided doesn't handle a changeover, and is based on a client that no longer supports the network. https://github.com/antsankov/parity-ethereum/blob/sha3/astor.json#L5 This would imply we are simply modifying the ethash engine, yet language like "the final Ethash block" would imply it's being replaced. I, as a client dev, am seeking clarity, as the proposal as is leaves me with questions on the intended implementation. EDIT: Also, there are only activation blocks for mainnet. I assume, given this is a PoW-related hard fork, that it is intended to be activated on Mordor first? |

|

Thank you for the clarification, those are valid concerns. |

That's entirely misleading regarding Grin. Grin has dual PoW; one ASIC-friendly (Cuckatoo), and one ASIC-resistant (Cuckaroo) for the first two years only. The latter is tweaked every 6 months, and has never had ASICs developed for it. All Grin ASIC development has been for the ASIC friendly variant. I agree that without frequent tweaking, no PoW can remain ASIC resistant... |

|

The conversation has been moved, and the proposal re-submitted in its exact same form, but without an implementation block number, here: #394. We invite everyone to continue the debate about this proposal on the new thread. |

Recent thread moved here (2020+)

lang: en

ecip: 1049

title: Change the ETC Proof of Work Algorithm to the Keccak-256

author: Alexander Tsankov (alexander.tsankov@colorado.edu)

status: LAST CALL

type: Standards Track

category: core

discussions-to: #13

created: 2019-01-08

license: Apache-2.0

Change the ETC Proof of Work Algorithm to Keccak-256

Abstract

A proposal to replace the current Ethereum Classic proof of work algorithm with EVM-standard Keccak-256 ("ketch-ak")
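To make "EVM-standard Keccak-256" concrete, here is a minimal, unoptimized Python sketch of the Keccak sponge with a 1088-bit rate. It is an illustration only, not the reference client's code. The function names (`keccak_sponge_256`, `keccak256`) are chosen for this example. The only difference between EVM Keccak-256 and NIST SHA3-256 in this sketch is the domain-separation byte used in the padding (`0x01` versus `0x06`), which is why the second mode can be cross-checked against Python's `hashlib.sha3_256`.

```python
import hashlib

LANE_MASK = 0xFFFFFFFFFFFFFFFF


def _rol64(value: int, shift: int) -> int:
    """Rotate a 64-bit lane left by `shift` bits."""
    shift %= 64
    return ((value << shift) | (value >> (64 - shift))) & LANE_MASK


def _keccak_f1600(state: bytearray) -> bytearray:
    """Apply the 24-round Keccak-f[1600] permutation to a 200-byte state."""
    # Load the state as a 5x5 grid of little-endian 64-bit lanes.
    lanes = [[int.from_bytes(state[8 * (x + 5 * y):8 * (x + 5 * y) + 8], "little")
              for y in range(5)] for x in range(5)]
    lfsr = 1  # generates the round constants on the fly
    for _ in range(24):
        # theta: mix each column parity into neighbouring columns
        c = [lanes[x][0] ^ lanes[x][1] ^ lanes[x][2] ^ lanes[x][3] ^ lanes[x][4]
             for x in range(5)]
        d = [c[(x + 4) % 5] ^ _rol64(c[(x + 1) % 5], 1) for x in range(5)]
        lanes = [[lanes[x][y] ^ d[x] for y in range(5)] for x in range(5)]
        # rho and pi: rotate lanes and move them to new positions
        x, y = 1, 0
        current = lanes[x][y]
        for t in range(24):
            x, y = y, (2 * x + 3 * y) % 5
            current, lanes[x][y] = lanes[x][y], _rol64(current, (t + 1) * (t + 2) // 2)
        # chi: non-linear step along each row
        for y in range(5):
            row = [lanes[x][y] for x in range(5)]
            for x in range(5):
                lanes[x][y] = row[x] ^ (~row[(x + 1) % 5] & row[(x + 2) % 5])
        # iota: XOR the round constant into lane (0, 0)
        for j in range(7):
            lfsr = ((lfsr << 1) ^ ((lfsr >> 7) * 0x71)) % 256
            if lfsr & 2:
                lanes[0][0] ^= 1 << ((1 << j) - 1)
    out = bytearray(200)
    for x in range(5):
        for y in range(5):
            out[8 * (x + 5 * y):8 * (x + 5 * y) + 8] = lanes[x][y].to_bytes(8, "little")
    return out


def keccak_sponge_256(data: bytes, domain_suffix: int) -> bytes:
    """256-bit sponge output; `domain_suffix` selects the padding variant."""
    rate = 136  # 1088-bit rate, 512-bit capacity
    state = bytearray(200)
    offset = 0
    while len(data) - offset >= rate:  # absorb full blocks
        for i in range(rate):
            state[i] ^= data[offset + i]
        state = _keccak_f1600(state)
        offset += rate
    tail = data[offset:]
    for i, byte in enumerate(tail):  # absorb the final partial block
        state[i] ^= byte
    state[len(tail)] ^= domain_suffix  # padding start (domain bits + first pad bit)
    state[rate - 1] ^= 0x80            # padding end
    state = _keccak_f1600(state)
    return bytes(state[:32])


def keccak256(data: bytes) -> bytes:
    """Original Keccak padding (0x01), as used by the EVM."""
    return keccak_sponge_256(data, 0x01)


if __name__ == "__main__":
    # Sanity check of the permutation against the standard library's SHA3-256.
    assert keccak_sponge_256(b"ETC", 0x06) == hashlib.sha3_256(b"ETC").digest()
    print(keccak256(b"ETC").hex())
```

Running the script prints the Keccak-256 digest of `"ETC"`, which can be compared by eye with the reference hash quoted in this section.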

The reference hash of string "ETC" in EVM Keccak-256 is:

`49b019f3320b92b2244c14d064de7e7b09dbc4c649e8650e7aa17e5ce7253294`

Implementation Plan

Activation Block: 12,000,000 (approx. 4 months from acceptance - January 2021)

Fallback Activation Block: 12,500,000 (approx. 7 months from acceptance - April 2021)

If not activated by Block 12,500,000 this ECIP is voided and moved to `Rejected`.

We recommend `difficulty` be multiplied 100 times at the first Keccak-256 block compared to the final Ethash block. This is to compensate for the higher performance of Keccak and to prevent a pileup of orphaned blocks at switchover. This is not required for launch.

Motivation

A response to the recent double-spend attacks against Ethereum Classic. Most of this hashpower was rented or came from other chains, specifically Ethereum (ETH). A separate proof of work algorithm would encourage the development of a specialized Ethereum Classic mining community, and blunt the ability for attackers to purchase mercenary hash power on the open-market.

As a secondary benefit, deployed smart contracts and dapps running on chain are currently able to use `keccak256()` in their code. This ECIP could open the possibility of smart contracts being able to evaluate chain state, and simplify second layer (L2) development. We recommend an opcode / precompile be implemented in Solidity to facilitate this.

Ease of use in consumer processors. Keccak-256 is far more efficient per unit of hash than Ethash is. It requires very little memory and power consumption, which aids in deployment on IoT devices.

Rationale

Reason 1: Similarity to Bitcoin

The Bitcoin network currently uses the CPU-intensive SHA256 Algorithm to evaluate blocks. When Ethereum was deployed it used a different algorithm, Dagger-Hashimoto, which eventually became Ethash on 1.0 launch. Dagger-Hashimoto was explicitly designed to be memory-intensive with the goal of ASIC resistance [1]. It has been provably unsuccessful at this goal, with Ethash ASICs currently easily available on the market.

Keccak-256 is the product of decades of research and the winner of a multi-year contest held by NIST that has rigorously verified its robustness and quality as a hashing algorithm. It is one of the only hashing algorithms besides SHA2-256 that is allowed for military and scientific-grade applications, and can provide sufficient hashing entropy for a proof of work system. This algorithm would position Ethereum Classic at an advantage in mission-critical blockchain applications that are required to use provably high-strength algorithms. [2]

A CPU-intensive algorithm like Keccak256 would offer both the uniqueness of a fresh PoW algorithm that has not had ASICs developed against it and, at the same time, room for organic optimization by a dedicated and financially committed miner base, much the way Bitcoin did with its own SHA2 algorithm.

If Ethereum Classic is to succeed as a project, we need to take what we have learned from Bitcoin and move towards CPU-hard PoW algorithms.

Note: Please consider this is from 2008, and the Bitcoin community at that time did not differentiate between node operators and miners. I interpret "network nodes" in this quote to refer to miners, and "server farms of specialized hardware" to refer to mining farms.

Reason 2: Value to Smart Contract Developers

In Solidity, developers have access to the `keccak256()` function, which allows a smart contract to efficiently calculate the hash of a given input. This has been used in a number of interesting projects launched on both Ethereum and Ethereum Classic, most notably a project called 0xBitcoin [4], which the ERC-918 spec was based on.

0xBitcoin is a security-audited [5] dapp that allows users to submit a proof of work hash directly to a smart contract running on the Ethereum blockchain. If the sent hash matches the given requirements, a token reward is trustlessly dispensed to the sender, along with the contract reevaluating difficulty parameters. This project has run successfully for over 10 months, and has minted over 3 million tokens [6].

With the direction that Ethereum Classic is taking (a focus on Layer-2 solutions and cross-chain compatibility), being able to evaluate proof of work on chain will be tremendously valuable to both smart-contract developers and node software writers. This could greatly simplify interoperability.

Implementation

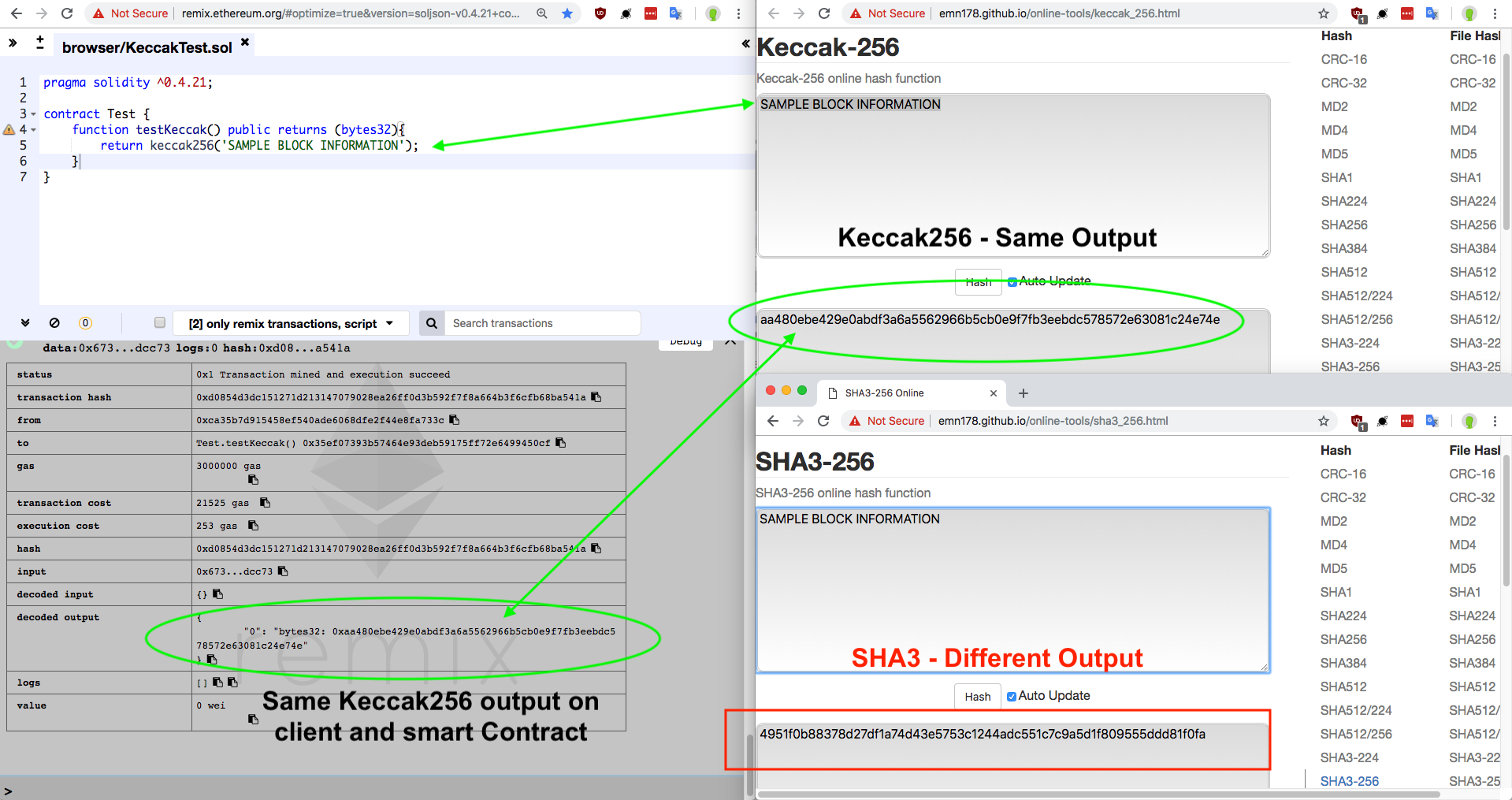

Example of a smart contract trustlessly computing the Keccak-256 hash of a hypothetical block header.
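The check that such a contract, or a light client, would perform can be sketched off-chain in a few lines of Python. This is a rough illustration under stated assumptions, not the specified implementation. The ECIP does not define the header serialization or nonce width, so the `header + 8-byte nonce` layout and all function names here are hypothetical. The comparison `hash <= 2**256 // difficulty` follows the usual Ethereum-style target rule. Because Python's standard library does not ship the original Keccak padding, `hashlib.sha3_256` is used as a stand-in hash and must be swapped for a real Keccak-256 function in any serious experiment. The last helper mirrors the Implementation Plan's recommendation to multiply `difficulty` by 100 at the first Keccak-256 block.

```python
import hashlib
from typing import Callable, Optional

HashFn = Callable[[bytes], bytes]


def stand_in_hash(data: bytes) -> bytes:
    """Placeholder 256-bit hash. NOT Keccak-256: SHA3-256 pads differently."""
    return hashlib.sha3_256(data).digest()


def meets_target(header: bytes, nonce: int, difficulty: int,
                 hash_fn: HashFn = stand_in_hash) -> bool:
    """Return True if hash(header || nonce) is at or below 2**256 // difficulty.

    The header bytes and the 8-byte big-endian nonce layout are assumptions
    made for this sketch, not part of the ECIP text.
    """
    digest = hash_fn(header + nonce.to_bytes(8, "big"))
    return int.from_bytes(digest, "big") <= (1 << 256) // difficulty


def find_nonce(header: bytes, difficulty: int,
               hash_fn: HashFn = stand_in_hash,
               max_tries: int = 1_000_000) -> Optional[int]:
    """Brute-force the smallest nonce that satisfies the target, if any."""
    for nonce in range(max_tries):
        if meets_target(header, nonce, difficulty, hash_fn):
            return nonce
    return None


def first_keccak_difficulty(final_ethash_difficulty: int, factor: int = 100) -> int:
    """Difficulty of the first Keccak-256 block under the recommended bump."""
    return final_ethash_difficulty * factor


if __name__ == "__main__":
    header = b"hypothetical block header"
    nonce = find_nonce(header, difficulty=64)
    print("nonce:", nonce, "valid:", meets_target(header, nonce, 64))
```

Verification is a single hash and one integer comparison, with no cache or DAG to generate first.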

Here is an analysis of Monero's nonce distribution for "cryptonight", an algorithm similar to Ethash that also attempts to be "ASIC-resistant". It is very clear in the picture that, before the hashing algorithm is changed, there is a clear nonce pattern. This is indicative of a major failure in a hashing algorithm, and should illustrate the dangers of disregarding proper cryptographic security. Finding a hashing pattern would be far harder using a proven system like Keccak-256:

Based on analysis of the EVM architecture here there are two main pieces that need to be changed:

A testnet implementing this ECIP is currently live, with more information available at Astor.host

References:

Previous discussion from Pull request
