
DBAPI - running a series of statements that depend on each other (DECLARE, SET, SELECT, ...) #377

Comments


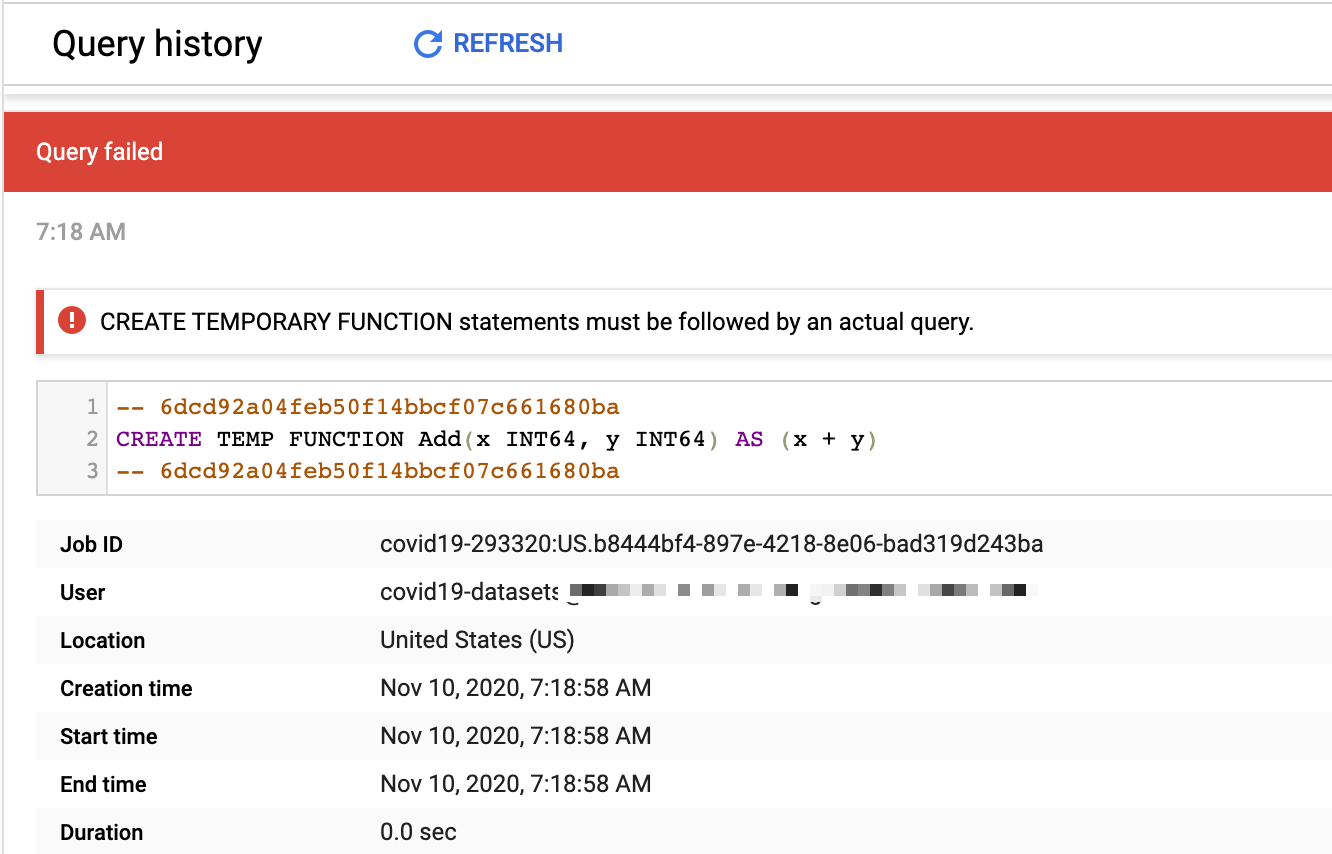

I can confirm the issue. The driver executes the statements in separate sessions: the project history shows them as separate entries because they are executed separately.

If I execute these statements directly from the console, the three statements run in one session.


Since the backend API doesn't really have a concept of a connection or cursor, we'll have to do this bundling client-side. Does the following sound reasonable? "Statements from `Cursor.execute` are batched client-side until either a `fetch*` method or `close` (or del?) is called." Perhaps we add a "DEFERRED" isolation mode to select that behavior and keep the current "IMMEDIATE"-style execution as the default?
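A minimal sketch of what client-side bundling in a "DEFERRED" mode could look like, assuming the wrapped cursor can run a multi-statement script in a single `execute` call and then return the rows of the final statement. `BatchingCursor` and `RecordingCursor` are hypothetical names used only for illustration; they are not part of `google-cloud-bigquery`, and the mode itself is still just a proposal.

```python
class BatchingCursor:
    """Hypothetical wrapper sketching the proposed "DEFERRED" mode.

    Statements passed to execute() are buffered client-side and sent to the
    wrapped DB-API cursor as one multi-statement script only when a fetch*
    method, description, or close() needs the results.
    """

    def __init__(self, cursor):
        self._cursor = cursor  # any DB-API 2.0 style cursor
        self._pending = []     # statements not yet sent to the backend

    def execute(self, operation, parameters=None):
        if parameters is not None:
            # Binding parameters across a batched script is out of scope here.
            raise NotImplementedError("parameters are not supported in this sketch")
        statement = operation.strip().rstrip(";").strip()
        if statement:
            self._pending.append(statement)

    def _flush(self):
        # Send everything buffered so far as one script, in one execute() call.
        if self._pending:
            script = ";\n".join(self._pending) + ";"
            self._pending = []
            self._cursor.execute(script)

    @property
    def description(self):
        self._flush()
        return self._cursor.description

    def fetchone(self):
        self._flush()
        return self._cursor.fetchone()

    def fetchmany(self, *args):
        self._flush()
        return self._cursor.fetchmany(*args)

    def fetchall(self):
        self._flush()
        return self._cursor.fetchall()

    def close(self):
        # Flushing on close mirrors the "until ... close is called" idea.
        self._flush()
        self._cursor.close()


class RecordingCursor:
    """Stand-in cursor that records what would be sent to the backend."""

    def __init__(self):
        self.executed = []

    def execute(self, operation):
        self.executed.append(operation)

    def fetchall(self):
        return [("result of the last statement",)]

    def close(self):
        pass


raw = RecordingCursor()
cur = BatchingCursor(raw)
cur.execute("DECLARE d DATE DEFAULT CURRENT_DATE();")
cur.execute("SELECT d AS TEST")
print(raw.executed)  # [] (nothing sent yet)
rows = cur.fetchall()
print(raw.executed)  # a single combined script
```

Because buffering only happens when a caller opts in, the current "IMMEDIATE"-style behavior stays the default. The trade-off is that the backend only ever sees one script, so per-statement progress is no longer visible to the client.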


Technically, reads in BigQuery use

Having an option to enable client-side batching sounds like a good solution, and one we should be able to leverage in Superset with minimal changes.


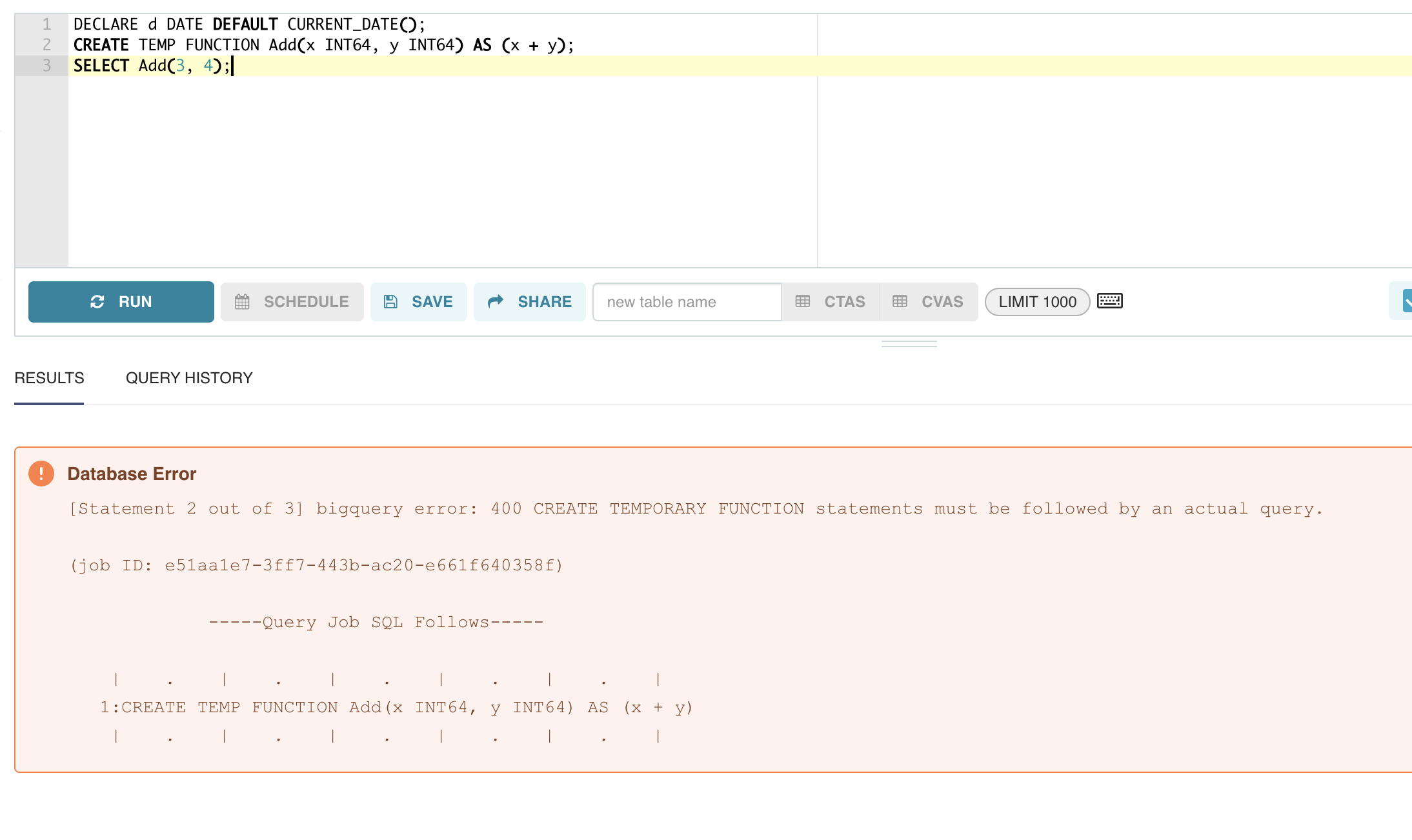

Live debugging the content of this PR, apache/superset#11660, which attempts to only run message as text for convenience:


Also, the simpler script `DECLARE d DATE DEFAULT CURRENT_DATE(); SELECT d as TEST;` does not work either. I get:


Sorry, I was thinking aloud. "Statements from `Cursor.execute` are batched client-side until either a `fetch*` method or `close` (or del?) is called." is a proposal, not the current state. I worry about changing the default behavior, so I'm currently thinking of adding a


Oh, gotcha! I thought it was a statement, not a proposal. Can you think of any workarounds on our side? Otherwise, we're happy to help improve the DBAPI implementation to make this possible. We'd love to help resolve this quickly!


The workaround in BigQuery is to take all statements, join them with

Related: https://docs.python.org/3.8/library/sqlite3.html#sqlite3.Cursor.executescript
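A minimal sketch of the join-into-one-script workaround, assuming statements are joined with `;` plus a newline (the separator is an assumption, since the comment above is cut off). `join_statements` is a hypothetical helper, and it does not parse SQL: it only trims whitespace and trailing semicolons. Running against BigQuery needs credentials, so the sketch executes the script with the standard library's `sqlite3` `executescript`, the analogue linked above; with the BigQuery DB-API you would instead pass the joined string to a single `cursor.execute` call.

```python
import sqlite3


def join_statements(statements):
    """Join individual SQL statements into one multi-statement script.

    Each statement is stripped of surrounding whitespace and trailing
    semicolons; empty statements are dropped. The rest are joined with
    ";\\n" and terminated with ";". Returns "" if nothing remains.
    """
    parts = [s.strip().rstrip(";").strip() for s in statements]
    parts = [p for p in parts if p]
    return ";\n".join(parts) + ";" if parts else ""


statements = [
    "CREATE TABLE t (x INTEGER);",
    "INSERT INTO t VALUES (1)",
    "INSERT INTO t VALUES (2);",
]
script = join_statements(statements)
print(script)

conn = sqlite3.connect(":memory:")
conn.executescript(script)  # every statement runs in one call on one connection
total = conn.execute("SELECT SUM(x) FROM t").fetchone()[0]
print(total)  # 3
```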


I tried running a multi-statement script as one large string with statements separated by `;\n`, and found that after the script executes, `cursor.fetchall()` returns nothing and `cursor.description` raises this:

We're in uncharted territory here as far as DBAPI is concerned, but I was expecting (hoping is probably the right word here) the state from the last statement to persist in the cursor, since I didn't close the cursor at that point.


Digging deeper, the issue is on these lines: https://github.com/googleapis/python-bigquery/blob/master/google/cloud/bigquery/dbapi/cursor.py#L227-L229. In this context, I can open a PR that would set `is_dml` to


Just for giggles: #386


Sent #387, which fixes the scripting issue and adds a system test.

The `is_dml` logic is no longer needed now that we have moved to `getQueryResults` instead of `tabledata.list` (#375). Previously, the destination table of a DML query could be a non-null value that was either unreadable or returned nonsense for DML (and some DDL) queries.

Towards #377 🦕


Thankful for amazing maintainers(!) How often do you all push to PyPI? I think we can work with this fix, but there are some downsides: one is that Superset can't keep track of the statement currently executing; another is that we need BigQuery-specific logic to handle this in our codebase. Ideally, this DBAPI would conform more closely to the standard.


Now that sessions are available, I believe we can implement a feature in the DB-API connector that creates a new session and persists it for the lifetime of a `Connection` object. #971

See the original issue here: googleapis/python-bigquery-sqlalchemy#74

I'm a committer on Apache Superset, and we generally integrate fairly well with BigQuery using the DBAPI driver provided here. However, we're having an issue running a chain of statements that depend on each other in a common session. I'm using a simple connection and cursor behind the scenes.

It appears that the statements are not chained and the session/connection isn't persisted. Any clue on how to solve this?

Other DBAPI implementations allow this type of chaining, but the same logic does not work properly with this driver.

Here's a simple script exemplifying this:

Environment details

- pip version: 20.2.4
- `google-cloud-bigquery` version (`pip show google-cloud-bigquery`): 1.28.0

Steps to reproduce

code:
