-

Notifications

You must be signed in to change notification settings - Fork 29

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Signed/Bundled HTTP Exchanges and WebPackage #121

Comments

|

This is pretty crude, but these are the files I used in my demo: https://github.com/jimpick/signed-exchange-test Probably the only thing really re-usable in that is the service worker (sw-ipfs.js) which intercepts requests and loads the associated .sxg file from the ipfs.io gateway. |

|

I doubt I'll have time to dive into this before I go on vacation but this is awesome! |

|

Instructions on how to get into the origin trial here: |

IIUC we could look into enabling this on our HTTP Gateway, |

|

A new talk just landed: The focus is on UX, but I gathered highlights related to Web Packaging:

ps. Our Origin Trial setup is tracked in ipfs/infra#453 |

PSA:

|

|

This is so exciting! I'm going to experiment a bit with this later... |

|

I had a good meeting with the Google Chrome HTTP Signed Exchanges team in Tokyo today. I prepared a little demo: It only works with Chrome Canary (Chrome Beta doesn't seem to work). The top-level "bootstrap" website with the original index.html and service worker is published to IPFS (using IPNS). That's given it's own SSL certificate using https://cloudflare-ipfs.com/ Then the web content is processed with gen-signedexchange to generate a bunch of .sxg "HTTP Signed Exchange" files, which are published to IPFS (not using IPNS), and finally a 'ipfs-hash.txt' file with the hash of the content is written to the bootstrap site. The service worker looks at that file, and for any file that is being fetched, it will generate a redirect to the published content .sxg files hosted on the public ipfs.io gateway that Protocol Labs runs (which has the correct HTTP headers for the origin trial). It's a little hard to explain to somebody unfamiliar with all the parts involved. Now that the demo is actually working, I'd love to do a proper blog post for it! Source code for the demo: https://github.com/jimpick/signed-exchange-test/tree/ipfs.v6z.me-origin-trial (sorry, no documentation yet ... I only get it working yesterday) |

|

Spec change opening ability to load SXG from locally running IPFS node: WICG/webpackage#352 |

|

Heads up that there's an AMP conf coming up in Tokyo that will likely have relevant discussion https://www.ampproject.org/amp-conf/ |

|

PSA: Origin Trial ends on Mar 6, 2019 – I will extended our token till the trial end. |

|

Discussion about Service Worker and subresource SXG prefetching integration: |

|

Cloudflare announced seamless generation of SXG for existing websites as "AMP Real URL".

This older blogpost contains details on how signed content can be announced to the crawler. |

|

I tried copying an .sxg file found "in the wild" to IPFS and loading it through the gateway: https://ipfs.io/ipfs/QmcMMFKpj4WtnfDinDh6vuTU5ViQD5ncVtRvTzXWYEyo5w/test1.sxg In Chrome DevTools, the following error was displayed: Looks like we might need to tweak the header on the gateway. |

|

Good news, the gateway looks like it's updated and .sxg files are loading. :-) https://ipfs.io/ipfs/QmWgYzCJuNupFeX1RLv27srqU1t7z6HJamUMeR9rm1zF2w Edit: Actually, not yet ... I checked the headers, and they aren't updated yet. I think that's using a fallback. This stuff is confusing. |

|

Found a video of an IETF presentation on Web Packaging from this March https://youtu.be/woLbXaX0Gf4?t=700 Interesting that it is being presented to the IETF as a peer-to-peer technology! Also, listen to the questions to hear @ekr from Mozilla express his strong "considered harmful" position in person. |

|

@jimpick Yep, the original use case was around peer-to-peer content distribution in places where mobile data is very expensive or unreliable. We only later realized we could think of the AMP cache as a "peer". The big unsolved problem for our peer-to-peer model is the way clients discover packages. Doing it naively in the client gives the cache a full view of the client's browsing history, which isn't acceptable. When the peer is on the internet (e.g. AMP), the source of the link to the resource (e.g. Google Search) can provide discovery without leaking any more information. Maybe IPNS can be a more general way to discover packages cached nearby, if its privacy properties are right? |

|

@jyasskin You are absolutely correct in saying that naively sharing peer-to-peer will expose users privacy. Full privacy is a tough problem to design for. We're actively working on enhancements to IPNS and the DHT to improve performance. And there is a lot of ongoing work in libp2p for private networks and relaying. I think it would be neat if it would be possible to be able to restrict lookups so that content is only ever retrieved via privacy preserving mechanisms, and that content can be shared or re-shared without danger. There's often going to be a tradeoff in privacy vs. performance. To make things worse, many politicians, intelligence agencies, police forces and even corporate IT departments are opposed to true anonymity, so it gets into really tricky legal territory. For non-client applications, there are many datasets which are essentially public and for which most people would prefer performance since the privacy concerns aren't too much of a problem. That's one reason we're primarily focused on package managers and performance this year. |

|

Here's my take on the WebPackage controversy, which is fundamentally a rethink about SSL certificates and what they represent to the reader:

Right now, with AMP and HTTP Signed Exchanges, it is now possible for the "cached" exchanges to not transported directly from the Washington Post, but they are instead coming from Google or Cloudflare's CDN, which is not going to be spying on folks (they claim). Google is using their clout to provide what they call "privacy-preserving pre-fetch". Of course, if you are wearing a tinfoil hat, and you distrust Google, or the government, you might not think your privacy was preserved if Google's CDN is seeing all the documents being fetched. The problem with peer-to-peer distribution and the experiments we (and others, such as @pfrazee at Beaker) are doing is that we are opening up the distribution to everybody, and the reader privacy problems get very tricky. So displaying the content with a lock saying it has come from "The Washington Post" might be true, but it's also quite possible that by retrieving the content via a peer-to-peer mechanism, there was a digital trail left, and reader privacy has been compromised ... so the "lock" displayed in the UI is misleading people to think that nobody can spy on them. There has been much discussion about the reader privacy problem in the Dat community:

Clearly, there are ways to improve reader privacy on peer-to-peer networks. For example, access could be made using Tor. Or via an encrypted link to a place that the reader trusts. Content could be distributed via broadcast (eg. satellite) and multicast mechanisms, so there are no direct accesses. Not accessing things directly but via trusted intermediaries and privacy-preserving peer-to-peer networks could actually be a privacy improvement. Peer-to-peer distribution has clear advantages when it comes to censorship resistance. I wonder if peer-to-peer web browsers for the distributed web need more than one UI element to display trust and privacy information? Two cases:

Is this an area that could benefit from UX research? |

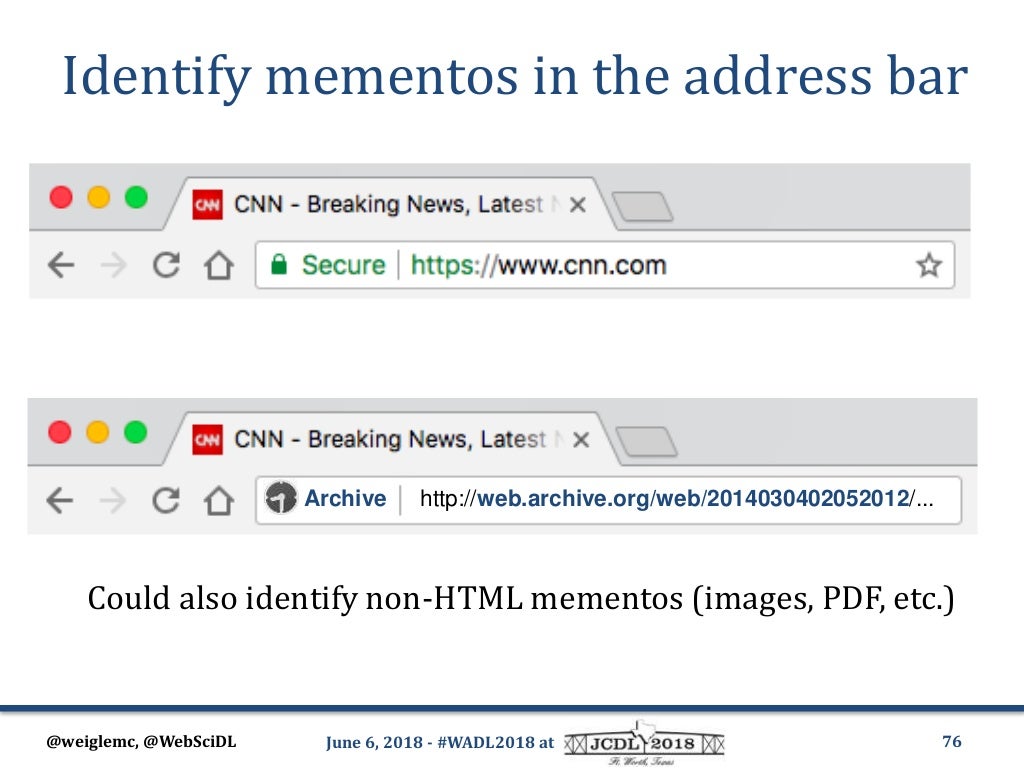

UX issues around "HTTPS spoofing"To expand on @jimpick's take, I believe contributing factor to the controversy is the UX of how SXG@v=b3 got implemented in Google Chrome. Looking from sidelines it may feel rushed and AMP-driven. To be specific, Google Chome makes SXG indistinguishable from regular HTTPS, which breaks basic assumptions around how users understand the green padlock in location bar (aka "nobody but me and the Origin server can see the payload"). UX of regular HTTPS is reused as-is, pretending that end-to-end HTTPS transport was used with Origin from location bar, which is not true. Browser should be the user agent, and as one it should never lie or break this type of trust. To me it feels like UX problem. There should be a different presentation in location bar than re-using the green padlock from HTTPS. Browser should be honest that WebPackage was used and show who was involved in rendering the page: who is the Publisher, when package was created, who was involved in Distributing the content etc. Need for Demonstrating Archival Use CasesI believe archiving is a missed opportunity to make a case for WebPackage and figure out technical details and UX in browser without going into politics of PKI and HTTPS spoofing. Would love to see more happening around this use case. Browsers could add support for saving a website to a WebPackage bundle and loading it from it while making it obvious to the user that they are looking at an archived snapshot, with all details at hand. This would add real value to the web by empowering individuals and institutions (Internet Archive, Wikipedia) with tools to fight the link rot and censorship. Imagine all Wikipedia References as reproducible snapshots of articles that could be downloaded, shared and read offline. Worth looking at is the potential overlap with W3C's Packaged Web Publications: https://github.com/w3c/pwpub Gateway Update: ipfs.io supports v=b3Good news: we've updated HTTP headers at our IPFS Gateway. Test in Chrome 74+: index.html.sxg :) |

I think problem is far greater than UX, that is users are not in control - If all the pages visited through chrome are served through AMP regardless of icon in the location bar user privacy is compromised. |

|

I found some more videos (thanks YouTube) that go over WebPackaging and Signed HTTP Exchanges in quite a bit of detail. BlinkOn 9 (April 2018): https://www.youtube.com/watch?v=rcJ9BLymVQE BlinkOn 10 (April 2019): https://www.youtube.com/watch?v=iTYr5qVbHdo |

|

Mozilla published 15 page paper which reaffirms their position: mozilla/standards-positions#29 (comment)

Quick takeaways:

|

We have an accepted position paper entitled, "Supporting Web Archiving via Web Packaging" (preprint version) in IAB's ESCAPE 2019 Workshop and hope to take this conversation there. Last year in Web Archiving and Digital Libraries (WADL) 2018 Workshop we illustrated a mock up of UI/UX element that browsers can show in the address bar to acknowledge users about the state of a resource being archived (i.e., a memento). The icon can reveal a lot more context and metadata about the memento when clicked/tapped on (see slide #76 of the keynote talk). |

|

I am back from the ESCAPE Workshop last week. I learned a lot and conveyed the message/issues/needs related to web archiving which was taken very well. I can recap my slides on Supporting Web Archiving via Web Packaging in one of the upcoming IPFS Weekly Calls if there is any interest in it. |

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

Updates in support for creating Bundled HTTP Exchanges from a list of URL: |

Bundled HTTP Exchanges AKA Web Bundles

|

|

DSHR's take on Web Packaging for Web Archiving. |

|

Thank you for sharing! Interesting part: Content-Based Origin Definition:

If support for something like this lands in major browsers, it could be alternative way to introduce content-addressed origins, something like:

|

|

FYI, we're working on defining a way to name resources inside packages/bundles, at https://mailarchive.ietf.org/arch/msg/wpack/8fFVJv0AIksODEha8iyJrVDvOek/ (and other threads on that mailing list). I favor a new URL scheme, but it's possible it'll be something else. These names would be "before" any signing or other authenticity information is used. Part of the most recent proposal is to simplify the URL scheme by embedding an assumption that the package is addressable using an |

|

Thank you for the ping @jyasskin, replied in the thread, copy below. tl;dr we believe URIs should be explicit, not implicit, and assuming https will be the answer to all use cases is not future-proof enough Click to show copy of my reply

|

|

Related efforts at https://github.com/littledan/resource-bundles:

|

|

Potentially related: Intent to Prototype: Isolated Web Apps mentions "Web Packaging" and "Web Bundles". |

Shipped in Chromium Dev Channel, see https://github.com/GoogleChromeLabs/telnet-client. |

|

@lidel FWIW This https://github.com/guest271314/webbundle is a repository that uses the minimal dependencies (I've found Rollup to use less dependencies than Webpack) to create a Signed Web Bundle for source for an Isolated Web App. The authors wrote the code using |

Background

Google is championing work on "Web Packaging" to solve MITM (aka "misattribution problem") of the AMP Project. Signed HTTP Exchanges (SXG) decouple the origin of the content from who distributes it. Content can be published on the web, without relying on a specific server, connection, or hosting service, which is highly relevant for IPFS, as it is great at distributing immutable bundles of data.

2018: Signed HTTP Exchanges

A longer overview can be found at developers.google.com: Signed HTTP Exchanges:

The Google Chrome team is working towards making this an IETF spec and have a prototype built for Chrome with an origin trial starting with Chrome 71.

It is worth noting that this is still a very PoC spec and current version of SXGs is considered harmful by Mozilla and the spec needs further work.

2019 Q3: Bundled HTTP Exchanges, AKA Web Bundles

Web Bundles, more formally known as Bundled HTTP Exchanges, are part of the Web Packaging proposal.

To be precise, a Web Bundle is a CBOR file with a

.wbnextension (by convention) which packages HTTP resources into a binary format, and is served with theapplication/webbundleMIME type.More at https://web.dev/web-bundles/

Potential IPFS Use Cases

How does this fit in with P2P distribution?

Is the future of web publishing signed+versioned bundles over IPFS?

IPFS as transport for SXG / Web Bundles

In simpler words: a bundle with entire website (or parts of it) can be loaded over IPFS and browser supporting signed exchange will validate signatures and render content with original domain and green lock in the location bar. Click below to watch 1 minute demo:

This means one could set up DNSLink pointing at Signed HTTP Exchange and users of IPFS Companion would load cached websites over IPFS while keeping "original" URLs in location bar

Alternative way to use this, would be to create Service Worker orchestration that loads website via SXG snapshot fetched from IPFS as means of failover/workaround for DDoS or censorship scenarios. (See initial experimentation in Signed/Bundled HTTP Exchanges and WebPackage #121 (comment))

Archival Use Cases (Web Bundles)

Bundled Exchange file format could provide standardized means of creating future-proof website snapshots

? (add more ipfs-specific uses in comments below!)

Learning Materials

WebPackage 101

Fixing AMP URLs with Web Packaging (20min primer on Web Packaging)

Web Packaging Format Explainer

Use Cases and Requirements for Web Packages

Known Problems and Concerns

References

Web Packaging Primer

Additional Resources

Signed HTTP Exchanges from web-platform-tests/wpt/signed-exchange: QmVnnXjwXyEKhnrC1L7wegepUum2zN4JZUgtvA7DYtj4rG

cc @jimpick @mikeal

The text was updated successfully, but these errors were encountered: