v4.0.1: Soft Actor-Critic

This release adds a new algorithm: Soft Actor-Critic (SAC).

Soft Actor-Critic

- Implement the original paper: "Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor" https://arxiv.org/abs/1801.01290 #398

- Implement the improvements from the follow-up SAC paper: "Soft Actor-Critic Algorithms and Applications" https://arxiv.org/abs/1812.05905 #399

- Extend SAC to work directly in discrete environments using a custom `GumbelSoftmax` distribution
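The core pieces behind these changes can be sketched in plain Python. This is a minimal illustration under stated assumptions, not SLM-Lab's implementation. The function names `gumbel_softmax_sample`, `soft_q_target` and `alpha_loss` are hypothetical. The target uses the min over two Q-functions minus an entropy term, and the temperature objective is the automatic-tuning loss from the follow-up paper, written in the commonly used log-parameterized form. `target_entropy` is chosen by the caller (a common choice for continuous actions is `-dim(A)`).

```python
import math
import random
from typing import List, Sequence


def gumbel_softmax_sample(logits: Sequence[float], temperature: float,
                          rng: random.Random) -> List[float]:
    """Relaxed one-hot sample from the categorical distribution given by `logits`.

    Hypothetical helper: lower `temperature` pushes the sample toward a hard one-hot vector.
    """
    noisy = []
    for logit in logits:
        u = rng.random()                      # u ~ Uniform[0, 1)
        u = min(max(u, 1e-10), 1.0 - 1e-10)   # keep both logs finite
        g = -math.log(-math.log(u))           # Gumbel(0, 1) noise via inverse transform
        noisy.append((logit + g) / temperature)
    m = max(noisy)                            # subtract the max for a numerically stable softmax
    exps = [math.exp(x - m) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def soft_q_target(reward: float, done: float, next_q1: float, next_q2: float,
                  next_log_prob: float, alpha: float, gamma: float = 0.99) -> float:
    """Soft Bellman target: r + gamma * (1 - done) * (min(Q1', Q2') - alpha * log pi(a'|s'))."""
    next_v = min(next_q1, next_q2) - alpha * next_log_prob
    return reward + gamma * (1.0 - done) * next_v


def alpha_loss(log_alpha: float, log_probs: Sequence[float], target_entropy: float) -> float:
    """Temperature loss: -mean(log_alpha * (log pi(a|s) + target_entropy)).

    In an autodiff framework the bracketed term is treated as a constant, so only
    log_alpha receives a gradient. When the policy entropy (-log pi) drops below
    target_entropy, minimizing this loss increases alpha.
    """
    return -sum(log_alpha * (lp + target_entropy) for lp in log_probs) / len(log_probs)


if __name__ == "__main__":
    rng = random.Random(0)
    print(gumbel_softmax_sample([1.0, 2.0, 0.5], temperature=0.5, rng=rng))
    print(soft_q_target(1.0, 0.0, 10.0, 9.0, -1.0, alpha=0.2))  # ≈ 10.108
```

For example, `soft_q_target(1.0, 0.0, 10.0, 9.0, -1.0, alpha=0.2)` takes the smaller next-state Q-value (9.0), adds the entropy bonus 0.2 × 1.0, discounts by 0.99 and adds the reward, giving about 10.108. Taking the minimum of the two Q estimates is what counters overestimation bias in the target.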

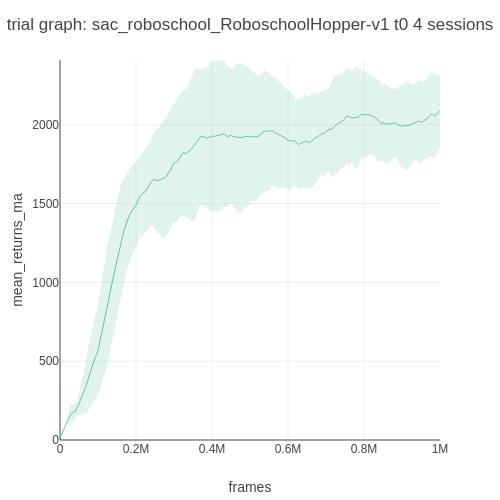

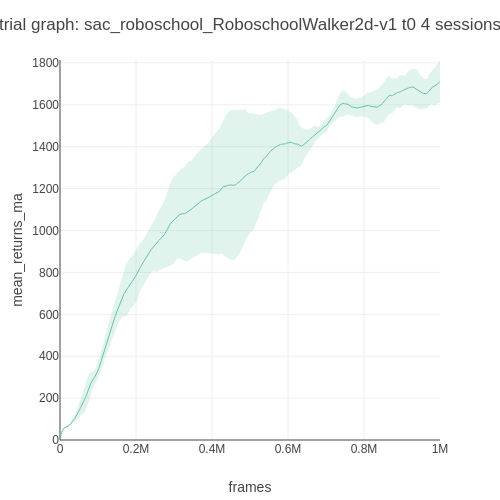

Roboschool (continuous control) Benchmark

Note that the Roboschool reward scales are different from MuJoCo's.

| Env. \ Alg. | SAC |
|---|---|
| RoboschoolAnt | 2451.55 |
| RoboschoolHalfCheetah | 2004.27 |
| RoboschoolHopper | 2090.52 |
| RoboschoolWalker2d | 1711.92 |

LunarLander (discrete control) Benchmark

Figure: LunarLander SAC results (panels: trial graph, moving average).