Enable ability to resize lora dim based off sv ratios #243

Any comparisons?

Sure. I grabbed the Arcane, Amber, and Makima LoRAs from CivitAI, since they are ranked as popular, and processed them with an sv_ratio of 5 (you can likely be more aggressive if you want). I'm listing the file size reduction, since dim is dynamic in the new LoRAs. The prompt was mostly just copied from a preview image.

Thank you for this! It looks good! The extension needs to be updated first. The modification is not very difficult, and I will make the change as soon as I have time. I'd like to support variable alpha and Conv2d at the same time.

I was just about to mention Conv2d, nice! Looking forward to testing DreamBooth extraction after Conv2d is implemented. It should be way more accurate.

Choosing K by a percentile of the cumulative sum (cumsum) instead of using an SV threshold, then using the Frobenius norm as a precision error check, may be a better way of doing it. The LoCon author's comment about this, just FYI:

Thanks for the suggestion. It is easy to implement resizing based on any of these methods (cumulative sum, Frobenius norm, or sv ratio), so I went ahead and added them as options. I think they all have their strengths and weaknesses, though, and I don't necessarily agree that cumulative sum or Frobenius norm is strictly better.
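For reference, a minimal plain-Python sketch of the two alternative criteria, assuming the singular values `s` of a layer are already sorted in descending order (as SVD returns them). The function names `rank_by_cumulative` and `rank_by_fro` are hypothetical, not the script's actual helpers: the first keeps the smallest rank whose singular values reach a target fraction of their total sum, the second the smallest rank whose truncation keeps a target fraction of the layer's Frobenius norm.

```python
def rank_by_cumulative(s, target):
    """Smallest k whose top-k singular values reach `target` of sum(s)."""
    # s is assumed sorted in descending order, as SVD returns it.
    total = sum(s)
    running = 0.0
    for k, value in enumerate(s, start=1):
        running += value
        if running >= target * total:
            return k
    return len(s)


def rank_by_fro(s, target):
    """Smallest k whose truncation keeps `target` of the Frobenius norm."""
    # ||W_k||_F / ||W||_F = sqrt(sum(s[:k]**2) / sum(s**2)); compare squares
    # to avoid the square roots.
    total_sq = sum(v * v for v in s)
    running_sq = 0.0
    for k, value in enumerate(s, start=1):
        running_sq += value * value
        if running_sq >= target * target * total_sq:
            return k
    return len(s)


s = [5.0, 2.0, 1.0, 0.4, 0.1]
print(rank_by_cumulative(s, 0.9))  # 3
print(rank_by_fro(s, 0.95))        # 2
print(rank_by_fro(s, 0.99))        # 3
```

The same target number means different things under each method, so parameter values are not directly comparable across them.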

What value ranges does each of those operate in? Especially cumulative sum, since, as you say, the values are unintuitive.

I discovered an interesting thing: clamping is somewhat degrading the output. To my surprise, changing the

Original rank 256 model:

Resized with sv 5, clamp 0.99:

Resized with sv 5, clamp 1:

The file size reduction for both was the same, from 295 MB to 19.4 MB, but the output of clamp 1 is much closer to the original. Perhaps the clamp value needs to be exposed as a parameter?
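The clamp in question can be sketched in NumPy. Assumptions: this version clips U and Vh symmetrically at the q-quantile of their absolute entries, and `quantile_clamp` is a hypothetical helper; the original extraction code may compute the quantile slightly differently. It illustrates why a clamp value of 1 behaves like no clamp at all, while 0.99 clips the largest ~1% of entries and perturbs the reconstructed weight.

```python
import numpy as np


def quantile_clamp(U, Vh, q):
    """Hypothetical helper: clip U and Vh to +/- the q-quantile of |entries|."""
    dist = np.abs(np.concatenate([U.ravel(), Vh.ravel()]))
    hi = np.quantile(dist, q)
    return np.clip(U, -hi, hi), np.clip(Vh, -hi, hi)


rng = np.random.default_rng(0)
W = rng.standard_normal((64, 64))
U, S, Vh = np.linalg.svd(W)
r = 8
U_r, S_r, Vh_r = U[:, :r], S[:r], Vh[:r, :]
reference = (U_r * S_r) @ Vh_r  # rank-8 approximation without clamping

for q in (0.99, 1.0):
    Uc, Vc = quantile_clamp(U_r, Vh_r, q)
    clipped = int(np.sum(Uc != U_r) + np.sum(Vc != Vh_r))
    extra_err = np.linalg.norm((Uc * S_r) @ Vc - reference)
    print(f"q={q}: {clipped} entries clipped, extra error {extra_err:.4f}")
```

With q = 1 the bound equals the largest absolute entry, so nothing is clipped.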

|

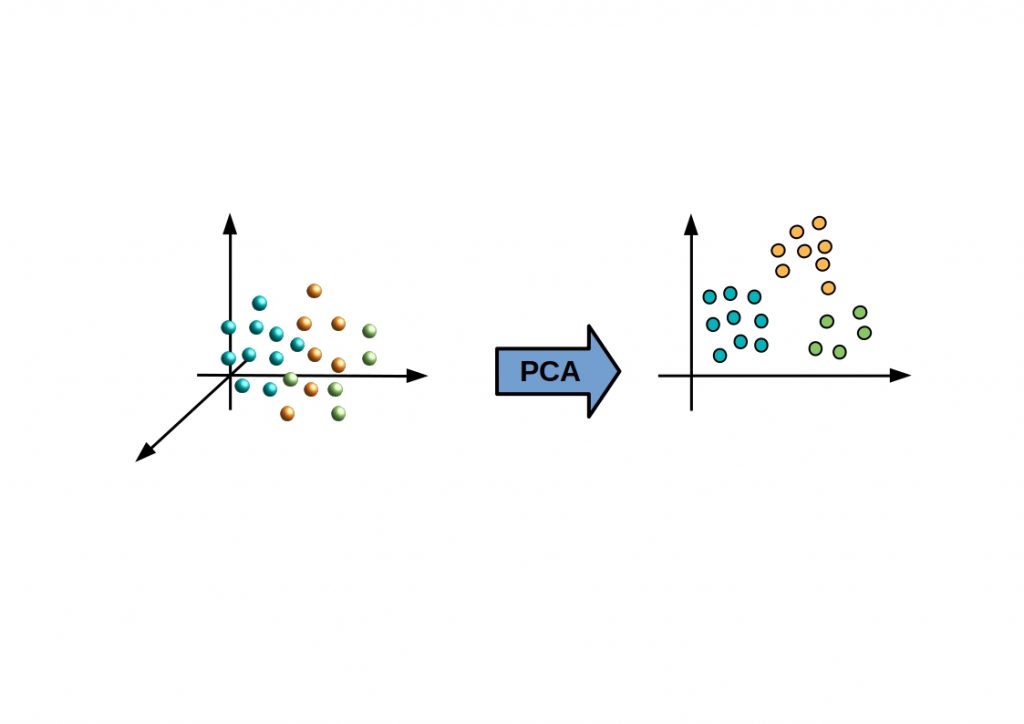

@bmaltais Good observation. I actually think clamping may not be required at all, since SVD should always return unit-norm vectors in U and Vh, but I wasn't sure whether some unique exceptions in the algorithm used in torch caused problems that warranted its inclusion in the original DreamBooth extraction code. It may be best to just remove the quantile/clamp processing entirely. @TingTingin As a brief explanation, it is somewhat similar to the theory behind PCA. As far as valid ranges,

You can use the --verbose flag and compare the numbers. I'd recommend doing some iteration to see what gives the best results for whichever LoRA you try to reduce, since results vary on a case-by-case basis.
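A NumPy sketch of these points on a synthetic matrix (a strong rank-16 part plus small noise, standing in for a LoRA delta; nothing here comes from a real model). The columns of U and rows of Vh come back unit-norm, and, PCA-style, S squared gives each direction's share of the squared Frobenius norm. Sweeping a few sv_ratio values and comparing the resulting rank with the retained Frobenius fraction is the kind of iteration suggested above; the sweep values are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
# Synthetic stand-in for one layer's delta: strong rank-16 part plus small noise.
delta_w = (
    rng.standard_normal((320, 16)) @ rng.standard_normal((16, 768))
    + 0.05 * rng.standard_normal((320, 768))
)
U, S, Vh = np.linalg.svd(delta_w, full_matrices=False)

# Columns of U and rows of Vh are orthonormal, so each has unit L2 norm.
print(np.allclose(np.linalg.norm(U, axis=0), 1.0))   # True
print(np.allclose(np.linalg.norm(Vh, axis=1), 1.0))  # True

# PCA-style view: S**2 / sum(S**2) is each direction's share of ||delta_w||_F**2.
energy = S**2 / np.sum(S**2)
ranks = {}
for ratio in (2, 5, 10, 50):
    rank = max(1, int(np.sum(S >= S[0] / ratio)))
    ranks[ratio] = rank
    kept = np.sqrt(energy[:rank].sum())
    print(f"sv_ratio={ratio:>2}: rank {rank:>3}, Frobenius retained {kept:.4f}")
```

Past a certain ratio the rank stops growing here, because the remaining singular values are noise far below the cutoff.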

Based on my testing, it is better to create a LoRA in two steps: use the highest rank possible to get the most out of it when creating the LoRA, then resize it to a much smaller size with minimal loss. This provides much better results than trying to train a small-rank LoRA in one step.

Thanks for the explanation.

- Code refactor to reuse the same function for dynamic LoRA extraction
- Remove clamp
- Fix an issue where the index goes out of bounds if sv_ratio is too high
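One way an index like this can go out of bounds: when sv_ratio is very large, the cutoff s[0] / sv_ratio falls below every singular value, nothing is dropped, and code that then reads s[rank] (the first dropped value) indexes past the end. The plain-Python sketch below guards that case; `select_rank` is a hypothetical helper, and this is not necessarily how the PR fixed it.

```python
def select_rank(s, sv_ratio):
    """Hypothetical helper: (rank, first dropped singular value or None)."""
    # s is assumed sorted in descending order.
    cutoff = s[0] / sv_ratio
    rank = max(1, sum(1 for v in s if v >= cutoff))
    # If sv_ratio is huge, nothing is dropped and rank == len(s); an unguarded
    # s[rank] would raise IndexError, so check before indexing.
    first_dropped = s[rank] if rank < len(s) else None
    return rank, first_dropped


s = [3.0, 2.5, 2.0]
print(select_rank(s, 1.25))  # (2, 2.0)
print(select_rank(s, 100))   # (3, None)
```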

Modified the resize script to support different types of LoRA networks (refer to Kohaku-Blueleaf's module implementation for the structure).

- new_rank arg changed to limit the max rank of any layer
- Added logic to make sure zeroed layers do not create a large LoRA dim
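A hedged plain-Python sketch of both changes: the computed rank is capped by `new_rank`, and a layer whose largest singular value is (numerically) zero gets dim 1. Without that guard, s[0] = 0 makes the cutoff 0, every value passes, and the layer would receive the full dim. `dynamic_rank` and the `eps` tolerance are hypothetical names, not the script's code.

```python
def dynamic_rank(s, ratio, new_rank, eps=1e-7):
    """Hypothetical helper: sv_ratio dim, capped at new_rank, safe for zero layers."""
    # s is assumed sorted in descending order (largest singular value first).
    if not s or s[0] <= eps:
        # All-zero layer: the cutoff s[0] / ratio would be ~0, every value would
        # pass it, and the layer would get the full dim for no benefit.
        return 1
    cutoff = s[0] / ratio
    rank = sum(1 for v in s if v >= cutoff)
    # new_rank is an upper bound for any single layer.
    return max(1, min(rank, new_rank))


print(dynamic_rank([0.0] * 320, 10, 128))                # 1
print(dynamic_rank([1.0] * 320, 10, 128))                # 128
print(dynamic_rank([5.0, 2.0, 1.0, 0.4, 0.1], 10, 128))  # 3
```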

Apologies for so many additional changes. I was working to make the script modular so that code could be recycled for DreamBooth extraction, but it looks like Kohaku-Blueleaf pushed an update to their repo that overlaps with that task. I'll table DreamBooth extraction for now unless there is some reason to bring it back up. The latest version should also be able to handle Conv2d layers.

@bmaltais I have not done enough testing to say whether a 2-step LoRA is the best method, but I have also seen examples of trimming a LoRA even improving quality. As a caveat, though, I would think that trimming too many ranks risks losing important information in the model. There is probably some range that is best to stay within for resizing.
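Since the latest version handles Conv2d layers, here is a hedged NumPy sketch of one common way to apply the same SVD resizing to a convolution weight. Flatten each filter so the 4-D weight becomes a matrix of shape (out, in * kh * kw), factor it, and reshape the factors into a kh x kw "down" convolution followed by a 1x1 "up" convolution. The LoCon-style layout and the helper names `conv_delta_to_lora` / `lora_to_conv_delta` are assumptions for illustration, not the script's actual code.

```python
import numpy as np


def conv_delta_to_lora(delta, rank):
    """Sketch: factor a Conv2d weight delta (out, in, kh, kw) into down/up convs."""
    out_ch, in_ch, kh, kw = delta.shape
    mat = delta.reshape(out_ch, in_ch * kh * kw)  # one row per output filter
    U, S, Vh = np.linalg.svd(mat, full_matrices=False)
    rank = min(rank, S.shape[0])
    up = (U[:, :rank] * S[:rank]).reshape(out_ch, rank, 1, 1)  # 1x1 conv
    down = Vh[:rank].reshape(rank, in_ch, kh, kw)               # kh x kw conv
    return down, up


def lora_to_conv_delta(down, up):
    """Recombine: a 1x1 conv after the kh x kw conv equals one conv with this kernel."""
    r = down.shape[0]
    up_mat = up.reshape(up.shape[0], r)
    down_mat = down.reshape(r, -1)
    return (up_mat @ down_mat).reshape(up.shape[0], *down.shape[1:])


rng = np.random.default_rng(0)
delta = rng.standard_normal((16, 8, 3, 3))
down, up = conv_delta_to_lora(delta, rank=72)  # capped at min(16, 72) = 16
print(np.allclose(lora_to_conv_delta(down, up), delta))  # True
```

At full rank the factors reproduce the weight exactly; a smaller `rank` gives the truncated approximation.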

@mgz-dev I will refer to your repo for the updated code until you are ready to update the PR, so as to keep up with the improvements. Nice work. I like that it now supports LoCon too. I have a question for you: if one uses SD1.5 as the model, what would you say is the highest rank one should use when creating a LoRA? Since the model uses 768-dimensional vectors, would rank 768 be the limit beyond which there is no return? I am asking because I think LoRA can be used as a nice form of "frozen" model training. Once the LoRA has been created at the model's full potential resolution, one simply uses your tool to reduce it down to what really constitutes the model. In my tests this is providing the best LoRA, but I wonder what the practical rank should be for a model.
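On the question of a practical ceiling: a LoRA's update for one layer is `up @ down`, and its rank can never exceed min(out_features, in_features), whatever rank is requested. The NumPy sketch below checks this for an illustrative layer mapping 768-dim text embeddings to 320 channels (as in an SD1.5 cross-attention key projection); the random weights are stand-ins, not a trained LoRA. So the ceiling is per layer, and layers with 320 channels cap at 320. This bound says nothing about where diminishing returns start.

```python
import numpy as np

rng = np.random.default_rng(0)
in_features, out_features, r = 768, 320, 1024  # r deliberately oversized
down = rng.standard_normal((r, in_features))
up = rng.standard_normal((out_features, r))
delta_w = up @ down  # (320, 768) weight update

# rank(up @ down) <= min(out_features, in_features, r), so ranks above 320
# cannot add anything for this particular layer shape.
print(np.linalg.matrix_rank(delta_w))  # 320
```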

Thank you all! I am not familiar with the mathematical theory of LoRA and SVD, so this is very helpful. The support for dynamic rank in … I will test and merge this today after work.

I had the idea after looking at KohakuBlueleaf's implementation, which uses an SV threshold to dynamically determine layer dimension when extracting DreamBooth models to LoRA.

This uses a maximum SV ratio instead of a threshold: all singular values smaller than the largest singular value divided by the ratio are dropped. For example, if the ratio is 10 and the largest singular value is 5, all singular values below 0.5 are removed, so the dim is calculated dynamically per layer.
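The rule above can be written in a few lines of plain Python. This is a sketch, not the script's code: `dim_from_sv_ratio` and the layer names are hypothetical, and the spectra are made up to show that the same ratio yields a different dim for each layer.

```python
def dim_from_sv_ratio(s, ratio):
    """Keep singular values >= max(s) / ratio; always keep at least one."""
    cutoff = max(s) / ratio
    return max(1, sum(1 for v in s if v >= cutoff))


# Made-up spectra for three hypothetical layers.
layers = {
    "layer_a": [5.0, 2.0, 1.0, 0.4, 0.1],   # cutoff 0.5 -> dim 3
    "layer_b": [5.0, 4.5, 4.0, 3.5, 3.0],   # flat spectrum -> dim 5
    "layer_c": [5.0, 0.3, 0.2, 0.1, 0.05],  # one dominant direction -> dim 1
}
dims = {name: dim_from_sv_ratio(s, 10) for name, s in layers.items()}
print(dims)  # {'layer_a': 3, 'layer_b': 5, 'layer_c': 1}
```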

LoRAs resized this way currently do not work with sd-webui-additional-networks, which gives size mismatch warnings, but they do work with the built-in LoRA support in webui.

The same logic can be applied to extract_lora_from_models.
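A minimal NumPy sketch of what applying this to extraction could look like for a single Linear layer: take the difference between the fine-tuned and base weights, run SVD, pick the dim with the sv_ratio rule (capped by a maximum rank), and split the result into up and down factors. `extract_lora` and its parameters are hypothetical; the synthetic weights have a known rank-6 update so the recovered dim can be checked.

```python
import numpy as np


def extract_lora(w_tuned, w_base, sv_ratio, max_rank):
    """Sketch: dynamic-rank LoRA factors for one Linear layer's weight delta."""
    delta = w_tuned - w_base
    U, S, Vh = np.linalg.svd(delta, full_matrices=False)
    rank = int(np.sum(S >= S[0] / sv_ratio))  # sv_ratio cut, per layer
    rank = max(1, min(rank, max_rank))
    up = U[:, :rank] * S[:rank]  # (out, rank)
    down = Vh[:rank]             # (rank, in)
    return up, down


rng = np.random.default_rng(0)
w_base = rng.standard_normal((64, 128))
true_update = rng.standard_normal((64, 6)) @ rng.standard_normal((6, 128))
w_tuned = w_base + true_update
up, down = extract_lora(w_tuned, w_base, sv_ratio=1000, max_rank=32)
print(up.shape[1], np.allclose(up @ down, true_update))  # 6 True
```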